The Evolution of Multi-Agent Systems: From Early Neural Networks to Modern Distributed Learning (Methodological)

In this article, we'll explore the evolution of MAS from a methodological or approach-based perspective.

This is the second article in a two-part series. You can find the first article here.

In the previous article, we discussed the evolution of Multi-Agent Systems (MAS) from early neural networks to more advanced models from an algorithmic perspective. In this article, we'll explore the evolution of MAS from a methodological or approach-based perspective.

Specifically, we'll explore how early MAS relied heavily on a centralized approach, where the learning process for multiple agents took place on a single centralized server. Then, we'll discuss how this paradigm has shifted towards a more distributed or decentralized approach, which addresses various challenges ranging from scalability issues to data privacy concerns. Let's begin by exploring the centralized approach in MAS without further ado.

Centralization in MAS

Aside from the non-stationarity problem, scalability and reliance on centralized operation are the main challenges in developing a sophisticated multi-agent system.

In traditional multi-agent systems, operations were mostly conducted in a centralized manner. The key idea behind this centralization is the presence of a central entity—which could be a server, algorithm, or coordinator—that manages the agents. This central entity typically has access to global information about the entire system, such as the state of all agents and the environment. It also handles coordination between agents to ensure they work together harmoniously, thus reducing the risk of conflicts, inefficiencies, or redundant tasks.

Below are examples of centralized MAS:

Traffic Management Systems: In smart city applications, a centralized control system might oversee all traffic lights in a city. The traffic lights (agents) communicate their current states, and the central controller optimizes the traffic flow across the entire city.

Warehouse Robots: In a centralized warehouse system, a server may assign tasks like item retrieval to each robot, coordinating their movements and ensuring they don't collide or interfere.

Military Drones: A central command center might control a fleet of drones, assigning each drone specific targets or surveillance areas and coordinating its actions to maximize mission success.
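To make the role of the central entity concrete, here is a minimal sketch of the warehouse example. It assumes a grid layout, Manhattan distance, and a greedy nearest-robot rule; the `Robot` and `CentralCoordinator` names are hypothetical and not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    position: tuple  # (x, y) grid cell, an assumed layout
    busy: bool = False


def manhattan(a, b):
    """Grid distance between two (x, y) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


class CentralCoordinator:
    """Hypothetical central server that sees every robot and every task."""

    def __init__(self, robots):
        self.robots = robots

    def assign(self, tasks):
        """Greedily give each task location to the nearest idle robot."""
        assignment = {}
        for task in tasks:
            idle = [r for r in self.robots if not r.busy]
            if not idle:
                break  # more tasks than robots: the rest must wait
            nearest = min(idle, key=lambda r: manhattan(r.position, task))
            nearest.busy = True
            assignment[nearest.name] = task
        return assignment


robots = [Robot("r1", (0, 0)), Robot("r2", (5, 5)), Robot("r3", (9, 0))]
coordinator = CentralCoordinator(robots)
plan = coordinator.assign([(4, 4), (8, 1)])
print(plan)
```

Note that the robots themselves contain no decision logic. All coordination lives in the coordinator, which needs the global state of every robot to work.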

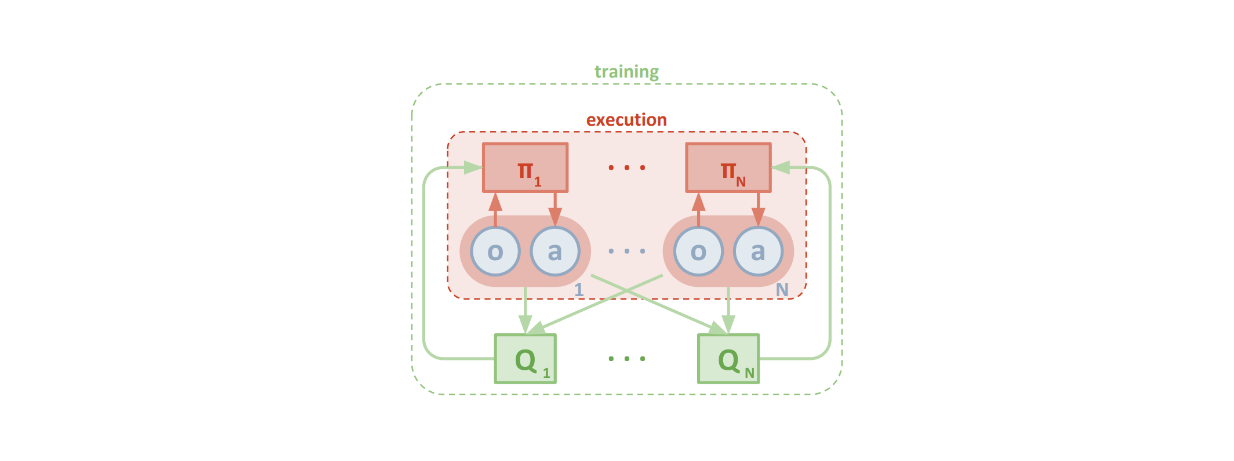

Figure: Multi-Agent DDPG Algorithm with decentralized actor, centralized critic architecture. Source.

Applying a centralized operation process has several advantages. The main advantage is optimized decision-making, as the central entity can access all the information. It also allows for easy and simple coordination between agents, reducing the chance of conflicts. For the agents themselves, relying on the central entity means they don't need complex decision-making capabilities.

However, the main disadvantage of using a centralized operation process is the scalability issue. As the number of agents increases, it becomes harder for the central entity to process all the information and make real-time decisions, leading to potential bottlenecks.
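One rough way to see the bottleneck is to count joint actions. The sketch below assumes the central entity must choose one action for every agent at once, and that each agent has the same number of discrete actions; both are simplifying assumptions made only for illustration.

```python
def joint_action_count(actions_per_agent: int, num_agents: int) -> int:
    """Number of joint actions a central controller would have to consider."""
    return actions_per_agent ** num_agents


# With 4 actions per agent (an assumed value), the joint space grows exponentially.
for n in (2, 5, 10, 20):
    print(n, joint_action_count(4, n))
```

Under these assumptions, going from 10 to 20 agents multiplies the number of joint actions by roughly a million, which is why a single decision-maker struggles to keep up in real time.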

Additionally, the application of centralized operations becomes impractical when we want to train several agents simultaneously on independent data that can't be shared with the central entity due to privacy concerns. This is where the decentralized approach comes in.

The Concept of Decentralization

Decentralization is a concept that originates from various disciplines, including political science, economics, computer science, and biology. It generally refers to the distribution of authority, control, or decision-making across multiple entities or nodes rather than concentrating it on a single central authority.

In political science, for example, many countries have adopted a federal system where power is divided between central and regional governments. This concept is also highly relevant in biology, where we observe certain animals behaving in a decentralized manner. Insects like ants operate in decentralized colonies where no single entity controls the entire group. Instead, they rely on local interactions to find food.

Decentralization takes many forms in our daily lives and has proven effective. This has led to its adoption in technology, with the most famous examples being blockchain and cryptocurrency.

The core idea of blockchain originates from the concept of decentralization. It creates a distributed ledger where participants or nodes verify transactions independently without the need for a trusted central authority like a bank or government.

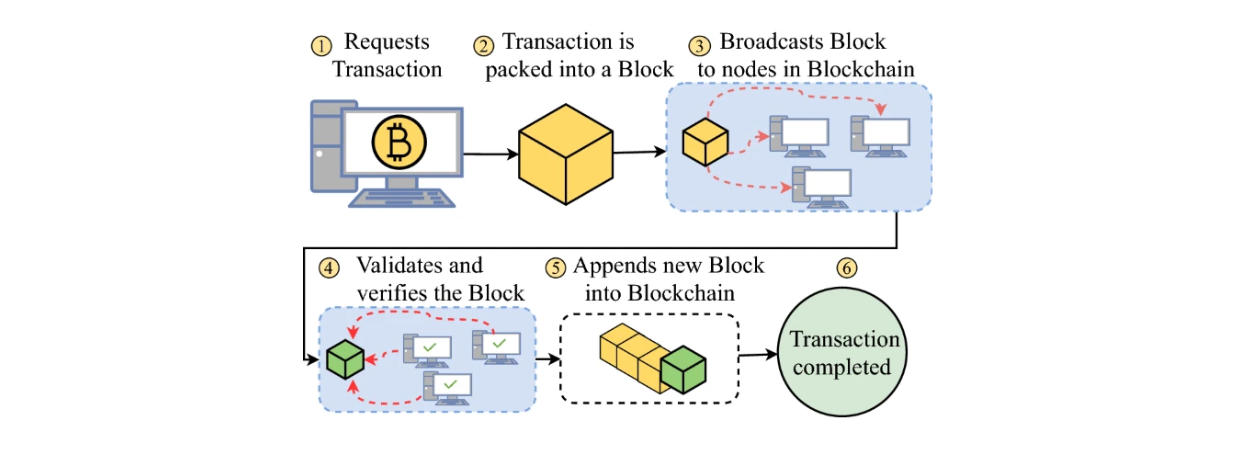

Figure: Crypto transaction workflow with blockchain technology. Source.

When we send Bitcoin to another user, the transaction is broadcast to the Bitcoin network, where it waits to be included in a block. Next, participants called miners check the validity of the transaction, include it in a block, and compete to solve a cryptographic puzzle to add that block to the blockchain. The transaction is confirmed once the block is added and becomes part of the immutable blockchain ledger. The main benefit of this approach is that it reduces the risks associated with security breaches and fraudulent transactions, because no single party can alter the ledger on its own.
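The "cryptographic puzzle" can be illustrated with a toy proof-of-work loop. This is a simplified sketch, not Bitcoin's actual procedure: Bitcoin hashes a binary block header with double SHA-256 and compares the result against a numeric target, whereas the version below hashes a string once and looks for leading zero hex digits. The block data and difficulty are made-up values.

```python
import hashlib


def block_hash(block_data: str, nonce: int) -> str:
    """Hash the block contents together with a candidate nonce."""
    return hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int):
    """Try nonces in order until the hash starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = block_hash(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Any node can check a claimed solution with a single hash."""
    return block_hash(block_data, nonce).startswith("0" * difficulty)


nonce, digest = mine("alice->bob:1BTC", difficulty=4)
print(nonce, digest, verify("alice->bob:1BTC", nonce, 4))
```

The asymmetry is the point: finding the nonce takes many attempts, while verifying it takes one hash, so every node can check the work independently without trusting a central authority.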

Following the success of implementing the decentralized concept in blockchain technology, we have seen this concept also adapted in AI, particularly in multi-agent settings.

Decentralization in Modern Multi-Agent Systems

In the context of multi-agent systems, decentralization means that no central entity manages and guides the actions of all agents. Each agent in a decentralized MAS has the autonomy to make its own decisions based on its local knowledge, environment, and interactions with other agents.

The decentralized approach mitigates the scalability issue commonly found in multi-agent training. With no central controller, there is no single bottleneck, and adding more agents to the system doesn't significantly increase the load on any one component. The system is also less vulnerable to failures: if one agent fails, the others can continue operating.

However, having no central entity doesn't mean that agents can't collaborate with each other. For example, an agent can coordinate with other agents through shared goals, but this happens in a distributed way without a central command. This idea underpins edge AI and federated learning, which we'll discuss in the next two sections.
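As a minimal sketch of coordination without a central command, consider agents that each hold one local reading and want to agree on the group average. The example uses distributed averaging (a simple form of gossip consensus) and assumes the agents are arranged in a ring and update in synchronous rounds; the topology and the readings are illustrative choices, not from the article.

```python
def gossip_round(values):
    """One synchronous round: each agent averages itself with its two ring neighbors."""
    n = len(values)
    return [
        (values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3
        for i in range(n)
    ]


readings = [10.0, 20.0, 30.0, 40.0, 50.0]  # one local measurement per agent
target = sum(readings) / len(readings)  # computed here only to check the result

values = readings
for _ in range(100):
    values = gossip_round(values)

print(values, target)
```

No agent ever sees all the readings, yet every agent's value approaches the global average, because each round only mixes information between neighbors and preserves the overall sum.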

The Concept of Edge AI

When we talk about Edge AI, we typically refer to the practice of running machine learning models directly on small devices connected to a network, such as smartphones, IoT devices, sensors, and embedded systems, rather than relying on centralized cloud servers.

As you might know, when we want to use a trained machine-learning model for inference, we usually need to send our request and data to an API. We then receive the response from the model in a split second. However, the decentralization approach opens up the possibility of using trained machine learning models locally on edge devices without sending requests and data via API to a central server. For example, we can directly embed an AI trained to detect objects in images into a small camera, enabling real-time, on-device processing.
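The on-device case can be sketched as follows. The example assumes a tiny logistic-regression classifier whose weights were trained elsewhere and copied to the device; the `OnDeviceModel` class, the weights, and the feature values are all hypothetical.

```python
import math


class OnDeviceModel:
    """Hypothetical tiny classifier whose weights live on the device."""

    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def predict(self, features):
        """Run inference locally: no request or data leaves the device."""
        score = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        probability = 1.0 / (1.0 + math.exp(-score))
        return probability >= 0.5, probability


# Weights are assumed to have been trained beforehand and shipped with the device.
model = OnDeviceModel(weights=[0.8, -0.4], bias=-0.1)
detected, confidence = model.predict([1.0, 0.5])
print(detected, round(confidence, 4))
```

The call to `predict` involves no network access at all, which is the property that distinguishes this setup from sending the same features to a remote API.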

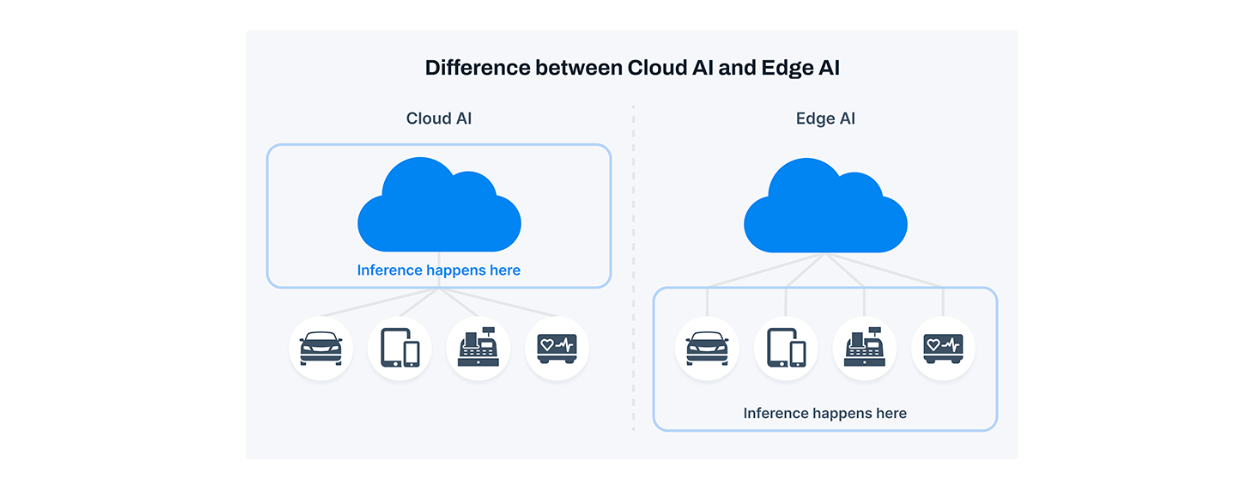

Figure: The difference between Cloud AI (centralized) and Edge AI (decentralized). Source.

This approach has several advantages. The first is related to latency. By processing data on the device, Edge AI avoids the network round trip to a remote server, so decisions can be made with much lower latency. This is particularly important when delays could lead to critical failures, such as in autonomous driving or medical devices.

Additionally, with millions of IoT devices in operation (sensors, cameras, phones), scaling a centralized AI system would be extremely costly and complex. By distributing AI processing across edge devices, Edge AI systems can scale more easily as more devices are added without overwhelming a central system.

Since our AI is directly embedded in our devices, it also means that we don't need to share our local data with a centralized server or cloud for processing. This is crucial for minimizing the risk of data breaches or misuse. Sensitive information like personal data, health records, or location data doesn't need to leave the device, significantly enhancing privacy and compliance with regulations like GDPR.

The Concept of Federated Learning

In the previous section, we talked about how the decentralized approach to AI helps enhance data privacy and compliance, particularly for sensitive data that we don't want to share with third parties. We also briefly discussed how several agents can collaborate through shared goals without a central entity.

The combination of these two perspectives leads to the introduction of federated learning. In a nutshell, federated learning focuses on how we train a machine learning model. In this approach, the training process is done collaboratively across different devices while keeping the data decentralized and on the devices themselves.

Rather than collecting all the data in a central server or cloud for training, federated learning allows devices to process their data locally, train their portion of the model, and share only the model updates, such as learned parameters, with a central server.

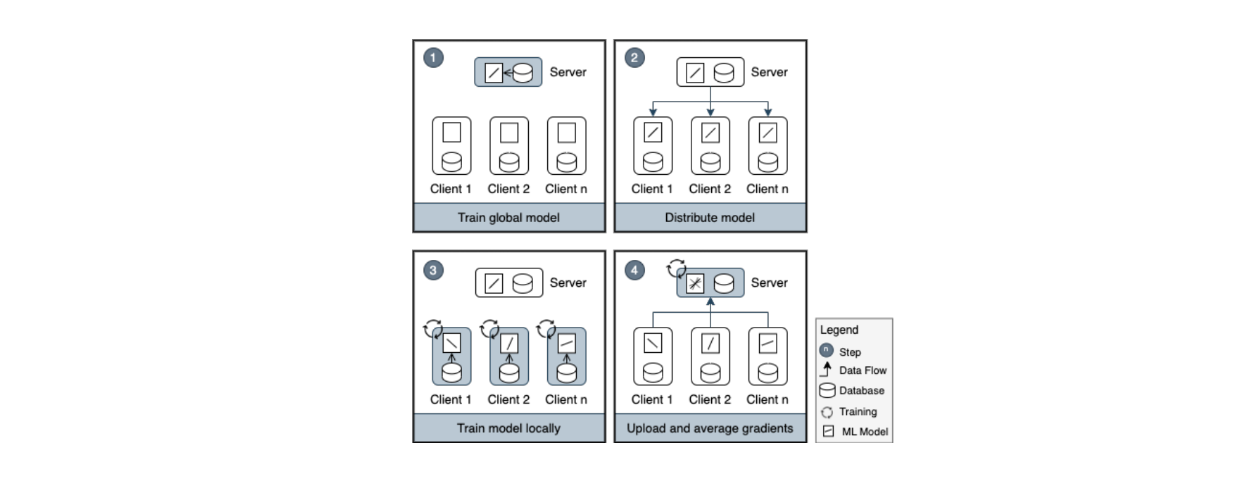

These are the complete steps of training a model with federated learning:

1. The server or cloud provides an initial global model to all devices.
2. Each device trains this model on its local data. For example, we might want to train the model on image data saved in our smartphones. The device then creates local updates to the model's parameters.
3. Devices send only the local model updates, not the data, back to the server.
4. The server aggregates these updates to improve the global model.
5. The updated global model is sent back to the devices for further local training, and the cycle continues for a specific number of iterations.
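The training loop described above can be sketched in a few lines. This is a simplified illustration in the spirit of federated averaging, assuming a one-parameter model `y = w * x`, plain gradient descent on each device, and sample-count-weighted averaging on the server. The datasets, learning rate, and function names are made up for the example.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Train y = w * x on one device's private data with plain gradient descent."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, device_datasets):
    """Broadcast the global weight, train locally, then aggregate on the server."""
    local_ws = [local_update(global_w, data) for data in device_datasets]
    total = sum(len(data) for data in device_datasets)
    # Weighted average: devices with more samples count for more.
    return sum(w * len(d) for w, d in zip(local_ws, device_datasets)) / total


# Three devices, each holding private samples of y = 3x (hypothetical data).
devices = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

global_w = 0.0
for _ in range(20):  # repeat the cycle for a fixed number of rounds
    global_w = federated_round(global_w, devices)

print(global_w)
```

Only the scalar weight travels between the devices and the server; the `(x, y)` pairs never leave the device that holds them. Real systems typically add client sampling, secure aggregation, and much larger models on top of this basic loop.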

Figure: One iteration of the Federated Machine Learning process. Source.

So, how does federated learning benefit us? Since raw data never leaves the devices, data privacy is significantly enhanced. This is particularly helpful in sensitive areas like healthcare, finance, and mobile apps, where personal or sensitive data needs protection.

Additionally, the trained model will be a better fit for your use case, as it's been trained on your device's local data. For example, an AI model embedded in your smartphone can tailor its predictive text model based on your unique typing patterns while contributing to a broader global model that benefits all users.

Applications and Future Outlook of Decentralized MAS

Intelligent MAS today greatly benefits from decentralized approaches, Edge AI, and federated learning, which we have discussed in the previous sections. Here are some examples of applications where these technologies come together:

Smart Transportation and Autonomous Vehicles: In autonomous vehicles, individual vehicles are agents that make their own decisions based on their environment, such as detecting obstacles and following traffic rules. Each vehicle processes local sensor data to make real-time decisions without relying on a cloud server.

Smart Cities: In a smart city, infrastructures, such as traffic lights, energy sensors, and public transport vehicles, can be considered agents. These agents operate independently and make decisions based on local conditions. For example, smart traffic lights can adjust their timing based on real-time traffic density, and smart energy meters can adjust power usage in buildings.

Healthcare Systems: In healthcare systems, medical devices, such as wearable devices, smart monitoring systems, and medical diagnostic devices, can be considered agents. In this setting, agents can track patient health in real time or alert doctors based on local patient data.

Smart Manufacturing: In manufacturing, we can think of IoT devices such as sensors or industrial robots as agents. They can communicate and collaborate to optimize production processes, manage workloads, and prevent downtime by analyzing local production data.

Smart Farming: In the agriculture sector, we can think of various devices as agents, such as drones, irrigation systems, or robots. Multiple autonomous agents can collaboratively monitor different parts of a farm, collect data, and make localized decisions, such as adjusting irrigation based on soil moisture or targeting pest areas with pesticides.

Although it's still in its early days, the future outlook for decentralized approaches in MAS is promising, especially as the demand for scalable, privacy-preserving, and resilient AI systems continues to grow.

This approach also holds the potential for AI democratization, where smaller organizations, startups, or even individuals can contribute to developing state-of-the-art models. Of course, this would be preferable to the current centralized AI systems, which are controlled by tech giants with access to huge amounts of data and computational power.

Embedded Vector Databases for Decentralized MAS

An embedded vector database, such as the open-source Milvus, can be very useful for decentralized multi-agent systems and edge AI. Placing a vector database directly on edge devices or local agents allows efficient storage and retrieval of high-dimensional data representations, such as embeddings from machine learning models, on the device itself. In smart city scenarios, for example, an embedded vector database could allow traffic management agents to quickly search and compare complex traffic patterns without going to a central server. This reduces latency, improves real-time decision-making, and fits the privacy-preserving nature of edge AI and federated learning by keeping data local. It also scales well in multi-agent systems, as each agent can have its own optimized database for fast information processing and collaboration with other agents, all within the decentralized framework.
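To show what local similarity search looks like for the traffic-pattern example, here is a minimal in-memory stand-in. It does not use the Milvus API; it is a brute-force cosine-similarity search written in plain Python, and the `LocalVectorStore` class, labels, and three-dimensional vectors are hypothetical. A real embedded vector database would use an index rather than scanning every vector.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class LocalVectorStore:
    """Hypothetical in-memory stand-in for an embedded vector database."""

    def __init__(self):
        self._items = []  # list of (label, vector) pairs kept on the device

    def insert(self, label, vector):
        self._items.append((label, vector))

    def search(self, query, top_k=1):
        """Return the top_k labels ranked by cosine similarity to the query."""
        scored = [(cosine(query, vec), label) for label, vec in self._items]
        scored.sort(reverse=True)
        return [label for _, label in scored[:top_k]]


store = LocalVectorStore()
store.insert("rush_hour", [0.9, 0.8, 0.1])
store.insert("night_quiet", [0.1, 0.1, 0.9])
store.insert("accident_jam", [1.0, 0.2, 0.0])

nearest = store.search([0.85, 0.75, 0.2], top_k=1)
print(nearest)
```

Because both the stored vectors and the query stay on the agent, the lookup needs no network call, which matches the latency and privacy arguments made above.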

Conclusion

In this article, we have explored the evolution of multi-agent systems from a methodological perspective. The transition from centralized to decentralized approaches addresses many challenges in developing multi-agent learning processes. Centralized MAS, while effective in its time, faced limitations in scalability and data privacy, making it less viable for modern applications that involve many agents and sensitive data.

The decentralization of MAS not only addresses these challenges but also harnesses the power of autonomy among agents. This approach allows for more efficient, real-time decision-making without the bottlenecks associated with centralized control. Technologies like Edge AI and federated learning are two examples of the practical applications of this decentralized approach. These technologies provide significant advantages in terms of data security, reduced latency, and solutions that respect user privacy.

If you’re also interested in the algorithmic evolution of multi-agent systems, check out my first article for more information.

Further Reading

How Semiconductor Manufacturing Uses Domain-Specific Models and Agentic AI for Problem-solving

Optimizing Multi-Agent Systems with Mistral Large, Nemo, and Llama-agents

What is Mixture of Experts (MoE)? How it Works and Use Cases

Efficient Memory Management for Large Language Model Serving with PagedAttention

- Centralization in MAS

- The Concept of Decentralization

- Decentralization in Modern Multi-Agent Systems

- Applications and Future Outlook of Decentralized MAS

- Embedded Vector Databases for Decentralized MAS

- Conclusion

- Further Reading

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Demystifying Color Histograms: A Guide to Image Processing and Analysis

Mastering color histograms is indispensable for anyone involved in image processing and analysis. By understanding the nuances of color distributions and leveraging advanced techniques, practitioners can unlock the full potential of color histograms in various imaging projects and research endeavors.

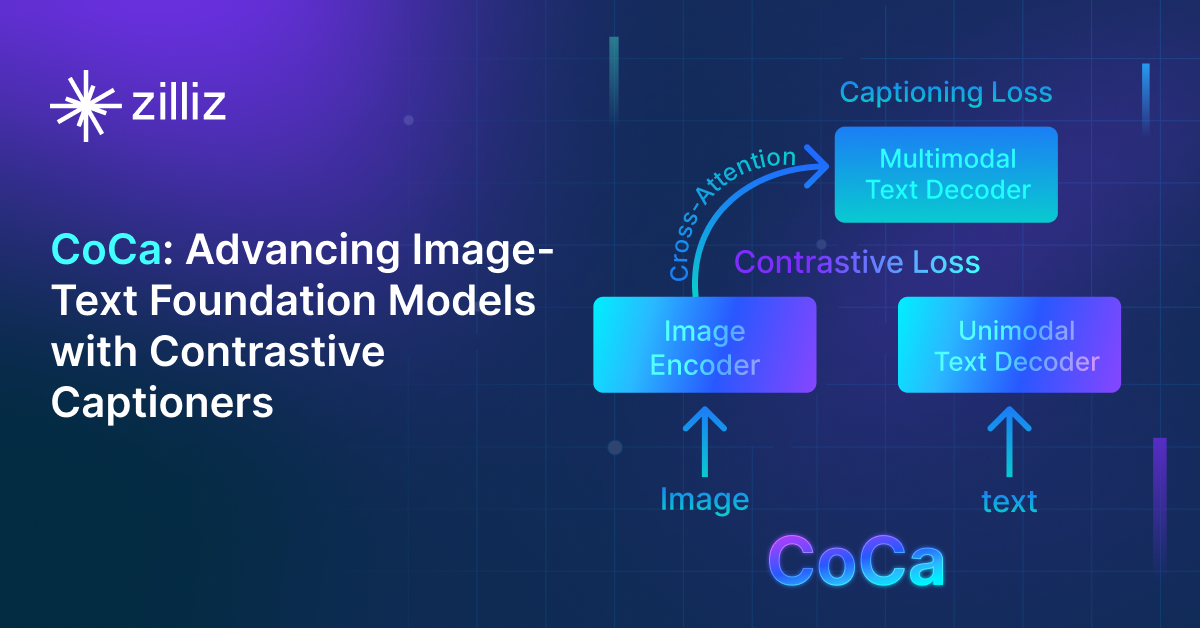

Understanding CoCa: Advancing Image-Text Foundation Models with Contrastive Captioners

Contrastive Captioners (CoCa) is an AI model developed by Microsoft that is designed to bridge the capabilities of language models and vision models.

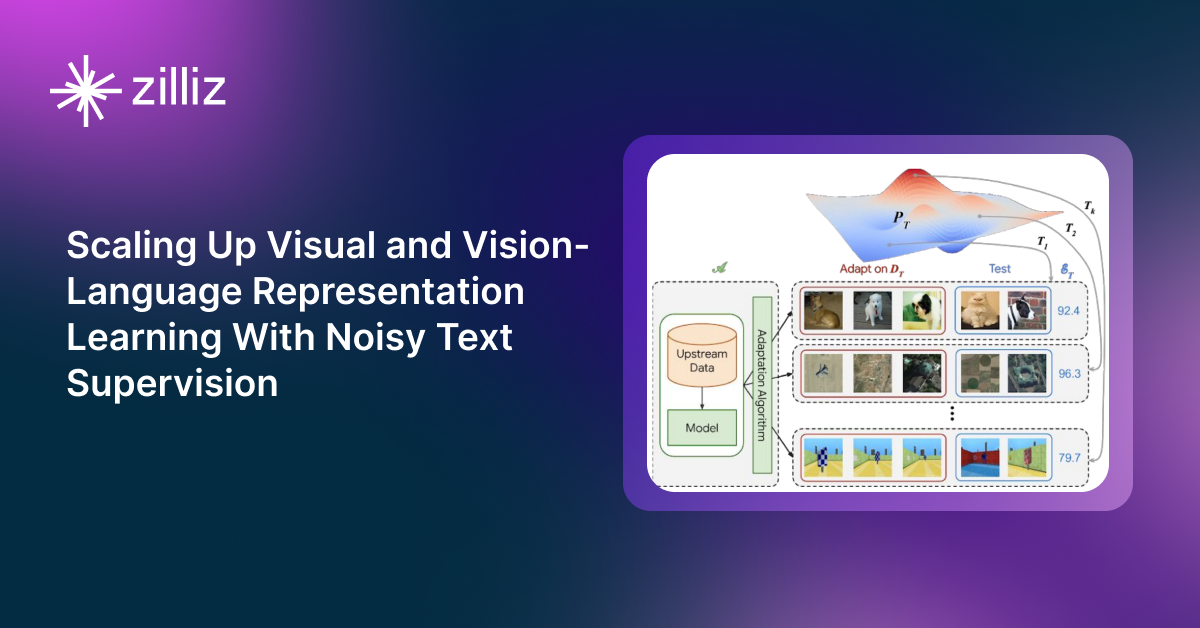

ALIGN Explained: Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision

ALIGN model is designed to learn visual and language representations from noisy image-alt-text pairs.