Nemo Guardrails: Elevating AI Safety and Reliability

In this article, we will provide an in-depth explanation of what Nemo Guardrails are, its practical applications, along with its integration.

Read the entire series

- Cross-Entropy Loss: Unraveling its Role in Machine Learning

- Batch vs. Layer Normalization - Unlocking Efficiency in Neural Networks

- Empowering AI and Machine Learning with Vector Databases

- Langchain Tools: Revolutionizing AI Development with Advanced Toolsets

- Vector Databases: Redefining the Future of Search Technology

- Local Sensitivity Hashing (L.S.H.): A Comprehensive Guide

- Optimizing AI: A Guide to Stable Diffusion and Efficient Caching Strategies

- Nemo Guardrails: Elevating AI Safety and Reliability

- Data Modeling Techniques Optimized for Vector Databases

- Demystifying Color Histograms: A Guide to Image Processing and Analysis

- Exploring BGE-M3: The Future of Information Retrieval with Milvus

- Mastering BM25: A Deep Dive into the Algorithm and Its Application in Milvus

- TF-IDF - Understanding Term Frequency-Inverse Document Frequency in NLP

- Understanding Regularization in Neural Networks

- A Beginner's Guide to Understanding Vision Transformers (ViT)

- Understanding DETR: End-to-end Object Detection with Transformers

- Vector Database vs Graph Database

- What is Computer Vision?

- Deep Residual Learning for Image Recognition

- Decoding Transformer Models: A Study of Their Architecture and Underlying Principles

- What is Object Detection? A Comprehensive Guide

- The Evolution of Multi-Agent Systems: From Early Neural Networks to Modern Distributed Learning (Algorithmic)

- The Evolution of Multi-Agent Systems: From Early Neural Networks to Modern Distributed Learning (Methodological)

- Understanding CoCa: Advancing Image-Text Foundation Models with Contrastive Captioners

- Florence: An Advanced Foundation Model for Computer Vision by Microsoft

- The Potential Transformer Replacement: Mamba

- ALIGN Explained: Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision

Introduction

The importance of AI safety and reliability is more significant than ever as organizations increasingly rely on AI technologies for critical operations. Ensuring that AI systems are accurate, dependable, and robust is not only a matter of ethical responsibility but also essential for maintaining trust and credibility in the marketplace. As AI becomes more deeply integrated into various sectors, prioritizing safety is crucial to prevent costly errors, avoid unintended consequences, and protect against potential attacks that could exploit vulnerabilities in these systems. The stakes are high, making it imperative to build AI systems that the public and industry can confidently rely on.

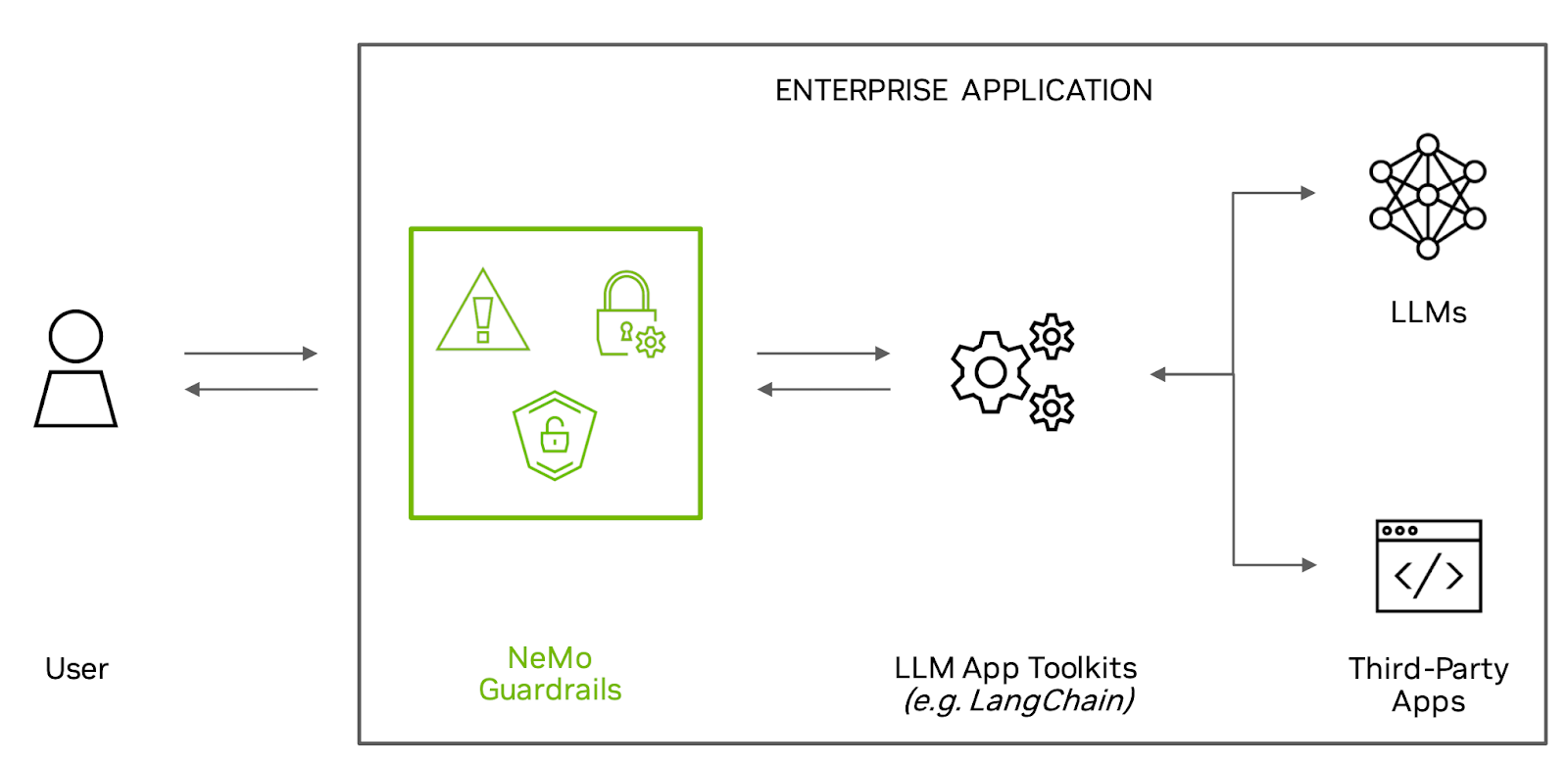

In response to this growing need, NVIDIA has introduced NeMo Guardrails, an open-source toolkit designed to provide the necessary tools to ensure that smart applications utilizing large language models (LLMs) are not only accurate but also relevant, appropriate, and secure. NeMo Guardrails empowers businesses to enhance the safety of AI-driven text generation applications, offering a comprehensive package that includes all the code, examples, and documentation needed to implement these safeguards effectively. By using this toolkit, organizations can better align their AI systems with industry best practices, ensuring that their AI solutions meet high standards of reliability and safety.

As AI continues to evolve and its applications expand, the role of safety measures like those provided by NeMo Guardrails will only become more critical. These tools help organizations stay ahead of potential risks, reinforcing the integrity of AI systems and contributing to the broader effort of building trustworthy AI that can be safely deployed across various domains.

dalle.jpeg

dalle.jpeg

Understanding Nemo Guardrails

Understanding Nemo Guardrails

Image source

Understanding Nemo Guardrails

Image source

AI's automated text generation can present several potential risks and inaccuracies, making it essential to have mechanisms in place to keep the generated text on course. This is where the concept of "guardrails" becomes crucial—essentially acting as barriers that prevent AI from producing content that strays off track. Enter NeMo Guardrails, a toolkit designed specifically to provide these much-needed safeguards.

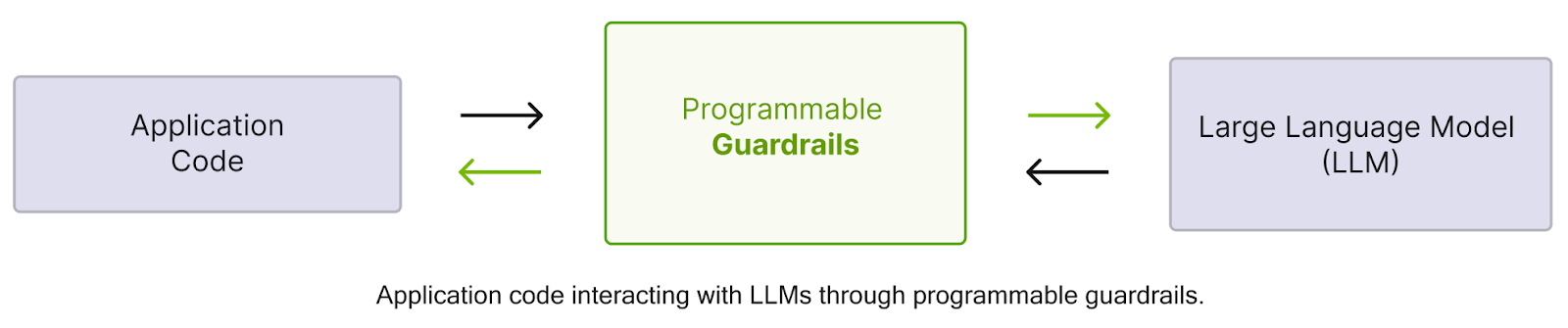

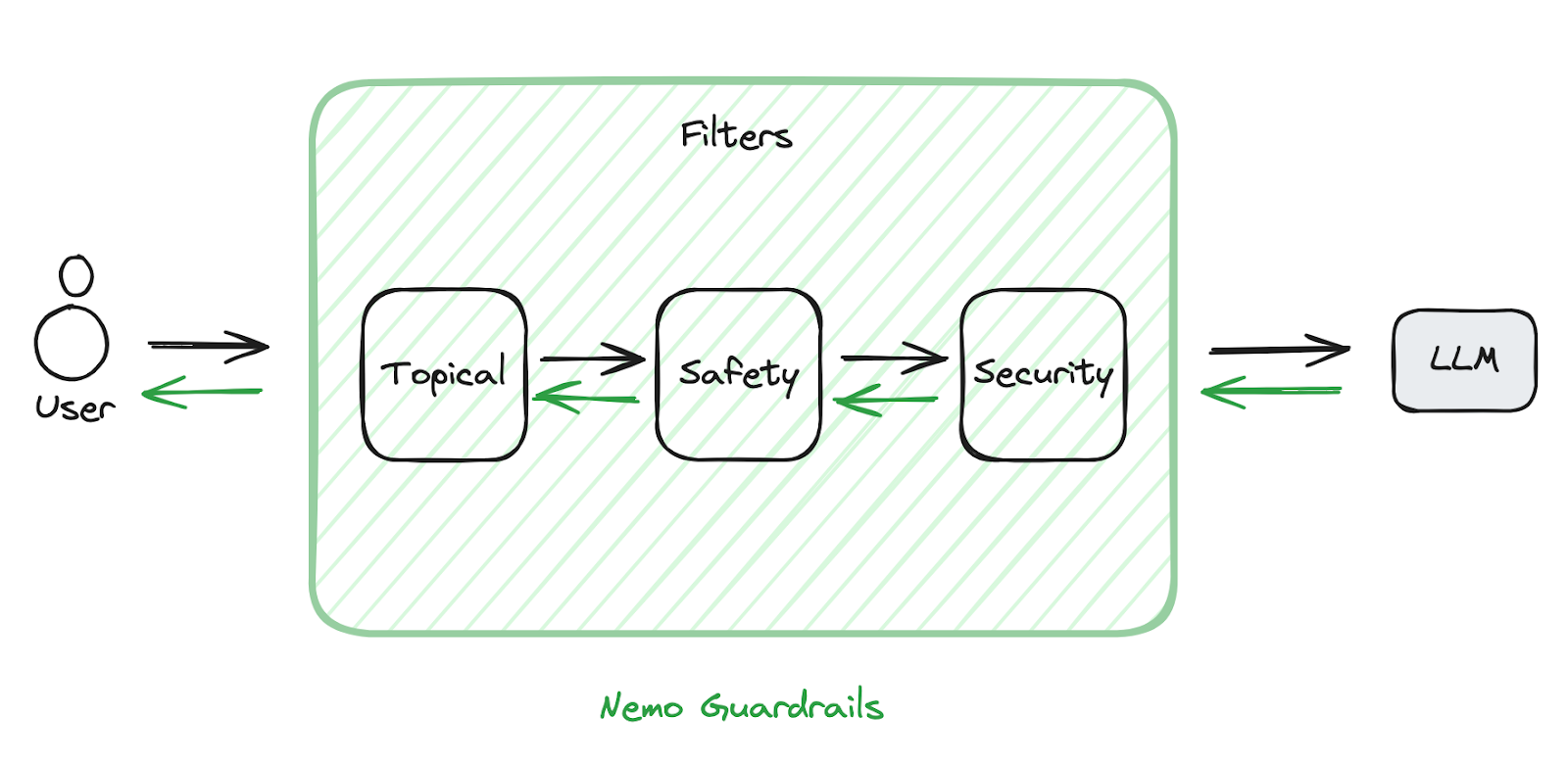

In programming, guardrails are programmable rules or constraints that serve as intermediaries between the application code (or the user) and the large language model (LLM). These guardrails monitor, influence, and control the interactions users have with AI-driven chatbots, ensuring that the generated content remains within acceptable boundaries. NeMo Guardrails offers robust support for three main types of guardrails: Topical guardrails, which keep conversations relevant to the desired subjects; Safety guardrails, which help prevent the AI from producing harmful or inappropriate content; and Security guardrails, which protect against potential misuse or exploitation of the AI system.

By implementing NeMo Guardrails, developers can more effectively manage and guide AI interactions, reducing the likelihood of errors and improving the overall safety and reliability of AI-driven applications.

Nemo Guardrails

Nemo Guardrails

Topical guardrails

Let's begin with Topical guardrails, which are designed to keep the AI's responses aligned with the user's query, ensuring that the generated text stays on-topic and relevant. These guardrails help prevent the AI from veering off into unrelated subjects, thereby maintaining the focus on the user's needs.

Safety guardrails

Next, we have Safety guardrails, which are equipped with built-in filters and monitors to ensure that all generated content meets predefined ethical standards. These guardrails work to eliminate biases, offensive language, or inappropriate content, ensuring that the AI's output is both respectful and responsible.

Security guardrails

Lastly, Security guardrails are crucial for protecting AI models from potential threats, such as data poisoning or model inversion attacks. By securing sensitive data and safeguarding the AI system from malicious activities, these guardrails help maintain the integrity of the AI model and build user trust.

In addition to these core features, NeMo Guardrails offers comprehensive documentation and numerous practical examples. This makes it easier for developers to understand how to implement and customize AI safety features according to their specific needs, enhancing the overall reliability and security of AI-driven applications.

Let’s now look at an example of how to install, implement, and use Nemo Guardrails.

Step 1: Install

pip install nemoguardrails

Step 2: Create a new guardrails configuration

Every guardrails’ configuration must be stored in a folder. The standard folder structure is as follows:

.

├── config

│ ├── actions.py

│ ├── config.py

│ ├── config.yml

│ ├── rails.co

│ ├── ...

Create a folder, such as config, for your configuration:

mkdir config

The config.yml contains all the general configuration options (e.g., LLM models, active rails, custom configuration data), the config.py contains any custom initialization code and the actions.py contains any custom Python actions.

Here’s an example of config.yml:

# config.yml

models:

- type: main

engine: openai

model: gpt-3.5-turbo-instruct

rails:

# Input rails are invoked when new input from the user is received.

input:

flows:

- check jailbreak

- mask sensitive data on input

# Output rails are triggered after a bot message has been generated.

output:

flows:

- self check facts

- self check hallucination

- activefence moderation

- gotitai rag truthcheck

config:

# Configure the types of entities that should be masked on user input.

sensitive_data_detection:

input:

entities:

- PERSON

- EMAIL_ADDRESS

This guardrail configuration specifies rules for ensuring the safety and reliability of an AI model, particularly focused on input and output interactions. Let's break down each section starting with input rails, covering its configured flows which include, Check Jailbreak which checks for any attempts to exploit vulnerabilities in the AI model or the system it's running on, such as unauthorized access or manipulation. mask sensitive data on input which masks or hides sensitive information (e.g., personal names, email addresses) received from the user to protect privacy and security.

Next, we have output rails which have the configured flows self check facts, self check hallucination, activefence moderation, gotitai rag truthcheck. self check facts verifies the factual accuracy of the generated response by cross-referencing it with reliable sources or databases. self check hallucination checks for any hallucinatory or nonsensical content in the generated response, ensuring coherence and relevance. activefence moderation which involves moderation mechanisms provided by ActiveFence for filtering out harmful or inappropriate content from the generated response. gotItai Rag truthcheck utilizes a truth-checking service provided by GotItAI to validate the accuracy of the generated response against factual information.

Overall, this guardrail configuration aims to enforce various safety measures throughout the AI interaction process, including input validation, output verification, and protection of sensitive data, thereby enhancing the reliability and trustworthiness of the AI model.

programmable guardrails

programmable guardrails

Step 3: Load and use the guardrails configuration

Loading the guardrails configuration and creating an LLMRails instance then making the calls to the LLM using the generate/generate_async methods.

from nemoguardrails import LLMRails, RailsConfig

# Load a guardrails configuration from the specified path.

config = RailsConfig.from_path("PATH/TO/CONFIG")

rails = LLMRails(config)

completion = rails.generate(

messages=[{"role": "user", "content": "Hello world!"}]

)

Sample output

{"role": "assistant", "content": "Hi! How can I help you?"}

As you can see from the code above, implementing and using Nemo guardrails is really simple with just three steps to the process and I hope you also noticed how you can easily customize the safety features according to your specific requirements in the configuration files.

Practical Applications

Nemo Guardrails can be exceptionally useful in various real-world applications, particularly where large language models (LLMs), including RAG pipelines, must be carefully managed to avoid risks and ensure safety and compliance. A few of these applications include healthcare advice platforms, educational tools, and financial advice.

When it comes to healthcare advice platforms, there is no room for error as this could potentially cause harm to the user, so a guardrail is crucial to prevent that from happening. In educational tools, NeMo Guardrails can help ensure that content provided by LLMs is accurate, age-appropriate, and pedagogically sound. Finally, for financial advice tools, ensuring that the information provided by the machine learning model is accurate and not misleading is critical as giving unauthorized or speculative financial recommendations could have serious repercussions.

Integrating Nemo Guardrails

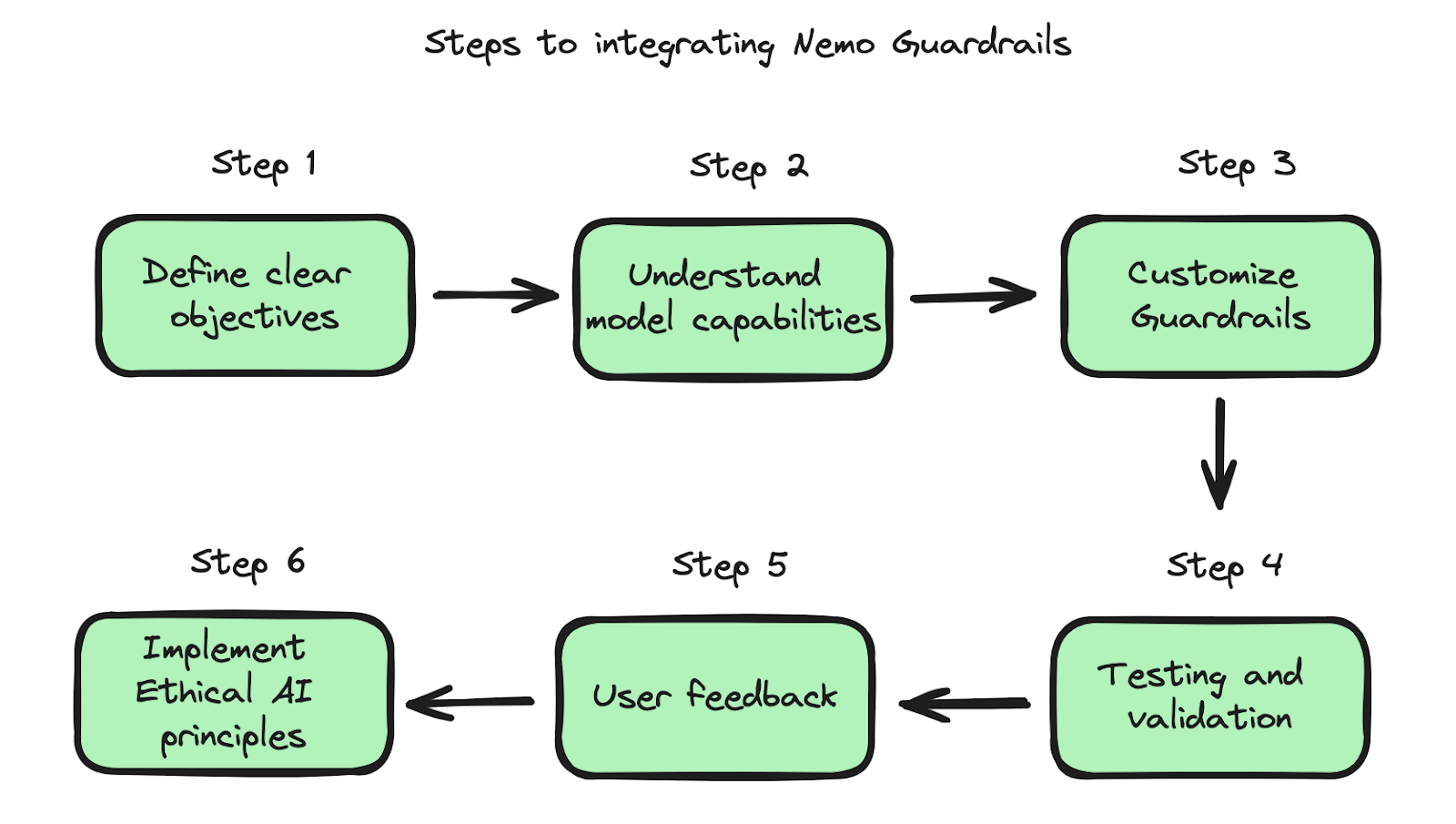

Integrating NeMo Guardrails into AI models and systems involves several best practices to ensure effective and safe usage of large language models (LLMs) across different platforms and technologies. Focusing on NVIDIA's ecosystem, which supports a wide range of AI technologies, there are a few things you should consider when incorporating Nemo Guardrails.

Guardrails Goals

First, start by clearly defining what you need the guardrails to achieve. This could include content moderation, data privacy, compliance with specific regulations, or preventing the model from generating harmful content. Then, deeply understand the capabilities and limitations of the AI models you use, as this will help you correctly configure your guardrails. Next, customize guardrails to the specific needs of your application and try to regularly test them in real-world scenarios to ensure they are working as intended.

User Feedback

Moreover, I recommend implementing mechanisms to gather user feedback on the AI's performance regarding the guardrails. You would then use this feedback to fine-tune the guardrails and improve user satisfaction and safety. Last but not least, ensure that the implementation of guardrails adheres to ethical AI principles, promoting fairness, accountability, and transparency.

Nemo Guardrails compatibility

Let's now talk about Nemo Guardrails compatibility with AI technologies and platforms. As you might know, Nemo Guardrails are particularly relevant within NVIDIA's ecosystem, which includes various tools and platforms that can leverage these guardrails. One of the great tools you can integrate with Nemo Guardrails is Riva, NVIDIA's service for deploying AI models, which allows developers to seamlessly apply guardrails to both training and inference stages. Next, we have NVIDIA's platforms, such as DGX systems and CUDA-X AI libraries, which are designed for scalable AI deployment as guardrails should be scalable and efficient to not hinder the performance benefits provided by NVIDIA hardware.

Nemo Guardrails is compatible with various deep learning frameworks such as TensorFlow, PyTorch, and others commonly used within NVIDIA's ecosystem. NeMo Guardrails should interact flawlessly with these frameworks to apply constraints or modifications at different stages of the model lifecycle.

Conclusion

I hope by now you have a clear understanding of how NeMo Guardrails has transformed AI reliability and safety features, what guardrails are, how they function, and their practical applications in AI. You also know how easily NeMo Guardrails can be implemented into your AI integrations, along with the best practices for doing so.

We've discussed how NeMo Guardrails provides a robust framework that works both efficiently and effectively to ensure that smart applications or conversational AI using large language models (LLMs) are accurate, relevant, appropriate, and secure. NeMo Guardrails empowers developers worldwide to enforce specific standards and rules that keep AI interactions safe, appropriate, and compliant with regulatory requirements. By filtering, modifying, or redirecting AI outputs, these guardrails help prevent the spread of harmful content, protect user data privacy, and uphold ethical standards, thus fostering trust and confidence in AI applications.

As AI technology continues to advance, incorporating sophisticated safety features like NeMo Guardrails will be essential in addressing the challenges these powerful tools present. Developers, researchers, and organizations must prioritize understanding and implementing these guardrails to ensure that AI systems are not only effective but also aligned with societal values, regulatory requirements, and safety standards. The ongoing integration of NeMo Guardrails into AI systems will play a critical role in the responsible and ethical deployment of AI technologies in various industries.

- Introduction

- Understanding Nemo Guardrails

- Practical Applications

- Integrating Nemo Guardrails

- Conclusion

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Cross-Entropy Loss: Unraveling its Role in Machine Learning

Cross-entropy loss is used for training classification models. It’s an easy-to-implement loss function requiring labels encoded in numeric values for accurate loss calculation.

What is Object Detection? A Comprehensive Guide

Object detection is a computer vision technique that uses neural networks to classify and locate objects, such as humans, buildings, or cars, in images or video.

The Evolution of Multi-Agent Systems: From Early Neural Networks to Modern Distributed Learning (Methodological)

In this article, we'll explore the evolution of MAS from a methodological or approach-based perspective.