Getting Started with ScaNN

Google’s ScaNN is a library for ANNS. This guide walks you you through implementing ScaNN and demonstrate how to integrate it with Milvus.

Read the entire series

- Raft or not? The Best Solution to Data Consistency in Cloud-native Databases

- Understanding Faiss (Facebook AI Similarity Search)

- Information Retrieval Metrics

- Advanced Querying Techniques in Vector Databases

- Popular Machine-learning Algorithms Behind Vector Searches

- Hybrid Search: Combining Text and Image for Enhanced Search Capabilities

- Ensuring High Availability of Vector Databases

- Ranking Models: What Are They and When to Use Them?

- Navigating the Nuances of Lexical and Semantic Search with Zilliz

- Enhancing Efficiency in Vector Searches with Binary Quantization and Milvus

- Model Providers: Open Source vs. Closed-Source

- Embedding and Querying Multilingual Languages with Milvus

- An Ultimate Guide to Vectorizing and Querying Structured Data

- Understanding HNSWlib: A Graph-based Library for Fast Approximate Nearest Neighbor Search

- What is ScaNN (Scalable Nearest Neighbors)?

- Getting Started with ScaNN

- Next-Gen Retrieval: How Cross-Encoders and Sparse Matrix Factorization Redefine k-NN Search

- What is Voyager?

- What is Annoy?

Nearest neighbor search (NNS) is a fundamental technique for identifying similar items in large datasets, powering applications such as recommendation engines, image retrieval, and document search. For instance, in e-commerce, NNS algorithms can suggest products similar to what a customer is viewing, based on attributes like category, price, or reviews. However, as datasets grow in size and complexity, achieving both speed and accuracy becomes increasingly challenging.

To address these challenges, approximate nearest neighbor search (ANNS) offers a solution by trading a small degree of accuracy for significant improvements in speed and scalability. Google’s ScaNN (Scalable Nearest Neighbors) is a library for ANNS, designed to handle large, high-dimensional datasets efficiently. By employing advanced techniques like clustering and compression, ScaNN delivers high-performance search while maintaining accuracy.

In my previous post, I introduced the basics of vector search and ScaNN. In this post, we’ll guide you through implementing ScaNN and demonstrate how to integrate it with Milvus, an open-source vector database built for production-scale vector searches. Together, ScaNN and Milvus provide a robust and scalable solution for building next-generation applications.

Getting Started with ScaNN

To use ScaNN, start by installing it with pip:

pip install scann

This command installs ScaNN and its dependencies. After the installations are done, the next step is to prepare the dataset on which we will conduct nearest-neighbor searches.

Preparing the Dataset

In this blog, we will use the GloVe dataset, which provides pre-trained word embeddings. Word embeddings represent words as vectors in a high-dimensional space, where similar words are close together. For instance, king and queen have similar embeddings, making this dataset ideal for testing similarity searches.

Load the GloVe dataset as follows:

# Import necessary libraries

import numpy as np

import h5py

import os

import requests

import tempfile

import scann # Install with: pip install scann

# Download and prepare the dataset

with tempfile.TemporaryDirectory() as temp_dir:

# Fetching dataset from URL and saving it locally

response = requests.get("http://ann-benchmarks.com/glove-100-angular.hdf5")

file_path = os.path.join(temp_dir, "glove_data.hdf5")

with open(file_path, 'wb') as file:

file.write(response.content)

# Loading data into memory with h5py

glove_data = h5py.File(file_path, "r")

# Splitting data into vectors and queries

vectors = glove_data['train']

queries = glove_data['test']

print("Vectors shape:", vectors.shape)

print("Queries shape:", queries.shape)

This code downloads the GloVe dataset, stores it temporarily, and loads it into memory. The dataset is split into train (our main data, stored as vectors) and test (our sample queries, stored as queries).

Normalizing the Vectors

To ensure that similarity calculations are meaningful, normalize the vectors.

# Normalize vectors for efficient ScaNN indexing

normalized_vectors = vectors / np.linalg.norm(vectors, axis=1)[:, np.newaxis]

Each vector is divided by its magnitude and computed with np.linalg.norm, which brings each vector to a unit length. This is useful for dot-product similarity because it prevents longer vectors from dominating similarity calculations, leading to more accurate results.

Configuring the ScaNN Index

The next step is to configure the ScaNN index. An index is a data structure that organizes data to speed up search operations. By configuring the index, you control the balance between search speed and accuracy.

# Configure and build ScaNN indexer

# Setting up ScaNN for efficient search with hybrid scoring and reordering

index = scann.scann_ops_pybind.builder(normalized_vectors, 10, "dot_product").tree(

num_leaves=2000, num_leaves_to_search=100, training_sample_size=250000).score_ah(

2, anisotropic_quantization_threshold=0.2).reorder(100).build()

In the above code, we specify normalized_vectors as the dataset to index, with 10 as the number of nearest neighbors to retrieve for each query. We then set dot_product as the similarity metric which measures the angle between vectors. num_leaves=2000 divides the data into 2000 clusters, which reduces the search space and speeds up searches by focusing on relevant clusters. We then set num_leaves_to_search=100 to limit the search to 100 clusters, further balancing speed and accuracy. training_sample_size=250000 specifies the number of samples to train the index, which speeds up indexing without using the entire dataset.

The score_ah(2, anisotropic_quantization_threshold=0.2) parameter enables asymmetric hashing, a data compression technique with a quantization threshold to compress data, reducing memory usage and improving retrieval speed. Finally, reorder(100) refines the top 100 results to ensure high accuracy.

Running Searches

Once the index is configured, perform similarity searches to find the nearest neighbors of your queries. A search retrieves the closest matches based on the index’s configuration.

# Run initial search with default neighbors set to 10

neighbors, distances = index.search_batched(queries)

# Show search results for a sample query

sample_query_index = 0 # Change this index to see results for different queries

print(f"\nSample results for query {sample_query_index}:")

print("Nearest neighbor indices:", neighbors[sample_query_index])

print("Distances to nearest neighbors:", distances[sample_query_index])

In the above code, we run a batched search on the queries. The neighbors variable stores the indices of the closest vectors for each query, while distances contain the similarity scores for these neighbors. Lower distance values indicate closer similarity.

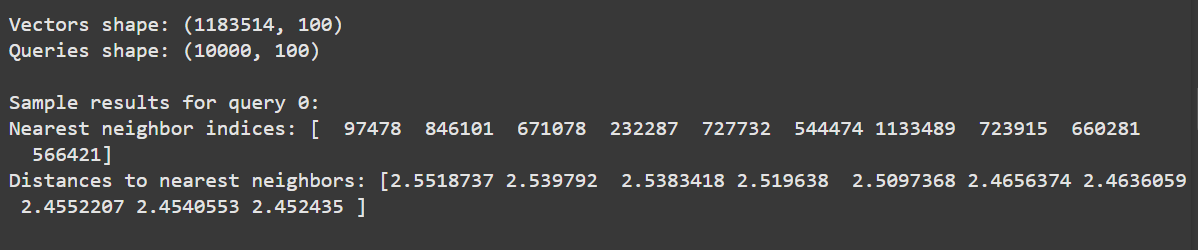

The expected output is as follows:

Figure: Nearest neighbor indices and distances for a sample query in ScaNN

The output displays the nearest neighbor indices and their distances for the first query. The distances are similarity scores, where lower values indicate higher similarity.

Let’s now integrate ScaNN with Milvus and use a more human-friendly and simple dataset.

Integrating ScaNN with Milvus

Milvus is a vector database optimized for storing and retrieving large collections (up to trillions) of vectors. Milvus natively integrates ScaNN within its architecture for fast search results. By combining ScaNN with Milvus, you gain the benefit of Milvus’s data management and scalability, making it possible to handle vast datasets efficiently.

In this section, we’ll walk through how to implement ScaNN with Milvus.

Step 1: Installing Milvus and Its Dependencies

If you haven’t installed Milvus, follow this guide to install and run it on your machine.

Then, install the Milvus Python client, Pymilvus. This client lets you interact with Milvus and manage vector data collections using Python. PyMilvus also seamlessly integrates with various embedding and reranking models, making it easier to build modern AI applications with Milvus, particularly retrieval augmented generation (RAG).

pip install "pymilvus[model]"

Step 2: Initializing the Milvus Client and Creating a Collection

Initialize the Milvus client and define a schema for the collection. In Milvus, a collection is where you store and organize your vector data.

from pymilvus import MilvusClient, DataType

from pymilvus import model

# Initialize Milvus client with URI for the full server

client = MilvusClient(uri="http://localhost:19530") # Replace with your Milvus server URI

# Define the collection schema with 768 dimensions

schema = client.create_schema(

auto_id=False,

enable_dynamic_field=False,

)

schema.add_field(field_name="id", datatype=DataType.INT64, is_primary=True)

schema.add_field(field_name="vector", datatype=DataType.FLOAT_VECTOR, dim=768) # Set to 768

schema.add_field(field_name="text", datatype=DataType.VARCHAR, max_length=200)

The above code initializes the client and creates a connection to the Milvus server running locally on your computer. Then, it defines a schema for the collection, specifying that each entry will have an id (integer identifier), a vector field (100-dimensional float vector), and a text field (to store associated text data).

Step 3: Configuring the ScaNN Index in Milvus

Configure ScaNN as the index type for this collection, organizing the data to make searches faster and more efficient.

# ScaNN index parameters with nlist

index_params = client.prepare_index_params()

index_params.add_index(

field_name="vector",

index_type="SCANN", # Use ScaNN as the index type

metric_type="L2",

nlist=128

)

# Create collection in Milvus with ScaNN indexing

collection_name = "scann_collection"

if client.has_collection(collection_name=collection_name):

client.drop_collection(collection_name=collection_name)

client.create_collection(

collection_name=collection_name,

schema=schema,

index_params=index_params

)

Setting index_type="SCANN" instructs Milvus to use ScaNN for efficient search. metric_type="L2" means Milvus will use Euclidean distance to measure similarity, and nlist=128 divides the data into 128 clusters, which enhances search speed by focusing on relevant sections.

Step 4: Inserting Data into Milvus

Generate vector embeddings for sample documents and insert them into Milvus. This step stores each vector in Milvus with an associated id and text data.

# Define embedding function and prepare sample data

embedding_fn = model.DefaultEmbeddingFunction()

docs = [

"Artificial intelligence was founded as an academic discipline in 1956.",

"Alan Turing was the first person to conduct substantial research in AI.",

"Born in Maida Vale, London, Turing was raised in southern England.",

]

vectors = embedding_fn.encode_documents(docs)

# Insert sample data into Milvus

data = [{"id": i, "vector": vectors[i], "text": docs[i]} for i in range(len(vectors))]

client.insert(collection_name=collection_name, data=data)

In the above code, we encode the sample documents as vectors and insert them into Milvus. Each document is stored with a unique id, a 100-dimensional vector, and the original text for reference.

Step 5: Performing Vector Search in Milvus

Next, perform a vector search in Milvus using ScaNN as the index. Run a search to find the nearest vector for a sample query.

# Perform vector search in Milvus using ScaNN index with nprobe

SAMPLE_QUESTION = "What's Alan Turing's achievement?"

query_vectors = embedding_fn.encode_queries([SAMPLE_QUESTION])

# Set search parameters, including nprobe for ScaNN

search_params = {"nprobe": 10} # Adjust nprobe based on accuracy/speed trade-off

search_res = client.search(

collection_name=collection_name,

data=query_vectors,

limit=1,

output_fields=["text"],

search_params=search_params

)

# Retrieve and print the result

context = search_res[0][0]["entity"]["text"]

print("Search result:", context)

In the above code, we encode the query and use its embedding to search Milvus for a possible match. Setting nprobe=10 instructs Milvus to search within 10 clusters, balancing accuracy and performance. The limit=1 parameter restricts the results to the nearest match. Running this search retrieves the closest document to the query, allowing us to see the most relevant result.

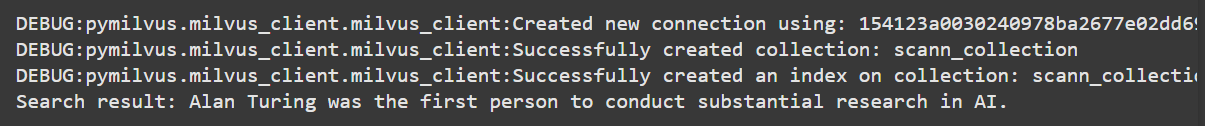

Here is the expected output:

Figure: Closest matching document for a sample query using ScaNN indexing in Milvus

The output shows the nearest match for the sample query, displaying the text of the most similar document. As you can see the most relevant result answers our question correctly. This means that Milvus and ScaNN were able to retrieve the correct result.

Conclusion

ScaNN (Scalable Nearest Neighbors) is a library for implementing approximate nearest neighbor search, striking a balance between speed and accuracy for large, high-dimensional datasets. Its advanced techniques, such as clustering and compression, make it an ideal solution for modern applications like recommendation systems, image retrieval, and natural language processing.

Milvus, an open-source vector database, natively supports and integrates ScaNN within its architecture, enabling developers to build scalable, production-ready systems capable of handling billions of vector data efficiently. Together, these tools provide the flexibility and performance needed to tackle real-world challenges in vector search.

In addition to ScaNN, Milvus also supports and optimizes many other types of ANN algorithms such as HNSW, DiskANN, and IVF, delivering optimal performance. For more details, see Milvus documentation.

Further Resources

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Raft or not? The Best Solution to Data Consistency in Cloud-native Databases

Explain why consensus-based algorithms like Paxos and Raft are not the silver bullet and propose a solution to consensus-based replication.

What is ScaNN (Scalable Nearest Neighbors)?

ScaNN is an open-source library developed by Google for fast, approximate nearest neighbor searches in large-scale datasets.

What is Voyager?

Voyager is an Approximate Nearest Neighbor (ANN) search library optimized for high-dimensional vector data.