Embedding and Querying Multilingual Languages with Milvus

This guide will explore the challenges, strategies, and approaches to embedding multilingual languages into vector spaces using Milvus and the BGE-M3 multilingual embedding model.


Introduction

The world is becoming increasingly interconnected, and the ability to effectively process and analyze data across different languages has become more crucial than ever. However, many popular natural language processing (NLP) models predominantly cater to English, leaving a significant gap in linguistic capabilities across various applications. This gap underscores the pressing need for multilingual solutions, particularly in vector search engines.


What Are Multilingual Vector Embeddings

Multilingual vector embeddings are numerical representations of words or phrases across different languages, capturing their meanings within a shared vector space. For instance, words like "cat" in English, "gato" in Spanish, and "chat" in French are represented by vectors situated closely to each other in a high-dimensional space, indicating their semantic similarity. This semantic cohesion proves invaluable for vector search engines, enabling them to fetch relevant results irrespective of the query language. Multilingual embeddings are usually stored in vector databases like Milvus, facilitating seamless retrieval of semantically related information.
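To make "situated closely" concrete, the sketch below compares vectors with cosine similarity, a common way to measure how close two embeddings are. The four-dimensional vectors are hypothetical toy values chosen for illustration, not real model outputs. BGE-M3's dense vectors, used later in this guide, have 1,024 dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings: translations of "cat" point in similar directions,
# while an unrelated word ("car") points elsewhere.
toy_embeddings = {
    "cat (en)": [0.90, 0.10, 0.05, 0.20],
    "gato (es)": [0.88, 0.12, 0.07, 0.18],
    "chat (fr)": [0.85, 0.15, 0.02, 0.22],
    "car (en)": [0.05, 0.90, 0.40, 0.10],
}

query = toy_embeddings["cat (en)"]
for word, vector in toy_embeddings.items():
    print(f"{word}: {cosine_similarity(query, vector):.3f}")
```

In a real system, the vectors come from a multilingual model, and the vector database performs this kind of comparison at scale.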

Milvus is an open-source vector database built to manage large-scale datasets. Because Milvus stores and searches vectors rather than raw text, it is language-agnostic: it can index data in any language for which an embedding model, such as BGE-M3, produces multilingual vector representations. Milvus also supports a range of index types, including IVF, HNSW, and PQ-based indexes, which keep searches fast and accurate even on large, complex multilingual datasets. These capabilities make Milvus a strong choice for deploying resilient, scalable, and language-agnostic search solutions.

Challenges of Embedding Multilingual Languages

Embedding text from many languages into a shared vector space presents a unique set of challenges that must be addressed to produce accurate and meaningful representations. Key obstacles include:

- Linguistic Diversity: Every language has its own grammar, writing system, and vocabulary, so building a single embedding model that works well for all of them is difficult. For example, languages with rich morphology, such as Arabic or Finnish, can trip up models that treat whole words as single units, because these models struggle to capture how words are formed and inflected.

- Data Availability: Many languages, especially low-resource ones, lack high-quality datasets large enough to train robust embedding models. Without enough data, the resulting representations are less accurate, which leads to poor performance in real-world applications.

- Tokenization and Segmentation: Languages such as Chinese and Japanese, whose scripts use logographic characters and do not separate words with spaces, require specialized tokenization and segmentation to break text into meaningful units for embedding. Ignoring these nuances can produce inaccurate or incomplete representations.

- Cross-lingual Alignment: Achieving optimal cross-lingual alignment, where semantically similar concepts across languages are mapped to nearby vectors, remains challenging, especially for distant language pairs with vastly different linguistic structures, such as English and Chinese.
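The tokenization challenge is easy to see with a naive approach. The sketch below splits text on whitespace, which works tolerably for English but treats an entire unspaced Chinese sentence as a single "word". Multilingual models such as BGE-M3 avoid this by using a learned subword tokenizer instead of whitespace splitting; the snippet only illustrates the problem, not how BGE-M3 tokenizes text.

```python
def whitespace_tokenize(text):
    """Naive tokenizer: split on runs of whitespace."""
    return text.split()

english = "I like cats"
chinese = "我喜欢猫"  # "I like cats" in Chinese, written without spaces

print(whitespace_tokenize(english))  # ['I', 'like', 'cats']
print(whitespace_tokenize(chinese))  # ['我喜欢猫'] (the whole sentence becomes one "token")

# A character-level fallback at least yields usable units, but it ignores
# word boundaries: 喜欢 ("like") is one word spread over two characters.
print(list(chinese))  # ['我', '喜', '欢', '猫']
```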

Overview of Multilingual Embedding Models

Multilingual embedding models such as BGE-M3 are a significant advance in natural language processing. They are designed to understand and process many languages, which makes them versatile across multilingual applications. They work by mapping words or phrases from different languages to vectors in a shared embedding space, which lets them capture semantic and syntactic similarities across languages and enables cross-lingual knowledge transfer.

BGE-M3, developed by BAAI, is a multilingual embedding model built for retrieval. It performs strongly on multilingual and cross-lingual information retrieval benchmarks, and its name reflects three properties: multi-linguality (more than 100 languages), multi-functionality (dense, sparse, and multi-vector retrieval), and multi-granularity (inputs ranging from short sentences to documents of up to 8,192 tokens). It uses deep learning to learn language-agnostic representations, meaning it can capture the meaning of words and phrases regardless of their language.

Advanced multilingual embedding models offer several benefits. First, they help overcome the language barrier in natural language processing tasks. Second, they can apply knowledge learned from one language to improve performance in others, a concept known as cross-lingual transfer learning. This is especially useful for low-resource languages, where the model can draw on what it learned from high-resource languages. Finally, these models make it possible to build truly global AI systems that understand and interact with users in their native languages, improving both user experience and accessibility.

Using Milvus and BGE-M3 to Encode and Retrieve Multilingual Languages

The PyMilvus model library (pymilvus.model) integrates many popular embedding models, including BGE-M3. With this integration, you can convert your multilingual documents into embeddings without adding extra components to your development pipeline, then store those embeddings in Milvus for similarity retrieval.

Below is a step-by-step guide on how to set up Milvus with BGE-M3 for managing and searching multilingual data.

Setting up the Environment

Before writing any code, install Milvus by following this guide. Then install the required Python package with the command below:

```shell
pip install "pymilvus[model]"
```

The [model] extra installs pymilvus.model, the PyMilvus library of embedding-model wrappers, which lets you generate embeddings from your data with a variety of models.

Here, you will use it to run BGE-M3 alongside Milvus. The command also installs PyMilvus itself, the Python SDK for interacting with Milvus.

Next, import the necessary modules and classes:

```python
# Import necessary modules and classes
from pymilvus import MilvusClient, DataType

# Import BGEM3EmbeddingFunction for sentence encoding
from pymilvus.model.hybrid import BGEM3EmbeddingFunction
```

The MilvusClient will help you interact with the Milvus vector database. The DataType class will help define data types for the collection's fields. Finally, the BGEM3EmbeddingFunction class will help you load and use the BGE-M3 multilingual sentence embedding model.

With these imports in place, your environment is set up.

Instantiating the BGE-M3 Model and Connecting to Milvus

Next, instantiate the embedding model, in this case BGE-M3. This is the model you will use to embed non-English text.

```python
# Instantiate BGEM3EmbeddingFunction,
# a pre-trained multilingual sentence embedding model.
# use_fp16 should be False when running on the CPU.
bge_m3_encoder = BGEM3EmbeddingFunction(
    model_name='BAAI/bge-m3', device='cpu', use_fp16=False
)
```

This encoder can generate high-quality embeddings for text in over 100 languages.

Next, establish a connection to the Milvus instance. You must specify the Uniform Resource Identifier (URI) where your Milvus instance is running. The default URI for Milvus is http://localhost:19530.

```python
# Connect to Milvus instance
client = MilvusClient(
    uri="http://localhost:19530"
)
```

Replace the uri value with your Milvus instance's configuration.

Defining the Collection Schema

After connecting with your Milvus instance, you need to define the schema for your collection, which will store non-English language text documents and their embeddings. You will use MilvusClient to achieve this.

```python
# Define the collection schema
schema = MilvusClient.create_schema(
    auto_id=True,
    enable_dynamic_field=True,
)

# Add fields to schema
schema.add_field(field_name="doc_id", datatype=DataType.INT64, is_primary=True)
schema.add_field(field_name="doc_text", datatype=DataType.VARCHAR, max_length=65535)
schema.add_field(field_name="doc_embedding", datatype=DataType.FLOAT_VECTOR, dim=1024)
```

The above code defines the collection schema with create_schema. Setting auto_id=True tells Milvus to generate a unique ID for each document automatically. Setting enable_dynamic_field=True lets you insert entities with extra keys that are not defined in the schema; Milvus stores those values in a hidden dynamic field.

Here is what each field in the schema does:

- doc_id: Stores the unique identifier for each document (auto-generated).

- doc_text: Stores the text content of the document.

- doc_embedding: Stores the 1024-dimensional embedding vector representing the document's semantics. The dimension must match the size of BGE-M3's dense embeddings.
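Because doc_embedding is a FLOAT_VECTOR field, each dimension is stored as a 32-bit float, so a 1024-dimensional vector takes 1024 × 4 = 4,096 bytes. The sketch below scales that to a whole collection. It counts raw vector bytes only and ignores index structures, the text field, and other overhead, so treat the result as a rough lower bound; raw_vector_bytes is a hypothetical helper, not a Milvus API.

```python
BYTES_PER_FLOAT32 = 4  # FLOAT_VECTOR stores 32-bit floats

def raw_vector_bytes(num_vectors, dim=1024):
    """Raw storage for float32 vectors, excluding indexes and other fields."""
    return num_vectors * dim * BYTES_PER_FLOAT32

per_vector = raw_vector_bytes(1)
one_million = raw_vector_bytes(1_000_000)
print(per_vector)                      # 4096 bytes per 1024-dim vector
print(round(one_million / 2**30, 2))   # 3.81 (GiB for one million vectors)
```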

Creating the Collection

After creating the schema for your collection, the next step is to create the collection. Start by checking whether the collection you intend to create already exists in Milvus. If it does, drop it from the database and create a new one using the defined schema and description:

```python
collection_name = "multilingual_docs"

# Drop the existing collection if it exists
if client.has_collection(collection_name):
    client.drop_collection(collection_name)

# Create the collection
client.create_collection(
    collection_name=collection_name,
    schema=schema,
    description="Multilingual Document Collection"
)
```

Having created the collection, you now need to create an index to speed up searches for specific data within it.

Creating the Index

Imagine a collection with thousands of documents. Searching for similar documents without an index would involve comparing the query embedding with the embedding of every single document in the collection. This can be very slow. Indexes speed up the querying process.
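The brute-force approach described above, often called a flat or exhaustive search, can be sketched in a few lines of plain Python. It computes the L2 distance from the query to every stored vector, so its cost grows linearly with the collection size. The vectors and function names are illustrative and are not Milvus internals.

```python
import heapq
import math

def l2_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def flat_search(query, vectors, k):
    """Exhaustive k-nearest-neighbor search: compare the query with every vector.

    Returns (index, distance) pairs sorted from nearest to farthest.
    """
    distances = ((i, l2_distance(query, v)) for i, v in enumerate(vectors))
    return heapq.nsmallest(k, distances, key=lambda pair: pair[1])

vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.2]]
print(flat_search([0.4, 0.3], vectors, k=2))
```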

Proceed to create an index on the doc_embedding field to enable efficient similarity search:

# Create index params

index_params = [{

"field_name": "doc_embedding",

"index_type": "IVF_FLAT",

"metric_type": "L2",

"params": {"nlist": 128}

}]

# Create index

client.create_index(collection_name, index_params)

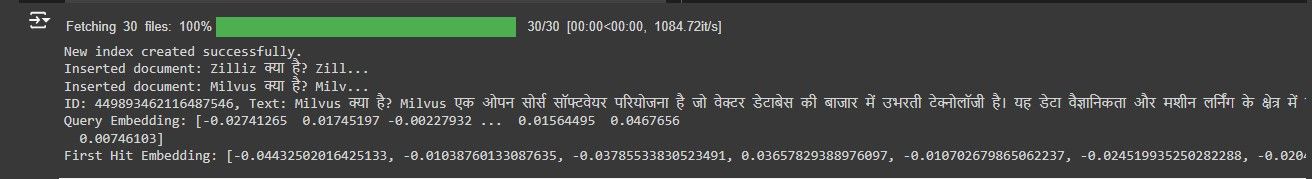

print("New index created successfully.")

The above code defines the index parameters and then calls create_index to build an index on the doc_embedding field. IVF_FLAT partitions the vectors into nlist clusters (128 here); at query time, Milvus searches only the clusters closest to the query instead of the whole collection. The L2 metric measures Euclidean distance, where a smaller distance means a closer match.
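To build intuition for nlist and nprobe (the number of clusters probed at search time), here is a heavily simplified IVF-style index in plain Python. It is a toy, not how Milvus implements IVF_FLAT: a real IVF index trains its centroids with k-means clustering, whereas this sketch simply uses the first nlist vectors as centroids to stay short. Each vector joins the list of its nearest centroid, and a query scans only the nprobe nearest lists.

```python
import math
import random

def l2_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class ToyIVFIndex:
    """Toy inverted-file index; centroids are the first nlist vectors (no k-means)."""

    def __init__(self, vectors, nlist):
        self.vectors = vectors
        self.centroids = vectors[:nlist]
        # One inverted list of vector ids per centroid.
        self.lists = [[] for _ in range(nlist)]
        for vid, v in enumerate(vectors):
            nearest = min(range(nlist), key=lambda c: l2_distance(v, self.centroids[c]))
            self.lists[nearest].append(vid)

    def search(self, query, k, nprobe):
        # Probe only the nprobe lists whose centroids are closest to the query.
        probe = sorted(range(len(self.centroids)),
                       key=lambda c: l2_distance(query, self.centroids[c]))[:nprobe]
        candidates = [vid for c in probe for vid in self.lists[c]]
        candidates.sort(key=lambda vid: l2_distance(query, self.vectors[vid]))
        return candidates[:k]

random.seed(0)
data = [[random.random() for _ in range(8)] for _ in range(500)]
index = ToyIVFIndex(data, nlist=16)
query = [random.random() for _ in range(8)]

exact = sorted(range(len(data)), key=lambda i: l2_distance(query, data[i]))[:5]
print("nprobe=2 :", index.search(query, k=5, nprobe=2))   # may miss some true neighbors
print("nprobe=16:", index.search(query, k=5, nprobe=16))  # all lists probed
print("exact    :", exact)
```

Raising nprobe toward nlist trades speed for recall; when nprobe equals nlist, the toy index scans every vector and returns the same results as an exhaustive search.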

Inserting Multilingual Documents into Milvus

After creating the index, you are ready to insert your multilingual documents. Below are some example documents in Hindi. The BGE-M3 multilingual embedding model will encode these documents.

```python
documents = [
    # Roughly: "What is Zilliz? Zilliz is a high-performance data science company ..."
    "Zilliz क्या है? Zilliz एक उच्च प्रदर्शन डेटा वैज्ञानिकता कंपनी है जो विशेष रूप से डेटा वैज्ञानिकता और अनुरूप साधनों पर केंद्रित है। यह उन्नत अनुकूलन और तेजी से परिसर को प्रदान करता है, जिससे वैज्ञानिक और तकनीकी समस्याओं का हल करना संभव होता है।",
    # Roughly: "What is Milvus? Milvus is an open-source software project in the vector database market ..."
    "Milvus क्या है? Milvus एक ओपन सोर्स सॉफ्टवेयर परियोजना है जो वेक्टर डेटाबेस की बाजार में उभरती टेक्नोलॉजी है। यह डेटा वैज्ञानिकता और मशीन लर्निंग के क्षेत्र में एक अत्यधिक प्रभावी और सरल उपाय है जो विभिन्न उपयोग केस को समर्थ बनाता है। Milvus विशेष रूप से वेक्टर सिमिलैरिटी और उपक्रमी अनुरोधों के लिए डेटा को त्वरित और सरल तरीके से प्रोसेस करने के लिए विकसित किया गया है।"
]
```

Proceed to iterate over the documents and insert them into Milvus.

```python
# Iterate over the documents and insert them into the collection
for doc_text in documents:
    # encode_documents() returns a dict; 'dense' holds one vector per input text
    doc_embedding = bge_m3_encoder.encode_documents([doc_text])['dense'][0]

    entity = {
        "doc_text": doc_text,
        "doc_embedding": doc_embedding.tolist()
    }

    insert_result = client.insert(collection_name, [entity])
    print(f"Inserted document: {doc_text[:20]}...")
```

The BGE-M3 encoder generates each document's embedding, which is then inserted into the Milvus collection. Now that your multilingual documents are embedded and stored in Milvus, you can retrieve semantically related data for different purposes. Let us implement a simple querying and similarity search system, which you could later extend into a retrieval-augmented generation (RAG) system.

Querying and Similarity Search

To perform a similarity search, first load the collection into memory:

```python
# Load the collection into memory
client.load_collection(collection_name)
```

Then, define the search parameters: the metric type, which must match the index (L2 for Euclidean distance), and nprobe, the number of IVF clusters to search. Higher nprobe values improve accuracy but make searches slower:

```python
search_params = {
    "metric_type": "L2",
    "params": {"nprobe": 10}
}
```
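A note on the L2 metric: for unit-length vectors, squared L2 distance and cosine similarity are linked by ||a - b||² = 2 - 2·cos(a, b), so ranking by smallest L2 distance gives the same order as ranking by highest cosine similarity. The check below verifies this identity on random unit vectors. Whether your embeddings are normalized depends on the encoder configuration; BGE-M3's dense output is typically L2-normalized, but treat that as an assumption to confirm for your setup.

```python
import math
import random

def normalize(v):
    """Scale v to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def squared_l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cosine(a, b):
    # For unit vectors, the dot product equals the cosine similarity.
    return sum(x * y for x, y in zip(a, b))

random.seed(42)
query = normalize([random.gauss(0, 1) for _ in range(16)])
docs = [normalize([random.gauss(0, 1) for _ in range(16)]) for _ in range(10)]

# Identity for unit vectors: ||a - b||^2 == 2 - 2 * cos(a, b)
for d in docs:
    assert math.isclose(squared_l2(query, d), 2 - 2 * cosine(query, d), abs_tol=1e-9)

by_l2 = sorted(range(len(docs)), key=lambda i: squared_l2(query, docs[i]))
by_cosine = sorted(range(len(docs)), key=lambda i: -cosine(query, docs[i]))
print(by_l2 == by_cosine)
```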

Next, write a query in Hindi, since the documents you embedded are in Hindi, and generate its embedding with the BGE-M3 encoder:

```python
query_text = "Milvus क्या है?"  # "What is Milvus?"
query_embedding = bge_m3_encoder.encode_queries([query_text])['dense'][0]
```

Finally, perform the similarity search in Milvus, retrieving the most similar documents based on the query embedding:

```python
search_result = client.search(
    collection_name=collection_name,
    data=[query_embedding],
    limit=10,
    output_fields=["doc_text", "doc_embedding"],
    search_params=search_params
)
```

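The client.search method returns one list of hits per query vector. Each list holds up to limit results, ordered from most to least similar (for L2, smallest distance first). The code below prints the first hit, if there is one.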

```python
if search_result and len(search_result[0]) > 0:
    first_hit = search_result[0][0]
    entity = first_hit['entity']
    print(f"Text: {entity['doc_text']}")
    print(f"Query Embedding: {query_embedding}")
    print(f"First Hit Embedding: {entity['doc_embedding']}")
else:
    print("No results found.")
```

The results of the above code are as follows:

The results show the first hit for the query "Milvus क्या है?", which is Hindi for "What is Milvus?". The first hit in the screenshot above is the document about Milvus, not the one about Zilliz. This shows that you can embed non-English text and retrieve semantically related information for a non-English search query. To read the query and output in English, you can use a translation tool such as Google Translate.
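The printing code above shows only the first hit plus its full 1,024-number embedding, which is hard to read. A small helper can summarize every hit instead. The sketch assumes the MilvusClient result shape (one list per query vector, each hit a dict-like object with id, distance, and entity keys); summarize_hits is a hypothetical helper, and the sample result is constructed by hand with made-up ids and distances.

```python
def summarize_hits(search_result, text_field="doc_text", max_chars=40):
    """Turn a MilvusClient.search() result into (rank, id, distance, snippet) rows.

    Only the first query's hits are summarized; loop over search_result for batches.
    """
    rows = []
    for rank, hit in enumerate(search_result[0], start=1):
        text = hit["entity"].get(text_field, "")
        rows.append((rank, hit["id"], hit["distance"], text[:max_chars]))
    return rows

# Hypothetical result shaped like MilvusClient.search() output (values are made up).
sample_result = [[
    {"id": 101, "distance": 0.42, "entity": {"doc_text": "Milvus क्या है? ..."}},
    {"id": 100, "distance": 0.97, "entity": {"doc_text": "Zilliz क्या है? ..."}},
]]

for rank, doc_id, distance, snippet in summarize_hits(sample_result):
    # With the L2 metric, a smaller distance means a closer match.
    print(f"{rank}. id={doc_id} distance={distance:.2f} text={snippet}")
```

In the tutorial, you would call summarize_hits(search_result) on the real result, and you could drop "doc_embedding" from output_fields if you no longer print the vectors.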

Other Multilingual Embedding Models

In addition to BGE-M3, many other multilingual embedding models are available in the market. Here is a comparison of BGE-M3 with other prominent embedding models:

Comparison of BGE-M3 with Other Models

Multilingual BERT (mBERT): One of the earliest and most widely used transformer models, mBERT provides embeddings for over 100 languages. However, more recent models tend to outperform it on multilingual benchmarks. XLM-RoBERTa, a multilingual extension of RoBERTa pre-trained on data from 100 languages, shows strong cross-lingual performance; BGE-M3 itself is built on XLM-RoBERTa and adds retrieval-focused training and support for much longer inputs.

LaBSE: A dual-encoder model from Google that embeds over 100 languages into the same semantic space. It excels at matching sentences with their translations, but like BGE-M3 it is an embedding model and does not generate text. For generation, the multilingual version of T5 (mT5), trained on data from 101 languages, can produce text, but it is not primarily an embedding model and has higher computational requirements.

mALBERT: An efficient multilingual model that uses self-supervised pretraining and cross-lingual alignment. Though lightweight, it may lag behind BGE-M3 on some benchmarks.

Selecting the Right Model

The choice of model depends on factors such as language coverage, application requirements (e.g., generation vs. understanding), computational constraints, and performance benchmarks on the target task(s).

If you work with lower-resource languages, BGE-M3 provides robust coverage of more than 100 languages. Note that BGE-M3 is a retrieval model: for text generation tasks such as translation and summarization, you need a generative model (such as mT5), and BGE-M3 can supply the retrieval step. For tasks centered on matching sentences with their translations, dual-encoder models such as LaBSE remain a strong option, so benchmark candidate models on your own data.

If computational resources are limited, lightweight models such as mALBERT could be an option, at some cost in accuracy.

Using Zilliz Cloud for Your Production Environment

So far, we have discussed using Milvus with the BGE-M3 multilingual embedding model in a self-hosted deployment. But what if you do not want to host Milvus yourself and would rather use a fully managed, cloud-based vector database? This is where Zilliz Cloud comes in.

Zilliz Cloud is a fully managed vector database service built on Milvus, and it is well-suited for production environments, especially those dealing with multilingual datasets. Here are some of its advantages and real-world applications:

Advantages of using Zilliz Cloud in production environments for handling multilingual datasets:

- Efficient Vector Indexing and Searching: Zilliz Cloud uses advanced vector indexing algorithms, making it highly efficient for searching large multilingual datasets. This is particularly useful in production environments where speed and efficiency are required.

- Scalability: Zilliz Cloud is designed to handle large-scale data. It can easily scale out to accommodate growing datasets, making it a robust choice for production environments.

- Support for Multiple Languages: Like Milvus, Zilliz Cloud stores and searches vectors rather than raw text, so it can index embeddings of content in any language. This makes it a good fit for multilingual datasets, enabling efficient search and retrieval in a multilingual context.

Real-world applications where Zilliz excels in multilingual settings:

- E-commerce Recommendations: In global e-commerce platforms catering to customers who speak different languages, Zilliz can provide accurate product recommendations. By processing and indexing customer behavior data in multiple languages, Zilliz can help generate personalized recommendations for each user, regardless of language.

- Multilingual Customer Support: Companies with a global customer base often must provide customer support in multiple languages. Zilliz can power intelligent chatbots that can understand and respond to customer queries in various languages, improving the efficiency and effectiveness of customer support.

- Global Content Discovery: Zilliz can improve content discovery for global content platforms like news aggregators or social media sites. Indexing content in multiple languages can help users discover relevant content in their preferred language.

These are just a few examples of how Zilliz can handle multilingual datasets in production environments. For more information, refer to this vector database use case page.

Conclusion

Throughout this guide, you've gained valuable insights into the world of multilingual vector embeddings and their powerful applications within the context of vector similarity search.

You now understand the importance of supporting multiple languages in modern NLP applications and the challenges of embedding diverse linguistic data into vector spaces. With this knowledge, you can explore and experiment with Milvus and multilingual embedding models to build language-agnostic applications that work across linguistic boundaries.

Further Resources

- Full code with detailed comments: https://www.kaggle.com/code/deniskuria/notebook1e4c44ebed

- Milvus Documentation: https://milvus.io/docs/

- Embedding with BGE-M3 Documentation: BGE M3 Milvus documentation

- Zilliz Cloud: https://zilliz.com/cloud

- Milvus Community: https://github.com/milvus-io/milvus/discussions
