Maximizing GPT 4.x's Potential Through Fine-Tuning Techniques

This article explores the real potential of GPT 4.x, highlighting its advanced powers and the critical role of fine-tuning in making the model suitable for specific applications.

Read the entire series

- Introduction to Unstructured Data

- What is a Vector Database and how does it work: Implementation, Optimization & Scaling for Production Applications

- Understanding Vector Databases: Compare Vector Databases, Vector Search Libraries, and Vector Search Plugins

- Introduction to Milvus Vector Database

- Milvus Quickstart: Install Milvus Vector Database in 5 Minutes

- Introduction to Vector Similarity Search

- Everything You Need to Know about Vector Index Basics

- Scalar Quantization and Product Quantization

- Hierarchical Navigable Small Worlds (HNSW)

- Approximate Nearest Neighbors Oh Yeah (Annoy)

- Choosing the Right Vector Index for Your Project

- DiskANN and the Vamana Algorithm

- Safeguard Data Integrity: Backup and Recovery in Vector Databases

- Dense Vectors in AI: Maximizing Data Potential in Machine Learning

- Integrating Vector Databases with Cloud Computing: A Strategic Solution to Modern Data Challenges

- A Beginner's Guide to Implementing Vector Databases

- Maintaining Data Integrity in Vector Databases

- From Rows and Columns to Vectors: The Evolutionary Journey of Database Technologies

- Decoding Softmax Activation Function

- Harnessing Product Quantization for Memory Efficiency in Vector Databases

- How to Spot Search Performance Bottleneck in Vector Databases

- Ensuring High Availability of Vector Databases

- Mastering Locality Sensitive Hashing: A Comprehensive Tutorial and Use Cases

- Vector Library vs Vector Database: Which One is Right for You?

- Maximizing GPT 4.x's Potential Through Fine-Tuning Techniques

- Deploying Vector Databases in Multi-Cloud Environments

- An Introduction to Vector Embeddings: What They Are and How to Use Them

Introduction

LLMs are advanced AI systems designed to process and generate human-like text, revolutionizing natural language processing tasks. In the AI world, GPT 4.x has become a groundbreaking LLM. This particular version of GPT (Generative Pre-trained Transformer), developed by OpenAI, has a mind-blowing understanding of language and is versatile in various tasks. GPT 4.x has proved to be a valuable asset in the AI landscape when handling multiple challenges. In this article, we will go through one of the advanced techniques that can further enhance GPT 4.x's performance. The technique is known as fine-tuning. With fine-tuned GPT-4 models, we can develop AI applications that address a wide array of challenges, from content generation to customer service automation.You can customize the model to suit specific applications. OpenAI's GPT series, particularly GPT-4, stands as a pinnacle in AI advancement, showcasing remarkable language comprehension and versatility. Whether you are a developer who wants to integrate AI into your software or someone curious about the latest trends, these fine-tuning models have you covered.

Understanding GPT 4.x

GPT 4. x, variants of the Generative Pre-trained Transformer series, are now considered marvels in the AI domain, specifically in NLP (Natural Language Processing). Now, let us talk about the architecture of this advanced model, shall we?This state-of-the-art model is built on a deep-learning architecture, leveraging layers of neural networks to comprehend and generate text that mimics human-like responses based on the input. GPT 4.x outshines its predecessors, including GPT 3.5, with its enriched language context knowledge. This empowers GPT 4.x to produce responses that are more nuanced, relevant, and coherent. These advancements are a result of the integration of sophisticated training techniques, larger datasets, and enhancements in the underlying architecture.

Understanding The Fine-Tuning Process

What exactly is fine-tuning? In AI and ML, fine-tuning refers to adjusting a pre-trained model to make it an expert in a specific task. Fine-tuning aims to refine the extensive knowledge of the model acquired during its initial training to understand better and generate text for specialized tasks.

How to Fine-Tune GPT4

Fine-tuning facilitates the customization of GPT-4, allowing us to adapt its capabilities to suit specific use cases and requirements. The process of fine-tuning for GPT 4.x involves several steps, including

Data Preparation

First, you will have to curate a dataset specific to the task. This dataset should represent the type of content the model will generate, involving examples of input data and the desired output.

Model Selection

It wouldn't be wrong to consider GPT 4.x a single model because it is. But it comes in various sizes and configurations. Select the appropriate version of the model that balances computational efficiency and performance.

Training Environment Setup

Preparing a training environment for such a large and sophisticated model requires computational resources. The environment setup includes hardware and software configurations, appropriate machine learning frameworks, and GPUs for accelerated computing.

Fine-Tuning Execution

The fine-tuning process can start after successful data preparation and environment setup. This process involves training the model on the specialized dataset and adjusting the learning rate parameter to ensure efficient learning by the model. Monitoring the model's performance through validation helps achieve the best results.Following all these steps will allow you to fine-tune your GPT 4.x to excel in specific tasks. This approach makes it an even more powerful tool in the AI world

Techniques for Effective Fine-Tuning

If you want your fine-tuned model to work effectively and efficiently, then the following are some key areas to focus on.

Data Curation

Data curation is the most critical aspect when fine-tuning LLMs, as it directly impacts your model's output. Highly curated data is vital for analytics to deliver meaningful insights and avoid errors that could lead to poor results.An automated data curation approach, CLEAR (Confidence-based LLM Evaluation and Rectification), is a pipeline for instruction-tuning datasets that can be used with any language model and fine-tuning procedure. This pipeline identifies low-quality training data and corrects or filters it using LLM-derived confidence.CLEAR is considered a framework that improves the dataset and the trained model outputs without involving any additional fine-tuning computations. Tools such as NVIDIA, Lilac, NeMo Data Curator, etc., came into the market for better data pipelines.

Parameter Adjustment

The second most crucial aspect of the fine-tuning process is parameter adjustment. It is essential because it greatly maximizes the potential of LLMs like GPT-4 for specific domains or tasks. Parameters like batch size, learning rate, and other Hyperparameters must be adjusted to optimize model performance.The first one to discuss is the learning rate, which determines how much the model's knowledge is adjusted with the incoming new dataset. You need to find a learning rate that doesn't overshoot the optimal learning nor make the learning too slow.Another thing to discuss is the batch size. It directly impacts memory utilization, influencing the model's generalization ability. You have to experiment with these parameters to find the most optimal settings for your task. For example, PEFT (Parameter Efficient Fine-Tuning) offers many methods to reduce the number of trainable parameters requiring updating. This significantly decreases computational resources and memory storage.Partial fine-tuning involves updating only the outer layers of the neural network. It is another useful strategy when a new task is highly similar to the original task. On the other hand, additive fine-tuning adds extra parameters to the model but trains only those new components. It ensures that the original pre-trained weights are not changed.

Training Strategies

Now, let's discuss some training strategies. The first is gradual unfreezing, in which the model's layers begin to unfreeze slowly from the top. This increases nuanced learning and prevents the model from forgetting previously learned information. Different model layers might have different learning rates, as not all parts of the model learn at the same pace.

Monitoring and Evaluation

Last but not least, monitoring and evaluation. It is really important to monitor the model's progress and evaluate it using metrics. Tools and metrics like accuracy, loss curves, recall, specific benchmarks, and precision related to the specified task can provide insights regarding the model's learning efficiency.

Practical Applications of a Fine-Tuned GPT-4

Fine-tuned GPT 4.x has many practical applications. It is involved in almost every industry, including customer service, content creation, and coding.* Regarding content creation, GPT 4.x, which is fine-tuned, can generate step-wise solutions for mathematics, producing much more coherent and accurate responses. For example, if a developer is working on a mathematics learning platform, then he can fine-tune a GPT 4.x in which step-by-step solutions will be produced for a large set of math problems.

In customer service, a fine-tuned GPT 4.x model can be used to understand the context of images and text and provide more accurate responses to customers' queries. For instance, a fine-tuned GPT 4.x model can be used by a home décor company to understand customer requirements based on text and images.

Now, in coding, a fine-tuned GPT 4.x can be used to generate code snippets. It reduces development time and improves coding efficiency.

Medical Diagnosis: Fine-tuning GPT-4 on medical literature and patient records can help medical professionals make more accurate diagnoses and treatment recommendations.

Financial Analysis: Fine-tuning GPT-4 on financial data and market trends can help financial analysts make more informed investment decisions.

Legal Research: Fine-tuning GPT-4 on legal precedents and statutes can help legal professionals research and analyze legal cases more efficiently.**

Case Studies

A company specializing in home décor fine-tunes a GPT 4.x model to understand customer preferences based on text and images. It improved the customer engagement by almost 40% and sales by 20%.

Similarly, a software development firm fine-tunes a GPT 4.x to generate code snippets for specified.programming tasks. This reduces the development time by 25% and improves the code quality by 30%.These case studies prove the significance and impact of fine-tuning on business outcomes and model performance.

Overcoming challenges in Fine-Tuning

Here are some common challenges in fine-tuning LLMs and strategies for overcoming them:

Limited Task-Specific Data: Fine-tuning requires task-specific datasets, and acquiring sufficiently large and diverse datasets in certain domains can be months-long. To overcome this, consider using strategies like data augmentation, transfer learning, and balancing domain-specific data with more general language data.

Data Scarcity and Domain Mismatch: In many situations, getting an extensive dataset for a particular task or domain can be challenging. The mismatch between the data the model was pre-trained on and the task-specific data needed for fine-tuning poses a significant challenge. To overcome this, consider using strategies like data augmentation, transfer learning, and balancing domain-specific data with more general language data

Catastrophic Forgetting: Fine-tuning LLMs can lead to the model losing competence in tasks it was previously good at. To address this challenge, techniques like regularization, knowledge distillation, and task sequencing should be used to ensure that the fine-tuning process enhances the model's capabilities while retaining knowledge from pre-training.

Overfitting: Overfitting is common in fine-tuning LLMs, where the model becomes too specific to the training data. To combat this challenge, employ regularization strategies, cross-validation, early stopping, ensemble learning, and monitor model complexity.

Bias: If there is explicit or implicit bias in the training data, it can come up in the responses generated by the fine-tuned LLMs. To address this challenge, use a multi-pronged approach involving pre-processing training data to reduce biases and post-processing techniques to debias the model's outputs. Regular audits of the fine-tuned model's behavior can also help identify and rectify biased responses.

Hyperparameter Tuning: Selecting inappropriate hyperparameters can lead to slow convergence, poor generalization, or even unstable training. To overcome this challenge, automate hyperparameter tuning using techniques like grid search or Bayesian optimization and consider learning rate schedules, batch size experimentation, transfer learning, cross-validation, and more.**

Summary

This article explored the real potential of GPT 4.x, highlighting its advanced powers and the critical role of fine-tuning in making the model suitable for specific applications. Fine-tuning enhances GPT 4. x performance effectively through parameter adjustment, data curation, rigorous evaluation, and strategic training. As fine-tuning techniques evolve, they will play a much more significant role in bringing out the full potential of GPT 4.x.

Credits: Supersimple

fine tuning 2.png

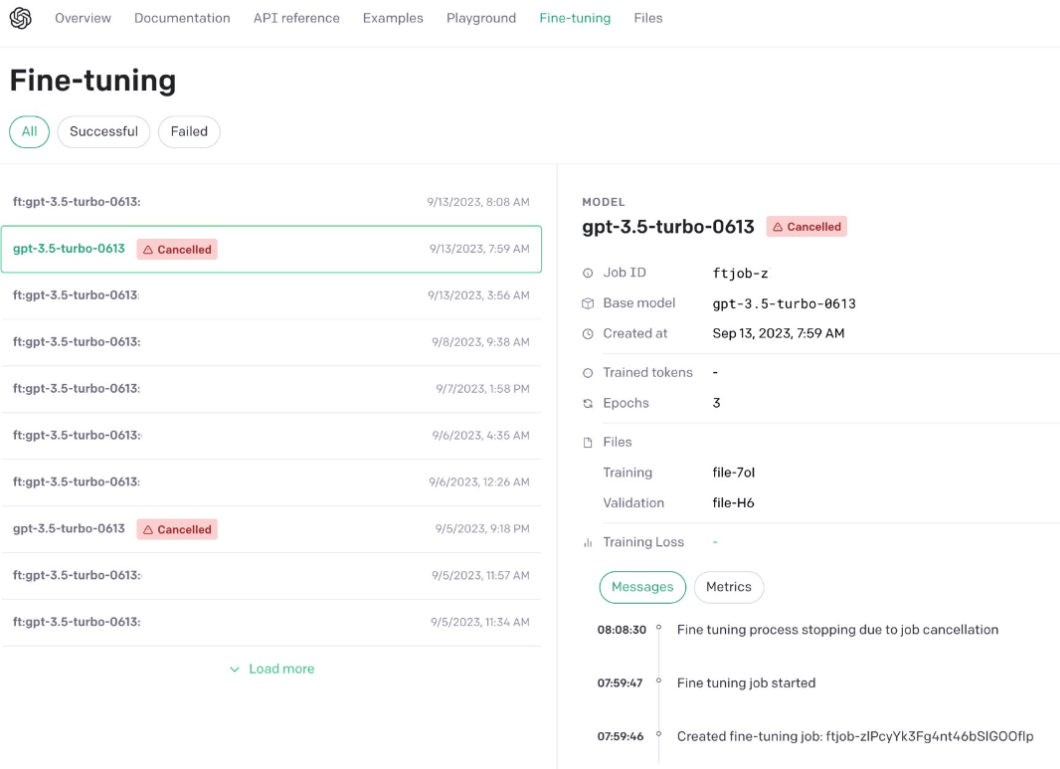

Credits: OpenAI Via Twitter

fine tuning 2.png

Credits: OpenAI Via Twitter

- Introduction

- Understanding GPT 4.x

- Understanding The Fine-Tuning Process

- How to Fine-Tune GPT4

- Techniques for Effective Fine-Tuning

- Practical Applications of a Fine-Tuned GPT-4

- Case Studies

- Overcoming challenges in Fine-Tuning

- Summary

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Introduction to Unstructured Data

Buckle up for the first tutorial in our Vector Database 101 series and untangle the intricacy around Milvus with us every week.

DiskANN and the Vamana Algorithm

Dive into DiskANN, a graph-based vector index, and Vamana, the core data structure behind DiskANN.

Integrating Vector Databases with Cloud Computing: A Strategic Solution to Modern Data Challenges

Integrating vector databases and cloud computing creates a powerful infrastructure that significantly enhances the management of large-scale, complex data in AI and machine learning.