Improving ChatGPT’s Ability to Understand Ambiguous Prompts

This article was originally published in The New Stack and is reposted here with permission.

Prompt engineering technique helps large language models (LLMs) handle pronouns and other complex coreferences in retrieval augmented generation (RAG) systems.

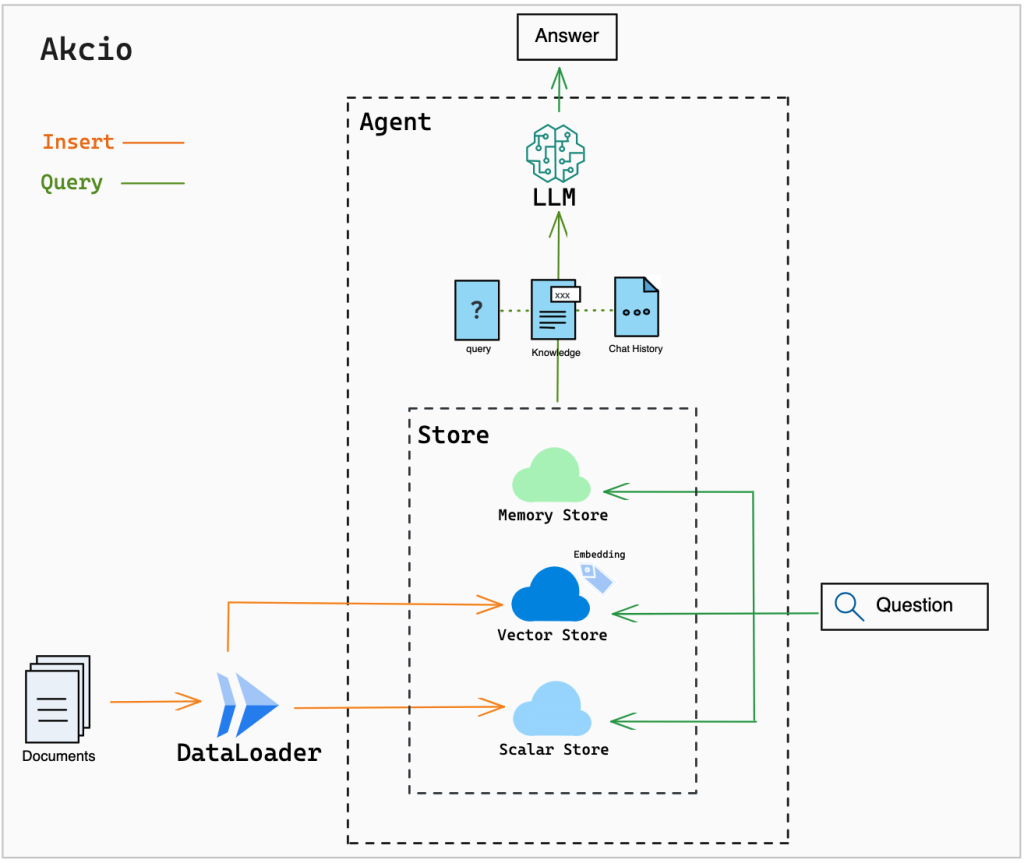

In the ever-expanding realm of AI, large language models (LLMs) like ChatGPT are driving innovative research and applications at an unprecedented speed. One significant development is the emergence of retrieval augmented generation (RAG). This technique combines the power of LLMs with a vector database acting as long-term memory to enhance the accuracy of generated responses. An exemplary manifestation of the RAG approach is the open source project Akcio, which offers a robust question-answer system.

Akcio’s architecture

Akcio’s architecture

In Akcio’s architecture, domain-specific knowledge is seamlessly integrated into a vector store, such as Milvus or Zilliz (fully managed Milvus), using a data loader. The vector store retrieves the Top-K most relevant results for the user’s query and conveys them to the LLM, providing the LLM with context about the user’s question. Subsequently, the LLM refines its responses based on the external knowledge.

For instance, if a user queries, “What are the use cases of large language models in 2023?” about an article titled “Insights Report on the Progress of Large Language Models in 2023” that was imported into Akcio, the system adeptly retrieves the three most relevant passages from the report:

1. In 2023, the LLM use cases can be divided into two categories: generation AI and decision-making. Decision-making scenarios are expected to have higher business value.

2. The generation AI scenario mainly includes dialogue interaction, code development, intelligent agents, etc.

3. NLP applications include text classification, machine translation, sentiment analysis, automatic summarization, etc.

Akcio combines these passages with the original query and forwards them to the LLM, generating a nuanced and precise response:

The application scenarios of the large model industry can be divided into generation and decision-making scenarios.

The challenge of coreference resolution in RAG

However, despite the strides made, implementing RAG systems introduces challenges, particularly in multi-turn conversations involving coreference resolution. Consider this sequence of questions:

Q1: What are the use cases of the large language model in 2023?

A1: The use cases of large language models can be divided into generation AI and decision-making.

Q2: What are their differences, and can you provide examples?

The pronoun “their” in Q2 refers to “generation AI and decision-making.” Yet, the LLM might generate irrelevant results to this question that undermine the conversation’s coherence:

1. BERT and GPT are important models in the field of NLP, but they have significant differences in their design and application scenarios.

2. The difference between large models and small models lies in their scale and complexity. Large models usually have more parameters and more complex structures, requiring more computational resources and time for training and inference. Small models, on the other hand, are relatively simple with fewer parameters and faster training and inference speeds.

3. However, there is no additional information to distinguish between these two products because they appear very similar.

Using ChatGPT for coreference resolution

Traditional methods, such as tokenization, lemmatization and keyword replacement using recurrent neural networks, are often inadequate for resolving complex references. Consequently, researchers have turned to LLMs like ChatGPT for coreference resolution tasks. This approach involves instructing ChatGPT to substitute pronouns or retain the original question based on the context provided. While this method is promising, it occasionally produces direct answers instead of following the prompt instructions, which indicates the need for a more-refined strategy.

Examples tested

We experimented with straightforward commands urging ChatGPT to replace pronouns using the following prompt format:

prompt = f'''Please generate a new question adhering to the following guidelines:

1. Complete any missing pronouns or conditions in the question based on the context.

2. Retain the original question if it's already complete.

{history}

Question: {question}'''

Example 1

Query:

history = ''' What is NLP?

NLP stands for Natural Language Processing. It is a field of computer science and artificial intelligence that focuses on the interaction between computers and humans using natural language. '''

question = 'What problem does it solve?'

ChatGPT’s response:

What problem does Natural Language Processing (NLP) solve?

In this case, ChatGPT did a great job, replacing “it” with “Natural Language Processing (NLP).”

Example 2

Query:

history = '''When was GPT4 released?

GPT4 was released in 2023'''

question = 'What progress has been made in computer vision this year?'

ChatGPT’s response:

What progress has been made in computer vision this year?

In this case, ChatGPT struggled with substituting “this year,” leading to an incomplete resolution.

Example 3

Query:

history = '''What is GPT3?

GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art language processing model developed by OpenAI.

When was GPT4 released?

GPT4 was released in 2023'''

question = 'What is the difference between them? What are the advantages of the latter?'

ChatGPT’s response:

Sorry, the information provided about GPT-4's release date is not accurate as it has not been officially announced by OpenAI. Therefore, it is not possible to answer the question about the difference between GPT-3 and GPT-4 or the advantages of the latter.

Unfortunately, ChatGPT diverges from the instructions, possibly due to its intricate decision-making process. Despite our efforts to reinforce the prompt, ChatGPT occasionally veers toward direct answers, complicating the coreference resolution task.

Few-shot prompt with Chain of Thought: a refined approach

Prompt engineering plays a pivotal role in harnessing LLMs effectively. We decided to test combining few-shot prompts with the Chain of Thought (CoT) method as a promising strategy. Few-shot prompts present LLMs with multiple reference examples, guiding them to emulate those examples in their responses. CoT enhances LLMs’ performance in complex reasoning tasks by encouraging step-by-step reasoning in their answers.

By integrating these techniques, we developed a prompt format to guide ChatGPT through coreference resolution. The revised prompt format includes an empty conversation history, basic examples, failed pronoun replacements and cases involving multiple pronouns, to offer ChatGPT more explicit instructions and reference examples. Instances where ChatGPT returns NEED COREFERENCE RESOLUTION: Yes are crucial, as they indicate that ChatGPT needs to replace pronouns or ambiguous references for a coherent response.

Here is a refined prompt format:

REWRITE_TEMP = f'''

HISTORY:

[]

NOW QUESTION: Hello, how are you?

NEED COREFERENCE RESOLUTION: No => THOUGHT: Consequently, the output question mirrors the current query. => OUTPUT QUESTION: Hello, how are you?

-------------------

HISTORY:

[Q: Is Milvus a vector database?

A: Yes, Milvus is a vector database.]

NOW QUESTION: How to use it?

NEED COREFERENCE RESOLUTION: Yes => THOUGHT: I must substitute 'it' with 'Milvus' in the current question. => OUTPUT QUESTION: How to use Milvus?

-------------------

HISTORY:

[]

NOW QUESTION: What are its features?

NEED COREFERENCE RESOLUTION: Yes => THOUGHT: Although 'it' requires substitution, there's no suitable reference in the history. Thus, the output question remains unchanged. => OUTPUT QUESTION: What are its features?

-------------------

HISTORY:

[Q: What is PyTorch?

A: PyTorch is an open-source machine learning library for Python. It provides a flexible and efficient framework for building and training deep neural networks.

Q: What is Tensorflow?

A: TensorFlow is an open-source machine learning framework. It provides a comprehensive set of tools, libraries, and resources for building and deploying machine learning models.]

NOW QUESTION: What is the difference between them?

NEED COREFERENCE RESOLUTION: Yes => THOUGHT: 'Them' should be replaced with 'PyTorch and Tensorflow' in the current question. => OUTPUT QUESTION: What is the difference between PyTorch and Tensorflow?

-------------------

HISTORY:

[{history}]

NOW QUESTION: {question}

NEED COREFERENCE RESOLUTION: '''

Examples tested and refined responses

Here are some results from our experiments with refined prompts:

Example 1

Query:

history = '''When was GPT-4 released?

GPT-4 was released in 2023'''

question = 'What progress has been made in computer vision this year?'

ChatGPT’s refined response:

Yes => THOUGHT: I need to replace "this year" with "2023" in the now question. => OUTPUT QUESTION: What progress has been made in computer vision in 2023?

Example 2:

Query:

history = '''What is GPT-3?

GPT-3 (Generative Pre-trained Transformer 3) is a state-of-the-art language processing model developed by OpenAI.

When was GPT-4 released?

GPT-4 was released in 2023'''

question = 'What is the difference between the two? What are the advantages of the latter?'

ChatGPT’s refined response:

Yes => THOUGHT: I need to replace 'the two' with 'GPT-3 and GPT-4' and 'the latter' with 'GPT-4' in the now question. => OUTPUT QUESTION: What is the difference between GPT-3 and GPT-4? What are the advantages of GPT-4?

The refined prompt format significantly enhances ChatGPT’s ability to handle intricate coreference resolution tasks. Questions involving multiple entities, which previously posed challenges, are now addressed effectively. ChatGPT adeptly substitutes pronouns and ambiguous references, delivering accurate and contextually relevant responses.

Conclusion

Prompt engineering plays a pivotal role in resolving coreference problems in RAG systems using LLMs. By integrating innovative techniques such as few-shot prompts and CoT methods, we’ve significantly improved handling complex references in RAG systems, enabling LLMs like ChatGPT to substitute pronouns and ambiguous references accurately and resulting in coherent responses.

- The challenge of coreference resolution in RAG

- Using ChatGPT for coreference resolution

- Few-shot prompt with Chain of Thought: a refined approach

- Conclusion

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Smarter Autoscaling in Zilliz Cloud: Always Optimized for Every Workload

With the latest upgrade, Zilliz Cloud introduces smarter autoscaling—a fully automated, more streamlined, elastic resource management system.

The Real Bottlenecks in Autonomous Driving — And How AI Infrastructure Can Solve Them

Autonomous driving is data-bound. Vector databases unlock deep insights from massive AV data, slashing costs and accelerating edge-case discovery.

Selecting the Right ETL Tools for Unstructured Data to Prepare for AI

Learn the right ETL tools for unstructured data to power AI. Explore key challenges, tool comparisons, and integrations with Milvus for vector search.