Dense Vectors in AI: Maximizing Data Potential in Machine Learning

This article zooms in on dense vectors, uncovering their advantages compared to sparse vectors and how they are widely used in ML algorithms across various domains.

Introduction to Dense Vectors

Machine learning requires data in a format suitable for algorithmic processing, which usually means transforming raw inputs into numerical vectors. These vectors, often high-dimensional arrays, are therefore a fundamental building block of ML algorithms. Two vector representations are common: sparse and dense. Sparse vectors are predominantly filled with zeros, with only a few non-zero entries marking the features that are present, while dense vectors are compact and offer a rich representation in which most or all elements are non-zero.

Dense vectors are the foundation for encoding complex data into high-dimensional numerical representations. This article zooms in on dense vectors, uncovering their advantages compared to sparse vectors and how they are widely used in ML algorithms across various domains.

Understanding Dense Vectors

Dense vectors in machine learning are arrays in which each element holds a meaningful value. For instance, the word "king" might be represented by the 3-dimensional dense vector [0.2, -0.1, 0.8] (real embeddings typically have hundreds of dimensions). Each element in this array encodes semantic and contextual features learned from data. Unlike sparse vectors, where most elements are zeros, every element in a dense vector contributes to the representation.
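To make the contrast concrete, here is a minimal sketch comparing a one-hot sparse vector with the dense example from the paragraph above. The five-word vocabulary and the helper names `one_hot` and `nonzero_fraction` are assumptions made for illustration, and the dense values are the toy numbers from the text rather than a trained embedding.

```python
# Toy vocabulary, assumed for illustration only.
vocabulary = ["apple", "king", "man", "queen", "woman"]

def one_hot(word):
    """Sparse representation: one slot per vocabulary word, all zeros but one."""
    return [1.0 if w == word else 0.0 for w in vocabulary]

# Dense representation: a short vector in which every element carries information.
# The values for "king" are the example from the text, not a trained embedding.
dense_embeddings = {"king": [0.2, -0.1, 0.8]}

def nonzero_fraction(vector):
    """Share of elements that are non-zero."""
    return sum(1 for x in vector if x != 0.0) / len(vector)

sparse_king = one_hot("king")
dense_king = dense_embeddings["king"]

print(sparse_king)                    # [0.0, 1.0, 0.0, 0.0, 0.0]
print(nonzero_fraction(sparse_king))  # 0.2
print(nonzero_fraction(dense_king))   # 1.0
```

With a realistic vocabulary of tens of thousands of words, the one-hot vector grows with the vocabulary while the dense vector keeps a fixed, much smaller size.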

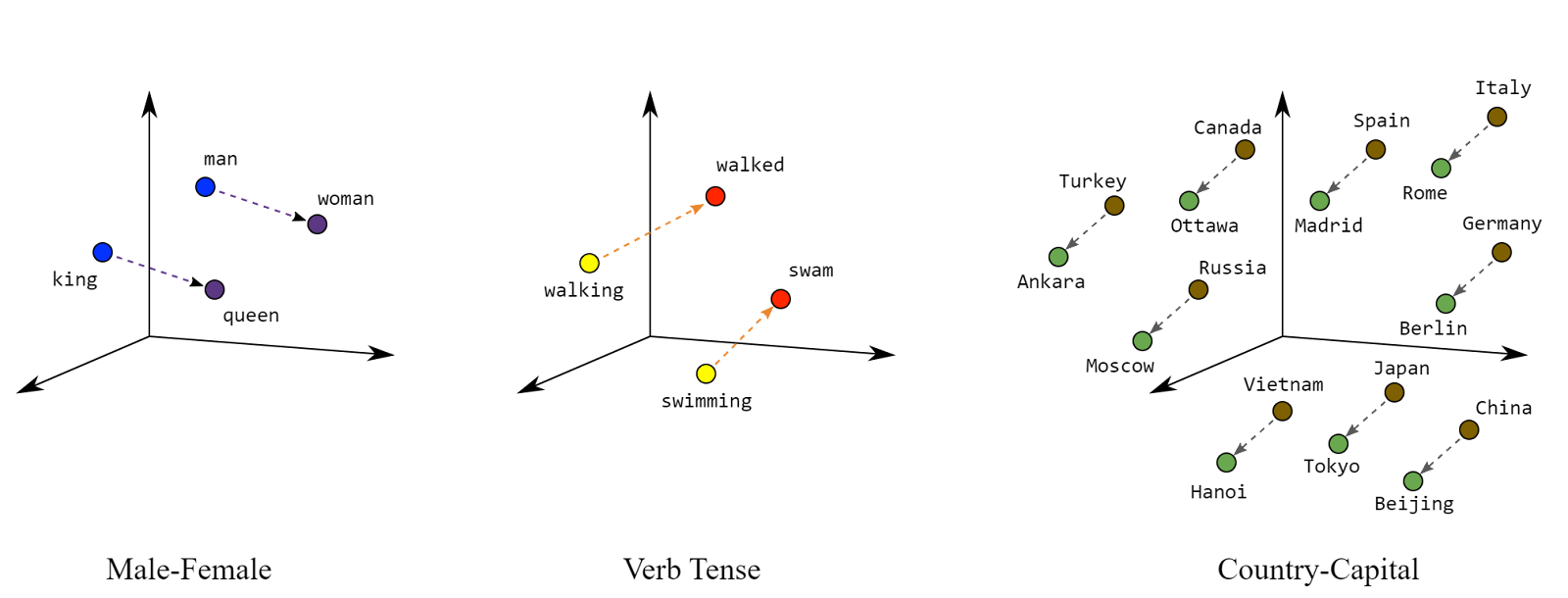

Mathematically, dense vectors occupy high-dimensional spaces, and operations like vector addition or dot products on these arrays can capture relationships between data. Within this expansive space, dense vectors enable precise measurements of similarity and dissimilarity, thus facilitating tasks like clustering, classification, and regression with heightened accuracy. For example, the vector operation on words "king - man + woman = queen" can be possible thanks to dense vectors.

Figure: Dense vectors can capture various semantic relationships. Source: Google Developers.
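The "king - man + woman" arithmetic can be sketched with a handful of hand-picked vectors. Everything in this example is an assumption for illustration: the 3-dimensional values are invented (real embeddings are learned and much larger), and `analogy` is a hypothetical helper, not part of any library. As is conventional for analogy queries, the three input words are excluded from the candidates.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration.
# The dimensions loosely mean: [royalty, masculine, feminine].
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.1, 0.1],
}

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def analogy(a, b, c):
    """Return the word whose vector is closest to a - b + c."""
    target = [x - y + z for x, y, z in
              zip(embeddings[a], embeddings[b], embeddings[c])]
    # Exclude the query words themselves from the candidates.
    candidates = (w for w in embeddings if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine_similarity(target, embeddings[w]))

print(analogy("king", "man", "woman"))  # queen
```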

The Role of Dense Vectors in AI

Dense vectors turn complex data into rich, detailed formats that AI models can easily work with. Whether a model is making sense of intricate patterns in images or predicting the next word in a chatbot, dense vectors give it a compact representation of the input to reason over. In computer vision, Vision Transformers (ViT) encode images as dense vectors, and multimodal models such as CLIP map images and text into the same vector space, so that a matching image and caption end up with highly similar vectors.

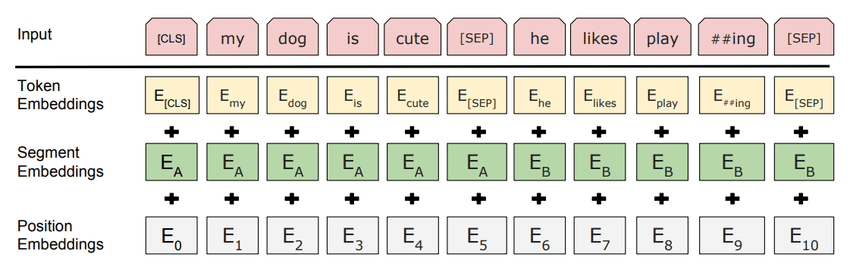

In NLP, consider Word2Vec: words are translated into dense vectors, so "king" and "queen" might be closely positioned in vector space, accurately reflecting their semantic relationship. BERT takes this further by generating context-aware embeddings. For example, "bank" in "river bank" and "bank account" would have different vectors that capture the word's contextual meaning and become crucial for tasks like sentiment analysis or language translation.

Figure: BERT Embeddings utilizes dense vectors to transform text input. Source: BERT paper.

Applications and Use Cases

Dense vectors are transforming AI applications across the board. In text classification, models such as Google's T5 represent their inputs as dense vectors, which supports more accurate language understanding. In text generation, tools like OpenAI's GPT-4 process dense vectors through a neural network to understand and create nuanced text. In recommendation systems, users and items are represented as dense vectors in the same vector space, which enables efficient similarity calculations for recommending products based on user preferences and item characteristics. For instance, Spotify's recommendation system leverages dense vectors to personalize music playlists.
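The recommendation case can be sketched in a few lines: when users and items live in the same space, scoring is just a dot product. The user name, item names, and vector values below are hypothetical and chosen by hand; in a real system they would be learned from interaction data, and `recommend` is an illustrative helper rather than any product's API.

```python
# Hypothetical 3-dimensional embeddings in a shared user/item space.
# The dimensions loosely capture taste for [rock, jazz, electronic].
user_vectors = {"alice": [0.9, 0.1, 0.3]}
item_vectors = {
    "rock_anthem":   [0.8, 0.0, 0.2],
    "jazz_standard": [0.1, 0.9, 0.0],
    "synth_track":   [0.2, 0.1, 0.9],
}

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def recommend(user, k=2):
    """Rank items by dot-product score against the user's vector."""
    scores = {item: dot(user_vectors[user], vec)
              for item, vec in item_vectors.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]

print(recommend("alice"))  # ['rock_anthem', 'synth_track']
```

At production scale the exhaustive scoring loop would typically be replaced by an approximate nearest neighbor index, which is the role a vector database plays.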

Optimizing with Dense Vectors

Optimizing AI models with dense vector embeddings involves several best practices. Dimensionality reduction is key: methods like PCA reduce vector size while retaining most of the essential information, which improves computational efficiency. Vector normalization scales vectors to a consistent length (typically unit length), which is especially important for models that depend on distance calculations.
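As a rough sketch of these two steps, the example below reduces random stand-in embeddings with PCA (computed through NumPy's SVD) and then L2-normalizes the result. The data, the sizes (100 vectors, 8 dimensions reduced to 3), and the helper names `pca_reduce` and `l2_normalize` are assumptions for illustration; in practice a library implementation such as scikit-learn's `PCA` is a common choice.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
# Stand-in for real embeddings: 100 random 8-dimensional vectors.
embeddings = rng.normal(size=(100, 8))

def pca_reduce(vectors, n_components):
    """Project rows onto the top principal components, computed via SVD."""
    centered = vectors - vectors.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

def l2_normalize(vectors):
    """Scale each row to unit length, guarding against zero-length rows."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.clip(norms, 1e-12, None)

reduced = pca_reduce(embeddings, n_components=3)
unit = l2_normalize(reduced)

print(reduced.shape)                                    # (100, 3)
print(np.allclose(np.linalg.norm(unit, axis=1), 1.0))   # True
```

Once vectors have unit length, the dot product equals cosine similarity, which is one reason normalization matters for distance-based models.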

Fine-tuning embeddings tailors them to specific tasks and is particularly effective in domain-specific contexts. Transfer learning can be applied here, where you can adapt pre-trained embeddings to new data and enhance model accuracy more affordably and quickly.

However, computational cost is a significant challenge, especially with high-dimensional vectors and large datasets. Efficient algorithms and vector databases can mitigate this issue. While dense vectors handle data sparsity better than sparse vectors do, it is still vital to keep the model focused on relevant features so that it does not overfit. Techniques such as dropout and regularization can help here.

Future Trends and Innovations

Emerging AI and machine learning trends indicate significant advancements in using dense vectors. We're witnessing innovative embedding techniques that enhance vectors' representational power. For instance, contextual and dynamic embeddings, evolving from static models like Word2Vec, can more accurately capture the nuances of language.

Transformer models such as BERT and GPT have changed how dense vectors capture context and semantics.

In the future, we might see more sophisticated embedding techniques that capture linguistic nuances and integrate multimodal data, combining text, images, and audio. This could lead to a deeper, more holistic understanding of complex datasets. Moreover, future dense vector models might become better at transferring knowledge across different domains and languages. This would be a significant step in creating more versatile AI systems that require less domain-specific training data.

In addition, advances in reducing the dimensionality of dense vectors without losing significant information could make AI models more efficient and enable their deployment in resource-constrained environments.

Dense Vectors in Summary

Dense vectors have emerged as a cornerstone in AI and significantly refined the effectiveness of machine learning solutions. These vectors capture intricate patterns and nuances within data, offering a more comprehensive understanding across various domains, from natural language processing to healthcare analytics. Their role in driving innovation and facilitating more nuanced AI algorithms cannot be overstated.

For readers looking to improve their AI projects, integrating dense vector embeddings is a practical place to start. By leveraging this technology, you can extract deeper insights from raw, messy data and improve the performance of your models.
