Unlocking Rich Visual Insights with RGB-X Models

Machine learning is becoming increasingly multimodal, with many computer vision models now extending beyond 2D images into areas like 3D. One field that has been advancing quickly, though more quietly, is RGB-X data, where additional data such as infrared, depth, or surface normals is incorporated alongside traditional RGB (Red, Green, Blue) information.

At a recent NYC Unstructured Data Meetup hosted by Zilliz, Daniel Gural, a Machine Learning and Developer Relations expert at Voxel51, delivered an insightful talk on RGB-X models, highlighting the latest advancements and best practices for working with these complex data formats. His presentation also explored some of the leading models in this growing area of Visual AI and offered valuable guidance on handling the richer, more detailed data RGB-X models bring to image analysis. In this blog, we’ll recap the key takeaways from Gural's talk. If you’re interested in more details, watch the full presentation on YouTube.

Understanding RGB-X Models

RGB-X models are advanced machine learning models in computer vision that extend traditional RGB (Red, Green, Blue) data by incorporating additional channels, such as depth, infrared, or surface normals. The X in RGB-X can represent various types of data, such as:

Depth information: Measures the distance from the camera to objects in a scene, providing spatial context. In a self-driving car scenario, for instance, depth data can help the vehicle determine that a pedestrian is 5 meters away, aiding in safe navigation.

Infrared data: Captures heat signatures, making it useful for night vision and thermal imaging. In wildlife monitoring, infrared data allows researchers to track animal movements at night without disturbing them.

Normal maps: Show the orientation of surfaces, essential for realistic 3D rendering and lighting calculations. In virtual reality gaming, normal maps enhance the realism of textures and lighting, creating more immersive environments.

Thermal imaging: Focuses on temperature variations, similar to infrared but specifically measuring heat distribution. In building inspections, thermal imaging can identify areas of heat loss or electrical issues, helping detect potential problems early.
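In array terms, an RGB-X sample is an ordinary image with extra channels attached. The sketch below is a minimal illustration, assuming NumPy and an extra channel that is already aligned pixel-for-pixel with the RGB frame. The function name `stack_rgbx` and the synthetic inputs are hypothetical and do not come from the talk.

```python
import numpy as np


def stack_rgbx(rgb, extra):
    """Append one or more aligned extra channels (the "X") to an RGB image.

    rgb:   H x W x 3 array
    extra: H x W or H x W x C array with the same height and width
    """
    rgb = np.asarray(rgb, dtype=np.float32)
    extra = np.asarray(extra, dtype=np.float32)
    if extra.ndim == 2:
        extra = extra[..., np.newaxis]  # H x W -> H x W x 1
    if rgb.shape[:2] != extra.shape[:2]:
        raise ValueError("RGB and extra channels must share height and width")
    return np.concatenate([rgb, extra], axis=-1)


# Synthetic 4 x 6 frame: random colors plus a depth map in meters
rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(4, 6, 3))
depth_m = np.full((4, 6), 5.0)  # every pixel 5 m away, as in the pedestrian example

rgbd = stack_rgbx(rgb, depth_m)
print(rgbd.shape)  # (4, 6, 4)
```

In practice the channels often come from different sensors, so aligning them to a common resolution and viewpoint usually has to happen before a stack like this makes sense.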

These additional channels provide extra dimensions of information, allowing for a more comprehensive analysis and understanding of visual scenes. To understand how RGB-X models integrate this data in practice, consider the following example of a person standing in front of a building.

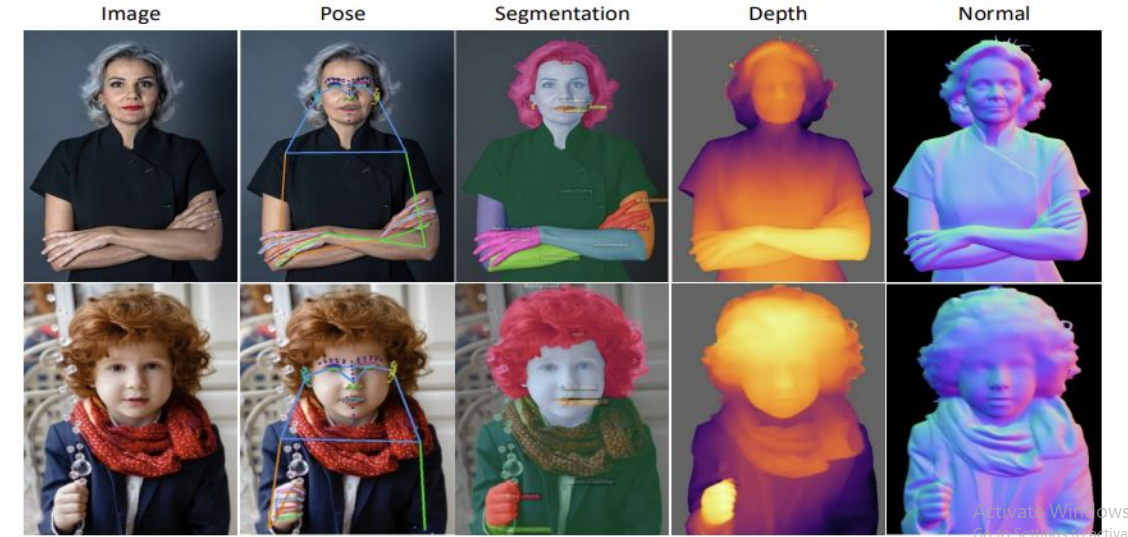

Figure: Different representations of a person standing in front of a building

Above are four different representations of a person standing before a building. The POSE panel shows the original image with an overlaid skeletal pose estimation. The SEG panel displays segmentation, with different body parts and clothing items color-coded. The DEPTH panel uses a color gradient to represent depth, with warmer colors indicating closer objects. The NORMAL panel shows surface normals, using color to represent the orientation of surfaces in 3D space.

This example shows that RGB-X models can simultaneously process multiple aspects of a scene, including pose estimation, segmentation, depth perception, and surface normal calculation. This multi-faceted approach enables a more holistic understanding of the visual information. Let's break down these components:

Pose Estimation: This process identifies the position and orientation of human body parts in an image. It uses keypoint detection to locate joints and create a skeletal representation of the person. In a fitness application, pose estimation could help users correct their form during exercises by comparing their posture to an ideal model.

Segmentation: This process divides an image into multiple segments or objects. In RGB-X models, it can differentiate between different body parts, clothing items, and background elements. For instance, in an augmented reality fashion app, segmentation could allow users to virtually try on different outfits by accurately overlaying clothing items on their bodies.

Depth Perception: Using depth information, the model can understand the 3D structure of a scene. In the image, warmer colors (reds and yellows) indicate objects closer to the camera, while cooler colors (blues and purples) represent more distant elements. This could be crucial in a robotics application, helping a robot navigate around obstacles in a warehouse.

Surface Normal Calculation: This technique computes the orientation of surfaces in 3D space. The color coding in the NORMAL panel represents different surface orientations, providing crucial information for understanding the geometry of objects in the scene. In 3D modeling software, surface normal information could help artists create more realistic textures and lighting effects.
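Depth and surface normals are closely related: a normal describes how the depth surface tilts at each pixel. The sketch below is one simple way to approximate normals from a depth map and to color-code them, assuming NumPy, unit pixel spacing, and no camera intrinsics. The function names are hypothetical, sign conventions for normals vary between tools, and this is not how the models in the talk produce their NORMAL panel (they predict normals from the RGB image).

```python
import numpy as np


def normals_from_depth(depth):
    """Approximate per-pixel unit normals from an H x W depth map."""
    depth = np.asarray(depth, dtype=np.float64)
    # np.gradient returns the derivative along rows (y) first, then columns (x)
    dz_dy, dz_dx = np.gradient(depth)
    normals = np.dstack([-dz_dx, -dz_dy, np.ones_like(depth)])
    normals /= np.linalg.norm(normals, axis=2, keepdims=True)
    return normals


def normals_to_rgb(normals):
    """Map normal components from [-1, 1] to 8-bit colors in [0, 255]."""
    return np.round((normals + 1.0) * 127.5).astype(np.uint8)


flat = np.full((4, 4), 2.0)                        # wall facing the camera
ramp = np.tile(np.arange(4, dtype=float), (4, 1))  # depth increases left to right

flat_n = normals_from_depth(flat)
ramp_n = normals_from_depth(ramp)
flat_rgb = normals_to_rgb(flat_n)
```

A flat surface facing the camera yields the same normal at every pixel, which is why large walls appear as a single uniform color in normal maps, while tilted surfaces shift toward other hues.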

Now that we've explored the core components of RGB-X models, let's learn how these models can be applied in various industries to tackle complex visual challenges.

Applications of RGB-X Models

With the ability to process multi-faceted visual information, RGB-X models have found applications across various industries and use cases, including Object Tracking Across Frames and Surveying Difficult Terrain.

1. Object Tracking across Frames

RGB-X models are well suited to object tracking, which extends beyond traditional object detection by following objects across multiple frames of a video or image sequence. These models use RGB data alongside additional modalities for improved performance.

Let’s take a look at the RGB-X tracking system structure shared by Gural:

Figure: RGB-X tracking system structure

The system begins with inputs from various sources - RGB cameras and sensors capturing depth, thermal, or other data types. These inputs feed into a central RGB Tracker.

Two key processing streams surround this core: Modality-Agnostic and Modality-Aware components. Modality-Agnostic parts handle features common to all input types, while Modality-Aware sections specialize in specific input modalities like depth or thermal data.

This system uses Shallow Embedding techniques, including Memory (MeME) and Embedding (Emb.) modules, to create initial input representations. Deep Prompting follows, using iterative Memory and Prompt modules to refine and contextualize the information.

These components work together to form a Generalist RGB-X Visual Object Tracking (VOT) system. This system aligns and processes information from sources like depth, event data, and thermal imaging, enabling tracking across different modalities.

This approach allows RGB-X models to track objects effectively in various conditions. Its applications include:

Surveillance systems: Tracking individuals or objects across multiple camera feeds, including switches between sensor types.

Autonomous vehicles: Tracking vehicles, pedestrians, and obstacles in real-time, using visual, depth, and potentially thermal data to maintain tracking in diverse conditions.

Robotics: Helping robots track and interact with objects in dynamic environments, using multiple data streams to maintain object persistence when visual data alone is insufficient.

Sports analysis: Tracking players and equipment for performance analysis, potentially combining visual tracking with other data types like infrared for physiological monitoring.

The multi-modal nature of RGB-X tracking allows for consistent performance in challenging conditions such as varying lighting, partial occlusions, or complex environments. When an object becomes visually obscured, depth or thermal information can help maintain tracking accuracy.
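To make the fallback idea concrete, here is a toy illustration of confidence-weighted fusion. It is not the prompting-based architecture described above; it only shows how a second modality can take over when RGB confidence drops. The per-modality positions and confidence scores are assumed to come from hypothetical upstream trackers.

```python
import numpy as np


def fuse_positions(estimates):
    """Confidence-weighted average of per-modality position estimates.

    estimates maps a modality name to ((x, y), confidence), with confidence >= 0.
    """
    positions = np.array([pos for pos, _ in estimates.values()], dtype=float)
    weights = np.array([conf for _, conf in estimates.values()], dtype=float)
    total = weights.sum()
    if total <= 0:
        raise ValueError("at least one modality must have positive confidence")
    return (positions * weights[:, np.newaxis]).sum(axis=0) / total


# Clear view: RGB dominates the fused estimate
clear_view = fuse_positions(
    {"rgb": ((100.0, 50.0), 0.9), "thermal": ((104.0, 54.0), 0.1)}
)

# Occlusion: RGB confidence is zero, so the thermal estimate is used as-is
occluded = fuse_positions(
    {"rgb": ((300.0, 10.0), 0.0), "thermal": ((104.0, 54.0), 0.8)}
)
```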

2. Surveying Difficult Terrain

RGB-X models are also useful in surveying and mapping applications, especially in challenging environments. Some key uses include:

Drone-based mapping: Equipping drones with RGB-X capable sensors makes it possible to create detailed 3D maps of areas that are difficult or dangerous to access on foot. For example, after a natural disaster, drones with RGB-X capabilities could quickly map damaged areas, helping emergency responders plan their operations more effectively.

On-board processing: Advanced RGB-X models can perform real-time processing directly on the drone, allowing immediate analysis and decision-making. In a search and rescue scenario, a drone could autonomously identify and report a missing person's location without transmitting all its data back to a base station.

Geological surveys: RGB-X data's depth and normal information are useful for understanding terrain features and geological formations. In mining exploration, RGB-X models could help identify promising areas for mineral deposits by analyzing the surface features and composition of large land areas.

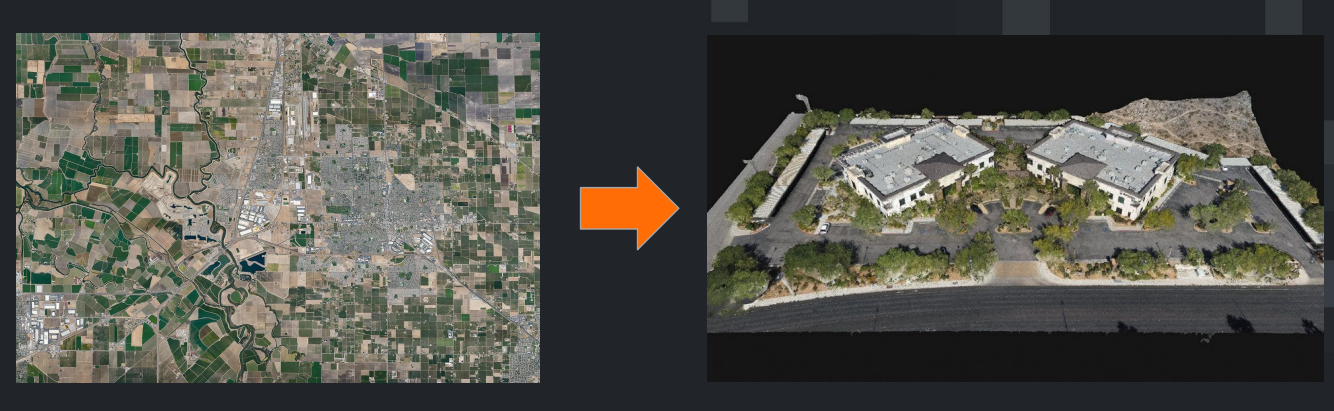

Figure: How RGB-X data can transform simple aerial imagery into 3D models

The above image illustrates how RGB-X data can transform simple aerial imagery into detailed 3D models, enabling precise analysis and planning in various fields such as urban development, agriculture, and environmental monitoring. For instance, urban planners could use such models to assess the impact of new construction projects on sunlight exposure for existing buildings.
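One basic building block for turning imagery plus depth into 3D geometry is back-projection with the pinhole camera model. The sketch below assumes NumPy, a depth map in meters, and known camera intrinsics; the values of `fx`, `fy`, `cx`, and `cy` are made up for illustration, and a real mapping pipeline would also need the camera pose for each frame.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an H x W depth map (meters) into an N x 3 point cloud.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with non-positive depth are treated as missing and dropped.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]


depth = np.full((3, 3), 10.0)  # camera 10 m from a flat surface
depth[0, 0] = 0.0              # simulate a missing reading
points = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
print(points.shape)  # (8, 3)
```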

Advancements in RGB-X Model Development

Recent work on RGB-X models has led to significant improvements in performance and capabilities.

1. Sapiens and Beyond

The Sapiens model family represents a significant step forward in human-centric vision. Rather than designing a separate architecture for each task, Sapiens pretrains a vision transformer (ViT) backbone on a large dataset of human images and fine-tunes it for four tasks, each focusing on a different aspect of visual understanding:

Pose estimation: This model identifies key points on the human body, such as joints and facial landmarks, to determine the pose of individuals in the scene. In a real-world application, this model could be used in a smart gym to provide real-time feedback on exercise form.

Segmentation: The segmentation model produces pixel-wise classifications that divide the image into distinct regions, such as body parts and clothing. The same pixel-wise approach is what lets autonomous vehicles identify and separate the elements of a street scene, such as pedestrians, vehicles, and road signs.

Depth perception: This model estimates the distance of each pixel from the camera, creating a depth map of the scene from a single image (monocular depth estimation) rather than from a stereo pair. In a robotics application, this depth information could help a robot accurately grasp objects of various sizes and shapes.

Surface normal calculation: This model estimates the surface normal vector for each pixel, providing crucial information about the geometry of objects in the scene. This could be valuable in augmented reality applications, allowing virtual objects to interact realistically with real-world surfaces. Let’s look at Sapiens in action.

Figure: RGB-X model outputs showing pose, segmentation, depth, and normal maps for two subjects

The above image shows the capabilities of advanced RGB-X models like Sapiens. It displays two sets of images, each with five panels: the original image, pose estimation, segmentation, depth map, and surface normal map. The top row shows an adult, while the bottom shows a child. This demonstrates the model's ability to accurately process diverse subjects.
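Many pose estimation models output one heatmap per keypoint, and the skeleton shown in a pose panel is obtained by locating the peak of each heatmap. The sketch below shows that generic decoding step, assuming NumPy and a `K x H x W` heatmap stack. It is not taken from the Sapiens code base, and the function name and shapes are assumptions.

```python
import numpy as np


def decode_keypoints(heatmaps):
    """Return one (x, y, score) row per keypoint from a K x H x W heatmap stack."""
    heatmaps = np.asarray(heatmaps, dtype=np.float64)
    k, h, w = heatmaps.shape
    flat = heatmaps.reshape(k, -1)
    idx = flat.argmax(axis=1)                # peak location in flattened form
    ys, xs = np.unravel_index(idx, (h, w))   # back to row and column indices
    scores = flat[np.arange(k), idx]
    return np.stack([xs, ys, scores], axis=1)


# Two synthetic 5 x 5 heatmaps with one peak each
heatmaps = np.zeros((2, 5, 5))
heatmaps[0, 1, 3] = 0.9  # keypoint 0 at row 1, column 3
heatmaps[1, 4, 0] = 0.7  # keypoint 1 at row 4, column 0

keypoints = decode_keypoints(heatmaps)
```

The score column can be thresholded to hide keypoints the model is unsure about, for example joints that are occluded or outside the frame.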

2. Fine-Grain Searches

With the increased information available in RGB-X data, it's possible to perform more detailed and specific searches within visual databases. For example:

Finding drone images captured at specific angles: By utilizing the normal map information, the system can identify images where surfaces are oriented at particular angles relative to the camera. This could be useful in architectural surveys, allowing analysts to find images of buildings from specific viewpoints.

Identifying objects based on their depth in a scene: The depth channel allows for queries that specify the distance of objects from the camera, enabling more precise spatial searches. In a retail inventory management system, this could help locate products placed at specific depths on shelves.

Searching for thermal anomalies in infrared data: The thermal channel can identify areas of unusual heat signatures, which is useful in applications like industrial inspection or wildlife monitoring. For instance, in a large solar farm, this capability could quickly identify overheating panels that may require maintenance.
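As a rough illustration of the thermal anomaly search above, the sketch below filters a small collection by the share of hot pixels in each thermal map. It assumes NumPy and thermal maps already converted to degrees Celsius; the record layout, ids, and thresholds are hypothetical.

```python
import numpy as np


def hot_fraction(thermal, threshold_c):
    """Fraction of pixels in a thermal map (degrees Celsius) above a threshold."""
    thermal = np.asarray(thermal, dtype=np.float64)
    return float((thermal > threshold_c).mean())


def find_thermal_anomalies(records, threshold_c=70.0, min_fraction=0.01):
    """Return the ids of records whose thermal map has enough hot pixels."""
    return [
        rec["id"]
        for rec in records
        if hot_fraction(rec["thermal"], threshold_c) >= min_fraction
    ]


# Two synthetic 10 x 10 "solar panel" captures at 35 C, one with a hot spot
normal_panel = np.full((10, 10), 35.0)
faulty_panel = normal_panel.copy()
faulty_panel[2:4, 2:4] = 85.0  # 4 hot pixels out of 100

records = [
    {"id": "panel-001", "thermal": normal_panel},
    {"id": "panel-002", "thermal": faulty_panel},
]
print(find_thermal_anomalies(records))  # ['panel-002']
```

The same pattern works for depth-based queries by swapping the thermal map for a depth map and the threshold for a distance range.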

3. Embodied AI and Self-Driving Cars

RGB-X models are playing a crucial role in the development of embodied AI, particularly in self-driving cars. Some applications include:

Advanced navigation: Using depth and normal information to better understand road conditions and obstacles. This lets the vehicle create a detailed 3D map of its surroundings in real time. For example, the car could detect and navigate around a pothole by understanding its depth and shape.

Object identification: Combining RGB and infrared data for improved object detection in various lighting conditions. This is particularly useful for identifying pedestrians, animals, or obstacles in low-light or adverse weather conditions. For instance, on a foggy night, the system could detect pedestrians crossing the street even when they're not clearly visible in the RGB image alone.

Passenger monitoring: Using depth and thermal data to monitor passenger health and safety. This approach could detect signs of distress or unusual behavior within the vehicle. For example, the system might detect if a passenger has fallen asleep or is experiencing a medical emergency, prompting the vehicle to take appropriate action.

Applications of RGB-X models in self-driving cars go beyond simple obstacle avoidance. These models enable more sophisticated interactions, such as allowing users to point at objects outside the car and receive information about them or monitoring the health and safety of passengers inside the vehicle.

Challenges and Considerations of RGB-X Models

While these advancements open up exciting new possibilities, it’s important to recognize the challenges and considerations that come with deploying RGB-X models in real-world settings, including:

Data complexity: Managing and processing multi-channel data requires more computational resources and storage capacity than RGB alone. This increases the demands on hardware and necessitates efficient data management strategies.

Model interpretability: As models become more complex, ensuring their decisions are interpretable and explainable becomes crucial. Developers might need to implement techniques like attention visualization or feature importance analysis to make RGB-X model decisions more transparent.

Ethics and privacy: The enhanced capabilities of RGB-X models raise new questions about data privacy and the ethical use of AI. For example, the ability to create detailed 3D avatars from short video clips could have implications for personal privacy and consent. Organizations implementing RGB-X technologies might need to develop robust data protection policies and obtain clear consent from individuals whose data is being captured and processed.
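To put the data complexity point in numbers, the short calculation below compares the uncompressed size of a single frame with and without a depth channel. The 1920 x 1080 resolution and the 16-bit depth format are assumptions chosen for illustration; real sensors and file formats vary.

```python
def frame_bytes(width, height, bytes_per_channel):
    """Uncompressed size of one frame, given the byte width of each channel."""
    return width * height * sum(bytes_per_channel)


rgb_size = frame_bytes(1920, 1080, [1, 1, 1])      # three 8-bit color channels
rgbd_size = frame_bytes(1920, 1080, [1, 1, 1, 2])  # plus one 16-bit depth channel

print(rgb_size)              # 6220800
print(rgbd_size)             # 10368000
print(rgbd_size / rgb_size)  # about 1.67
```

Under these assumptions, one extra depth channel raises the raw storage per frame by roughly two thirds, before any thermal or normal channels are added.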

Integrating RGB-X Models with Vector Databases

As Gural emphasizes in his talk, RGB-X models go beyond simple inference. He explains, "With these models, you can not only make predictions about surface normals, depth, thermal data, or any other channels you're interested in but also create embedding for them." This insight underscores the dual functionality of RGB-X models: they can predict multi-channel outputs and generate powerful vector embeddings that capture complex visual features, providing richer representations for downstream tasks like image retrieval or classification.

As RGB-X models generate high-dimensional embeddings, efficient storage, indexing, and retrieval of those embeddings becomes critical. Vector databases like Milvus and Zilliz Cloud are purpose-built to manage this complex, multi-dimensional data, making them ideal solutions for handling and optimizing the rich embeddings produced by RGB-X models.

Here's how vector databases enhance RGB-X model applications:

Efficient Similarity Search: Vector databases find similar items in high-dimensional embedding spaces. For RGB-X data, this means quickly locating visually or structurally similar scenes based on complex criteria. In a large-scale surveillance system, operators could use Milvus to retrieve footage with similar embedding patterns in depth, thermal, or combined RGB-X space.

Scalability: As RGB-X applications grow, so does the volume of vector embeddings. Vector databases like Zilliz Cloud can handle massive datasets of these embeddings, making them well suited to applications like city-wide sensor networks or extensive satellite imagery analysis.

Flexible Schema: RGB-X outputs often produce diverse types of vector embeddings - from visual features to depth and thermal representations. Milvus accommodates this variety, allowing unified storage and querying of different RGB-X aspect embeddings.

RAG and GenAI: By storing RGB-X vector embeddings in Milvus, you can build more effective retrieval-augmented generation (RAG) pipelines for your GenAI applications. This approach can significantly improve the performance of AI models that need to reason about complex visual scenes using multi-modal embeddings.

Multimodal Embedding Support: Milvus is well-suited for storing and comparing embeddings from different modalities. This capability is crucial for RGB-X applications that analyze relationships between visual, depth, and thermal embedding spaces.

Integrating Milvus with tools like FiftyOne can further enhance RGB-X workflows. Such integrations enable seamless management of datasets and their corresponding embeddings, visualization of complex RGB-X outputs, and efficient similarity searches across large collections of multi-channel image embeddings.
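At its core, similarity search ranks stored embeddings by their closeness to a query embedding. The sketch below does this by brute force with NumPy and cosine similarity, as a stand-in for what a vector database does at scale with an index instead of a linear scan. It does not use the Milvus API, and the 4-dimensional embeddings are made up for illustration.

```python
import numpy as np


def top_k_cosine(query, embeddings, k=3):
    """Return (index, similarity) pairs for the k embeddings closest to the query."""
    embeddings = np.asarray(embeddings, dtype=np.float64)
    query = np.asarray(query, dtype=np.float64)
    emb_unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    query_unit = query / np.linalg.norm(query)
    sims = emb_unit @ query_unit      # cosine similarity to every stored vector
    order = np.argsort(-sims)[:k]     # highest similarity first
    return [(int(i), float(sims[i])) for i in order]


# Hypothetical embeddings for three scenes
scene_embeddings = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
])
results = top_k_cosine([1.0, 0.0, 0.0, 0.0], scene_embeddings, k=2)
```

A linear scan like this becomes slow as the collection grows, which is the gap that approximate nearest neighbor indexes in vector databases are designed to fill.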

Hands-On: Monocular Depth Estimation with FiftyOne

To illustrate the practical applications of RGB-X models, let’s walk through a hands-on example of using FiftyOne for monocular depth estimation with the SUNRGBD dataset. We will cover how to load and process RGB-D (RGB + Depth) data in FiftyOne, as Gural showed in the talk, and point to the full tutorial on running RGB-X models on this data.

Step 1: Setup and Installation

First, we need to install the required libraries and download the dataset:

    !pip install fiftyone
    !curl -o sunrgbd.zip https://rgbd.cs.princeton.edu/data/SUNRGBD.zip
    !unzip sunrgbd.zip

This code installs FiftyOne, downloads the SUNRGBD dataset, and unzips it. FiftyOne is a tool for dataset management and visualization that is useful for computer vision tasks.

Step 2: Importing Required Libraries

Next, we import the necessary Python libraries:

    from glob import glob

    import numpy as np
    from PIL import Image
    import torch

    import fiftyone as fo
    import fiftyone.zoo as foz
    import fiftyone.brain as fob
    from fiftyone import ViewField as F

These imports provide us with tools for file handling (glob), numerical operations (numpy), image processing (PIL), deep learning (torch), and dataset management (fiftyone).

Step 3: Creating the Dataset

Now, we'll create a FiftyOne dataset and populate it with samples from the SUNRGBD dataset:

    dataset = fo.Dataset(name="SUNRGBD-20", persistent=True)

    # Restrict to 20 scenes
    scene_dirs = glob("SUNRGBD/k*/*/*")[:20]

    samples = []
    for scene_dir in scene_dirs:
        # Get image file path from scene directory
        image_path = glob(f"{scene_dir}/image/*")[0]

        # Get depth map file path from scene directory
        depth_path = glob(f"{scene_dir}/depth_bfx/*")[0]

        # Load the depth map as float so the rescaling below cannot overflow
        # an integer dtype, then normalize to the 0-255 range for display
        depth_map = np.array(Image.open(depth_path)).astype("float32")
        depth_map = (depth_map * 255 / np.max(depth_map)).astype("uint8")

        sample = fo.Sample(
            filepath=image_path,
            gt_depth=fo.Heatmap(map=depth_map),
        )
        samples.append(sample)

    dataset.add_samples(samples)

This code creates a new FiftyOne dataset named "SUNRGBD-20". It then iterates through 20 scenes from the SUNRGBD dataset, loading both the RGB image and its corresponding depth map for each scene. The depth maps are normalized and converted to 8-bit format for easier visualization. Each image-depth pair is added to the dataset as a sample, with the depth map stored as a heatmap.
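The normalization step can be checked in isolation on a small synthetic array. The helper below is a sketch, assuming NumPy; `depth_to_uint8` is a hypothetical name, and the sample values are made up. It casts to float before scaling because multiplying a 16-bit integer array by 255 can silently wrap around if the loaded depth map has an integer dtype.

```python
import numpy as np


def depth_to_uint8(depth):
    """Rescale a depth map to 0-255 for display, casting to float first."""
    depth = np.asarray(depth).astype(np.float32)
    max_value = depth.max()
    if max_value <= 0:
        return np.zeros(depth.shape, dtype=np.uint8)  # all-zero depth map
    return (depth * 255 / max_value).astype(np.uint8)


raw = np.array([[0, 1000], [2000, 4000]], dtype=np.uint16)  # synthetic depth values
scaled = depth_to_uint8(raw)
print(scaled)
```

Because the scaling divides by each image's own maximum, the resulting values are relative within a scene and cannot be compared across scenes as absolute distances.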

Step 4: Launching the FiftyOne App

Finally, we launch the FiftyOne app to visualize our dataset:

    session = fo.launch_app(dataset, auto=False)
    # Then open a browser tab at localhost:5151

The code launches the FiftyOne app, which provides a web-based interface for exploring and analyzing the dataset. You can access this interface by opening a web browser and navigating to localhost:5151.

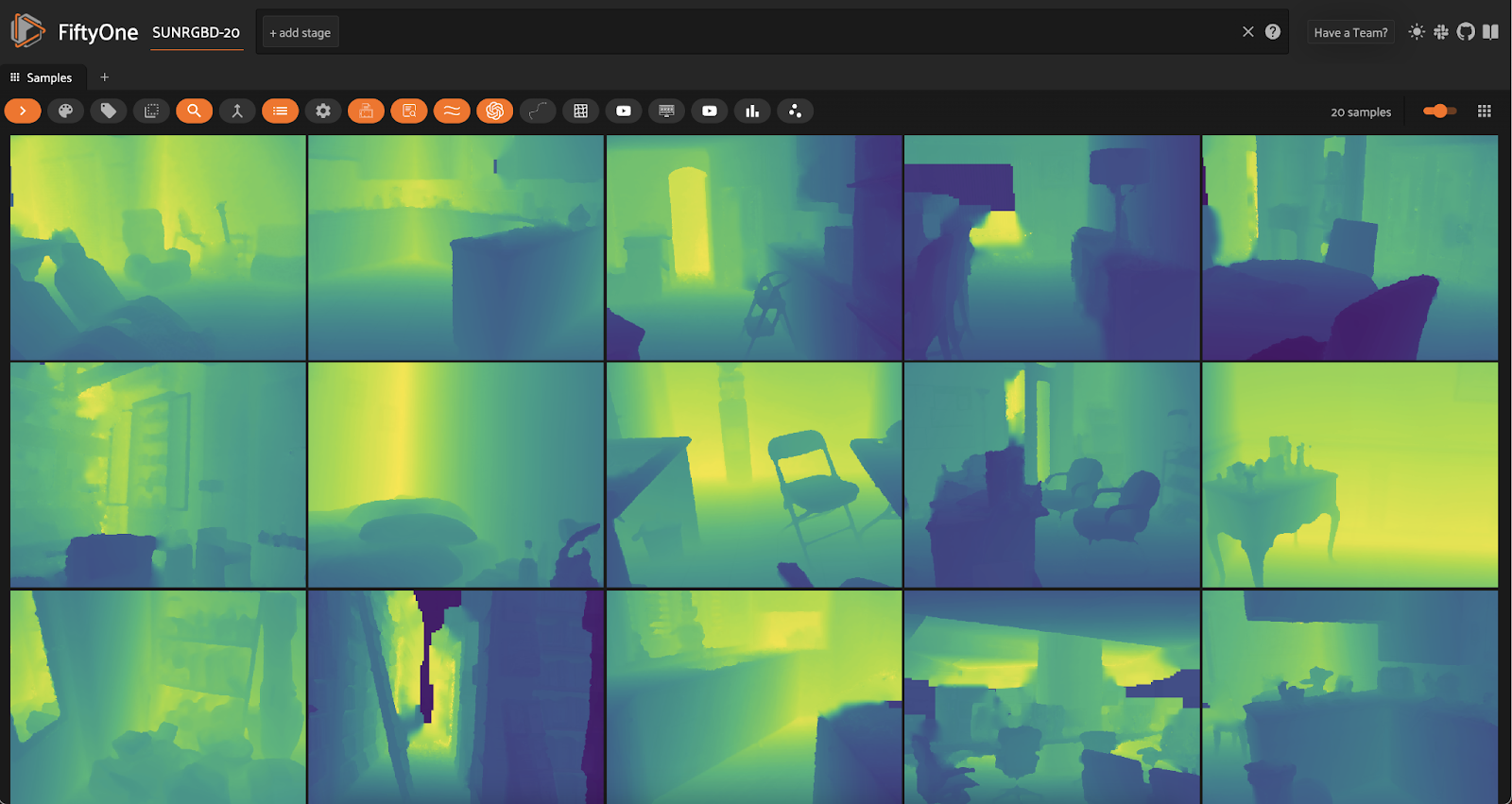

The interface should be similar to this one:

Figure- Depth maps from the SUNRGBD-20 dataset in FiftyOne.png

Figure- Depth maps from the SUNRGBD-20 dataset in FiftyOne.png

Figure: Depth maps from the SUNRGBD-20 dataset in FiftyOne

This output shows depth maps from the SUNRGBD-20 dataset we created. The heatmaps represent the depth information of various indoor scenes, with brighter yellows and greens indicating closer objects or surfaces and darker blues representing areas farther from the camera.

Now that you have loaded the dataset, you can follow this tutorial to run monocular depth estimation models on it.

The Future of Machine Learning with RGB-X Models

As RGB-X models continue to evolve, they are likely to have a significant impact on the future of machine learning and AI:

1. Enhanced Human-Digital Interaction

RGB-X models allow more natural and intuitive interactions between humans and digital systems. Some potential applications include:

Creating realistic 3D avatars from short video clips: RGB-X models can generate more accurate and detailed 3D representations of individuals from limited input data by leveraging depth and normal information. This could revolutionize virtual meetings, allowing participants to be represented by lifelike avatars that accurately mimic their expressions and movements.

Improving gesture recognition for virtual and augmented reality: The additional information channels allow for more precise tracking of hand and body movements, enabling more responsive and immersive VR/AR experiences. For example, in a VR sculpting application, the system could accurately detect fine finger movements, allowing for more precise and intuitive manipulation of virtual clay.

Enhancing facial recognition and emotion detection systems: By incorporating depth and thermal data, these systems can better understand facial expressions and physiological responses, leading to more accurate emotion detection. This could be applied in market research, where companies could more accurately gauge audience reactions to products or advertisements.

Gural highlighted the potential of RGB-X models in creating detailed 3D avatars from simple video inputs. This technology could change how we interact in virtual environments, from video games to virtual offices.

2. Advanced Robotics

The multi-modal nature of RGB-X data is particularly valuable in robotics:

Improved object manipulation: Depth and normal data can help robots better understand object shapes and textures, enabling more precise grasping and manipulation of diverse objects. In a warehouse setting, robots could handle a wide variety of products, from delicate glassware to oddly shaped packaging, with equal dexterity.

Enhanced navigation: Combining RGB with depth information allows for more precise movement in complex environments, improving a robot's ability to navigate cluttered or dynamic spaces. For instance, a home assistance robot could navigate around furniture, pets, and moving people more effectively.

Better human-robot interaction: By understanding human poses and gestures more accurately, robots can interact more naturally with people, interpreting subtle cues and responding appropriately. This could be particularly valuable in healthcare settings, where robots could assist patients while being sensitive to their movements and non-verbal communication.

3. Environmental Monitoring and Conservation

RGB-X models have the potential to revolutionize how we monitor and protect the environment:

Precise forest mapping: Combining RGB imagery with depth information for accurate tree counting and species identification. This can aid in forest management and conservation efforts. For example, researchers could use drones equipped with RGB-X sensors to quickly assess a forest's health, identifying areas affected by disease or deforestation with high precision.

Wildlife tracking: Using thermal and RGB data to monitor animal populations noninvasively. This approach can provide valuable insights into animal behavior and habitat use without disturbing the subjects. For instance, conservationists could use RGB-X-equipped cameras to track endangered species in their natural habitats, even in low-light conditions or dense vegetation.

Climate change impact assessment: Utilizing depth and normal data to track changes in terrain over time, such as coastal erosion or glacial retreat. By creating detailed 3D models of landscapes over time, scientists can quantify and visualize the impacts of climate change more accurately. This could be particularly useful in monitoring sea level rise and its effects on coastal communities.
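Quantifying terrain change from repeated surveys can be as simple as differencing two aligned elevation grids. The sketch below assumes NumPy, two grids in meters that cover exactly the same cells, and a known ground area per cell; the function name and the numbers are hypothetical.

```python
import numpy as np


def volume_change(before, after, cell_area_m2):
    """Net volume change (cubic meters) between two aligned elevation grids (meters)."""
    before = np.asarray(before, dtype=np.float64)
    after = np.asarray(after, dtype=np.float64)
    if before.shape != after.shape:
        raise ValueError("elevation grids must be aligned and equally sized")
    return float((after - before).sum() * cell_area_m2)


before = np.full((3, 3), 12.0)  # elevation in meters at the first survey
after = before.copy()
after[:, 0] -= 0.5              # one column of cells lost 0.5 m to erosion

print(volume_change(before, after, cell_area_m2=4.0))  # -6.0
```

A negative result indicates net loss of material, as with erosion or glacial retreat, while a positive result indicates net deposition.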

Conclusion

Gural shared the advancements in RGB-X model development, showcasing how these models go beyond traditional RGB channels to unlock new dimensions in computer vision and AI. By integrating additional data like depth, infrared, and more, RGB-X models have redefined visual analysis capabilities, making them invaluable in diverse applications such as autonomous vehicles, robotics, and environmental monitoring.

Further reading

Paper: [2408.12569] Sapiens: Foundation for Human Vision Models

Paper: [2405.17773] Towards a Generalist and Blind RGB-X Tracker

Blog: An Introduction to Vector Embeddings: What They Are and How to Use Them

Blog: Demystifying Color Histograms: A Guide to Image Processing and Analysis

Blog: OpenAI Whisper: Transforming Speech-to-Text with Advanced AI
