How to Use Anthropic MCP Server with Milvus

AI agents are getting smarter by the day, but there's one major bottleneck: accessing the right data at the right time.

Honestly, I’m getting tired of adding yet another Function Tool to my LLM stack. It’s a mess. But without those tools, and without context-aware information, even the best models struggle.

That’s where MCP changes the game. Instead of constantly hacking together new integrations, it gives your LLM a universal way to fetch data—no extra work needed. And when you pair it with Milvus, it gets even better. So, what exactly is MCP?

Model Context Protocol (MCP)

MCP is an open protocol that aims to standardize how AI models connect to different data sources and tools.

In simple terms, MCP makes it way easier to build smart AI agents that can actually use external knowledge effectively. It provides:

- A list of pre-built integrations that LLMs can directly plug into

- The flexibility to switch between LLM providers and vendors

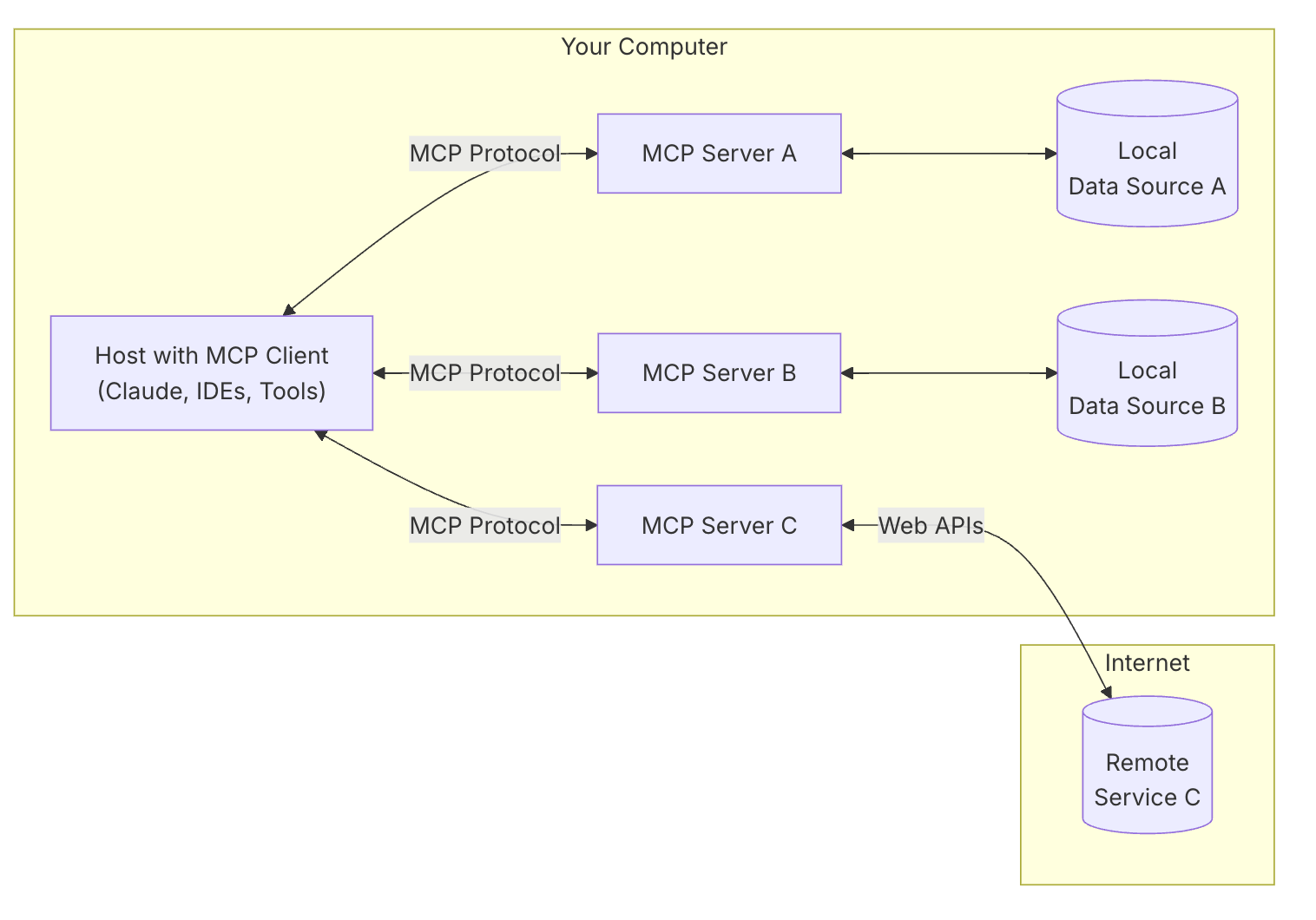

MCP follows a client-server architecture, where a host application can connect to multiple servers:

- MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP Clients: Protocol clients that maintain 1:1 connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol, such as the Milvus server covered in this post

- Local Data Sources: Your computer’s files, databases, and services that MCP servers can securely access

- Remote Services: External systems available over the internet (e.g., through APIs) that MCP servers can connect to
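
To make the client-server split more concrete, here is a minimal sketch of the kind of messages an MCP client sends to a server. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are methods defined by the protocol. The tool name `milvus_vector_search` and its arguments are assumptions made up for illustration; the real names come from whatever the server returns for `tools/list`.

```python
import itertools
import json

# Each JSON-RPC request needs a unique id so responses can be matched to it.
_ids = itertools.count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Call one tool by name. The tool name and arguments below are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {
        "name": "milvus_vector_search",
        "arguments": {
            "collection_name": "docs",
            "vector": [0.1, 0.2, 0.3],
            "limit": 5,
        },
    },
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice the host's MCP client library builds and sends these messages for you; the sketch only shows what travels over the 1:1 connection between a client and a server.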

Why Milvus and MCP Together?

Milvus isn’t just good at managing massive amounts of data; it’s built for it. Its fast similarity search and scalable vector storage make it a dream for AI agents. Meanwhile, MCP acts as the middleman, giving agents seamless, standardized access to that knowledge without extra engineering hassle.

Together, Milvus and MCP unlock powerful new possibilities:

- Seamless integration: Leverage MCP’s client-server architecture to plug Milvus into your AI workflows, instantly providing agents with direct access to your data.

- Rapid innovation: Quickly test, iterate, and deploy sophisticated AI-driven use cases without needing to build complex custom integrations or APIs.

- Model & Vendor Flexibility: MCP frees you from constraints around specific AI models or vendors. Connect Milvus once, then confidently use multiple LLM providers or models knowing your data access layer stays consistent and stable.

- Enhanced AI Reasoning: Provide your agents with richer contextual insights than traditional data stores can easily deliver. Milvus lets you store and efficiently retrieve embeddings, powering agents that are more accurate, insightful, and context-aware.

So, what does this actually look like in action? And why should you care?

Why is this so cool?

Imagine a world where:

MCP Hosts (e.g., Claude Desktop or Cursor) initiate contextualized queries to MCP Servers to enrich agent interactions.

MCP Milvus Servers give you full visibility and control over your Milvus DB. Beyond running a vector search, you also get:

- Collection Management: Easily list, create, and manage collections through standardized MCP queries, with no need to look things up in the SDK or API docs.

- Schema Exploration: Inspect collection schemas, field types, and indexing configurations directly through your AI agent interface.

- Real-time Monitoring: Query collection statistics, entity counts, and database health metrics to ensure optimal performance.

- Dynamic Operations: Create new collections, insert data, and modify schemas on the fly as your agent workflows evolve.

- Full Text Search: Since Milvus 2.5, we also support Full Text Search, so you can query directly with the text you're searching for, without having to think about embedding models.

Local & Remote Data Sources connected in a unified ecosystem give AI agents rich contextual knowledge exactly when needed. Agents can access your local instance of Milvus as well as your hosted one:

- Update the URI, add the connection token, and the MCP server will handle the rest—seamlessly connecting to your hosted Milvus instance. ✨
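
As a rough illustration of that switch, the sketch below picks connection settings for either a local or a hosted instance. The environment variable names `MILVUS_URI` and `MILVUS_TOKEN` and the example hostname are assumptions, not names the MCP server is known to read; `http://localhost:19530` is the default address of a local Milvus instance.

```python
import os


def milvus_connection_settings(env=None):
    """Return connection settings for a local or hosted Milvus instance.

    MILVUS_URI and MILVUS_TOKEN are hypothetical variable names used only
    for this example.
    """
    env = os.environ if env is None else env
    # Fall back to the default local Milvus address when no URI is given.
    settings = {"uri": env.get("MILVUS_URI", "http://localhost:19530")}
    token = env.get("MILVUS_TOKEN")
    if token:
        # Only hosted (or auth-enabled) instances need a token.
        settings["token"] = token
    return settings


local = milvus_connection_settings({})
hosted = milvus_connection_settings(
    {"MILVUS_URI": "https://example-cluster.invalid", "MILVUS_TOKEN": "my-api-key"}
)

print(local)
print(sorted(hosted))
```

The resulting `uri` and `token` values have the same shape as the arguments `pymilvus.MilvusClient` accepts, so the same code path can serve both development and production; only the configuration changes.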

In other words, with MCP and Milvus working together, developers get a smooth experience, and AI agents get the context they need to be smarter.

Real-world Impact & Use Cases

With the Milvus-MCP integration, it's now simpler than ever to empower your AI agents across a wide range of emerging use cases:

- Simplified Architecture: Eliminate custom integrations between your agents and Milvus - MCP handles all the complexity.

- Flexible Deployment Options: Deploy agents that can seamlessly connect to local Milvus instances during development and remote Zilliz Cloud instances in production.

This integration eliminates much of the infrastructure complexity traditionally associated with deploying AI agents at scale.

Powerful Capabilities at Your Fingertips

With the MCP Milvus server, you can now:

- Perform full-text searches across your vector database

- Execute complex vector similarity searches

- Manage collections dynamically

- Count and filter entities

- Create and manipulate indexes
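
To give a feel for how a server can expose capabilities like these as named tools, here is a toy sketch. It is not the MCP Milvus server's implementation: the tool names are hypothetical, the "database" is an in-memory dict standing in for Milvus, and the search is a brute-force cosine similarity rather than an indexed one.

```python
import math

TOOLS = {}  # tool name -> handler function


def tool(name):
    """Decorator that registers a function under a tool name."""
    def register(func):
        TOOLS[name] = func
        return func
    return register


# In-memory stand-in for Milvus: collection name -> list of (id, vector).
COLLECTIONS = {
    "docs": [(1, [1.0, 0.0]), (2, [0.0, 1.0]), (3, [0.7, 0.7])],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@tool("list_collections")
def list_collections():
    return sorted(COLLECTIONS)


@tool("count_entities")
def count_entities(collection_name):
    return len(COLLECTIONS[collection_name])


@tool("vector_search")
def vector_search(collection_name, vector, limit=2):
    # Score every entity, then keep the ids of the most similar ones.
    scored = [(cosine(vector, v), eid) for eid, v in COLLECTIONS[collection_name]]
    scored.sort(reverse=True)
    return [eid for _, eid in scored[:limit]]


def call_tool(name, arguments=None):
    """Dispatch a tool call by name, as a server would for each request."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**(arguments or {}))


print(call_tool("list_collections"))
print(call_tool("vector_search", {"collection_name": "docs", "vector": [1.0, 0.1]}))
```

The point of the registry is that the agent only ever needs a tool name and a dictionary of arguments; what happens behind each name (Milvus queries, index management, counting) stays inside the server.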

Next Steps

Ready to build? Head over to the GitHub repo for the full documentation, join our Discord to chat with the community, and start building today!
