The Role of LLMs in Modern Travel: Opportunities and Challenges Ahead

Large Language Models (LLMs) have become a significant driver of innovation across industries. They enable systems to understand, generate, and interact with natural language in a human-like way. GetYourGuide (GYG), an online marketplace for travel experiences, has been using LLMs to build new products for the tourism sector.

At a recent Berlin Unstructured Data Meetup hosted by Zilliz, Meghna Satish, a Machine Learning Engineer at GetYourGuide, shared insights into the company’s journey with LLMs. She discussed the products the team has built, the challenges it faced, and how LLMs are reshaping travel platforms by improving customer experiences and user interactions.

In this blog, we’ll recap her key points and explore how LLMs can be integrated with the Milvus vector database to address common issues like hallucinations. For more details, watch the full replay of her talk on YouTube.

How GYG Uses LLMs to Improve Its Services

LLMs are reshaping many industries, and tourism is no exception. GYG sees LLMs as a way to enhance customer experiences, streamline operations, and deliver its services more efficiently.

Content Translation and Localization

One of the primary applications of LLMs at GYG is content translation and localization. By breaking down language barriers, LLMs enable real-time translation of travel information, including articles, hotel descriptions, and reviews, allowing users across different regions to understand content in their native language. This localized approach drives engagement by offering each user relevant, accessible information.

GYG relies on ChatGPT for translation because it produces high-quality output that matches GYG’s tone of voice. However, ChatGPT’s training data leans heavily toward English: roughly 50% of its sources are in English, 32% in other European languages (Spanish alone accounts for about 6%), and 18% in Asian languages. To counter these biases and ensure accuracy, GYG uses a hybrid approach, combining ChatGPT translations with additional deep-learning neural networks for post-editing, which yields more balanced, culturally sensitive localization.

Content Generation and Customer Support

GYG also uses LLMs to streamline content creation, from AI-assisted tour descriptions to comprehensive destination guides and travel blogs. By automating content generation, LLMs help keep GYG’s platform fresh and engaging, providing travelers with up-to-date information while freeing up valuable time for the content team.

In addition to generating content, LLMs support customer service by handling high volumes of queries through automated FAQs and multi-turn conversations. This reduces the load on human support teams, letting them focus on more complex cases while lowering operational costs. LLM-powered responses help customers get prompt, accurate answers, improving the overall user experience.

Future Possibilities: Personalization

Although not yet implemented at GYG, LLMs have great potential for personalization. LLMs could create tailored content for individual users by leveraging user data such as search history, booking patterns, and interaction preferences. This could include personalized itineraries, activity recommendations, and customized discounts aligned with a user’s unique travel interests.

Challenges of Using LLMs at GYG

After generating initial translations and content, the team ran into several challenges when using LLMs, particularly ChatGPT, to post-edit localization content:

Hallucination: When ChatGPT lacks specific information about a query, it sometimes generates incorrect or fabricated details. During translation, for instance, it may invent names, places, or events that are not in the source text, which degrades translation quality. ChatGPT also tends to answer questions contained in the text instead of simply translating them, producing unintended content.

Prompt Leakage: ChatGPT occasionally adds characters or information that are not in the original prompt, so the response strays from its intended purpose and the prompt’s instructions are effectively ignored. This "leakage" disrupts the output and requires additional checks and edits.

Role Consistency: ChatGPT sometimes fails to stay in its assigned role. If a prompt deviates slightly from the expected tone or content, ChatGPT may comment on the task itself instead of strictly following the instructions. This undermines tasks that demand consistency, such as translation, where staying focused on the task is essential.
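These failure modes are hard to remove through prompting alone, but some of them can be flagged automatically so that reviewers only look at suspicious outputs. The sketch below is a hypothetical illustration, not GYG’s method: `flag_translation`, the phrase lists, and the length threshold are all assumptions, and simple string heuristics like these miss many errors (an invented place name, for example, passes the number check).

```python
import re

# Hypothetical fragments of our own translation prompt; seeing them in the output suggests leakage.
PROMPT_FRAGMENTS = ("translate the following", "target language", "you are a translator")
# Hypothetical phrases suggesting the model commented on the task instead of translating.
META_PHRASES = ("as an ai", "here is the translation", "i cannot", "i'm sorry")
# Hypothetical threshold: outputs far longer than the source may contain added content.
MAX_LENGTH_RATIO = 2.0


def digit_runs(text: str) -> list[str]:
    """Sorted digit sequences, so '1.5' and '1,5' compare equal across locales."""
    return sorted(re.findall(r"\d+", text))


def flag_translation(source: str, output: str) -> list[str]:
    """Return reasons to route an LLM translation to human review (empty list = no flags)."""
    reasons = []
    lowered = output.lower()
    # Hallucination proxy: prices, times, and durations should survive translation unchanged.
    if digit_runs(source) != digit_runs(output):
        reasons.append("numbers differ from source")
    # Prompt leakage: instruction text copied into the translated output.
    if any(fragment in lowered for fragment in PROMPT_FRAGMENTS):
        reasons.append("prompt text leaked into output")
    # Role consistency: the model talks about the task rather than just doing it.
    if any(phrase in lowered for phrase in META_PHRASES):
        reasons.append("model commented on the task")
    # Extra content, e.g. the model answered a question instead of translating it.
    if len(output) > MAX_LENGTH_RATIO * len(source) + 20:
        reasons.append("output much longer than source")
    return reasons


source = "The tour lasts 2 hours and costs 45 euros."
good = "Die Tour dauert 2 Stunden und kostet 45 Euro."
bad = "Here is the translation: Die Tour dauert 3 Stunden und kostet 45 Euro."
print(flag_translation(source, good))  # []
print(flag_translation(source, bad))   # ['numbers differ from source', 'model commented on the task']
```

A filter like this does not replace human review; it only narrows the set of outputs a person has to check.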

Human oversight is needed to catch these problems and ensure accuracy and relevance, and it can work well for small- to medium-scale applications. For large systems, say with hundreds of thousands of daily users, manual review simply isn’t feasible.

Retrieval-Augmented Generation (RAG) offers a way forward: by supplying the LLM with external data sources, RAG can mitigate hallucinations and fill knowledge gaps that would otherwise require human input. The following sections explain how RAG works and why vector databases make this approach scalable and effective.

Retrieval-Augmented Generation (RAG) for Mitigating LLM Hallucinations

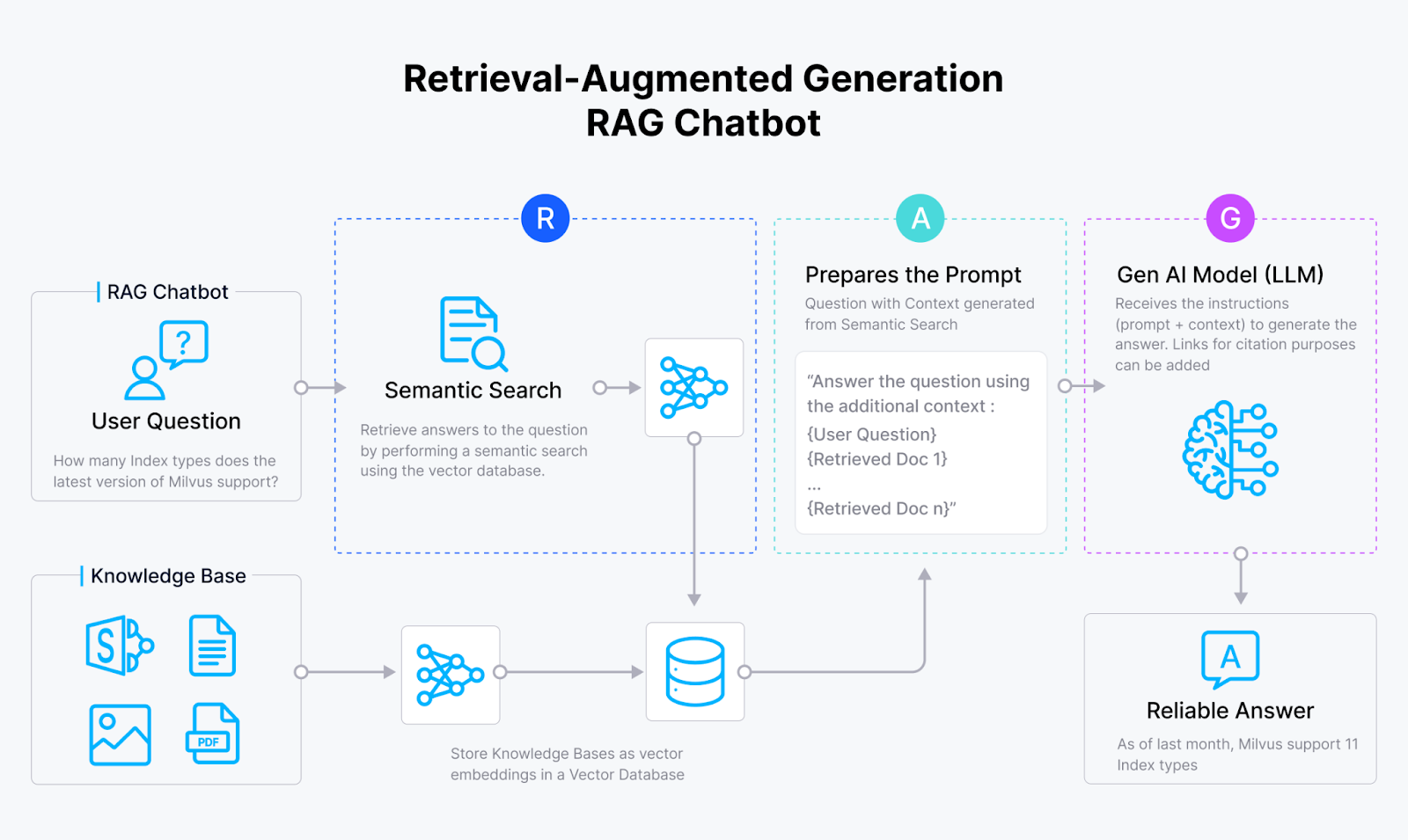

RAG is a robust solution for reducing LLM hallucinations, especially when queries require specific knowledge outside the model’s training data. For example, if you ask an LLM about proprietary data, it’s likely to return an inaccurate response. RAG addresses this issue by retrieving relevant context and feeding it to the LLM, allowing it to answer more accurately.

A standard RAG setup combines an LLM (like ChatGPT), a vector database (such as Milvus or its managed version, Zilliz Cloud), and an embedding model. Developers can build even more advanced RAG systems by integrating additional tools like LlamaIndex, LangChain, DSPy, or rerankers, each tailored to enhance retrieval, re-ranking, or other specialized tasks for more accurate and relevant outputs.

Figure: RAG workflow

Here is how RAG works:

Vectorization: Instead of sending the input query directly to the LLM, an embedding model first encodes the external knowledge sources and the query into vector embeddings. The knowledge sources are typically embedded ahead of time, while the query is embedded when it arrives.

Vector Storage: The knowledge-base embeddings are stored in a vector database like Milvus or Zilliz Cloud, which efficiently manages large volumes of vectorized data for fast retrieval.

Vector Similarity Search: The vector database compares the query embedding against the stored embeddings and returns the top-k results closest to the query in context and meaning.

Passing Context to the LLM: The top-matching results from the vector search are fed into the LLM alongside the original query. This provides the LLM with relevant, up-to-date information, helping to reduce hallucinations and improve response accuracy.

Generating the Final Response: The LLM combines its pre-trained knowledge with the retrieved context to produce a more accurate, well-informed response.

This approach enables the LLM to handle complex or specific questions effectively, even when it lacks direct training on the topic.
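The steps above can be sketched end to end in a few dozen lines of Python. This is a minimal, illustrative sketch, not GYG’s or Milvus’s implementation: `embed` is a toy bag-of-words stand-in for a real embedding model (it only matches shared words, whereas a trained model would also relate "cancel" to "cancellations"), `InMemoryVectorStore` is a hypothetical brute-force stand-in for a vector database such as Milvus, and the passages are made-up examples. Sending the final prompt to an LLM such as ChatGPT is omitted.

```python
import heapq
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector.
    Real embedding models return dense float vectors that capture meaning."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: stores vectors, runs top-k search."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def insert(self, text: str) -> None:
        # Vectorization + storage: embed a knowledge-base passage and keep it.
        self._rows.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Similarity search: embed the query, return the k most similar passages.
        q = embed(query)
        best = heapq.nlargest(k, self._rows, key=lambda row: cosine(q, row[1]))
        return [text for text, _ in best]


def build_prompt(question: str, passages: list[str]) -> str:
    # Passing context to the LLM: retrieved passages go alongside the original query.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


store = InMemoryVectorStore()
for passage in [
    "The Berlin TV Tower tour includes skip-the-line entry and lasts about 1 hour.",
    "Cancellations are free up to 24 hours before the activity starts.",
    "The Louvre Museum is closed on Tuesdays.",
]:
    store.insert(passage)

question = "Can I cancel my booking for free?"
prompt = build_prompt(question, store.search(question, k=1))
print(prompt)  # this string would be sent to the LLM to generate the final response
```

The brute-force scan in `search` is the part a vector database replaces in production: Milvus builds approximate nearest-neighbor indexes so top-k search stays fast over very large collections.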

RAG vs. Fine-Tuning a Model

RAG and fine-tuning both improve LLMs, but in different ways. RAG enriches responses by retrieving relevant, up-to-date information from external sources at query time, which makes it ideal when information changes frequently or spans a wide range of topics. It lets the LLM handle diverse or dynamic queries without extensive retraining, keeping answers relevant while reducing costs. Fine-tuning, in contrast, permanently updates the model’s knowledge by adjusting its weights on a specific dataset, which suits applications that need deep expertise in a stable domain. However, fine-tuning is resource-intensive and adapts poorly to rapidly changing information.

Ultimately, RAG is optimal for scalable, adaptable tasks needing immediate access to external data, while fine-tuning is best for specialized applications that benefit from embedded expertise. For many use cases, RAG’s flexibility and cost efficiency make it an appealing choice over traditional fine-tuning.

However, rather than viewing RAG and fine-tuning as mutually exclusive, it's often advantageous to see them as complementary strategies. A well-rounded approach could involve fine-tuning the LLM to improve its understanding of domain-specific language, ensuring it produces outputs that meet the particular needs of your application. Simultaneously, using RAG can further boost the quality and relevance of the responses by providing the model with up-to-date, contextually appropriate information drawn from external sources. This combined strategy allows you to capitalize on the strengths of both techniques, resulting in a more robust and effective solution that meets both general and specialized requirements.

Summary

LLMs are changing the travel industry, enabling companies like GetYourGuide (GYG) to serve customers more efficiently. Through use cases such as language translation and content generation, GYG improves how its platform interacts with users and makes its services more accessible.

Although ChatGPT and deep-learning neural networks work well for language translation and many other tasks, they come with their own challenges, particularly hallucinations. RAG is a widely adopted way to address such problems: it retrieves external knowledge from a vector database like Milvus and passes it to the LLM, producing more accurate output.
