Introduction to LangChain

A guide to LangChain, including its definition, workflow, benefits, use cases, and available resources to get started.

LangChain is a framework for developing applications powered by large language models (LLMs). By integrating LLMs with external data sources, LangChain lets applications become context-aware: they can reason over relevant information and respond to user input with answers that are not only coherent but also grounded in that information. This bridges the gap between general-purpose language models and the specific context an application needs for informed decision-making.

LangChain's architecture is built around several key components that streamline development and deployment. Together, they provide a comprehensive toolkit for working with LLMs:

LangChain Libraries: Available in both Python and JavaScript, these libraries form the backbone of the LangChain framework. They offer a wide range of interfaces and integrations, enabling developers to assemble complex chains and agents with ease. These libraries include prebuilt implementations that cater to various tasks, such as natural language understanding, text generation, and data retrieval, allowing developers to focus on building the application logic rather than worrying about the underlying complexities.

LangChain Templates: To further simplify development, LangChain provides a repository of deployable reference architectures known as LangChain Templates. These templates cover use cases ranging from customer support bots to automated content generation tools, giving developers a solid foundation and reducing the time needed to get from concept to deployment. Separately, LangChain offers prompt templates, which turn user input into a structured prompt that the language model can handle well. There are prompt templates for few-shot examples, for composing prompts together, and for partially formatting a prompt ahead of time.
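LangChain's prompt template classes are its own API; the sketch below reproduces only the underlying ideas with Python's built-in str.format and functools.partial, so every name in it (EXAMPLE_TEMPLATE, few_shot_prompt, compose, system_prompt) is hypothetical. It shows a few-shot prompt built from worked examples, two prompt sections composed into one, and a template whose {date} variable is filled in early while {role} is supplied later.

```python
from functools import partial

# Hypothetical templates written as plain format strings (not LangChain classes).
EXAMPLE_TEMPLATE = "Q: {question}\nA: {answer}"
QUESTION_TEMPLATE = "Q: {question}\nA:"
SYSTEM_TEMPLATE = "You are a {role}. Today is {date}."


def few_shot_prompt(examples: list[dict[str, str]], question: str) -> str:
    """Format each worked example, then append the new question."""
    shots = [EXAMPLE_TEMPLATE.format(**example) for example in examples]
    return "\n\n".join(shots + [QUESTION_TEMPLATE.format(question=question)])


def compose(*sections: str) -> str:
    """Compose a larger prompt from already-formatted sections."""
    return "\n\n".join(sections)


# Partial formatting: bind {date} now and supply {role} later.
system_prompt = partial(SYSTEM_TEMPLATE.format, date="2024-01-01")

examples = [
    {"question": "2 + 2?", "answer": "4"},
    {"question": "3 + 5?", "answer": "8"},
]
prompt = compose(system_prompt(role="math tutor"), few_shot_prompt(examples, "7 + 6?"))
```

The resulting prompt starts with the system line, lists both worked examples, and ends with the unanswered question for the model to complete.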

LangServe: LangServe is a dedicated library for deploying LangChain chains as RESTful APIs. Exposing a chain over HTTP makes it straightforward to integrate into existing systems and to scale across different application environments, so sophisticated LLM-powered applications can run in production without extensive infrastructure changes.

LangSmith: LangSmith is a hosted developer platform that supports the entire lifecycle of LLM application development. It provides tools for debugging, testing, evaluating, and monitoring applications, so developers can refine them with precision. LangSmith integrates with LangChain but also works with other LLM frameworks, giving teams a single place to manage these tasks while optimizing and maintaining language-centric applications.

Together, these components make LangChain a powerful framework for developers looking to harness the full potential of LLMs. Whether you’re building a simple chatbot or a complex AI-driven application, LangChain provides the tools and infrastructure needed to create applications that are not only intelligent but also deeply integrated with the contextual data they need to perform effectively.

Getting Started with LangChain

Getting started with LangChain for Python is quick and easy: install the base package. The base install is barebones and does not include the dependencies for specific integrations you may be interested in. Note that many LangChain examples use OpenAI as the LLM, so you will need an OpenAI API key to run them.

Pip

pip install langchain

If you want the package containing third-party integrations, install the community package:

pip install langchain-community

You can also create a free LangSmith account from the LangChain website.

LangChain Features

LangChain offers features designed to empower developers in building sophisticated language-powered applications. Here's a detailed explanation of the core features of LangChain:

Context-Aware Responses: LangChain enables applications to generate context-aware responses by connecting LLMs to various context sources, often stored in a vector database. This allows applications to understand user queries and respond to them in a more meaningful, well-reasoned way.

Effective Reasoning: With LangChain, applications can leverage language models to make informed decisions based on the provided context. This feature enables applications to reason effectively and deliver more accurate and insightful responses to user queries.

Integration Flexibility: LangChain provides flexible integration options, allowing developers to integrate language models into their applications seamlessly. Whether through prompt instructions, few-shot examples, or other sources of context, LangChain offers a variety of integration methods to suit different use cases.

Customizable Components: LangChain offers customizable components that developers can use to tailor the framework to their needs. From prebuilt implementations to customizable templates, LangChain provides developers with the tools to build powerful language-powered applications.

What problem does LangChain solve?

LangChain solves the problem of developing AI applications that require sophisticated language understanding and reasoning. In many natural language processing (NLP) tasks, such as chatbots, virtual assistants, or content summarization systems, understanding the context and nuances of human language is crucial for providing accurate, relevant responses and for avoiding plausible-sounding but false answers (AI hallucinations). However, building such applications from scratch can be complex and time-consuming, especially when integrating large language models (LLMs) and managing contextual information.

LangChain addresses this challenge by providing a versatile framework for developing applications powered by language models. It enables developers to create context-aware applications that connect language models to various sources of context, such as prompt instructions, few-shot examples, or other content. By combining the LangChain Expression Language (LCEL) with a vector database, developers can build applications that comprehend and respond to natural language input more effectively, improving user interactions and the overall user experience.
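LCEL composes components with the | operator, in the familiar prompt | model | parser pattern, so that each step's output becomes the next step's input. The toy Step class below is a hypothetical stand-in written in plain Python, not LangChain's Runnable implementation, and the "model" is a placeholder function rather than a real LLM; it only illustrates the piping idea.

```python
from typing import Any, Callable


class Step:
    """Hypothetical pipeline step: wraps a function and supports | chaining."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Build a new step that runs self first, then feeds its output to other.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Three hypothetical stages standing in for a prompt, a model, and an output parser.
prompt = Step(lambda d: f"Answer briefly: {d['question']}")
fake_model = Step(lambda text: {"content": text.upper()})  # placeholder for an LLM call
parser = Step(lambda message: message["content"].strip())

chain = prompt | fake_model | parser
result = chain.invoke({"question": "what is RAG?"})
```

Because each | returns a new Step, stages can be swapped (for example, a different parser) without touching the rest of the chain, which is the main appeal of this composition style.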

How to Use LangChain by Use Case

There are many use cases supported by LangChain. In this section, we will review three of the most popular use cases: Retrieval Augmented Generation (RAG), RAG Chatbot, and Summarization.

Retrieval Augmented Generation (RAG)

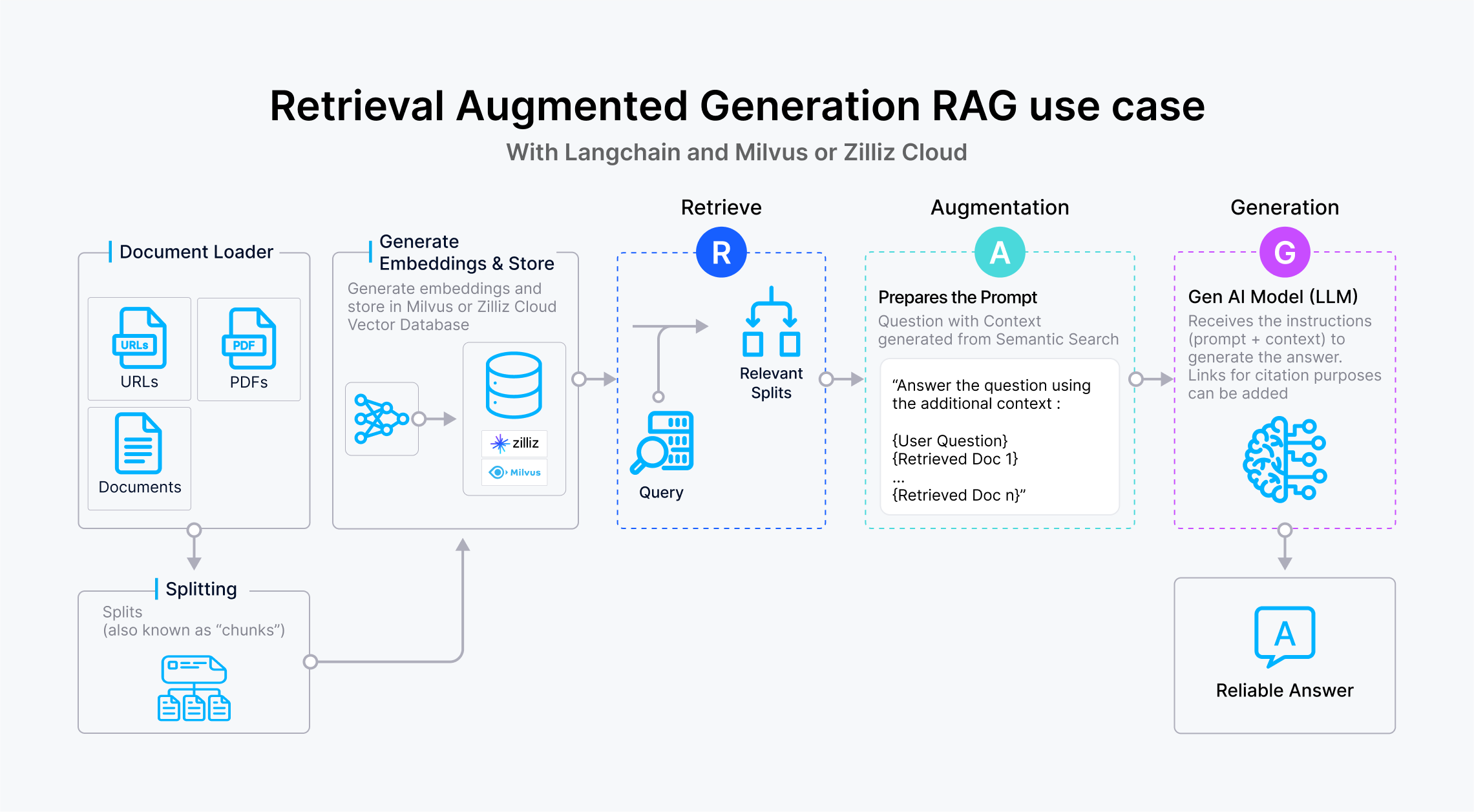

Retrieval Augmented Generation (RAG) is one of the techniques LangChain helps you implement with vector databases and large language models. RAG combines information retrieved from a vector database with an LLM to generate a response, so applications can answer user queries with depth and accuracy. Because it draws on relevant information from external knowledge sources, this approach produces outputs that are more accurate, coherent, and contextually relevant.

RAG use case with LangChain and Zilliz Cloud

Start by gathering the collection of documents you want to use for your RAG solution. LangChain has over 100 document loaders for many document types (HTML, PDF, code) and locations (S3, public websites), as well as integrations with Airbyte and Unstructured.
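LangChain's loaders return Document objects that hold the text in page_content and extra information in a metadata dictionary, typically including where the text came from. As a rough stdlib-only illustration of that shape, the hypothetical load_text_files function below reads every .txt and .md file under a folder and records each file's path as its "source"; it is not one of LangChain's loaders, and Doc is a made-up stand-in for Document.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain-style Document."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_text_files(folder: str, suffixes: tuple[str, ...] = (".txt", ".md")) -> list[Doc]:
    """Recursively read files with matching suffixes into Doc records."""
    docs = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in suffixes:
            text = path.read_text(encoding="utf-8")
            docs.append(Doc(page_content=text, metadata={"source": str(path)}))
    return docs


# Example (hypothetical folder name): docs = load_text_files("./my_docs")
```

Keeping the source path in metadata makes it possible to cite or filter by origin later, after the text has been split and embedded.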

Next, split the text into small, semantically meaningful chunks (often sentences). You can customize a text splitter along two axes: how the text is split and how the chunk size is measured. LangChain also offers several types of text splitters. Each type can be described by its name, what it splits on, whether it adds metadata, and a description that includes recommended use cases.
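The two customization axes can be seen in a small sketch. The hypothetical split_text function below splits on sentence boundaries (how the text is split) and greedily packs sentences into chunks no longer than chunk_size, as measured by a pluggable length_function (how the chunk size is measured): character count by default, word count as an alternative. It is a simplified illustration rather than LangChain's splitter implementation; for instance, a single sentence longer than chunk_size is kept whole instead of being cut, and there is no chunk overlap.

```python
import re
from typing import Callable


def split_text(
    text: str,
    chunk_size: int,
    length_function: Callable[[str], int] = len,
) -> list[str]:
    """Greedily pack sentences into chunks whose measured length <= chunk_size."""
    # How the text is split: on whitespace that follows ".", "!" or "?".
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}" if current else sentence
        # How the chunk size is measured: delegated to length_function.
        if current and length_function(candidate) > chunk_size:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def word_count(s: str) -> int:
    return len(s.split())


text = "LangChain loads documents. It splits them into chunks. Chunks are embedded. Vectors go into Milvus."
by_chars = split_text(text, chunk_size=60)
by_words = split_text(text, chunk_size=4, length_function=word_count)
```

With a 60-character limit the four sentences pack into two chunks, while a 4-word limit keeps every sentence in its own chunk, showing how the measurement choice alone changes the result.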

Generate embeddings of your splits and store them in a vector database such as Zilliz Cloud or open source Milvus. You can choose from a number of text embedding models. Note that many LangChain examples default to OpenAI embeddings, which require an OpenAI API key and incur a cost; during the prototype phase, you might want to consider an open source model instead.

At query time, the query is converted into a vector embedding (use the same embedding model that you used for the stored text chunks), which is then used to run a semantic search in the vector database. The retrieved chunks are then sent to the LLM together with the query so it can generate an answer grounded in them.
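The indexing and query steps can be sketched end to end in plain Python. To stay self-contained, the sketch makes loud simplifying assumptions: the hypothetical embed function is a toy sparse bag-of-words vector rather than a real embedding model, and InMemoryStore is a Python list searched by cosine similarity rather than a vector database such as Milvus or Zilliz Cloud. The final prompt is only built, not sent to an LLM. The same embed function is used for the stored chunks and for the query, as recommended above.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse bag-of-words vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryStore:
    """Hypothetical stand-in for a vector database collection."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, str]] = []

    def add(self, chunks: list[str]) -> None:
        for chunk in chunks:
            self.rows.append((embed(chunk), chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)  # same embedding function as the stored chunks
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


store = InMemoryStore()
store.add([
    "Milvus is an open source vector database.",
    "LangChain provides document loaders and text splitters.",
    "Zilliz Cloud is a managed service built on Milvus.",
])
question = "Which vector database is open source?"
hits = store.search(question, k=1)
prompt = build_prompt(question, hits)
# In a real application, `prompt` would now be sent to an LLM.
```

Swapping in a real embedding model and vector database changes the quality of the similarity scores, but the flow (embed, store, embed the query, search, build the prompt) stays the same.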

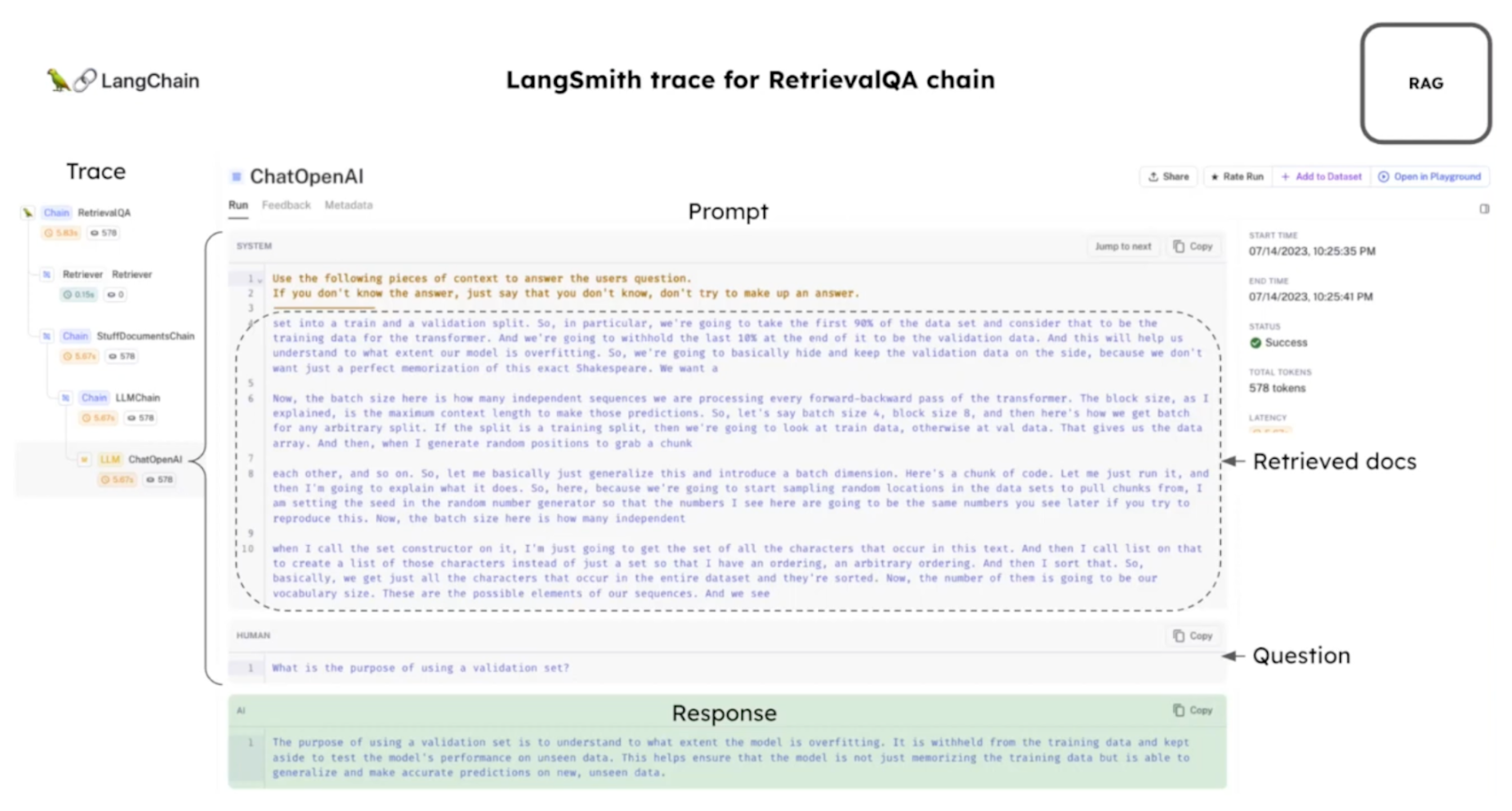

LangSmith trace for RAG use case with Zilliz

Chatbot

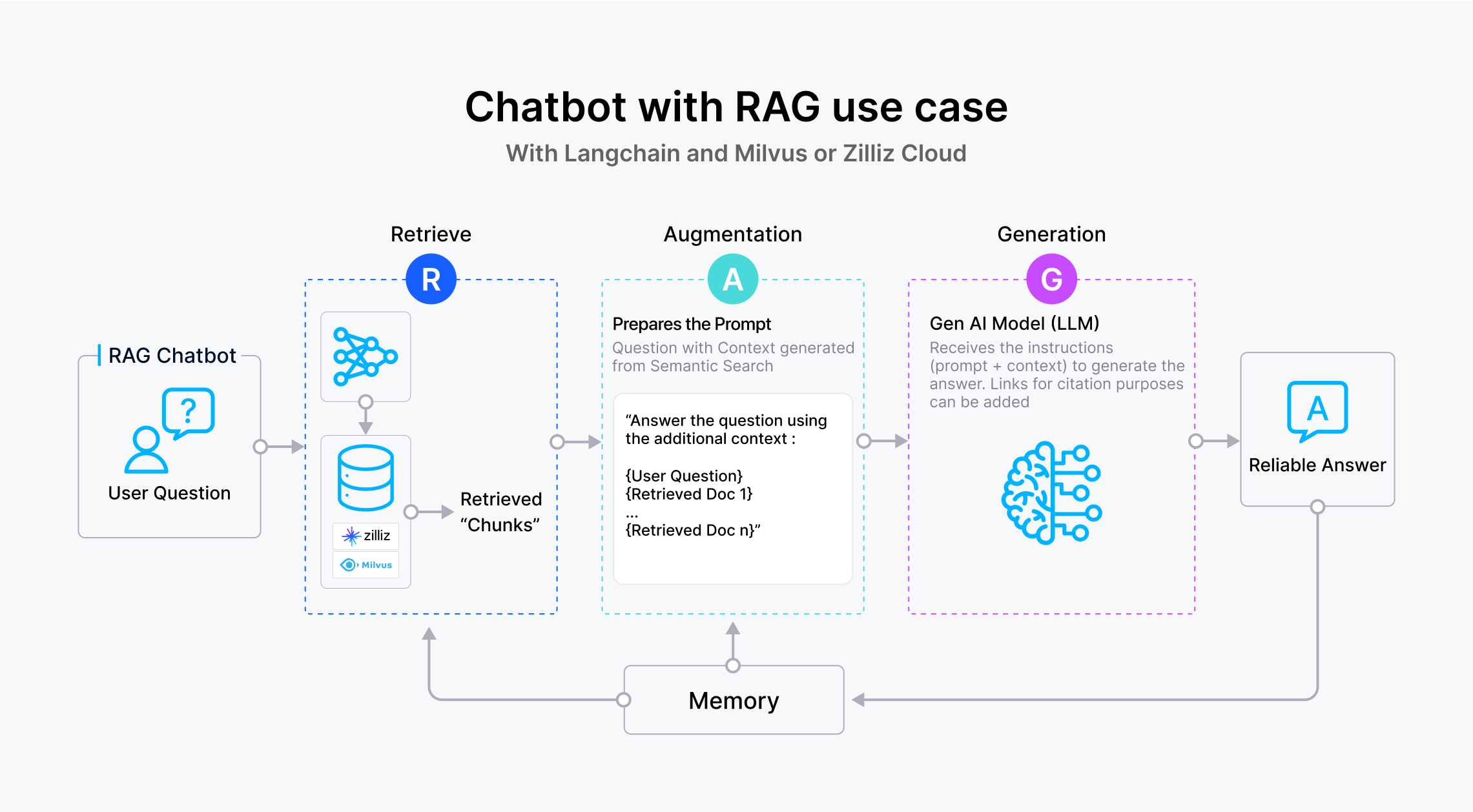

Chatbot with RAG use case with LangChain and Zilliz Cloud

The Chatbot builds on the RAG technique discussed earlier and adds the concept of "Memory". Unlike a standard RAG application, the RAG Chatbot can recall past conversations and incorporate them into the current interaction. For instance, if a user asks about a topic discussed previously, the Chatbot can retrieve a summary of that conversation and bring it into the ongoing dialogue. Because past chat sequences are stored in a vector database, the Chatbot can efficiently retrieve this history even if the conversation took place days or weeks earlier.
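A minimal sketch of this memory pattern follows, under simplifying assumptions: the hypothetical ConversationMemory class keeps past exchanges in a Python list instead of a vector database, and ranks them by word overlap (Jaccard similarity) instead of embedding similarity. At each new turn it recalls the most relevant past exchange and places it in the prompt that would be sent to the LLM.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


class ConversationMemory:
    """Hypothetical memory store: past (user, assistant) turns, searched by overlap."""

    def __init__(self) -> None:
        self.turns: list[tuple[str, str]] = []

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def recall(self, message: str, k: int = 1) -> list[tuple[str, str]]:
        query = words(message)
        scored = [(jaccard(query, words(f"{u} {a}")), (u, a)) for u, a in self.turns]
        scored = [item for item in scored if item[0] > 0]  # drop unrelated turns
        scored.sort(key=lambda item: item[0], reverse=True)
        return [turn for _, turn in scored[:k]]

    def build_prompt(self, message: str) -> str:
        history = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.recall(message))
        return f"Relevant past conversation:\n{history}\n\nUser: {message}\nAssistant:"


memory = ConversationMemory()
memory.save("How do I install LangChain?", "Run pip install langchain.")
memory.save("What is Milvus?", "Milvus is an open source vector database.")
prompt = memory.build_prompt("Tell me more about Milvus indexes.")
```

Only the Milvus exchange is pulled into the new prompt, because it is the only stored turn that shares words with the follow-up question.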

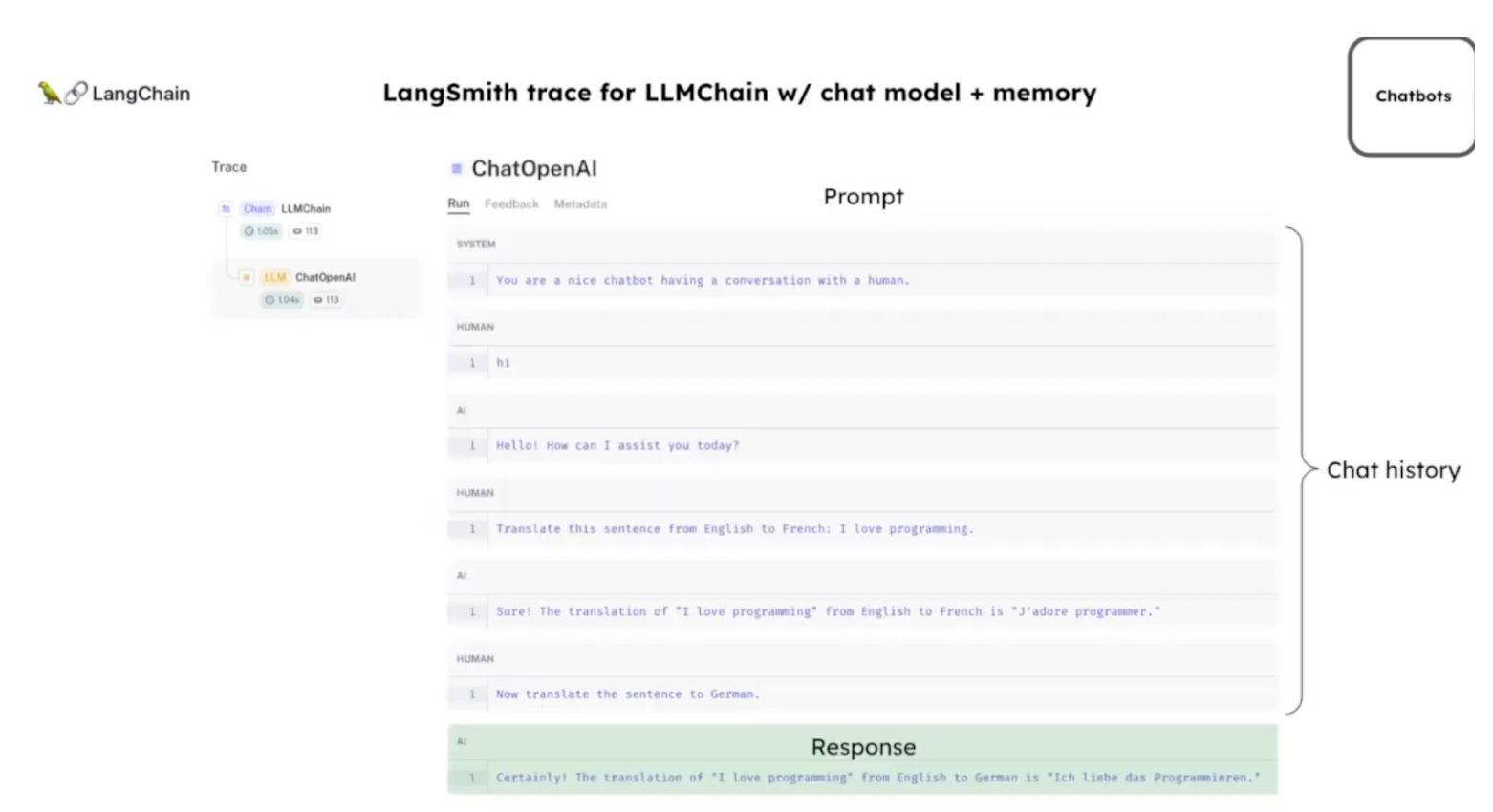

LangSmith trace for RAG Chatbot use case | Zilliz

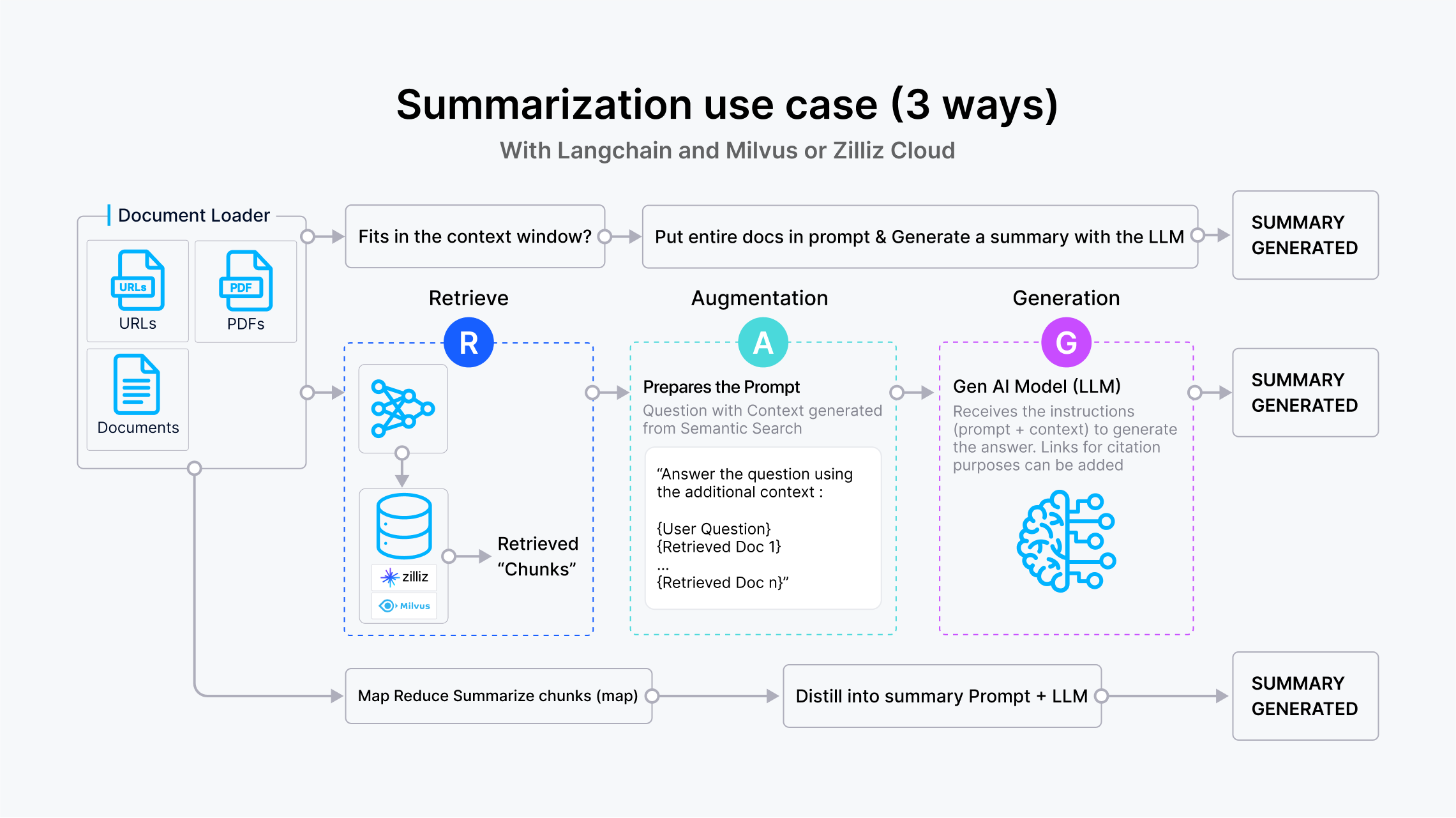

Summarization

Summarization use case with LangChain and Zilliz Cloud

For the Summarization use case, there are three approaches you can choose from, depending on the size of your content, to ensure a good user experience:

- If the content is small enough to fit in the LLM's context window, you can simply send it in its entirety to the LLM, along with a prompt asking for a summary.

- If the content is too large for the context window, you can split the text, generate embeddings, and store them in a vector database. You can then run a semantic similarity search and use the results with your prompt and LLM to generate the summary (similar to the RAG approach discussed above).

- Finally, you can use the Map Reduce approach available in LangChain: each chunk of the content is first summarized separately (map), and those distilled summaries are then combined and sent to the LLM with the prompt to produce the final summary (reduce).
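The choice between sending everything in one call and map-reduce can be sketched as follows. Everything here is a hypothetical placeholder: fake_llm_summarize stands in for an LLM call (it just keeps the first sentence), count_tokens approximates tokens by counting words, and context_window is whatever limit you pass in. The vector-search approach is not shown, and this is not LangChain's summarization chain.

```python
import re
from typing import Callable


def fake_llm_summarize(text: str) -> str:
    """Hypothetical placeholder for an LLM call: keeps only the first sentence."""
    return re.split(r"(?<=[.!?])\s+", text.strip())[0]


def count_tokens(text: str) -> int:
    """Rough assumption: one token per whitespace-separated word."""
    return len(text.split())


def summarize(
    chunks: list[str],
    context_window: int,
    llm: Callable[[str], str] = fake_llm_summarize,
) -> str:
    """Send everything in one call if it fits, otherwise map-reduce."""
    full_text = " ".join(chunks)
    if count_tokens(full_text) <= context_window:
        # Content fits: send it to the LLM in a single call.
        return llm(full_text)
    # Map: summarize each chunk on its own (these calls are independent).
    partial_summaries = [llm(chunk) for chunk in chunks]
    # Reduce: combine the partial summaries and summarize them once more.
    # A fuller version would repeat this step if the combined text is still too long.
    return llm(" ".join(partial_summaries))
```

Because the map calls do not depend on each other, a real implementation can run them in parallel, which is a large part of why map-reduce scales to long documents.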

LangChain & Zilliz Cloud and Milvus Resources

- Building an Open Source Chatbot Using LangChain and Milvus in Under 5 Minutes

- How LangChain Implements Self Querying

- Retrieval Augmented Generation on Notion Docs via LangChain

- Experimenting with Different Chunking Strategies via LangChain

- Using LangChain to Self-Query a Vector Database

- Prompting in LangChain

- Enhancing ChatGPT's Intelligence and Efficiency: The Power of LangChain and Milvus

- Query Multiple Documents Using LlamaIndex, LangChain, and Milvus

- GPTCache, LangChain, Strong Alliance

- Ultimate Guide to Getting Started with LangChain

- LangChain and Zilliz Cloud and Milvus Integration

Frequently Asked LangChain Questions (FAQs)

What does LangChain do?

LangChain is a framework designed to facilitate the development of applications powered by language models. It enables applications to be context-aware, connecting language models to various sources of context for grounded responses. Additionally, LangChain empowers applications to reason effectively by leveraging language models to make informed decisions based on the provided context.

What problem does LangChain solve?

LangChain addresses the challenge of developing applications that require sophisticated language understanding and reasoning capabilities. By providing a framework that seamlessly integrates with large language models and allows for contextual awareness, LangChain enables developers to build applications that can comprehend and respond to natural language input more effectively.

What is the difference between an LLM and LangChain?

While a Large Language Model (LLM) focuses on the language understanding and generation capabilities, LangChain serves as a framework that enables the integration and orchestration of LLMs within applications. In other words, LLMs provide the language processing capabilities, while LangChain provides the infrastructure and tools to harness and utilize these capabilities in various applications.
