A Beginner’s Guide to Using OpenAI Text Embedding Models

A comprehensive guide to using OpenAI text embedding models for embedding creation and semantic search.

Read the entire series

- Exploring BGE-M3 and Splade: Two Machine Learning Models for Generating Sparse Embeddings

- Comparing SPLADE Sparse Vectors with BM25

- Exploring ColBERT: A Token-Level Embedding and Ranking Model for Efficient Similarity Search

- Vectorizing and Querying EPUB Content with the Unstructured and Milvus

- What Are Binary Embeddings?

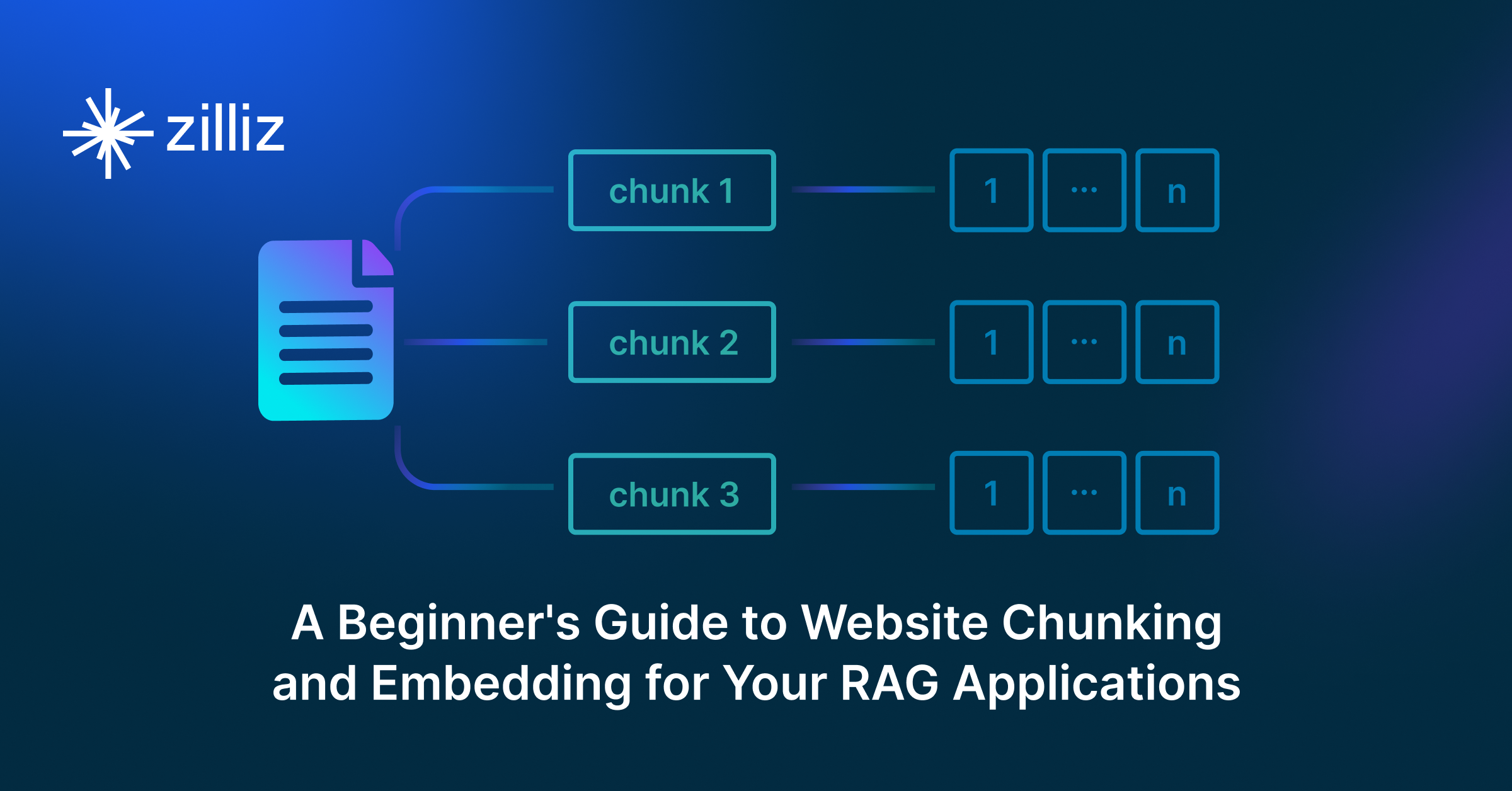

- A Beginner's Guide to Website Chunking and Embedding for Your RAG Applications

- An Introduction to Vector Embeddings: What They Are and How to Use Them

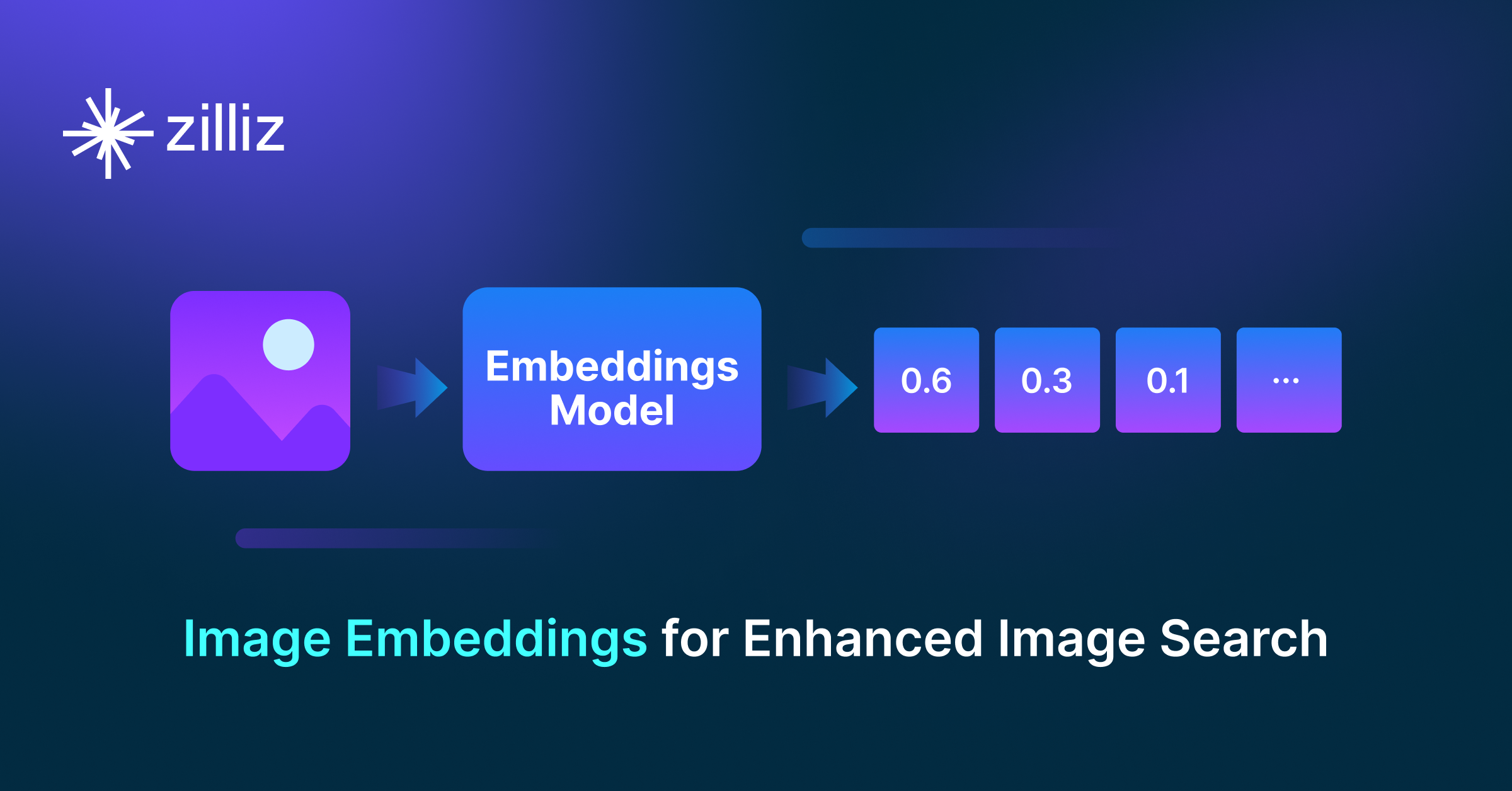

- Image Embeddings for Enhanced Image Search: An In-depth Explainer

- A Beginner’s Guide to Using OpenAI Text Embedding Models

- DistilBERT: A Distilled Version of BERT

- Unlocking the Power of Vector Quantization: Techniques for Efficient Data Compression and Retrieval

Introduction to Vector Embeddings and Embedding Models

Vector embeddings are a core concept in AI, representing complex, unstructured data—such as images, text, videos, or audio files—as numerical vectors that machines can understand and process. These vectorized forms capture semantic meaning and relationships within the data, enabling AI models to analyze, compare, and generate content more effectively. In natural language processing (NLP), words, sentences, or entire documents are converted into dense vectors, allowing algorithms to grasp not only individual meanings but also the contextual relationships between them.
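To make "capturing semantic meaning" concrete, here is a minimal sketch of how two embeddings are usually compared: by cosine similarity. The three-dimensional vectors below are made-up toy values chosen for illustration (real OpenAI embeddings have 1,536 or 3,072 dimensions), and the variable names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors, invented for this example; not produced by any real model.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
car = [0.1, 0.0, 0.9]

# Related concepts should score closer to 1.0 than unrelated ones.
print("cat vs kitten:", round(cosine_similarity(cat, kitten), 3))
print("cat vs car:   ", round(cosine_similarity(cat, car), 3))
```

A score near 1.0 means the vectors point in nearly the same direction, which is how semantically similar texts end up "close" to each other in embedding space.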

Embedding models are specialized algorithms designed to generate these vector representations. Trained on vast datasets, these models learn to encode the underlying patterns and relationships within the data, producing embeddings that are both accurate and contextually aware. OpenAI’s text embedding models, for instance, produce high-quality embeddings that can be applied across a wide range of tasks, including semantic search, clustering, recommendations, anomaly detection, diversity measurement, and classification.

One of the key applications of vector embeddings and embedding models is in building Retrieval Augmented Generation (RAG) systems. RAG combines the strengths of embeddings with generative AI, enabling more sophisticated and accurate responses by retrieving relevant information from vast databases stored in a vector database like Milvus before generating an answer. This approach is invaluable in situations where the AI needs to provide detailed, fact-based responses, making it an essential tool for businesses and developers alike.
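The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration under stated assumptions, not a production RAG pipeline: the in-memory list stands in for a vector database such as Milvus, the vectors are made-up values rather than real embeddings, and `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vector, indexed_docs, top_k=1):
    """Return the top_k texts whose vectors are most similar to the query."""
    ranked = sorted(
        indexed_docs,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


def build_prompt(question, passages):
    """Combine retrieved passages and the question into one prompt string."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


# Stand-in for a vector database: (text, toy vector) pairs with invented values.
indexed_docs = [
    ("Artificial intelligence was founded as an academic discipline in 1956.", [0.9, 0.1, 0.0]),
    ("Born in Maida Vale, London, Turing was raised in southern England.", [0.1, 0.9, 0.1]),
]

query_vector = [0.8, 0.2, 0.0]  # pretend embedding of the question below
passages = retrieve(query_vector, indexed_docs, top_k=1)
prompt = build_prompt("When was artificial intelligence founded?", passages)
print(prompt)  # this string would then be sent to a generative model
```

In a real system, the embedding model produces the vectors, the vector database performs the similarity search, and the assembled prompt goes to an LLM.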

In this guide, we’ll explore how to harness the power of OpenAI’s text embedding models, providing you with the tools and insights needed to build more intelligent, responsive AI systems.

OpenAI Text Embedding Models

OpenAI offers a suite of text embedding models tailored to various natural language processing (NLP) tasks. Among these, the legacy text-embedding-ada-002 and the latest text-embedding-3-small and text-embedding-3-large models stand out. Released on January 25, 2024, the text-embedding-3-small and text-embedding-3-large models represent significant advancements in the field, building upon the strong foundation laid by text-embedding-ada-002. The following table provides a quick comparison of these three models, highlighting their key features:

| Model | Description | Output Dimension | Max Input (tokens) | Price |
| --- | --- | --- | --- | --- |
| text-embedding-3-large | Most capable embedding model for both English and non-English tasks | 3,072 | 8,191 | $0.13 / 1M tokens |
| text-embedding-3-small | Increased performance over 2nd generation ada embedding model | 1,536 | 8,191 | $0.02 / 1M tokens |
| text-embedding-ada-002 | Most capable 2nd generation embedding model, replacing 16 first generation models | 1,536 | 8,191 | $0.10 / 1M tokens |

Table: Comparing OpenAI’s text embedding models
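Because pricing is quoted per million tokens, a quick back-of-the-envelope calculation helps when budgeting. The sketch below uses the $0.13 / 1M tokens price listed for text-embedding-3-large; the corpus size (10,000 documents of roughly 500 tokens each) is a hypothetical workload, and `embedding_cost_usd` is an illustrative helper, not part of any SDK.

```python
def embedding_cost_usd(num_tokens, price_per_million_tokens):
    """Estimate the cost of embedding num_tokens at a per-million-token price."""
    return num_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical workload: 10,000 documents averaging 500 tokens each.
total_tokens = 10_000 * 500

# Price for text-embedding-3-large taken from the comparison table.
cost = embedding_cost_usd(total_tokens, price_per_million_tokens=0.13)
print(f"{total_tokens:,} tokens -> ${cost:.2f}")
```

Prices change over time, so treat the figure as an input to check against OpenAI's current pricing page rather than a constant.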

text-embedding-3-large is designed for high-precision tasks, where capturing the nuances of language is critical. With a larger embedding dimension of 3,072, it can encode detailed semantic information, making it ideal for complex applications such as deep semantic search, advanced recommendation systems, and sophisticated text analysis.

text-embedding-3-small strikes an excellent balance between performance and resource efficiency. With an output dimension of 1,536, half that of text-embedding-3-large, it offers improved performance over the older text-embedding-ada-002 at the same vector size. This model is particularly well-suited for real-time applications or use cases where computational resources are limited, providing high accuracy without the storage and compute overhead of larger embeddings.

text-embedding-ada-002 was the leading performer among OpenAI's embedding models before the introduction of the above two newer models. It is known for its capability to handle a wide range of NLP tasks with a balanced approach. With an output dimension of 1,536, it offers a significant upgrade over its predecessors by providing high-quality embeddings suitable for various applications, including semantic search, classification, and clustering.
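The output dimension directly affects how much space the vectors occupy. The rough estimate below assumes each dimension is stored as a 4-byte float32 and ignores index structures and metadata, so real storage use will be higher; the one-million-vector corpus is a hypothetical figure.

```python
BYTES_PER_FLOAT32 = 4  # assumption: vectors are stored as 32-bit floats


def raw_vector_storage_bytes(num_vectors, dimension):
    """Raw size of the vector data alone, excluding indexes and metadata."""
    return num_vectors * dimension * BYTES_PER_FLOAT32


# Output dimensions as listed in the model comparison.
model_dimensions = {
    "text-embedding-3-large": 3072,
    "text-embedding-3-small": 1536,
    "text-embedding-ada-002": 1536,
}

for model_name, dim in model_dimensions.items():
    size_gib = raw_vector_storage_bytes(1_000_000, dim) / 1024**3
    print(f"{model_name}: {size_gib:.2f} GiB per million vectors")
```

Doubling the dimension doubles the raw footprint, which is one reason the smaller model is attractive when resources are limited.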

Creating Embeddings with OpenAI Text Embedding Models

There are two primary ways to create vector embeddings with OpenAI text embedding models.

- PyMilvus: the Python SDK for the Milvus vector database, which seamlessly integrates with models like text-embedding-ada-002.

- OpenAI Library: the Python SDK offered by OpenAI.

In the following sections, we'll use PyMilvus as an example to demonstrate how to create embeddings with OpenAI’s text embedding models. For more details on creating embeddings using the OpenAI Library, we recommend referring to our AI model page or consulting OpenAI’s documentation.
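For readers who prefer the OpenAI Library route, here is a minimal sketch. It assumes the `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable; `embed_texts` is a hypothetical helper name, and the client is passed in as a parameter so the function is easy to exercise without a network call.

```python
def embed_texts(client, texts, model="text-embedding-3-small"):
    """Return one embedding (a list of floats) per input text.

    `client` is expected to be an `openai.OpenAI` instance.
    """
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


# Example usage (requires `pip install openai` and a valid API key):
#
#   from openai import OpenAI
#   client = OpenAI()  # reads OPENAI_API_KEY from the environment
#   vectors = embed_texts(client, ["Where was Alan Turing born?"])
#   print(len(vectors[0]))  # dimension of the returned embedding
```

The returned vectors can be inserted into a vector database in the same way as the PyMilvus-generated embeddings shown in the following sections.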

Once the vector embeddings are generated, they can be stored in Zilliz Cloud (a fully managed vector database service powered by Milvus) and used for semantic similarity search. Here are four key steps to get started quickly.

Sign up for a Zilliz Cloud account for free.

Set up a serverless cluster and obtain the Public Endpoint and API Key.

Create a vector collection and insert your vector embeddings.

Run a semantic search on the stored embeddings.

Create embeddings with text-embedding-ada-002 and insert them into Zilliz Cloud for semantic search

from pymilvus.model.dense import OpenAIEmbeddingFunction
from pymilvus import MilvusClient

OPENAI_API_KEY = "your-openai-api-key"
# Placeholders: replace with your cluster's Public Endpoint and API Key
ZILLIZ_PUBLIC_ENDPOINT = "your-zilliz-public-endpoint"
ZILLIZ_API_KEY = "your-zilliz-api-key"

ef = OpenAIEmbeddingFunction("text-embedding-ada-002", api_key=OPENAI_API_KEY)

docs = [
    "Artificial intelligence was founded as an academic discipline in 1956.",
    "Alan Turing was the first person to conduct substantial research in AI.",
    "Born in Maida Vale, London, Turing was raised in southern England."
]

# Generate embeddings for documents
docs_embeddings = ef(docs)

queries = ["When was artificial intelligence founded?",
           "Where was Alan Turing born?"]

# Generate embeddings for queries
query_embeddings = ef(queries)

# Connect to Zilliz Cloud with Public Endpoint and API Key
client = MilvusClient(
    uri=ZILLIZ_PUBLIC_ENDPOINT,
    token=ZILLIZ_API_KEY)

COLLECTION = "documents"

# Start from a clean collection whose dimension matches the model's output
if client.has_collection(collection_name=COLLECTION):
    client.drop_collection(collection_name=COLLECTION)
client.create_collection(
    collection_name=COLLECTION,
    dimension=ef.dim,
    auto_id=True)

# Insert each document together with its embedding
for doc, embedding in zip(docs, docs_embeddings):
    client.insert(COLLECTION, {"text": doc, "vector": embedding})

# Search the collection with the query embeddings
results = client.search(
    collection_name=COLLECTION,
    data=query_embeddings,
    consistency_level="Strong",
    output_fields=["text"])
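The example above inserts one document per call, which is fine for three documents. For larger collections, a common alternative is to build all rows first and pass them to `client.insert` in a single call, since `MilvusClient.insert` also accepts a list of dictionaries. The helper below is a hypothetical sketch of that row-building step; the field names `text` and `vector` match the ones used in this guide.

```python
def build_rows(docs, embeddings):
    """Pair each document with its embedding as a row ready for insertion."""
    if len(docs) != len(embeddings):
        raise ValueError("docs and embeddings must have the same length")
    return [{"text": doc, "vector": vec} for doc, vec in zip(docs, embeddings)]


# Toy data so the helper can be run without an API key; real vectors would
# come from the embedding function.
example_rows = build_rows(["first doc", "second doc"], [[0.1, 0.2], [0.3, 0.4]])
print(example_rows)

# With a live client, the batch insert would look like:
#   client.insert(collection_name=COLLECTION, data=build_rows(docs, docs_embeddings))
```

Batching reduces the number of round trips to the server, though very large batches may need to be split into chunks.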

Create embeddings with text-embedding-3-small and insert them into Zilliz Cloud for semantic search

from pymilvus import model, MilvusClient

OPENAI_API_KEY = "your-openai-api-key"
# Placeholders: replace with your cluster's Public Endpoint and API Key
ZILLIZ_PUBLIC_ENDPOINT = "your-zilliz-public-endpoint"
ZILLIZ_API_KEY = "your-zilliz-api-key"

ef = model.dense.OpenAIEmbeddingFunction(
    model_name="text-embedding-3-small",
    api_key=OPENAI_API_KEY,
)

# Generate embeddings for documents
docs = [
    "Artificial intelligence was founded as an academic discipline in 1956.",
    "Alan Turing was the first person to conduct substantial research in AI.",
    "Born in Maida Vale, London, Turing was raised in southern England."
]
docs_embeddings = ef.encode_documents(docs)

# Generate embeddings for queries
queries = ["When was artificial intelligence founded?",
           "Where was Alan Turing born?"]
query_embeddings = ef.encode_queries(queries)

# Connect to Zilliz Cloud with Public Endpoint and API Key
client = MilvusClient(
    uri=ZILLIZ_PUBLIC_ENDPOINT,
    token=ZILLIZ_API_KEY)

COLLECTION = "documents"

# Start from a clean collection whose dimension matches the model's output
if client.has_collection(collection_name=COLLECTION):
    client.drop_collection(collection_name=COLLECTION)
client.create_collection(
    collection_name=COLLECTION,
    dimension=ef.dim,
    auto_id=True)

# Insert each document together with its embedding
for doc, embedding in zip(docs, docs_embeddings):
    client.insert(COLLECTION, {"text": doc, "vector": embedding})

# Search the collection with the query embeddings
results = client.search(
    collection_name=COLLECTION,
    data=query_embeddings,
    consistency_level="Strong",
    output_fields=["text"])

Create embeddings with text-embedding-3-large and insert them into Zilliz Cloud for semantic search

from pymilvus.model.dense import OpenAIEmbeddingFunction
from pymilvus import MilvusClient

OPENAI_API_KEY = "your-openai-api-key"
# Placeholders: replace with your cluster's Public Endpoint and API Key
ZILLIZ_PUBLIC_ENDPOINT = "your-zilliz-public-endpoint"
ZILLIZ_API_KEY = "your-zilliz-api-key"

ef = OpenAIEmbeddingFunction("text-embedding-3-large", api_key=OPENAI_API_KEY)

docs = [
    "Artificial intelligence was founded as an academic discipline in 1956.",
    "Alan Turing was the first person to conduct substantial research in AI.",
    "Born in Maida Vale, London, Turing was raised in southern England."
]

# Generate embeddings for documents
docs_embeddings = ef(docs)

queries = ["When was artificial intelligence founded?",
           "Where was Alan Turing born?"]

# Generate embeddings for queries
query_embeddings = ef(queries)

# Connect to Zilliz Cloud with Public Endpoint and API Key
client = MilvusClient(
    uri=ZILLIZ_PUBLIC_ENDPOINT,
    token=ZILLIZ_API_KEY)

COLLECTION = "documents"

# Start from a clean collection whose dimension matches the model's output
if client.has_collection(collection_name=COLLECTION):
    client.drop_collection(collection_name=COLLECTION)
client.create_collection(
    collection_name=COLLECTION,
    dimension=ef.dim,
    auto_id=True)

# Insert each document together with its embedding
for doc, embedding in zip(docs, docs_embeddings):
    client.insert(COLLECTION, {"text": doc, "vector": embedding})

# Search the collection with the query embeddings
results = client.search(
    collection_name=COLLECTION,
    data=query_embeddings,
    consistency_level="Strong",
    output_fields=["text"])
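Each of the examples above ends with a `results` object but does not show how to read it. The sketch below assumes that `MilvusClient.search` returns one list of hits per query and that each hit can be read like a dictionary with `distance` and `entity` keys, which matches recent PyMilvus releases but is worth verifying against the version you have installed. The sample data and its scores are invented so the helper can run without a cluster; `summarize_results` is a hypothetical name.

```python
def summarize_results(queries, results):
    """Map each query to a list of (matched text, score) pairs."""
    summary = {}
    for query, hits in zip(queries, results):
        summary[query] = [(hit["entity"]["text"], hit["distance"]) for hit in hits]
    return summary


# Made-up search output in the assumed shape: one list of hits per query.
sample_queries = ["Where was Alan Turing born?"]
sample_results = [[
    {"id": 3, "distance": 0.62,
     "entity": {"text": "Born in Maida Vale, London, Turing was raised in southern England."}},
    {"id": 2, "distance": 0.41,
     "entity": {"text": "Alan Turing was the first person to conduct substantial research in AI."}},
]]

for query, matches in summarize_results(sample_queries, sample_results).items():
    print(query)
    for text, score in matches:
        print(f"  {score:.2f}  {text}")
```

With a live cluster, you would call `summarize_results(queries, results)` on the actual search output instead of the sample data.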

Comparing OpenAI Text Embedding Models with Other Popular Models

With rapid advances in natural language processing (NLP), new embedding models are continually emerging, each pushing the boundaries of performance. The Hugging Face MTEB leaderboard is a valuable resource for tracking the latest developments in this field. Below, we list some of the most recent and notable embedding models that perform on par with OpenAI models.

Voyage-large-2-instruct: An instruction-tuned embedding model optimized for clustering, classification, and retrieval tasks. It excels in tasks involving direct instructions or queries.

Cohere Embed v3: Designed for evaluating query-document relevance and content quality. It improves document ranking and handles noisy datasets effectively.

Google Gecko: A compact model that distills knowledge from large language models for strong retrieval performance while maintaining efficiency.

Mxbai-embed-2d-large-v1: Features a dual reduction strategy, reducing both layers and embedding dimensions for a more compact model with competitive performance.

Nomic-embed-text-v1: An open-source, reproducible embedding model that emphasizes transparency and accessibility with open training code and data.

BGE-M3: Supports over 100 languages and excels in multilingual and cross-lingual retrieval tasks. It handles dense, multi-vector, and sparse retrieval within a single framework.

Check out our AI model page for more information about embedding models and how to use them.

Conclusion

This guide explored OpenAI's latest text embedding models, particularly text-embedding-3-small and text-embedding-3-large, which mark significant improvements over text-embedding-ada-002. These advanced models offer enhanced performance in tasks such as semantic search, real-time processing, and high-precision applications.

Additionally, we demonstrated how to leverage PyMilvus, a Python SDK for Milvus, to generate vector embeddings with OpenAI’s models and perform semantic searches using Zilliz Cloud, a fully managed service of Milvus.

