Generative AI Uncovered: How Machines Now Understand and Generate Text, Images, and Ideas

TL;DR: Generative AI (GenAI) refers to a subset of artificial intelligence technologies designed to create new content, ranging from text and images to music and videos. It operates by learning patterns and features from vast amounts of data and then using this knowledge to generate original outputs. Key GenAI examples include text generators like GPT (Generative Pre-trained Transformer), image creators like DALL-E, and audio synthesis systems. These AI models are particularly valued for their ability to automate creative tasks, enhance productivity, and foster innovation across various industries. However, they also pose challenges, such as the potential for generating misleading information and ethical concerns related to copyright and authorship.

Imagine a world in which machines do more than follow commands: they write stories, compose music, and produce artwork. Generative AI is making this a reality, pushing the limits of creativity and technology.

In this article, we'll look at how generative AI works, where it is used today, and the ethical issues it raises.

What Is Generative AI?

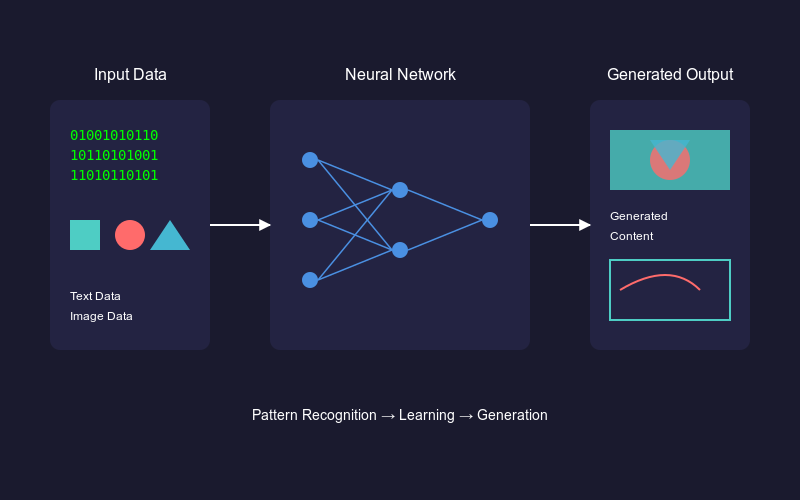

Generative AI, or GenAI, refers to deep learning models that enable computers to create new content based on patterns learned from training data. Conventional discriminative models concentrate on identifying patterns to carry out tasks like regression or classification. Generative AI goes one step further: it models the relationships within data such as sounds, images, and text, and uses those relationships to create new material instead of merely classifying or predicting.

For instance, when trained on thousands of portraits, generative AI learns facial characteristics—such as feature arrangement and lighting styles—allowing it to create entirely new but realistic-looking portraits. In text generation, generative AI analyzes large volumes of text to capture flow, tone, and word choices, which it then uses to construct original sentences or stories.

Popular generative models include Claude and GPT-4 for text generation, Midjourney and DALL-E 3 for image generation from text prompts, and Jukedeck, which composes original music by applying learned patterns.

Figure: The Architecture of Generative AI: From Data to Creation

How Generative AI Works

At its core, Generative AI learns from vast amounts of data to grasp underlying patterns and relationships. Here's how it operates in practice.

Learning Data Patterns and Distribution

Generative models examine large datasets, such as text documents, audio recordings, or photos, to determine how various features coexist. In natural language processing (NLP), a model learns how words fit together to create sentences and express meaning. Because the model captures these statistical regularities, it can produce content that reads naturally and fits the context.

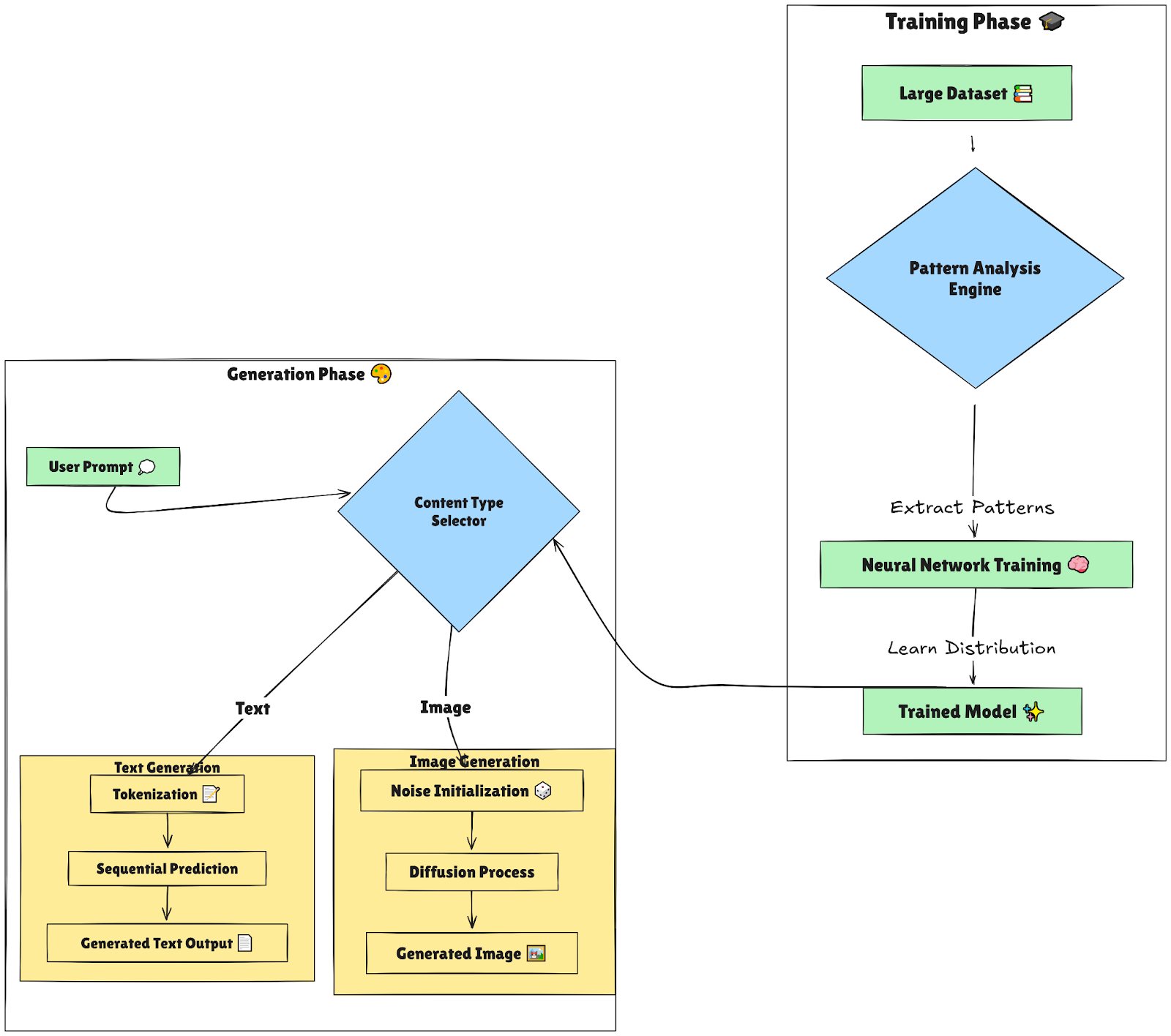

Generating New Data

Once the model has internalized these patterns, it can start producing new content:

Using Random Noise (for Images): Diffusion models, which power many image generators, start from random noise and apply a series of denoising steps to produce a coherent image. This denoising process allows them to produce unique visuals that still reflect the patterns learned from the training data.

Tokenization (for Text): In text generation, models break text into tokens, which may be whole words, pieces of words, or single characters. By repeatedly predicting the next token in a sequence, the model builds sentences that flow logically from one to the next.
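The tokenization and next-token idea can be sketched with a toy example. This is a minimal illustration, not how production models work: it assumes whitespace-separated words as tokens and estimates next-token probabilities from simple counts (a bigram model), whereas real LLMs use subword tokenizers and neural networks. The corpus and the helper `next_token_probs` are made up for this sketch.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real models train on billions of tokens.
corpus = "the cat sat on the mat . the cat ate the fish ."

# Tokenize: here a token is simply a whitespace-separated word.
tokens = corpus.split()

# Map each distinct token to an integer ID, as tokenizers do.
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
token_ids = [vocab[tok] for tok in tokens]

# Count which token follows which (a bigram model).
following = defaultdict(Counter)
for current, nxt in zip(tokens, tokens[1:]):
    following[current][nxt] += 1


def next_token_probs(token):
    """Estimate the probability of each token observed after `token`."""
    counts = following[token]
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()}


probs = next_token_probs("the")
print(probs)  # "cat" is the most likely token after "the" in this corpus
```

Generating text then amounts to picking a token from this distribution, appending it, and repeating.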

Figure: Generative AI workflow

Types of Generative AI Models

Several types of models fall under the generative AI umbrella, and their mechanisms for generating new data differ considerably.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks, or GANs, are among the most influential approaches in generative AI. At their core, GANs consist of two neural networks locked in a creative duel. The first, called the generator, attempts to produce data that mimics the training dataset, such as realistic images or lifelike videos. The second, known as the discriminator, acts as a critic, trying to distinguish between real data and the generator's creations. Through this adversarial process, the generator improves over time, learning to create data so realistic that even the discriminator is fooled. GANs have been used to generate hyper-realistic images, create deepfakes, and augment data for machine learning tasks. Applications like StyleGAN, which generates highly detailed human faces, and CycleGAN, which translates images from one domain to another (such as turning photos into paintings), showcase the potential of this technology.
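The adversarial objective can be illustrated numerically. The sketch below assumes the common binary cross-entropy formulation with the non-saturating generator loss; the discriminator scores are made-up numbers rather than outputs of a trained network, and the function names are chosen for this example only.

```python
import numpy as np


def binary_cross_entropy(predictions, targets):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    eps = 1e-12
    predictions = np.clip(predictions, eps, 1 - eps)
    return float(-np.mean(targets * np.log(predictions)
                          + (1 - targets) * np.log(1 - predictions)))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants to score real data as 1 and generated data as 0."""
    return (binary_cross_entropy(d_real, np.ones_like(d_real))
            + binary_cross_entropy(d_fake, np.zeros_like(d_fake)))


def generator_loss(d_fake):
    """The generator wants the discriminator to score its output as 1."""
    return binary_cross_entropy(d_fake, np.ones_like(d_fake))


# Hypothetical discriminator scores (probability that a sample is real).
d_real = np.array([0.90, 0.80, 0.95])
early_fake = np.array([0.10, 0.20, 0.05])  # early training: fakes are easy to spot
late_fake = np.array([0.50, 0.45, 0.55])   # later: fakes are hard to tell apart

print("generator loss early:", generator_loss(early_fake))
print("generator loss late: ", generator_loss(late_fake))
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the tug-of-war the paragraph describes.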

Variational Autoencoders (VAEs)

While GANs rely on competition, Variational Autoencoders (VAEs) take a more structured approach to generative AI. VAEs encode input data into a compressed latent space and then decode it back to reconstruct the original or create new variations. What sets VAEs apart is their probabilistic approach to encoding, ensuring that the latent space is smooth and continuous. This makes VAEs ideal for generating variations of data, such as morphing one face into another or interpolating between different objects. Beyond generation, VAEs are also used for tasks like data compression and anomaly detection. For instance, they can model "normal" data patterns and highlight deviations, which is useful in identifying fraud or outliers in datasets.
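The probabilistic encoding and the smooth latent space can be sketched in a few lines. This is a minimal illustration under stated assumptions: the encoder outputs (`mu_*`, `log_var_*`) are hypothetical values rather than the result of a trained network, and no decoder is included. It shows the reparameterization trick used to sample a latent code, the KL term that keeps the latent space close to a standard normal, and linear interpolation between two codes.

```python
import numpy as np

rng = np.random.default_rng(0)


def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, I), summed over dimensions."""
    return float(-0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var)))


def interpolate(z_a, z_b, steps):
    """Evenly spaced points on the straight line between two latent codes."""
    weights = np.linspace(0.0, 1.0, steps)
    return np.array([(1 - w) * z_a + w * z_b for w in weights])


# Hypothetical encoder outputs for two inputs (for example, two faces).
mu_a, log_var_a = np.array([1.0, -1.0]), np.array([-2.0, -2.0])
mu_b, log_var_b = np.array([-1.0, 2.0]), np.array([-2.0, -2.0])

z_a = sample_latent(mu_a, log_var_a, rng)
z_b = sample_latent(mu_b, log_var_b, rng)

# Decoding each point on this path would morph input A into input B.
path = interpolate(z_a, z_b, steps=5)
print(path)
```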

Diffusion Models

Diffusion models represent a new wave of generative AI, offering remarkable results in tasks like image generation. These models are inspired by the natural diffusion process, where order is lost over time, like a drop of ink spreading in water. Diffusion models learn to reverse this process: starting with random noise, they gradually refine the data until a coherent and realistic output emerges. This iterative approach allows for the generation of highly detailed and complex data. The rise of diffusion models has been marked by applications like Stable Diffusion and DALL·E 2, which have redefined what's possible in image synthesis, including generating detailed visuals from simple text descriptions.
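The forward (noising) half of this process is simple enough to sketch directly. The example below assumes a linear noise schedule and a 1-D sine wave as a stand-in for an image; both are illustrative choices. A real diffusion model trains a neural network to predict the noise, whereas here the true noise is passed in to show what a perfect prediction would recover.

```python
import numpy as np

rng = np.random.default_rng(0)

num_steps = 1000
betas = np.linspace(1e-4, 0.02, num_steps)  # linear noise schedule (assumption)
alpha_bars = np.cumprod(1.0 - betas)        # fraction of signal variance left at step t


def add_noise(x0, t, noise):
    """Forward process: blend the clean signal with Gaussian noise at step t."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def estimate_x0(x_t, t, predicted_noise):
    """Invert the forward step, given a prediction of the noise that was added."""
    return (x_t - np.sqrt(1.0 - alpha_bars[t]) * predicted_noise) / np.sqrt(alpha_bars[t])


x0 = np.sin(np.linspace(0, 2 * np.pi, 64))  # toy "image": a 1-D signal
noise = rng.standard_normal(x0.shape)

for t in (0, 250, 999):
    x_t = add_noise(x0, t, noise)
    print(f"step {t:4d}: signal weight = {np.sqrt(alpha_bars[t]):.4f}")

# With a perfect noise prediction, the clean signal is recovered.
recovered = estimate_x0(add_noise(x0, 500, noise), 500, noise)
```

The signal weight shrinks toward zero as `t` grows, which is the "ink spreading in water" effect; generation runs the other way, removing predicted noise step by step.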

Autoregressive Models

Autoregressive models are ideal for situations where sequential data is key, such as text, music, or speech. These models generate a sequence one element at a time, feeding each output back in as input for the next prediction. This sequential nature makes autoregressive models well suited to tasks like text generation, where coherence and context are crucial. For example, models like GPT (Generative Pre-trained Transformer) can write essays, stories, and even code snippets, mimicking human creativity. In audio, WaveNet leverages the same principle to produce lifelike speech and high-quality audio synthesis. The ability to generate coherent, context-aware content makes autoregressive models indispensable in natural language processing and generative tasks.
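The generation loop itself can be sketched as follows. This is a deliberately simplified illustration: the hand-written table `NEXT_TOKEN_PROBS` is a hypothetical stand-in for a trained model, it conditions only on the last token (real models such as GPT condition on the whole preceding sequence), and it uses greedy decoding instead of sampling.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"model": 0.6, "text": 0.4},
    "model": {"writes": 0.9, "<end>": 0.1},
    "writes": {"text": 0.7, "the": 0.3},
    "text": {"<end>": 0.8, "the": 0.2},
}


def generate(max_tokens=10):
    """Build a sequence one token at a time, feeding each output back in."""
    sequence = ["<start>"]
    while len(sequence) - 1 < max_tokens:
        candidates = NEXT_TOKEN_PROBS[sequence[-1]]
        # Greedy decoding: always take the most probable next token.
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        sequence.append(next_token)
    return sequence[1:]  # drop the <start> marker


print(" ".join(generate()))  # prints "the model writes text"
```

Replacing the greedy `max` with random sampling from `candidates` would yield varied outputs from the same starting point.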

Transformers

Transformer-based models are the backbone of modern generative AI, powered by the attention mechanism that allows them to focus on relevant input and capture long-range dependencies. Their versatility spans multiple domains, from generating human-like text (e.g., GPT-4) to creating stunning visuals (e.g., DALL·E) and processing audio (e.g., Whisper). Transformers are good at performing tasks like text generation, image synthesis, and multimodal applications by handling data efficiently and contextually. Unlike domain-specific models, transformers are adaptable to various data types, making them indispensable in applications ranging from conversational AI to creative tools, solidifying their role as a cornerstone of generative AI innovation.
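The attention mechanism at the heart of transformers can be written compactly. The sketch below implements scaled dot-product attention in NumPy; as a simplification it applies self-attention directly to random input vectors and omits the learned query, key, and value projections, multiple heads, and masking that real transformers use.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(queries, keys, values):
    """Each query position takes a weighted average of the values.

    Weights come from query-key similarity, scaled by sqrt(d_k).
    """
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ values, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.standard_normal((seq_len, d_model))  # stand-in for 4 token embeddings

# Self-attention: every position can attend to every other position.
output, weights = scaled_dot_product_attention(x, x, x)
print(weights.round(2))  # row i shows how much position i attends to each position
```

Because every position attends to every other in a single step, distant tokens can influence each other directly, which is how long-range dependencies are captured.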

Generative AI continues to evolve, with each type of model bringing unique strengths and capabilities to a variety of creative and practical applications. The choice depends on your specific needs and the application you build—whether generating lifelike images, composing music, or writing compelling narratives.

Comparison with Traditional AI Models

Generative AI is distinct from traditional AI approaches. Here’s how these strategies compare:

| Aspect | Generative AI | Discriminative AI |
| --- | --- | --- |
| Objective | Create new data that resembles training data | Classify or predict outcomes based on input data |
| Data Handling | Learns the entire distribution of data | Learns decision boundaries between classes |
| Examples | GANs, VAEs, Transformers, Diffusion Models | CNNs, SVMs, Random Forests, Logistic Regression |
| Typical Applications | Image synthesis, text generation, audio composition | Image classification, object detection, text classification |
| Training Requirements | Large datasets with detailed features and patterns | Labeled datasets with clear distinctions between classes |
| Complexity | Often requires higher computational resources | Typically less computationally demanding |
| Strengths | Enables creative content generation and realistic synthesis | High accuracy in classification and prediction tasks |
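The "Objective" and "Data Handling" rows can be made concrete with a toy one-feature dataset. This is a minimal sketch under simplifying assumptions: the generative side fits a single Gaussian to one class and samples from it, and the discriminative side is a one-threshold classifier (a decision stump) chosen by minimizing training error. Real models of either kind are far richer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy labeled data: one feature, two classes.
class_a = rng.normal(loc=2.0, scale=0.5, size=500)
class_b = rng.normal(loc=5.0, scale=0.5, size=500)
features = np.concatenate([class_a, class_b])
labels = np.array([0] * 500 + [1] * 500)

# Generative view: model the distribution of a class, then sample new data.
mean_a, std_a = class_a.mean(), class_a.std()
new_samples = rng.normal(mean_a, std_a, size=5)

# Discriminative view: learn only the boundary that separates the classes.
candidates = np.linspace(features.min(), features.max(), 200)
errors = [np.mean((features > c).astype(int) != labels) for c in candidates]
threshold = candidates[int(np.argmin(errors))]


def classify(x):
    """Return 0 (class A) or 1 (class B) based on the learned threshold."""
    return int(x > threshold)


print("new class-A samples:", new_samples.round(2))
print("decision threshold: ", round(float(threshold), 2))
```

The generative model can produce new data points but needs a description of the whole distribution; the discriminative model stores only a boundary and cannot generate anything.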

Generative AI: Benefits and Real-World Challenges

With its creative approaches to problem-solving, design, and creation, generative AI has emerged as a useful tool for professionals in various fields. By letting people draft text, generate visuals, and experiment with music or code, it changes how they work. Yet, despite these benefits, there are real challenges associated with generative AI.

Benefits

Automated Content Creation: Generative AI supports creative tasks in writing, design, and music. Writers use it for drafting ideas, and designers create patterns to jumpstart projects. Musicians can also experiment with new compositions before recording. This speeds up the creative process while leaving space for human touches.

Personalized Experiences: Generative AI helps make tailored recommendations that match user interests. It analyzes past behavior to create relevant ads and content. In marketing and e-commerce, this personalized touch enhances audience connection.

Inspiring New Ideas: Generative AI sparks fresh ideas, especially in research and product design. It can propose new compounds in fields like pharmaceuticals. This AI-driven creativity offers starting points that experts can refine further.

Creating Additional Data: Generative AI can create synthetic data for areas where real data is scarce or costly. This is valuable in fields like healthcare, where it can supplement the training data for diagnostic models. Synthetic data can help improve models as long as its quality is checked against real examples.

Challenges

Hallucinations: This refers to the phenomenon where a model generates incorrect, fabricated, or misleading information that is presented as factual or accurate.

High Demands on Data and Computing Power: Generative AI requires large datasets and advanced computing. High-resolution tasks, like image generation, need powerful hardware and long training times. These demands can limit access for smaller creators and companies.

Ensuring Quality and Consistency: Producing high-quality content with generative AI can be challenging. Models may struggle with consistency or create repetitive outputs. In fields like medical imaging, maintaining accuracy is essential.

Ethical Considerations: Generative AI raises ethical concerns, including biases and potential misuse. Deepfakes, for example, can create deceptive content. Monitoring AI outputs carefully is key to preventing misinformation and unfair practices.

Privacy and Data Security: Generative AI relies on large datasets, which can risk privacy. Sensitive information, if mishandled, might be repeated by models. Strong privacy safeguards are essential, especially in sectors like healthcare.

Need for Clear Regulations: As generative AI grows, so does the need for regulation. Ethical standards and guidelines help ensure AI benefits society. Clear rules reduce misuse, such as spreading misinformation or generating spam.

Retrieval Augmented Generation (RAG) and GenAI

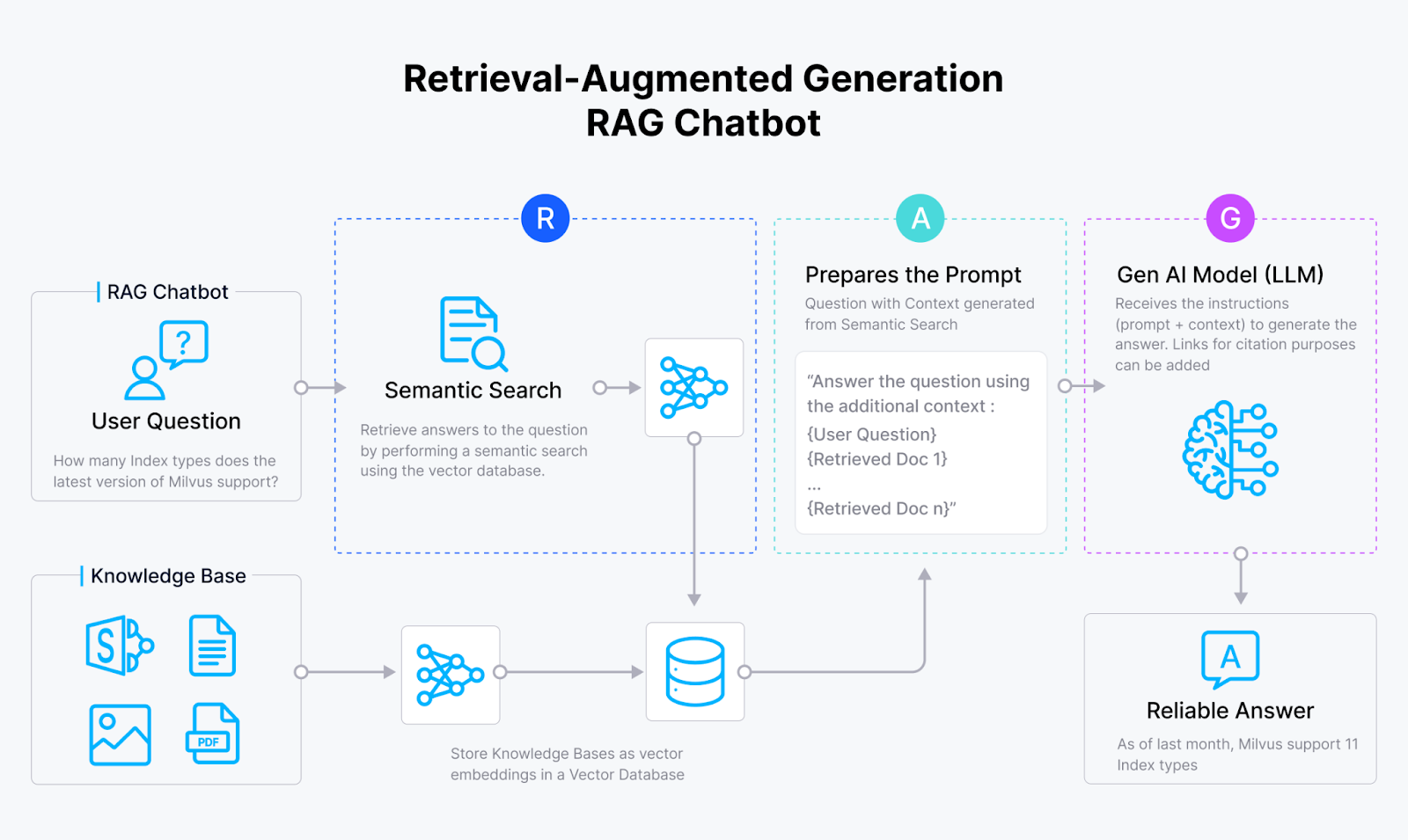

While many generative models, particularly large language models (LLMs), are powerful in generating various types of content, they have limitations. One of the biggest is hallucination, described above: the model presents incorrect or fabricated information as fact. A contributing factor is that generative models are trained on a fixed snapshot of mostly public data, so they have no access to up-to-date or proprietary information and may fill the gaps with plausible-sounding guesses.

Retrieval Augmented Generation (RAG) is a methodology in natural language processing that enhances the capabilities of generative models by integrating them with retrieval components. This approach allows a model to dynamically retrieve external information and then generate responses based on both the retrieved data and its internal knowledge.

A RAG system comprises a vector database like Milvus, an embedding model, and a large language model (LLM). It first uses the embedding model to transform documents into vector embeddings and stores them in the vector database. When a user submits a query, the system embeds the query, retrieves the most similar documents from the vector database, and passes them to the LLM. Finally, the LLM uses the retrieved information as context to generate more accurate outputs.
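The retrieve-then-generate flow can be sketched end to end with standard-library Python. Everything here is a stand-in chosen for illustration: `embed` is a toy bag-of-words function rather than a real embedding model, the in-memory `index` list plays the role of a vector database such as Milvus (no Milvus API is used), and the final LLM call is left out, so the sketch stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter

documents = [
    "Milvus is a vector database for similarity search.",
    "Diffusion models generate images by removing noise step by step.",
    "GANs train a generator against a discriminator.",
]


def embed(text):
    """Toy embedding: word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Step 1: embed the documents and store them (stand-in for a vector database).
index = [(doc, embed(doc)) for doc in documents]


def retrieve(query, top_k=1):
    """Step 2: embed the query and return the most similar documents."""
    query_vector = embed(query)
    ranked = sorted(index,
                    key=lambda item: cosine_similarity(query_vector, item[1]),
                    reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


def build_prompt(query):
    """Step 3: give the retrieved text to the LLM as context."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("What is a vector database?")
print(prompt)
```

In a production system the sorted scan would be replaced by an approximate nearest-neighbor search in the vector database, which is what keeps retrieval fast over millions of documents.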

Figure: RAG workflow

FAQs

1. What can Generative AI create? Is it only for text?

No. Besides text, generative AI can create images, music, video, and 3D models. It does so by recombining patterns learned from examples into new content, such as an original melody or a landscape that never existed.

2. How is Generative AI different from other AI tools?

Generative AI creates original content, like new images or stories, while conventional discriminative AI mainly classifies or predicts from existing data, such as identifying a cat in a photo.

3. Are there ethical issues with Generative AI?

Concerns about generative AI include privacy issues and the potential reinforcement of biases from training data. It can create realistic images or videos like deepfakes, making responsible use essential to prevent misinformation and unfair practices.

4. Where’s Generative AI being used these days, and what impact is it making?

Generative AI is used in various fields, including customer service, healthcare, gaming, and music. It offers quick solutions and fosters innovative approaches across industries.

5. What’s the deal with vector databases, and why are they essential for Generative AI?

Vector databases store the vector embeddings that represent text, images, and other data. They let generative AI applications quickly retrieve the most relevant information at query time, which helps the model produce more contextually accurate results.
