What Is a Diffusion Model? A Comprehensive Definition

What Is a Diffusion Model?

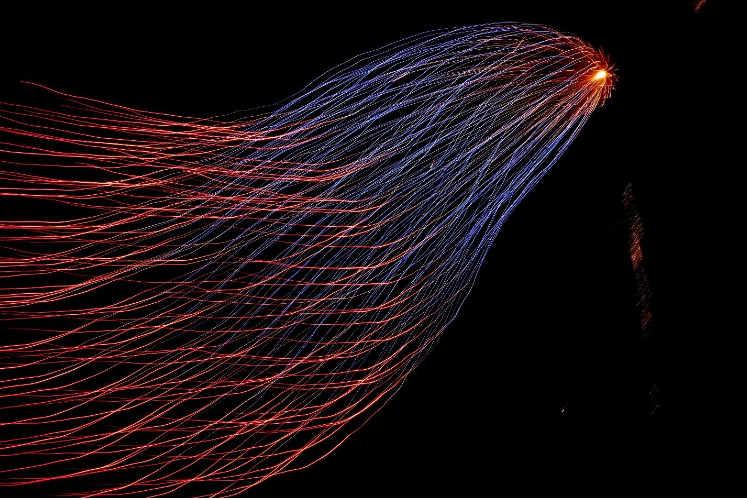

Diffusion models are a class of probabilistic generative models. They are used mainly for image synthesis and other generative tasks, and because their core skill is removing noise, they are also useful for denoising data in machine learning. A diffusion model progressively corrupts training data by adding Gaussian noise and learns to reverse that process, which lets it generate new samples from noise.

In image generation, for example, a diffusion model adds noise to an image over hundreds or thousands of small steps until only pure noise remains. It then learns to remove that noise step by step until a coherent image emerges. Once trained, the model can start from fresh random noise and generate new, diverse images that resemble the images it was trained on.

Components of Diffusion Models

Diffusion models are based on three underlying mathematical frameworks:

- Denoising diffusion probabilistic models (DDPMs): DDPMs use two Markov chains: a forward chain that adds noise to data and a reverse chain that removes it. A deep neural network learns to reverse the noise introduced in the forward chain, which ultimately allows the model to generate data samples that closely resemble the original data distribution.

- Score-based generative models (SGMs): SGMs perturb data with Gaussian noise at several noise levels and train a deep neural network to estimate the score function (the gradient of the log data density) at each level. These score estimates guide the generation of new data samples that closely resemble the original distribution.

- Stochastic differential equations (score SDEs): Score SDEs generalize DDPMs and SGMs to continuous time, that is, to infinitely many time steps or noise levels. They model both the noise perturbation process and the sample generation process with SDEs and rely on score functions to denoise the generated data.
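The link between DDPMs and score-based models can be checked numerically. The sketch below assumes the standard DDPM perturbation x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps, with an arbitrary assumed noise level alpha_bar, and compares a finite-difference estimate of the score of q(x_t | x0) with the closed form -eps / sqrt(1 - alpha_bar). If the two agree, predicting the noise (as DDPMs do) is equivalent, up to a scale factor, to estimating the score (as SGMs do). All names and values are illustrative.

```python
import numpy as np


def log_gaussian(x, mean, var):
    """Log-density of an isotropic Gaussian N(mean, var * I), summed over elements."""
    return float(np.sum(-0.5 * (x - mean) ** 2 / var - 0.5 * np.log(2 * np.pi * var)))


rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=4)  # toy "clean" data point
alpha_bar = 0.3                       # assumed noise level: fraction of signal variance kept
eps = rng.standard_normal(4)          # the noise a DDPM network learns to predict
xt = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps

# Score of q(x_t | x_0), estimated by central finite differences along each axis
mean, var, h = np.sqrt(alpha_bar) * x0, 1.0 - alpha_bar, 1e-5
numeric_score = np.array([
    (log_gaussian(xt + h * e, mean, var) - log_gaussian(xt - h * e, mean, var)) / (2 * h)
    for e in np.eye(4)
])

# Closed form: the score is the noise, negated and rescaled
analytic_score = -eps / np.sqrt(1.0 - alpha_bar)
print(np.allclose(numeric_score, analytic_score, atol=1e-4))  # True
```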

How Do Diffusion Models Work?

Diffusion models work through the interplay of three elements: the data, controlled Gaussian noise, and, in many modern models, an intermediate latent space. Together, these let a model learn to turn noise into realistic new data. Let's look at the mechanics:

1. The Role of Gaussian Noise:

Diffusion models add Gaussian noise to the data systematically. At each step, a small, precisely controlled amount of noise sampled from a Gaussian distribution is added. A noise schedule sets how much noise each step adds, which gives fine-grained control over noise levels.

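```python
import numpy as np
# Add controlled Gaussian noise (original_data: NumPy array, sigma: noise level)
noise = np.random.normal(0.0, sigma, size=original_data.shape)
noisy_data = original_data + noise
```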
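How much noise each step adds is usually set by a variance schedule. The sketch below assumes the linear schedule from the original DDPM paper (betas rising from 0.0001 to 0.02 over 1,000 steps) and tracks alpha_bar, the fraction of the original signal's variance that survives after t steps. The printout shows the signal fading toward zero as noise takes over.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)     # per-step noise variances (linear schedule)
alpha_bars = np.cumprod(1.0 - betas)   # fraction of signal variance left after each step

for t in [0, 249, 499, 999]:
    print(f"step {t + 1:4d}: beta={betas[t]:.5f}, signal kept={alpha_bars[t]:.5f}")
```

Because each beta is small, early steps barely change the data, while the cumulative product drives alpha_bar close to zero by the last step, leaving essentially pure noise.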

2. Transition to the Normal Distribution:

To make the noise easier to control, the input data is first normalized to a mean of 0 and a standard deviation of 1. This puts the data on the same scale as the standard Gaussian noise that will be added. Note that normalization only shifts and rescales the values; it does not by itself make the data normally distributed.

```python
# Normalize data to zero mean and unit standard deviation (original_data: NumPy array)
normalized_data = (original_data - original_data.mean()) / original_data.std()
```
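Normalization has to be reversible, because a generated sample comes out on the normalized scale and must be mapped back to pixel values. A minimal sketch, assuming a hypothetical 8-bit grayscale image stored as a NumPy array:

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 8-bit grayscale image, used only for illustration
original_data = rng.integers(0, 256, size=(32, 32)).astype(np.float64)

# Forward transform: zero mean, unit standard deviation
mean, std = original_data.mean(), original_data.std()
normalized_data = (original_data - mean) / std

# Inverse transform: map a (generated) normalized array back to 0-255 pixels
restored = normalized_data * std + mean
pixels = np.clip(np.rint(restored), 0, 255).astype(np.uint8)

print(normalized_data.mean(), normalized_data.std())  # approximately 0 and 1
```

Because normalization is just a shift and a rescale, this uniformly distributed toy input stays uniform; only its mean and spread change.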

3. Diffusion Modeling Process:

Let's dissect the diffusion modeling process within the context of generative models, especially for images or videos. The process consists of two phases:

- Forward Diffusion Process: This phase gradually corrupts a clean image's pixels. Over hundreds or even thousands of incremental steps, Gaussian noise is progressively added. The steps form a Markov chain: each step depends only on the previous one and adds a precisely controlled amount of noise. The forward process is fixed rather than learned, and by the final step the image has effectively "diffused" into pure noise.

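```python
# Forward diffusion (betas: per-step noise schedule): scale down, then add fresh noise
for step in range(num_steps):
    noise = np.random.standard_normal(noisy_image.shape)
    noisy_image = np.sqrt(1.0 - betas[step]) * noisy_image + np.sqrt(betas[step]) * noise
```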

- Reverse Diffusion Process: The reverse process runs the chain backward, from noise toward a clear image. At each step, a trained neural network predicts the noise present in the current image; that noise is removed and the result is rescaled, and after many iterations a clear image emerges. It's akin to watching a foggy image gradually sharpen into focus.

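```python
# Reverse diffusion, DDPM mean update (alpha_bars = np.cumprod(1 - betas));
# full samplers also add a little Gaussian noise when step > 0
for step in reversed(range(num_steps)):
    predicted_noise = model(noisy_image, step)  # model: trained noise-prediction network
    noisy_image = (noisy_image - betas[step] / np.sqrt(1 - alpha_bars[step]) * predicted_noise) / np.sqrt(1 - betas[step])
```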
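Running the reverse process for real requires a trained network, which is out of scope here. The sketch below substitutes a hypothetical oracle: for a toy "dataset" containing a single known point, the exact noise in x_t can be computed directly and stands in for the network's prediction. With that stand-in, the DDPM reverse update (remove the predicted noise, rescale, and add a little sampling noise except at the last step) carries pure Gaussian noise back to the data point. The 50-step schedule and all names are assumptions.

```python
import numpy as np

T = 50
betas = np.linspace(1e-4, 0.02, T)   # assumed linear noise schedule
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

x0_target = np.array([0.5, -0.25, 0.75])  # toy "dataset": one known point


def oracle_noise_predictor(x_t, t):
    """Hypothetical stand-in for a trained network: exact noise for the toy dataset."""
    return (x_t - np.sqrt(alpha_bars[t]) * x0_target) / np.sqrt(1.0 - alpha_bars[t])


rng = np.random.default_rng(0)
x = rng.standard_normal(x0_target.shape)  # start from pure Gaussian noise
for t in reversed(range(T)):
    eps_hat = oracle_noise_predictor(x, t)
    # DDPM mean: subtract the scaled noise prediction, then undo the forward scaling
    x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
    if t > 0:
        x = x + np.sqrt(betas[t]) * rng.standard_normal(x.shape)  # sampling noise

print(np.round(x, 4))  # recovers x0_target
```

A real model only approximates the noise, so its samples land near the training distribution rather than on a single memorized point.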

In the forward diffusion process, the pixel values are scaled down slightly before each new dose of noise is added. This keeps the overall variance of the data bounded. Skipping this step lets the values grow without limit as noise accumulates, which can saturate the image so that details are lost.

```python
# Scale pixel values before adding noise; in DDPM, scaling_factor = np.sqrt(1 - beta) for that step
scaled_image = original_image * scaling_factor
```
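To see why the scaling step matters, the sketch below runs 1,000 forward steps on unit-variance toy data twice: once with the sqrt(1 - beta) scaling used in DDPM and once without it. The linear schedule is an assumption borrowed from the original DDPM paper.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 1000
betas = np.linspace(1e-4, 0.02, T)
n = 50_000

scaled = rng.standard_normal(n)   # toy data with unit variance
unscaled = scaled.copy()
for beta in betas:
    # With scaling: shrink the signal by sqrt(1 - beta) before adding noise
    scaled = np.sqrt(1.0 - beta) * scaled + np.sqrt(beta) * rng.standard_normal(n)
    # Without scaling: noise keeps piling onto the original values
    unscaled = unscaled + np.sqrt(beta) * rng.standard_normal(n)

print(f"variance with scaling:    {scaled.var():.2f}")
print(f"variance without scaling: {unscaled.var():.2f}")
```

With scaling, the variance stays near 1, so values remain in a predictable range. Without it, the variance grows to roughly 1 plus the sum of all betas (about 11 here), pushing values far outside the original range.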

4. Leveraging Convolutional Neural Networks (CNNs):

Convolutional neural networks (CNNs), typically with a U-Net architecture, play a central role in the reverse diffusion process. Given a noisy image and the current step, the network predicts the noise the image contains so that it can be removed. Repeated over many steps, starting from pure noise, this produces a clear, coherent image that resembles the training data.
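In DDPM-style training, the network is typically fit by minimizing the mean squared error between the true noise eps and its prediction from x_t. The sketch below swaps the CNN for a one-parameter linear model, eps_hat = w * x_t, on a hypothetical 1-D Gaussian dataset at a single assumed noise level. That keeps the loss solvable in closed form and comparable to the known optimum. A real model would use a U-Net, many noise levels, and gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)
data_std = 2.0      # hypothetical 1-D dataset: N(0, data_std**2)
alpha_bar = 0.5     # assumed noise level for a single timestep
n = 200_000

# Forward-noise a batch of training samples
x0 = rng.normal(0.0, data_std, n)
eps = rng.standard_normal(n)
xt = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps

# "Network": eps_hat = w * x_t. The DDPM loss mean((eps - w * x_t)**2)
# is minimized in closed form by least squares.
w = np.dot(xt, eps) / np.dot(xt, xt)
loss = np.mean((eps - w * xt) ** 2)

# For Gaussian data the best possible predictor is linear, with a known coefficient
w_opt = np.sqrt(1.0 - alpha_bar) / (alpha_bar * data_std**2 + 1.0 - alpha_bar)
print(f"learned w={w:.3f}, optimal w={w_opt:.3f}, loss={loss:.3f}")
```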

5. Generating New Data After Training:

After training, the diffusion model can generate new images. Generation starts from random Gaussian noise (or, for editing tasks, from an input image with noise deliberately added to it). The model then applies its learned denoising step by step to produce a new, clean image.

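```python
# Generate a new image: start from pure Gaussian noise, then run the reverse loop
noisy_image = np.random.standard_normal(image_shape)  # image_shape: e.g. (64, 64, 3)
new_image = reverse_diffusion(noisy_image)  # reverse_diffusion: hypothetical wrapper around the reverse loop
```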

Intermediate Latent Space in Modern Diffusion Models:

Many modern image diffusion models add a third element: an intermediate latent space. An autoencoder first compresses each image into a smaller latent representation, the diffusion process runs in that latent space instead of on raw pixels, and a decoder maps the final latent back to an image. Stable Diffusion is a well-known example. Working on smaller latents makes training and sampling considerably cheaper while still allowing nuanced, controlled transformations.
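A rough element count shows why latent diffusion is cheaper. The shapes below are assumptions for illustration: an RGB 512x512 image and a 64x64 latent with 4 channels (8x spatial downsampling), similar to configurations commonly cited for Stable Diffusion.

```python
import math

pixel_shape = (512, 512, 3)   # RGB image
latent_shape = (64, 64, 4)    # assumed latent: 8x smaller per side, 4 channels

pixel_elements = math.prod(pixel_shape)
latent_elements = math.prod(latent_shape)
print(pixel_elements, latent_elements, pixel_elements / latent_elements)  # 786432 16384 48.0
```

Every denoising step then operates on about 48 times fewer values, although the autoencoder adds a one-time encoding and decoding cost.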

Applications of Diffusion Models in AI/ML

Diffusion models are gaining popularity in generative modeling because of their many real-world use cases, including image generation, text-to-image generation, image super-resolution, and natural language generation.

Natural Language Generation

Natural language processing (NLP) has many real-world applications in AI today. Because they can produce diverse outputs, diffusion models are also being explored for natural language generation (NLG) tasks such as content generation, text summarization, and text completion. Note, however, that mainstream large language models (LLMs) such as generative pre-trained transformers (GPT) are autoregressive transformers that generate text token by token, not diffusion models; diffusion for text remains an active research area.

Image and Video Generation

Diffusion models have improved the quality of generated video. They help address the complexity of video frames and the need for spatiotemporal continuity between them. This lets content creators turn text prompts into short, high-quality video clips.

Text-to-Image Generation

Today, you can generate images by feeding text prompts into a text-to-image generator; Google's Imagen and OpenAI's DALL-E are examples. In these systems, a text encoder (such as a pretrained language model) converts the prompt into embedding vectors. Those embeddings condition a diffusion model, steering each denoising step so that the generated image matches the prompt. Some systems can also condition on an image embedding to generate new images in a style similar to an existing one.
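One widely used way to strengthen text conditioning at sampling time, not described above, is classifier-free guidance: the network is run with and without the prompt embedding, and the two noise predictions are extrapolated. The sketch below uses a hypothetical toy noise predictor in place of a real text-conditioned network and an assumed guidance scale of 7.5.

```python
import numpy as np


def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Extrapolate from the unconditional prediction toward the prompt-conditioned one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


def toy_noise_predictor(x_t, t, text_embedding=None):
    """Hypothetical stand-in for a trained text-conditioned denoising network."""
    shift = 0.0 if text_embedding is None else 0.1 * float(np.mean(text_embedding))
    return 0.5 * x_t + shift


rng = np.random.default_rng(0)
x_t = rng.standard_normal((4, 4))          # current noisy image (toy size)
prompt_embedding = rng.standard_normal(8)  # would come from a text encoder

eps_uncond = toy_noise_predictor(x_t, t=10)
eps_cond = toy_noise_predictor(x_t, t=10, text_embedding=prompt_embedding)
eps_guided = classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=7.5)
```

A scale of 1 reproduces the conditional prediction and 0 ignores the prompt; larger values follow the prompt more closely, usually at some cost in diversity.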

Image Super-Resolution

One of the earliest applications of diffusion models is image super-resolution: increasing an image's resolution from low to high while preserving its content as much as possible.

High-resolution image generation with diffusion models often proceeds in two stages. First, a base diffusion model generates low-resolution images, which are easier and faster to train. Second, a separate diffusion model, conditioned on a low-resolution image, generates a higher-resolution version of it.
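One common way to condition the second-stage model is to upsample the low-resolution image to the target size and concatenate it with the noisy high-resolution image as extra input channels. The sketch below assumes nearest-neighbor upsampling (bicubic is also common), a 4x scale factor, and (H, W, C) NumPy arrays; the function names are hypothetical.

```python
import numpy as np


def upsample_nearest(image, factor):
    """Nearest-neighbor upsampling of an (H, W, C) array by an integer factor."""
    return np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)


def super_resolution_input(noisy_high_res, low_res, factor):
    """Stack the upsampled low-res image onto the noisy high-res image along channels."""
    return np.concatenate([noisy_high_res, upsample_nearest(low_res, factor)], axis=-1)


rng = np.random.default_rng(0)
low_res = rng.uniform(-1.0, 1.0, size=(64, 64, 3))   # output of the base model
noisy_high_res = rng.standard_normal((256, 256, 3))  # x_t for the super-resolution model
net_input = super_resolution_input(noisy_high_res, low_res, factor=4)
print(net_input.shape)  # (256, 256, 6)
```

The denoising network then sees both the current noisy estimate and the low-resolution guide at every step.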

Limitations of Diffusion Models

Diffusion models are powerful and are transforming the AI industry. However, as we'll see below, they come with their share of challenges.

Diffusion Models Take Time to Train

Diffusion models rely on denoising to generate images, and training a denoiser from scratch takes many iterations. Training continues until the model can generate samples that closely match the training data, which may take hours of computation. Sampling is slow too: generating a single sample requires hundreds or thousands of neural network calls, one per denoising step, whereas a GAN needs only one. This makes diffusion models slower at sample generation.
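The sampling-speed gap is mostly arithmetic: cost grows with the number of network calls. The numbers below are assumptions, including a hypothetical 50 ms per forward pass for both models, which ignores that real GAN and diffusion networks differ in size.

```python
# All numbers are assumptions for illustration
seconds_per_call = 0.05   # hypothetical cost of one network forward pass
diffusion_steps = 1000    # one network call per denoising step
gan_calls = 1             # a GAN generator produces a sample in one call

diffusion_seconds = diffusion_steps * seconds_per_call
gan_seconds = gan_calls * seconds_per_call
print(f"diffusion: {diffusion_seconds:.1f} s per sample, GAN: {gan_seconds:.2f} s per sample")
```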

It's Challenging to Fine-Tune a Diffusion Model

To get an individualized version of a model, you take a pre-trained model and train it with your own data. However, it's challenging to fine-tune a pre-trained unconditional diffusion model with limited data. Data limitations may lead to overfitting in the early stages of training. The resulting images from overfitted models are of low quality and limited diversity.

Diffusion Models Require Large Memory

Large diffusion models have large memory requirements. A diffusion model may contain billions of parameters, so such models are usually trained and tested on powerful servers. Mobile devices have limited GPU memory, which makes running even a single large diffusion model on them difficult.

Because GPU memory limits deployment on mobile, solutions are emerging. One is releasing smaller versions of diffusion models that are compressed enough to run on mobile devices.
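A back-of-the-envelope estimate shows why compression helps. Weight memory is roughly the parameter count times the bytes per parameter; the sketch assumes a hypothetical 1-billion-parameter model and counts weights only, not activations. Storing weights at lower precision is one common compression technique, shown here as an example.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights (ignores activations and runtime overhead)."""
    return num_params * bytes_per_param / 1e9


num_params = 1_000_000_000  # hypothetical 1-billion-parameter diffusion model
for name, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    print(f"{name}: {weight_memory_gb(num_params, nbytes):.1f} GB")
```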

FAQs

What's the Difference Between a GAN and a Diffusion Model?

Generative adversarial networks (GANs) work through an adversarial training process. They employ a generator neural network to produce data samples and a discriminator neural network to distinguish genuine samples from fake ones. A diffusion model is a likelihood-based model: it is trained by iteratively adding Gaussian noise to data and learning to reverse that corruption, and it generates samples by applying the learned denoising function step by step, gradually transforming random noise into realistic data.

What's the Difference Between Transformer and Diffusion Model?

Transformers use self-attention mechanisms to learn the contextual relationships between elements in sequential data, and they excel at NLP and image classification. Diffusion models excel at generating high-quality, photorealistic images. The two are not mutually exclusive: some diffusion models use a transformer as their denoising network.

What Are Some Challenges of Diffusion Models?

Diffusion models follow a time-consuming denoising training process with many iterations. Additionally, large diffusion models with billions of parameters demand extensive memory resources. Finally, fine-tuning a diffusion model with limited data can lead to overfitting, resulting in low-quality and less diverse generated images.

How Does a Diffusion Model Generate Images?

Diffusion models generate images by starting from random noise and denoising it iteratively; each step yields a slightly more coherent and detailed image until the final image emerges.
