Ultimate Guide to Getting Started with LangChain

Have you seen the parrot and chain emojis popping up around AI lately? Those are LangChain’s signature emojis. LangChain is an AI agent tool that adds functionality to large language models (LLMs) like GPT, including token management, context management, and prompt templates. In this getting-started tutorial, we look at two primary LangChain examples with real-world use cases: first, how to query GPT, and second, how to query a document, with a Colab notebook available here.

In this tutorial we cover:

- What is LangChain?

- How Can You Run LangChain Queries?

- Query GPT

- Query a Document

- Introduction to LangChain Summary

What is LangChain?

LangChain is a framework for building applications that leverage large language models. It allows you to quickly build with the CVP Framework. The two core LangChain functionalities for large language models are 1) to be data-aware and 2) to be agentic. Data-awareness is the ability to incorporate outside data sources into an LLM application. Agency is the ability to use other tools.

As with many LLM tools, LangChain’s default LLM is OpenAI’s GPT and you need an API key from OpenAI to use it. Additionally, LangChain offers the LangChain Expression Language (LCEL) for composing complex language processing chains, simplifying the transition from prototyping to production. In addition, LangChain works with both Python and JavaScript. In this tutorial, you will learn how it works using Python examples. You can install the Python library through pip by running pip install langchain.
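To give a feel for what composing a chain with LCEL looks like, here is a toy sketch in plain Python. It does not import LangChain. The `Step` class and the three stand-in steps are hypothetical names invented for this illustration; they only mimic the idea of piping steps together with `|` and running them with `invoke`.

```python
class Step:
    """Toy stand-in for a composable chain step (not a LangChain class)."""

    def __init__(self, fn):
        self.fn = fn

    def __or__(self, other):
        # `a | b` builds a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value):
        return self.fn(value)


# Hypothetical steps: build a prompt, "call a model", parse the output.
prompt = Step(lambda topic: f"Tell me about {topic}.")
model = Step(lambda text: text.upper())        # stand-in for an LLM call
parser = Step(lambda text: text.rstrip("."))   # stand-in for an output parser

chain = prompt | model | parser
print(chain.invoke("parrots"))
```

Each step only needs to accept the previous step's output, which is what makes it easy to swap one piece for another while prototyping.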

How does LangChain work?

LangChain’s question-answering flow consists of building blocks that can be easily swapped to create a custom template according to individual needs. These blocks include the question, embedding, documents used to train the model, the constructed prompt, and a response. LangChain also utilizes output parsers to manage and refine the responses generated by language models, ensuring structured and relevant results. Therefore, if a company desires a chat experience trained by specific documents, LangChain can swap in and out the various components to achieve that goal.
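The swappable-blocks idea can be sketched in plain Python without LangChain. Everything below is a hypothetical stand-in: `keyword_retriever`, `fake_llm`, `strip_parser`, and `answer_question` are names made up for this illustration, and the "model" is a stub that never calls an API.

```python
from typing import Callable, List


def keyword_retriever(question: str) -> List[str]:
    """Toy retriever: return documents that share a word with the question."""
    corpus = [
        "Milvus is a vector database.",
        "LangChain builds LLM applications.",
    ]
    words = {w.strip("?.,").lower() for w in question.split()}
    return [
        doc for doc in corpus
        if words & {w.strip("?.,").lower() for w in doc.split()}
    ]


def fake_llm(prompt: str) -> str:
    """Toy model: reports the prompt length instead of calling an API."""
    return f"  (stub answer for a {len(prompt)}-character prompt)  "


def strip_parser(raw: str) -> str:
    """Toy output parser: trim surrounding whitespace."""
    return raw.strip()


def answer_question(
    question: str,
    retriever: Callable[[str], List[str]],
    llm: Callable[[str], str],
    parser: Callable[[str], str],
    template: str,
) -> str:
    # Question -> retrieved documents -> constructed prompt -> response.
    context = "\n".join(retriever(question))
    prompt = template.format(context=context, question=question)
    return parser(llm(prompt))


TEMPLATE = "Context:\n{context}\n\nQuestion: {question}\nAnswer:"

result = answer_question(
    "What is Milvus?", keyword_retriever, fake_llm, strip_parser, TEMPLATE
)
print(result)
```

Because each block is passed in as an argument, replacing the retriever, the model, or the parser means changing one argument rather than rewriting the flow.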

How to use LangChain with GPT and Milvus vector database?

One of the primary LangChain use cases is to query text data. You can use it to query documents, vector stores, or to smooth your interactions with GPT, much like LlamaIndex. In this tutorial, we cover a simple example of how to interact with GPT using LangChain and query a document for semantic meaning using LangChain with a vector store. This process, known as Retrieval Augmented Generation (RAG), enhances the capabilities of language models by incorporating external data during the generation process.

Query GPT

Most people’s familiarity with GPT comes from chatting with ChatGPT. ChatGPT is OpenAI’s flagship interface for interacting with GPT. However, if you want to interact with GPT programmatically, you need a query interface like LangChain. LangChain provides a range of query interfaces for GPT, from simple one-question prompts to few-shot learning via context.

In this example, we’ll look at how to use LangChain to chain together questions using a prompt template. There are a few Python libraries you need to install first. We can install them with pip install langchain openai python-dotenv tiktoken. I use python-dotenv because I manage my environment variables in a .env file, but you can use whatever method you want to get your OpenAI API key loaded.

With our OpenAI API key ready, we load up our LangChain tools. We need the PromptTemplate and LLMChain imports from langchain and the OpenAI import from langchain.llms. We use OpenAI’s text model, text-davinci-003, for this example. Next, we create a template to query GPT with. The template tells GPT to answer the given questions one at a time. It is a string in which each input variable appears within curly brackets, similar to how f-strings work.
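To see how a bracketed placeholder behaves, here is a plain-Python sketch that uses `str.format` instead of LangChain. The template text and the variable name `question` are made up for this illustration.

```python
# A string with one named placeholder in curly brackets.
template = "Answer briefly.\n\nQuestion: {question}\nAnswer:"

# Filling the placeholder produces the final prompt text.
filled = template.format(question="What is a vector database?")
print(filled)
```

The template stays reusable: the same string can be filled with a different question each time it is used.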

```python
import os

from dotenv import load_dotenv
import openai

# Load OPENAI_API_KEY from a local .env file
load_dotenv()
openai.api_key = os.getenv("OPENAI_API_KEY")

from langchain import PromptTemplate, LLMChain
from langchain.llms import OpenAI

davinci = OpenAI(model_name="text-davinci-003")

# {questions} is the input variable that gets filled in at run time
multi_template = """Answer the following questions one at a time.

Questions:
{questions}

Answers:
"""
```

Next, we use the PromptTemplate object to create a template from the string with specified input variables. With our prompt template ready, we can create an LLM “chain” by passing in the prompt and the chosen large language model. Now it’s time to create the questions. Once we have the questions we want to ask, we run the LLM chain with the questions passed in to get our answers.

```python
long_prompt = PromptTemplate(template=multi_template, input_variables=["questions"])

llm_chain = LLMChain(
    prompt=long_prompt,
    llm=davinci
)

# One question per line; each line except the last ends with "\n"
qs_str = (
    "Which NFL team won the Super Bowl in the 2010 season?\n" +
    "If I am 6 ft 4 inches, how tall am I in centimeters?\n" +
    "Who was the 12th person on the moon?\n" +
    "How many eyes does a blade of grass have?"
)

print(llm_chain.run(qs_str))
```
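When the list of questions grows, joining a list is one way to keep each question on its own line without writing the separators by hand. This is a plain-Python sketch. The variable name `questions` is introduced here for illustration.

```python
questions = [
    "Which NFL team won the Super Bowl in the 2010 season?",
    "If I am 6 ft 4 inches, how tall am I in centimeters?",
    "Who was the 12th person on the moon?",
    "How many eyes does a blade of grass have?",
]

# "\n".join puts exactly one newline between consecutive questions.
qs_str = "\n".join(questions)
print(qs_str)
```

The resulting string can be passed to the chain in the same way as a hand-built one.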

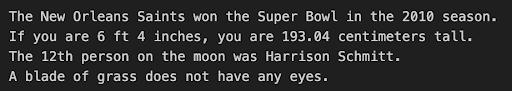

The image below shows the expected results from our example questions.

Results from example questions.


Query relevant documents

One limitation of GPT and large language models in general is that they are only trained on data available at their time of training. This means that over time, their knowledge falls out of date and their answers lose accuracy. Much like the CVP framework, LangChain provides a way to remediate this problem with vector databases. Many vector databases are available; for this example, we use Milvus because of our familiarity with it.

LangChain excels in handling document data, transforming scanned documents into actionable data through workflow automation.

To demonstrate LangChain’s ability to inject up-to-date knowledge into your LLM application and the ability to do a semantic search, we cover how to query a document. We use a transcript from the State of the Union address for this example. You can download the transcript and find the Colab notebook here. To get the libraries you need for this part of the tutorial, run pip install langchain openai milvus [pymilvus](https://zilliz.com/blog/get-started-with-pymilvus) python-dotenv tiktoken.

The ability to fetch relevant documents dynamically based on user queries significantly improves the accuracy of the generated responses.
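To make the idea of fetching relevant documents concrete, here is a toy semantic search in plain Python. It is only a sketch: the three-dimensional vectors are hand-made stand-ins for real embeddings, and `cosine_similarity` and `top_k` are hypothetical helper names. A vector database such as Milvus performs this kind of similarity ranking at scale with real embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy "embeddings"; real ones come from an embedding model.
documents = {
    "Cats sleep most of the day.": [0.9, 0.1, 0.0],
    "Stock prices rose this week.": [0.1, 0.9, 0.1],
    "Rain is expected tomorrow.": [0.0, 0.2, 0.9],
}

# Toy vector standing in for an embedded question about cats.
query_vector = [0.8, 0.2, 0.1]


def top_k(query, docs, k=1):
    """Return the k document texts whose vectors are most similar to the query."""
    ranked = sorted(
        docs,
        key=lambda text: cosine_similarity(query, docs[text]),
        reverse=True,
    )
    return ranked[:k]


best = top_k(query_vector, documents, k=1)
print(best)
```

The retrieved text is then handed to the language model as context, which is how retrieval improves the accuracy of the response.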

As with the example of chaining questions together, we start by loading our OpenAI API key and the large language model. Then, we spin up a vector database using Milvus Lite, which allows us to run Milvus directly in our notebook.

```python
import os

from dotenv import load_dotenv
import openai

# Load OPENAI_API_KEY from a local .env file
load_dotenv()
openai.api_key = os.getenv("OPENAI_API_KEY")

from langchain.llms import OpenAI

davinci = OpenAI(model_name="text-davinci-003")

# Start Milvus Lite inside the notebook process
from milvus import default_server

default_server.start()
```

Now we are ready to get into the specifics of querying a document. There are a lot of imports from LangChain this time. We need the OpenAI embeddings, the character text splitter, the Milvus integration, the text loader, and the retrieval Q/A chain.

The first thing we do is set up a loader and load the text file. In this case, I have it stored in the same folder as this notebook under state_of_the_union.txt. Next, we split up the text and store it as a set of LangChain docs. Then, we can set up our vector database. In this case, we create a Milvus collection from the documents we just ingested via the TextLoader and CharacterTextSplitter. We also pass in the OpenAI embeddings as the set of text vector embeddings.
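To illustrate what splitting a text into chunks means, here is a simplified plain-Python sketch. It is not how LangChain's CharacterTextSplitter is implemented: that class splits on a separator and merges the pieces up to the chunk size, while the hypothetical `split_text` helper below cuts at fixed character offsets.

```python
def split_text(text, chunk_size=1000, chunk_overlap=0):
    """Cut text into fixed-size character chunks, optionally overlapping."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


sample = "abcdefghij" * 25  # 250 characters of filler text
chunks = split_text(sample, chunk_size=100, chunk_overlap=20)

for number, chunk in enumerate(chunks):
    print(number, len(chunk))
```

With an overlap of 0, as in this tutorial, the chunks do not share any text. A nonzero overlap repeats the end of one chunk at the start of the next, so a sentence cut at a boundary still appears whole in one of them.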

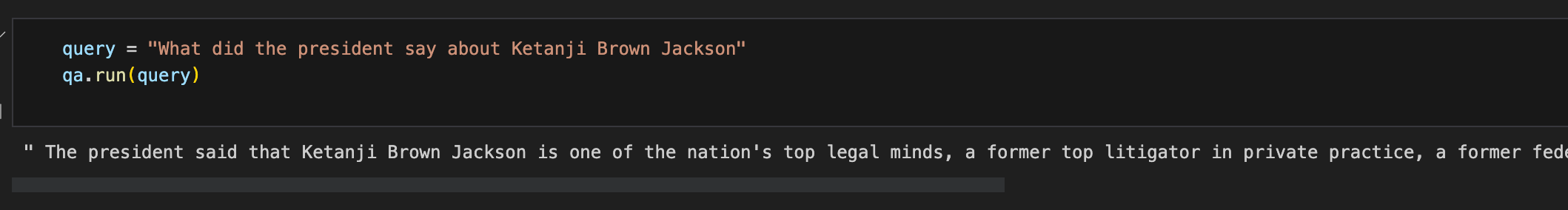

With our vector database loaded, we can use the RetrievalQA object to query the documents via a vector database. We use the chain type "stuff" and pass in OpenAI as our LLM and the Milvus vector database as a retriever. Then, we can create a query, such as “What did the president say about Ketanji Brown Jackson?” and run the query. Finally, we should shut down our vector database for a clean close.

```python
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import Milvus
from langchain.document_loaders import TextLoader
from langchain.chains import RetrievalQA

# Load the transcript and split it into chunks
loader = TextLoader('./state_of_the_union.txt')
documents = loader.load()
text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
docs = text_splitter.split_documents(documents)

# Embed the chunks and store them in a Milvus collection
embeddings = OpenAIEmbeddings()
vector_db = Milvus.from_documents(
    docs,
    embeddings,
    connection_args={"host": "127.0.0.1", "port": default_server.listen_port},
)

# Build the retrieval Q/A chain on top of the vector store
qa = RetrievalQA.from_chain_type(llm=OpenAI(), chain_type="stuff", retriever=vector_db.as_retriever())

query = "What did the president say about Ketanji Brown Jackson?"
print(qa.run(query))

# Shut down Milvus Lite for a clean close
default_server.stop()
```
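The "stuff" chain type places all of the retrieved chunks into a single prompt. The plain-Python sketch below shows that idea only. The `build_stuff_prompt` helper, the prompt wording, and the sample chunks are hypothetical and are not LangChain's actual prompt.

```python
def build_stuff_prompt(question, retrieved_chunks):
    """Put every retrieved chunk into one prompt ahead of the question."""
    context = "\n\n".join(retrieved_chunks)
    return (
        "Use the following context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Placeholder chunks standing in for what a retriever would return.
retrieved = ["First retrieved chunk.", "Second retrieved chunk."]
prompt = build_stuff_prompt("What does the second chunk say?", retrieved)
print(prompt)
```

This approach is simple, but the combined chunks must fit within the model's context window, which is one reason the document is split into chunks of limited size before it is stored.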

The image below shows what an expected response could look like. We should get a response like “The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, …”

Query results


LangChain alternative

There are several chat-based tools that could be considered alternatives to LangChain, and people often debate which ones are the best. Many of these alternatives also utilize specialized chat models designed for interactive chat applications. A few that come up frequently include:

- AgentGPT

- TensorFlow

- Auto-GPT

- BabyAGI

- Semantic UI

- LlamaIndex

Summary of a LangChain tutorial

In this article, we covered the basics of how to use LangChain. We learned that LangChain is a framework for building LLM applications that relies on two key factors. The first factor is using outside data, such as a text document. LangChain's ability to integrate external data enhances the effectiveness of language models by incorporating user-specific information. The second factor is using other tools, such as a vector database.

We covered two examples. First, we looked at one of the classic LangChain examples - how to chain together multiple questions. LangChain works seamlessly with various model providers, including OpenAI and Hugging Face, to enhance its functionality. Then, we looked at a practical way to use LangChain to inject domain knowledge by combining it with a vector database like Milvus to query documents.

Output parsers play a crucial role in refining the language model's responses, ensuring structured and relevant results.
