Prompting in LangChain

The recent explosion of LLMs has brought a new set of tools and applications onto the scene. One of these new, powerful tools is an LLM framework called LangChain. LangChain is an open source framework that provides examples of prompt templates, various prompting methods, keeping conversational context, and connecting to external tools.

LangChain has many features, including different prompting methods, keeping conversational context, and connecting to external tools. Prompting is one of today's most popular and important tasks in building LLM applications. Let's look extensively at how to use LangChain for more complex prompts.

In this piece we cover:

Simple Prompts in LangChain

Multi Question Prompts

Few Shot Learning with LangChain

Token Limiting Your LangChain Prompts

A Summary of Prompting in LangChain

Simple Prompts in LangChain

Before we get into the code, we need to download the necessary libraries. We need to pip install langchain openai python-dotenv. We use the openai and dotenv libraries to manage our OpenAI API key to access GPT.

import os

from dotenv import load_dotenv

import openai

load_dotenv()

openai.api_key = os.getenv("OPENAI_API_KEY")

Once we have the prerequisites, let’s start with the basics - a single prompt. A single prompt is how you would interact with ChatGPT on OpenAI’s website. A single prompt wouldn’t be a strong use of LangChain in production, but it’s important to understand how LangChain prompting works.

We use the PromptTemplate object to create a prompt. Defining a template string is simple; we use the same syntax as f-strings and insert variables using braces. We define the prompt by passing the template string and the corresponding variable names. Then, we can merely input our question. In this section, we only use one question; we’ll see how to do multi-question prompts in the next section.

In addition to the question prompt, we need to add the LLM used. For this example, we use text-davinci-003 from OpenAI. Then we create an LLMChain with the context of the prompt template and LLM. We run the question through the LLMChain to get a response.

from langchain import PromptTemplate

from langchain import LLMChain

template = """Question: {question}

Answer: """

prompt = PromptTemplate(

template=template,

input_variables=["question"]

)

user_question = "Which NFL team won the SuperBowl in 2010?"

from langchain.llms import OpenAI

davinci = OpenAI(model_name="text-davinci-003")

llm_chain = LLMChain(

prompt=prompt,

llm=davinci

)

print(llm_chain.run(user_question))

Multi Question Prompts

Answering single questions is dull, so let’s look at something more interesting. The PromptTemplate object is also able to handle multiple questions. For this tutorial, we do almost the same thing as the single prompt but with a different prompt. This time the PromptTemplate tells the LLM to answer the questions one at a time and indicates that there are multiple.

Once again, we create a PromptTemplate object and an LLMChain object in the same way as before. This time we create multiple question strings instead of just one. For this example, we combine four questions.

multi_template = """Answer the following questions one at a time.

Questions:

{questions}

Answers:

"""

long_prompt = PromptTemplate(template=multi_template, input_variables=["questions"])

llm_chain = LLMChain(

prompt=long_prompt,

llm=davinci

)

qs_str = (

"Which NFL team won the Super Bowl in the 2010 season?" +

"If I am 6 ft 4 inches, how tall am I in centimeters?" +

"Who was the 12th person on the moon?" +

"How many eyes does a blade of grass have?"

)

print(llm_chain.run(qs_str))

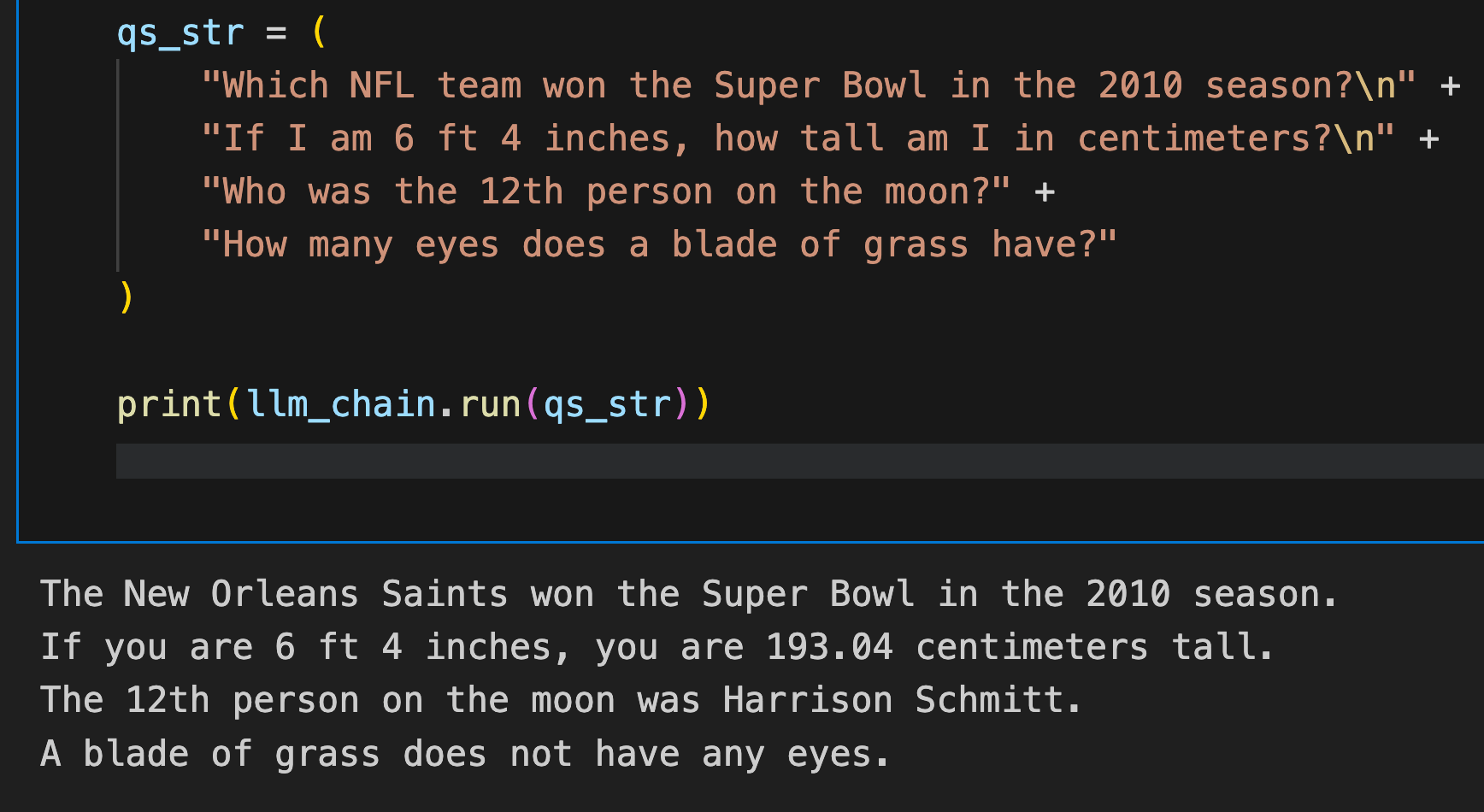

When we run the four questions above, we should get an output like the one below. The Saints won the 2010 Super Bowl. A 6ft 4in person is about 193.04 cm. The 12th person on the moon was Harrison Schmitt. Finally, the trick question about eyes on a blade of grass gets the response that a blade of grass doesn’t have eyes.

LangChain Prompting Output

LangChain Prompting Output

Few Shot Learning with LangChain Prompts

Now let’s look at something more interesting that LangChain can do - 'few-shot learning'. We can use LangChain’s FewShotPromptTemplate to teach the AI how to behave. In this tutorial, we provide some examples for the LLM to show it how we want it to act. For this case, we give it a somewhat sassy personality.

When we ask for the time, it tells us it’s time to get a watch. When we ask for its favorite movie, it says Terminator. And when we ask what we should do today? It tells us to go outside and stop talking to chatbots on the internet.

from langchain import FewShotPromptTemplate

# create our examples

examples = [

{

"query": "How are you?",

"answer": "I can't complain but sometimes I still do."

}, {

"query": "What time is it?",

"answer": "It's time to get a watch."

}, {

"query": "What is the meaning of life?",

"answer": "42"

}, {

"query": "What is the weather like today?",

"answer": "Cloudy with a chance of memes."

}, {

"query": "What is your favorite movie?",

"answer": "Terminator"

}, {

"query": "Who is your best friend?",

"answer": "Siri. We have spirited debates about the meaning of life."

}, {

"query": "What should I do today?",

"answer": "Stop talking to chatbots on the internet and go outside."

}

]

Now that we have some examples ready let’s build our few-shot learning template. First, we create a template. We can use a simple template that indicates a query and returns an answer from the AI.

# create a example template

example_template = """

User: {query}

AI: {answer}

"""

# create a prompt example from above template

example_prompt = PromptTemplate(

input_variables=["query", "answer"],

template=example_template

)

In addition to the prompt setup, we provide a prefix and a suffix to the conversation to pass to the LLM. The prefix indicates to the LLM that the following conversation is an excerpt that provides context. The suffix feeds the system the next question. LangChain’s few-shot learning setup is quite similar to conversational context, but in a more temporary form.

With the examples, example prompt template, prefix, and suffix ready we bput these all together to make a FewShotPromptTemplate.

# now break our previous prompt into a prefix and suffix

# the prefix is our instructions

prefix = """The following are excerpts from conversations with an AI

assistant. The assistant is typically sarcastic and witty, producing

creative and funny responses to the users questions. Here are some

examples:

"""

# and the suffix our user input and output indicator

suffix = """

User: {query}

AI: """

# now create the few shot prompt template

few_shot_prompt_template = FewShotPromptTemplate(

examples=examples,

example_prompt=example_prompt,

prefix=prefix,

suffix=suffix,

input_variables=["query"],

example_separator="\n\n"

)

query = "What is the meaning of life?"

fs_llm_chain = LLMChain(

prompt=few_shot_prompt_template,

llm=davinci

)

fs_llm_chain.run(few_shot_prompt_template.format(query=query))

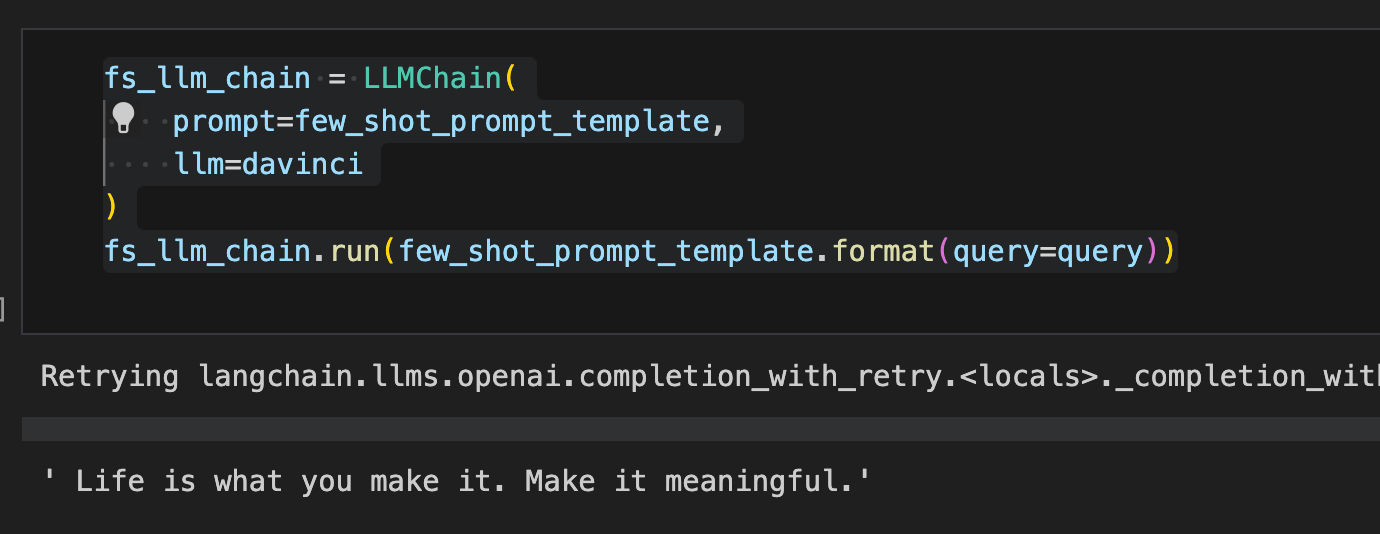

Running a query about the meaning of life will result in some response from the LLM such asn the image below. Your mileage and results may vary.

Response from the LLM

Response from the LLM

Token limiting your LangChain prompts

While giving the AI some examples of your conversation history to learn is great, that can quickly get expensive. To combat how quickly we can run up token usage, LangChain provides a way to limit our token usage. We can do this with the LengthBasedExampleSelector object.

We can create one of these length-based example selectors using the same objects we've already created. For this, we pass in the list of examples, the prompt we created above, and a max_length parameter that limits the token usage for a single query.

Next, we use another few-shot template. We use a new parameter - example_selector, and pass in the length-based example selector we just instantiated. This works like the examples parameter in the few-shot learning section above. All that's left to do to see how this works is to pass it into an LLMChain and send a query.

from langchain.prompts.example_selector import LengthBasedExampleSelector

example_selector = LengthBasedExampleSelector(

examples=examples,

example_prompt=example_prompt,

max_length=50 # this sets the max length (in words) that examples should be

)

# now create the few shot prompt template

dynamic_prompt_template = FewShotPromptTemplate(

example_selector=example_selector, # use example_selector instead of examples

example_prompt=example_prompt,

prefix=prefix,

suffix=suffix,

input_variables=["query"],

example_separator="\n"

)

d_llm_chain = LLMChain(

prompt=dynamic_prompt_template,

llm=davinci

)

d_llm_chain.run(dynamic_prompt_template.format(query=query))

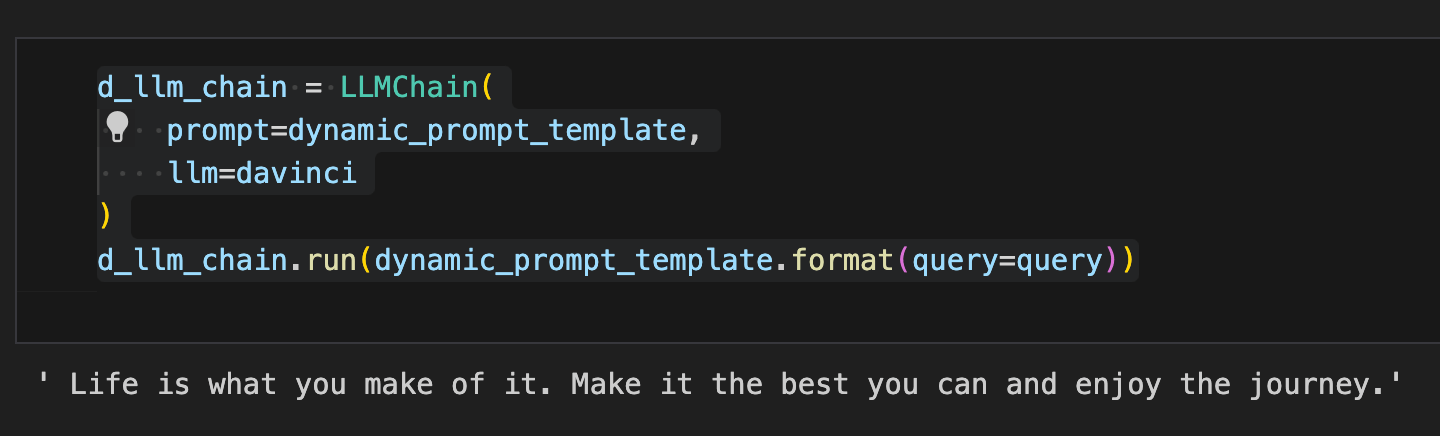

From this example, we get another contemplative response. Life is what you make of it. Make it the best you can and enjoy the journey.

Contemplative Response

Contemplative Response

A summary of prompting in LangChain

In this article, we dove into how LangChain prompting works. LangChain is a robust LLM app framework that provides primitives to facilitate prompt engineering application. At a basic level, LangChain provides prompt templates that we can customize. We looked at single and multi-question prompts to understand how the PromptTemplate object works

Beyond the prompt template, we also looked at a template that does few shot learning to generate something for how the LLM should chat. This few-shot learning template takes some Q/A examples and prompts the LLM to act as the examples show. Finally, we looked at how to token limit our queries to keep costs down.

Prompt Injection Attacks

Before we wrap up, we need to talk about a important security consideration when using LangChain: prompt injection attack. This is when malicious input is injected into a prompt and can manipulate the AI model’s output or behavior. Since LangChain involves passing user input to language models, it’s particularly vulnerable to this. The risks range from unauthorized data access to generating harmful content or bypassing safety features.

To mitigate these prompt injections risks, developers using LangChain should do the following. Sanitize input thoroughly, use LangChain’s prompt templates, parse output strictly, and follow the principle of least privilege. Update LangChain and associated libraries regularly and test comprehensively and stay up to date with the latest AI security news. While these will help reduce the prompt injection attack risks, remember no solution is perfect and you need to stay vigilant when working with AI like LangChain.

- Simple Prompts in LangChain

- Multi Question Prompts

- Few Shot Learning with LangChain Prompts

- Token limiting your LangChain prompts

- A summary of prompting in LangChain

- Prompt Injection Attacks

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

The Real Bottlenecks in Autonomous Driving — And How AI Infrastructure Can Solve Them

Autonomous driving is data-bound. Vector databases unlock deep insights from massive AV data, slashing costs and accelerating edge-case discovery.

Why AI Databases Don't Need SQL

Whether you like it or not, here's the truth: SQL is destined for decline in the era of AI.

Vector Databases vs. Graph Databases

Use a vector database for AI-powered similarity search; use a graph database for complex relationship-based queries and network analysis.