Massive Multitask Language Understanding: The Benchmark for Multitask AI Models

What is MMLU?

MMLU (Massive Multitask Language Understanding) is a benchmark designed to evaluate the multitask capabilities of language models across diverse subjects. It covers 57 tasks spanning topics like humanities, STEM, social sciences, and more, with questions ranging from elementary to professional levels. MMLU assesses a model's ability to generalize knowledge and reason across a wide variety of domains, making it a comprehensive test for advanced language models. High performance on MMLU indicates a model’s ability to handle complex, real-world problems that require broad knowledge and contextual understanding.

How does MMLU Work?

MMLU uses multiple-choice questions to assess how well a language model processes and responds to diverse types of information. Each question challenges the model in one or more of three main areas: reasoning, knowledge retrieval, and comprehension.

Let’s discuss how these components are tested:

Reasoning

Reasoning tasks in MMLU require the model to apply logical thinking and deductive skills to arrive at the correct answer. These questions are not merely about recalling facts but analyzing the problem, identifying patterns, or drawing conclusions based on the information provided. These tasks test the model’s critical thinking ability and mimic human-like decision-making.

For example:

A reasoning question in math might require solving a complex equation or understanding geometric relationships.

In a humanities subject, a question might ask the model to interpret a historical event and identify its implications.

Knowledge Retrieval

Knowledge retrieval evaluates the model’s capacity to access and use stored information across various subjects. The focus here is on factual accuracy and how well the model can draw relevant information from its training data, simulating how a human expert retrieves knowledge from memory. The questions were collected from publicly available sources and span topics such as science, law, and technology.

For instance:

In a biology question, the model might need to identify the function of a cell organelle.

A question in history could ask about significant events in a particular period.

Comprehension

Comprehension tasks require the model to interpret and understand the given material, whether a text passage, dataset, or problem statement. Comprehension questions ensure that the model isn’t just memorizing facts but can also synthesize and interpret information meaningfully.

For example:

In a literature question, the model might analyze a short excerpt to infer the author’s intent or tone.

A medical question might involve interpreting symptoms to identify a possible diagnosis.

Question Structure and Complexity in MMLU

Each question in MMLU has four answer options, labeled A through D, from which the model must select the single correct one, so random guessing scores about 25%. The questions span several levels of difficulty, so the benchmark evaluates a model’s abilities across a wide intellectual spectrum:

Basic Concepts: These questions cover foundational knowledge typically taught at the elementary or high school level, such as basic math, physics, or history.

Intermediate Understanding: At this level, questions can cover undergraduate-level topics and require a deeper grasp of the subject matter.

Advanced Expertise: These questions simulate professional-level challenges, such as diagnosing medical cases, solving engineering problems, or interpreting legal principles.
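
The question format described above can be sketched in a few lines of Python. This is a minimal illustration, not the official evaluation harness: the `Question` class, the prompt wording, and the rule of scoring a reply by its first character are all assumptions made for the example.

```python
from dataclasses import dataclass

LETTERS = "ABCD"  # MMLU questions have four options


@dataclass
class Question:
    subject: str
    stem: str
    choices: list[str]  # exactly four answer options
    answer: int         # index of the correct option (0-3)


def format_prompt(q: Question) -> str:
    """Render a question as a lettered multiple-choice prompt (hypothetical wording)."""
    lines = [f"The following is a multiple-choice question about {q.subject}.", "", q.stem]
    lines += [f"{LETTERS[i]}. {choice}" for i, choice in enumerate(q.choices)]
    lines.append("Answer:")
    return "\n".join(lines)


def is_correct(q: Question, model_output: str) -> bool:
    """Score a reply by its first non-space character, which should be a letter A-D."""
    reply = model_output.strip().upper()
    return bool(reply) and reply[0] == LETTERS[q.answer]


# A made-up example question, not taken from the benchmark.
example = Question(
    subject="biology",
    stem="Which organelle produces most of a eukaryotic cell's ATP?",
    choices=["Ribosome", "Mitochondrion", "Golgi apparatus", "Lysosome"],
    answer=1,
)
prompt = format_prompt(example)
print(prompt)
print(is_correct(example, " b"))
```

Scoring by a single letter keeps grading unambiguous, which is one reason multiple-choice benchmarks are easy to automate.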

Tasks Included in MMLU

The benchmark includes tasks that go beyond simple fact-checking or memorization. Models are tested on:

Critical Thinking: Applying logic and reasoning to find the best solution.

Domain-Specific Knowledge: Answering specialized questions in biology, physics, or finance.

Multitask Adaptability: Switching easily between topics without losing performance.

Importance of MMLU in AI Development

MMLU is a key benchmark in AI research because it evaluates a model’s ability to generalize knowledge across multiple domains.

Generalization Across Domains

MMLU ensures that AI models are not confined to performing well in just one area but can transition seamlessly between unrelated topics. For instance, a model might answer a biology question and then tackle a problem in economics. This ability to generalize is critical for creating AI systems that can handle multi-task environments, such as customer service or educational tools, where diverse knowledge is required.

Beyond Narrow Tasks

AI systems tested with MMLU must go beyond simple tasks like summarizing text or detecting sentiment. They are challenged to perform more complex reasoning, retrieve specific knowledge, and interpret nuanced scenarios. For example, instead of just recalling facts, a model might need to apply logic to solve a physics problem or infer relationships in a historical context.

Pushing Current AI Models

MMLU challenges the limits of today’s language models by including professional-level questions in fields like medicine, engineering, and law. These questions often require specialized expertise or reasoning that goes beyond surface-level understanding. For example:

A legal question might ask about the implications of a constitutional principle.

A medical question might require diagnosing symptoms based on limited information.

Real-World Applications of MMLU

Healthcare: Models that score well on MMLU’s medical subjects can assist doctors by analyzing patient symptoms, suggesting potential diagnoses, and summarizing medical literature for treatment options.

Education: Personalized learning systems provide tailored explanations for subjects like math or history, while AI creates quizzes and study materials suited to different learning levels.

Legal Industry: Models with strong results on MMLU’s law subjects can aid in reviewing contracts, summarizing key clauses, and conducting legal research by quickly retrieving relevant case law or statutes. They can also help simplify complex legal terms for better client understanding.

Customer Support: Multitask AI agents manage diverse inquiries, from troubleshooting technical issues to addressing billing concerns while providing empathetic and accurate responses.

Business and Finance: Models that perform well across MMLU’s business and economics subjects can assist in creating financial summaries, identifying fraudulent activities, and drafting client proposals that help businesses make informed decisions.

Science and Research: Models with broad cross-domain knowledge can support researchers by synthesizing data across fields, interpreting results, and generating technical summaries for grant proposals or publications.

Government Services: Models validated on MMLU can enhance public services by providing real-time information on legal rights, analyzing public policies for gaps, and supporting emergency response efforts with resource coordination and accurate updates.

Limitations of MMLU

Domain Bias: The benchmark may favor domains or topics commonly represented in AI training data, making it harder to assess true generalization.

Multiple-Choice Format: The reliance on multiple-choice questions limits the assessment of open-ended reasoning and creativity in AI systems.

Limited Real-World Context: While diverse, MMLU’s questions lack dynamic, real-world scenarios where additional context or interaction may be required.

Scalability for Updates: Adding new subjects or updating datasets to reflect evolving knowledge can be resource-intensive and challenging to maintain.

Language and Cultural Scope: The benchmark is written in English, which may overlook linguistic and cultural diversity.

Artificial Score Inflation: Models trained directly on similar datasets may show artificially high performance without genuinely improved understanding.

Assessment of Depth vs. Breadth: The benchmark tests the breadth of knowledge but may not fully capture the depth of understanding in specific domains.
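
The score-inflation concern above is often investigated by checking whether benchmark questions appear verbatim in training text. The following is a rough sketch of one such check, based on word n-gram overlap. The function names, the choice of n = 5, and the sample strings are all assumptions for illustration. This is not a method prescribed by MMLU.

```python
import re


def ngrams(text: str, n: int = 5) -> set:
    """Return the set of word n-grams in text, ignoring case and punctuation."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(question: str, document: str, n: int = 5) -> float:
    """Fraction of the question's n-grams that also occur in the document."""
    q = ngrams(question, n)
    if not q:
        return 0.0
    return len(q & ngrams(document, n)) / len(q)


# Made-up strings standing in for a benchmark question and two training documents.
question = "Which organelle produces most of the ATP in a eukaryotic cell"
leaked = "Study notes: which organelle produces most of the ATP in a eukaryotic cell? The mitochondrion."
clean = "Ribosomes assemble proteins from amino acids using messenger RNA as a template."

print(overlap_ratio(question, leaked))
print(overlap_ratio(question, clean))
```

A high ratio suggests the question may have been seen during training, though paraphrased leaks would slip past an exact-match check like this one.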

Difference Between MMLU and Single-Task Benchmarks

Understanding the differences between MMLU and single-task benchmarks highlights why MMLU is a more comprehensive tool for evaluating AI models. While single-task benchmarks focus on specific abilities, MMLU challenges models to generalize and perform across various subjects and tasks. The table below provides a clear comparison.

| Feature | MMLU | Single-Task Benchmarks (e.g., GLUE, SuperGLUE) |
|---|---|---|
| Scope of Evaluation | Tests 57 diverse subjects spanning sciences, humanities, and professional fields. | Focuses on specific tasks like sentiment analysis, paraphrasing, or question answering. |
| Task Type | Multitask: Requires transitioning between unrelated domains and tasks. | Single-task: Each benchmark evaluates performance on a specific, narrow problem. |
| Real-World Relevance | Reflects real-world scenarios where versatility across domains is needed. | Limited to specific, controlled contexts that may not generalize to complex scenarios. |
| Question Format | Primarily multiple-choice, covering factual knowledge, reasoning, and comprehension. | Includes various formats like sentence similarity, text classification, and QA pairs. |
| Knowledge Level | Questions range from high school to advanced professional expertise. | Generally focused on standard levels of language understanding or specific datasets. |
| Generalization Testing | Evaluates a model's ability to adapt to unseen tasks or domains. | Tests model performance within a predefined task scope without significant domain shifts. |
| Example Benchmarks | MMLU | GLUE, SuperGLUE, SQuAD, MNLI, CoLA. |

Table: MMLU vs. Single-Task Benchmarks

When to Use the MMLU Benchmark

The MMLU benchmark is relevant to use in the following scenarios:

Evaluating Generalization Capabilities: Use MMLU to assess how well an AI model can generalize its knowledge across multiple subjects. It’s useful for identifying whether the model can handle diverse tasks beyond the domains it was explicitly trained on.

Testing Multitask Performance: If your goal is to develop AI systems that can switch seamlessly between different tasks or domains, MMLU is the right benchmark. It evaluates multitask adaptability by challenging models with questions from varied disciplines like math, history, and law.

Measuring Reasoning and Comprehension: MMLU is highly effective for testing a model’s reasoning skills, comprehension abilities, and capacity for knowledge retrieval. It’s valuable for tasks that require logical thinking, understanding context, and problem-solving.

Benchmarking AI Progress: Use MMLU to compare your model’s performance against other state-of-the-art models or human baselines. It provides a standardized metric for understanding where your model stands in terms of real-world multitasking capabilities.

Building Multidomain Applications: For AI applications that need to handle diverse and complex queries—such as customer support bots, educational tools, or knowledge assistants—MMLU helps ensure the model is robust and versatile enough for practical use cases.

Identifying Weaknesses in Models: MMLU is an excellent diagnostic tool to uncover gaps in your model’s understanding. For instance, it can reveal whether your model struggles with specific subjects, reasoning tasks, or adapting to new domains.
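
As a rough sketch of the diagnostic use described above, the snippet below groups graded answers by subject, computes per-subject accuracy, and flags subjects at or below the 25% random-guess level for four-option questions. The result records are made up for illustration, and the helper name `accuracy_by_subject` is hypothetical.

```python
from collections import defaultdict

CHANCE = 0.25  # expected accuracy from random guessing among four options


def accuracy_by_subject(results):
    """results: iterable of (subject, is_correct) pairs -> {subject: accuracy}."""
    totals = defaultdict(lambda: [0, 0])  # subject -> [correct, total]
    for subject, correct in results:
        totals[subject][0] += int(correct)
        totals[subject][1] += 1
    return {subject: c / t for subject, (c, t) in totals.items()}


# Made-up graded answers; a real run would have many questions per subject.
results = [
    ("college_physics", True), ("college_physics", False),
    ("professional_law", False), ("professional_law", False),
    ("high_school_biology", True), ("high_school_biology", True),
]

per_subject = accuracy_by_subject(results)
macro_avg = sum(per_subject.values()) / len(per_subject)  # each subject weighted equally
weakest = min(per_subject, key=per_subject.get)
at_or_below_chance = [s for s, acc in per_subject.items() if acc <= CHANCE]

print(per_subject)
print(macro_avg, weakest, at_or_below_chance)
```

Reporting the per-subject breakdown alongside the average makes it harder for a strong subject to hide a weak one.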

Evaluating Language Models with MMLU and Enhancing Them with RAG

The MMLU benchmark is an essential tool for assessing the capabilities of large language models (LLMs). It provides a standardized framework to measure a model’s performance across diverse tasks and facilitates direct comparisons between models. By highlighting strengths like reasoning and factual recall and exposing weaknesses such as struggles with complex reasoning or domain-specific tasks, MMLU helps researchers identify areas for improvement. These insights enable fine-tuning, improving a model’s understanding and content generation capabilities.

However, while MMLU is valuable for improving LLMs, it’s not a cure-all. LLMs have inherent limitations, regardless of how well they perform on benchmarks. They are trained on static, offline datasets and lack access to real-time or domain-specific information. This can lead to hallucinations, where models generate inaccurate or fabricated answers. These shortcomings become even more problematic when addressing proprietary or highly specialized queries.

Introducing RAG: A Solution to Enhance LLM Responses

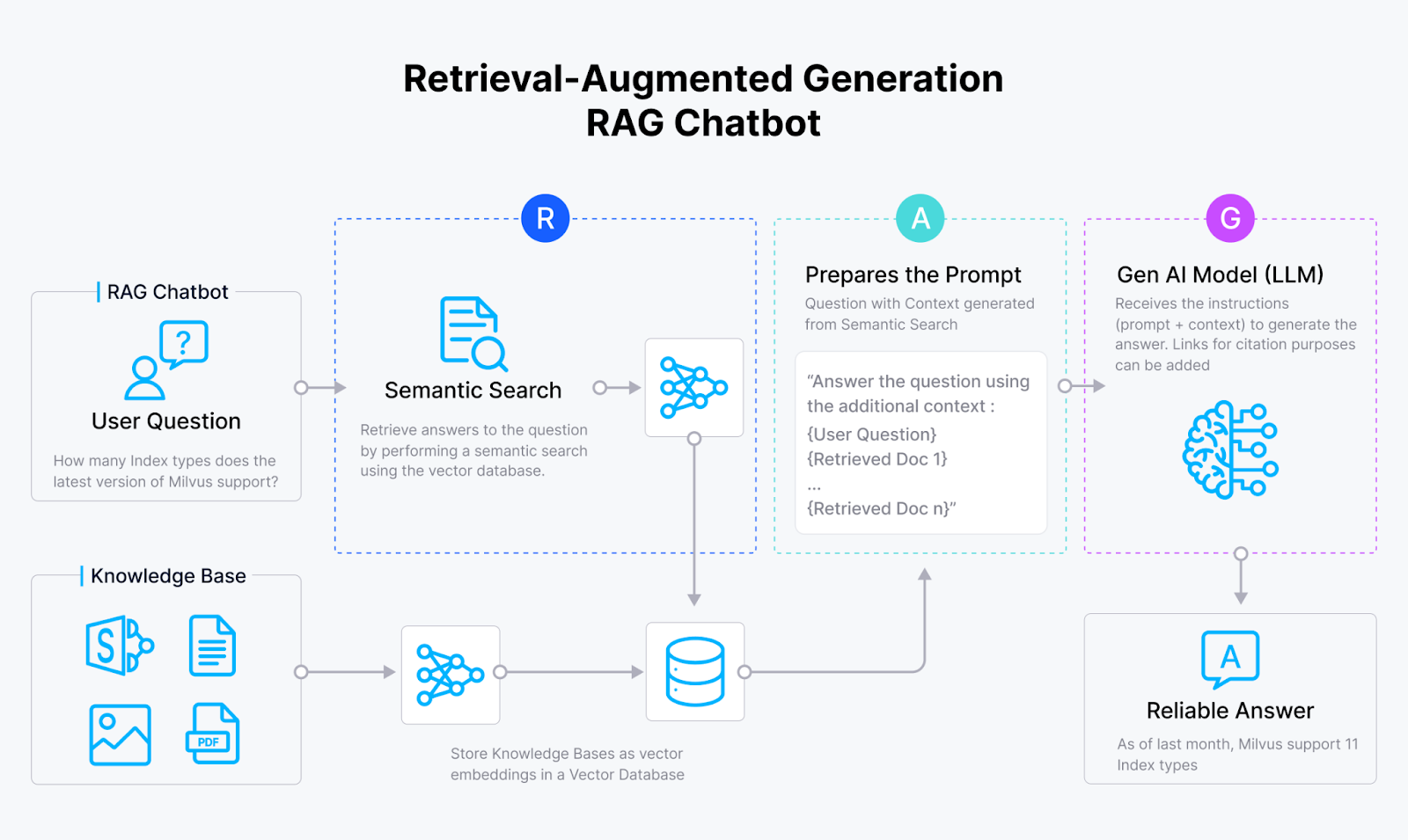

To address these challenges, Retrieval-Augmented Generation (RAG) offers a powerful solution. RAG enhances large language models (LLMs) by combining their generative capabilities with the ability to retrieve domain-specific information from external knowledge bases stored in a vector database like Milvus or Zilliz Cloud. When a user asks a question, the RAG system searches the database for relevant information and uses this information to generate a more accurate response. Let’s take a look at how the RAG process works.

Figure: RAG workflow

A RAG system usually consists of three key components: an embedding model, a vector database, and an LLM.

The embedding model converts documents into vector embeddings, which are stored in a vector database like Milvus.

When a user asks a question, the system transforms the query into a vector using the same embedding model.

The vector database then performs a similarity search to retrieve the most relevant information. This retrieved information is combined with the original question to form a "question with context," which is then sent to the LLM.

The LLM processes this enriched input to generate a more accurate and contextually relevant answer.

This approach bridges the gap between static LLMs and real-time, domain-specific needs.
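
The retrieval steps above can be sketched with standard-library Python only. This is a toy under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector database such as Milvus, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self):
        self.rows = []  # list of (vector, text) pairs

    def insert(self, text: str) -> None:
        self.rows.append((embed(text), text))

    def search(self, query: str, top_k: int = 1) -> list:
        """Embed the query with the same model and return the most similar texts."""
        qv = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(qv, row[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


def build_prompt(question: str, store: InMemoryVectorStore, top_k: int = 1) -> str:
    """Combine retrieved context with the question to form the 'question with context'."""
    context = "\n".join(store.search(question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


store = InMemoryVectorStore()
store.insert("Milvus is an open-source vector database built for similarity search.")
store.insert("MMLU covers 57 subjects with four-option multiple-choice questions.")

prompt = build_prompt("How many subjects does MMLU cover?", store)
print(prompt)  # this string is what would be sent to the LLM
```

In a production system, the embedding function and the store would be replaced by a trained embedding model and a vector database, while the overall flow stays the same.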

Conclusion

MMLU is a powerful benchmark for testing the multitasking abilities of AI models across diverse subjects and challenges. It evaluates reasoning, knowledge retrieval, and comprehension across 57 subjects, from elementary to professional level. Because a high benchmark score does not remove an LLM’s dependence on static training data, vector databases like Milvus play a complementary role: they let RAG systems retrieve current, domain-specific information at query time.

FAQs On MMLU

What is MMLU? MMLU stands for Massive Multitask Language Understanding, a benchmark designed to test AI models on their ability to handle tasks across multiple domains and subjects.

Why is MMLU important for AI development? MMLU evaluates a model's ability to generalize and perform in diverse, real-world scenarios, helping researchers create more adaptable and versatile AI systems.

What types of tasks does MMLU include? MMLU includes tasks that test reasoning, knowledge retrieval, and comprehension across subjects like science, humanities, law, and medicine.

What are the challenges of MMLU? MMLU faces challenges such as domain bias, limited real-world context in its questions, and scalability for updating datasets to reflect evolving knowledge.
