Understanding Feedforward Neural Networks (FNNs): Structure, Benefits, and Real-World Application

TL;DR:

A Feedforward Neural Network (FNN) is a type of artificial neural network in which information flows in a single direction—from the input layer through hidden layers to the output layer—without loops or feedback. This straightforward structure is often used for pattern recognition tasks like image and speech classification. Compared to Convolutional Neural Networks (CNNs), which are specialized for processing grid-like data such as images using filters to capture spatial features, FNNs don’t handle spatial relationships as effectively. Unlike Recurrent Neural Networks (RNNs), which include feedback loops to manage sequence data (like text or time series), FNNs lack memory, making them better suited for static data.

Have you ever wondered how image recognition software distinguishes dogs from cats, or how autonomous vehicles decide what to do based on their environment? Artificial intelligence (AI), and neural networks in particular, is the engine behind these achievements. The feedforward neural network (FNN) is the simplest of these architectures, and many of the AI applications we use today build on its ideas.

Here, we uncover the feedforward neural network’s structure, how it functions, its benefits and challenges, and its wide-ranging applications.

What is a Feedforward Neural Network (FNN)?

A Feedforward Neural Network (FNN) is a type of neural network where information flows in a single path, starting at the input layer, passing through hidden layers, and ending at the output layer. Since the data only moves forward, FNNs work well for tasks that handle each input separately, such as identifying categories (classification) or predicting values (regression).

For instance, in a credit scoring system banks use, an FNN can analyze users' financial profiles—such as income, credit history, and spending habits—to determine their creditworthiness. Each piece of information flows through the network’s layers, where various calculations are made to produce a final score. Unlike other network types, FNNs do not retain information from previous inputs, which makes them ideal for scenarios where each decision can be made in isolation.

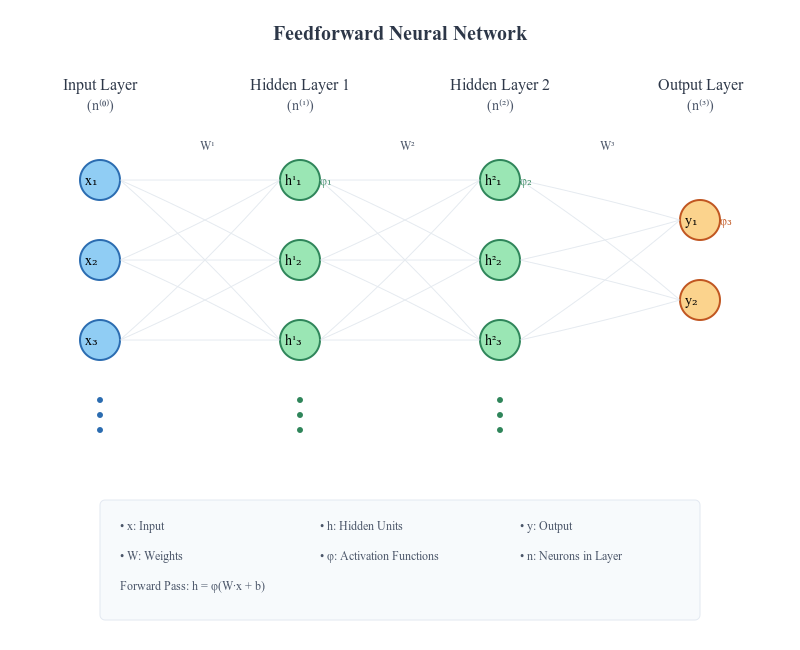

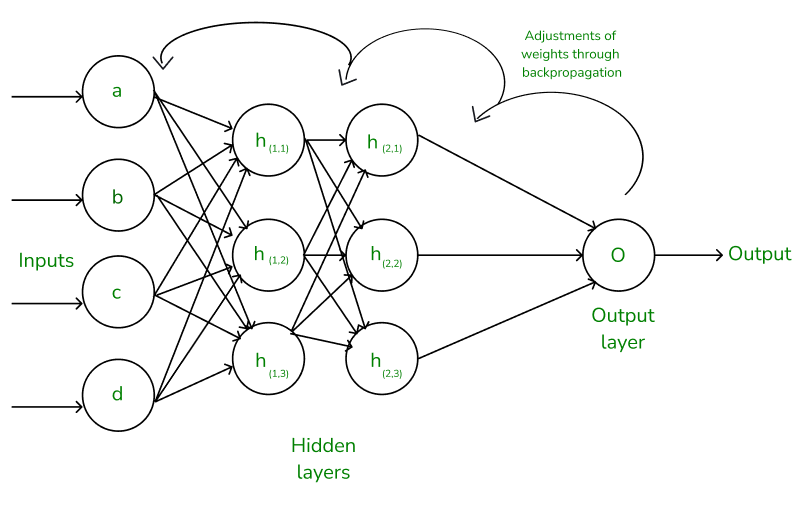

Feedforward Neural Networks Architecture and Layers

Feedforward Neural Networks rely on a structured, layered design where data flows sequentially through each layer.

Figure 1: Feedforward Neural Network Architecture

Input Layer: Data enters the network in the input layer. Each neuron here represents one feature of the data. For example, if the input is a 28x28 grayscale image, this layer will have 784 neurons (one for each pixel).

Hidden Layers: The hidden layers sit between the input and output layers, transforming the data through a dense network of neurons. Each hidden-layer neuron is connected to every neuron in the preceding layer and in the following layer. Each neuron computes a weighted sum of its inputs, adds a bias, and then applies an activation function. Layer by layer, the neurons extract progressively more abstract features from the data, which helps the network identify patterns.

Output Layer: The output layer generates the final result. In classification tasks, each neuron represents a potential class and outputs a probability score for each one. In regression tasks, it might predict a continuous value, such as temperature or stock price.
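To make the layer sizes concrete, here is a minimal NumPy sketch of the parameters such a network would hold. The 784 inputs come from the 28x28 image example above; the hidden layer of 128 neurons, the 10 output classes, and the small random initialization are assumptions chosen only for illustration.

```python
import numpy as np

# Hypothetical layer sizes: 784 input features (28x28 pixels),
# one hidden layer of 128 neurons, and 10 output classes.
layer_sizes = [784, 128, 10]

rng = np.random.default_rng(0)

# One weight matrix and one bias vector per pair of adjacent layers.
weights = [
    rng.normal(0.0, 0.01, size=(n_in, n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

# Total number of trainable parameters in this fully connected network.
n_params = sum(W.size + b.size for W, b in zip(weights, biases))

print([W.shape for W in weights])  # [(784, 128), (128, 10)]
print(n_params)                    # 101770
```

Because every neuron connects to every neuron in the next layer, the parameter count grows with the product of adjacent layer sizes, which is why large inputs quickly make fully connected networks expensive.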

Flow of Data Through the Network

Data in an FNN follows a systematic path, beginning at the input layer and progressing through the hidden layers before arriving at the output layer. The input layer passes the raw feature values to the first hidden layer without transforming them. In each hidden layer, neurons combine their inputs using weights and biases and apply an activation function, so each layer refines the representation produced by the one before it. By the time data reaches the output layer, the network has generated a prediction or classification.

For example, in an image recognition task, the input layer holds the pixel values of an image. Hidden layers then identify features like shapes and textures, and the output layer ultimately assigns a probability to each category, such as “cat” or “dog.” This clear, step-by-step flow allows FNNs to process data without remembering past inputs.
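The forward flow described here can be sketched in a few lines of NumPy. This is an illustrative sketch, not a trained model: the layer sizes, the ReLU hidden activation, the softmax output, and the random weights are all assumptions, and the `forward` helper is a hypothetical name.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    shifted = z - z.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def forward(x, params):
    """One forward pass: ReLU in hidden layers, softmax at the output."""
    a = x
    for W, b in params[:-1]:
        a = relu(a @ W + b)
    W_out, b_out = params[-1]
    return softmax(a @ W_out + b_out)

rng = np.random.default_rng(0)
sizes = [784, 64, 2]  # hypothetical: flattened image -> hidden -> ("cat", "dog")
params = [
    (rng.normal(0.0, 0.05, size=(m, n)), np.zeros(n))
    for m, n in zip(sizes[:-1], sizes[1:])
]

image = rng.random((28, 28))  # stand-in for a grayscale image
x = image.reshape(1, -1)      # flatten to shape (1, 784)
probs = forward(x, params)

print(probs.shape)  # (1, 2)
```

Each row of `probs` sums to 1, so it can be read as one probability per category. With untrained random weights the values carry no meaning yet; training is what makes them useful.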

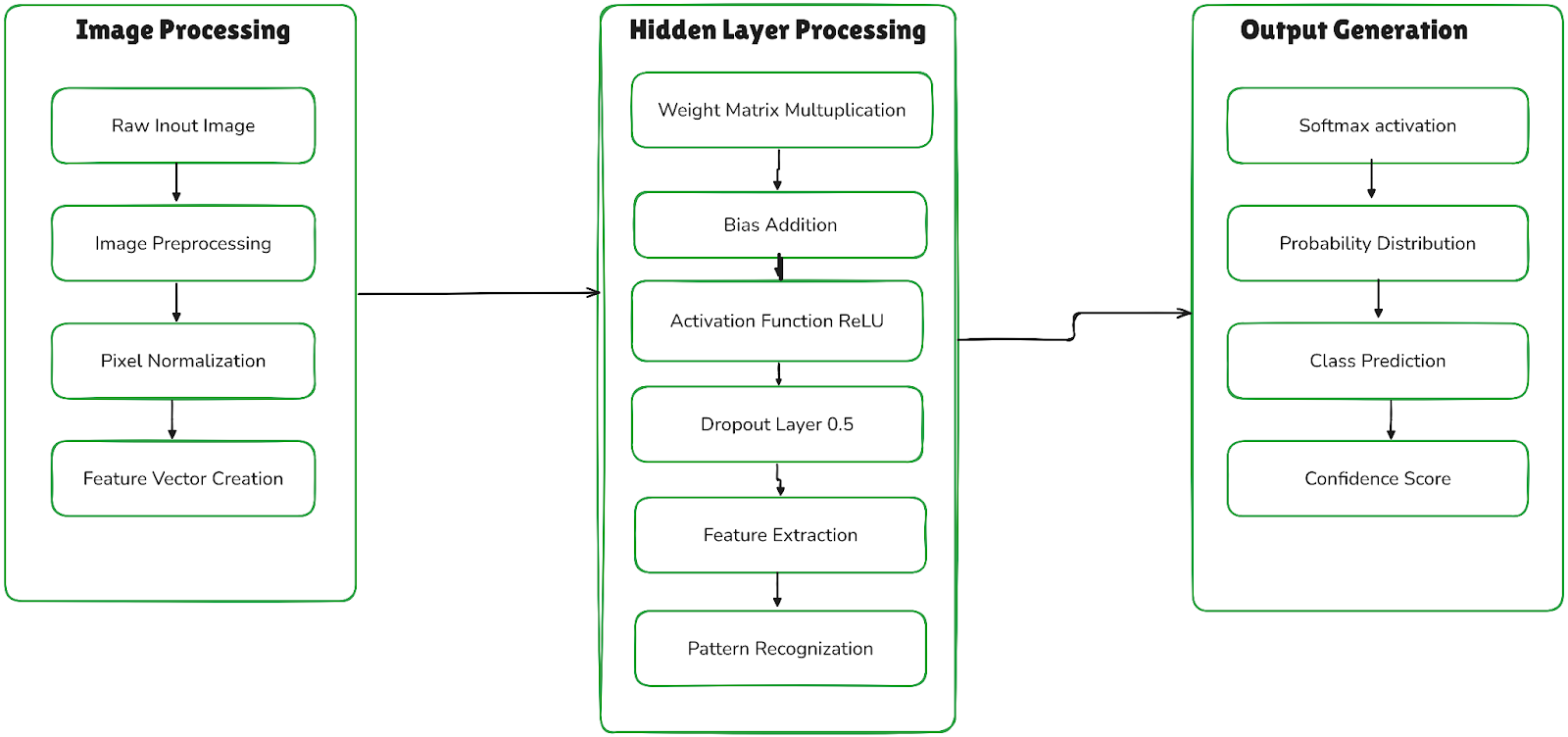

Figure 2: Data Flow and Processing in Neural Networks

Key Concepts in Feedforward Neural Networks

To understand how FNNs work, let’s look at the main components that enable them to learn and make predictions.

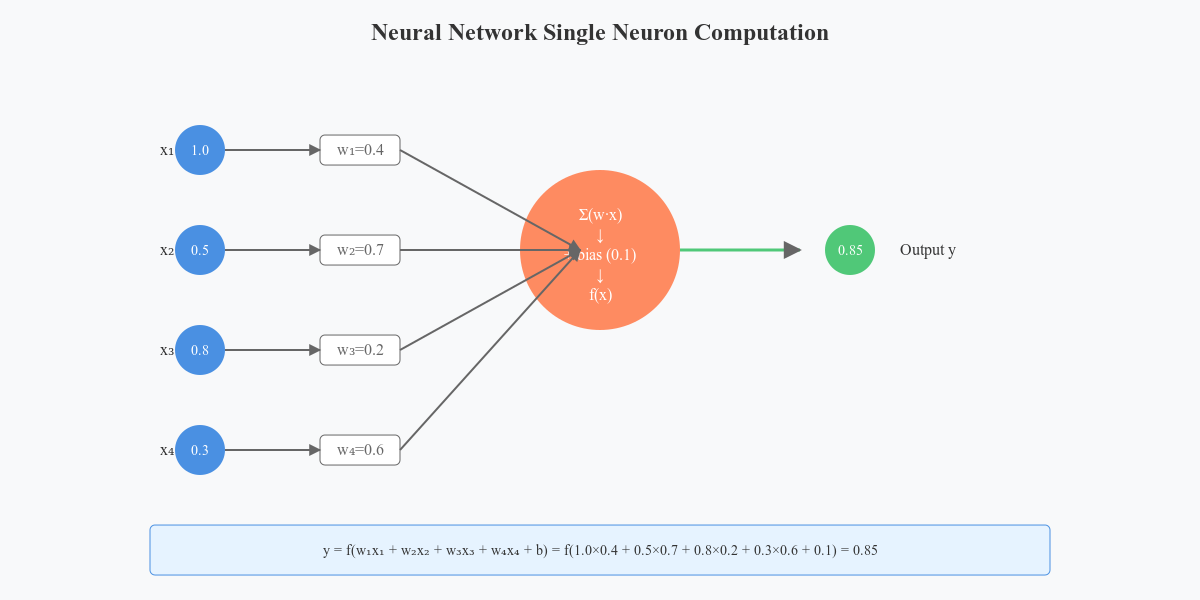

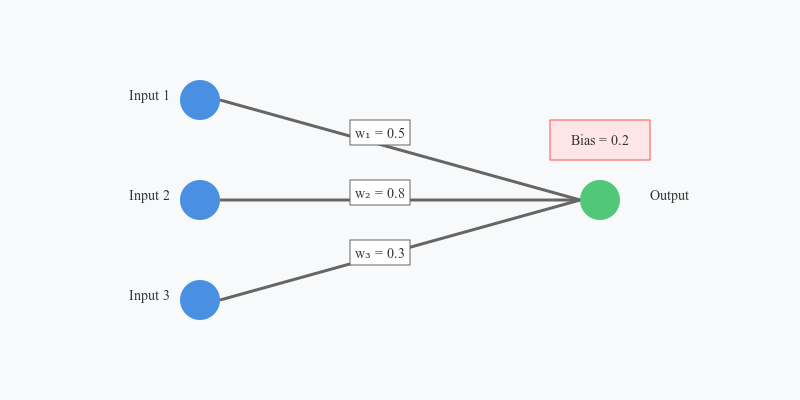

Neurons: The Core Units of Computation

Neurons are the basic building blocks of a neural network. Each neuron receives inputs from the previous layer, computes a weighted sum, and adds a bias (weights and biases are explained in the next section). It then applies an activation function, which adds non-linearity and lets the network identify more intricate patterns. As neurons are connected across several layers, the network builds up an increasingly detailed representation of the relationships in the input, which leads to its final output.
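As a small worked example, the computation of a single neuron might look like the following. The input values, weights, bias, and the choice of a sigmoid activation are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

x = np.array([0.5, -1.0, 2.0])  # inputs from the previous layer (made-up values)
w = np.array([0.4, 0.3, -0.2])  # one weight per input (made-up values)
b = 0.1                         # bias (made-up value)

z = np.dot(w, x) + b  # weighted sum plus bias: 0.2 - 0.3 - 0.4 + 0.1
a = sigmoid(z)        # activation function adds non-linearity

print(round(z, 4))  # -0.4
print(round(a, 4))  # 0.4013
```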

Figure 3: Neural Network Single Neuron Computation

Weights and Biases: Parameters for Learning

Weights and biases are the core parameters the network uses to learn:

Weights: Weights are parameters in the network that define the influence or importance of each input in relation to a neuron. These values determine how strongly an input affects the neuron’s output. The network continuously adjusts weights during training to minimize the gap between predicted outputs and actual results, refining its predictive accuracy.

Biases: A bias is an additional parameter added to the weighted sum of inputs, allowing neurons to activate even when inputs are zero. This adjustment helps the network capture more patterns in the data, and like weights, biases are fine-tuned during training to improve performance.
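The effect of both parameters can be seen on a single neuron. In this sketch the weights, the bias of 0.5, and the ReLU activation are arbitrary assumptions chosen to keep the arithmetic easy to follow.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

w = np.array([0.8, -0.5, 0.3])  # made-up weights

# Weights scale each input's influence on the neuron.
x = np.array([1.0, 1.0, 1.0])
contributions = w * x  # per-input contribution to the weighted sum

# With all-zero inputs, the weighted sum is zero no matter what the weights are.
x_zero = np.zeros(3)
without_bias = float(relu(np.dot(w, x_zero)))     # 0.0
with_bias = float(relu(np.dot(w, x_zero) + 0.5))  # 0.5

print(contributions)            # [ 0.8 -0.5  0.3]
print(without_bias, with_bias)  # 0.0 0.5
```

The bias shifts the point at which the neuron activates, independently of the inputs.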

Figure 4: Weights and Biases: Parameters for Learning

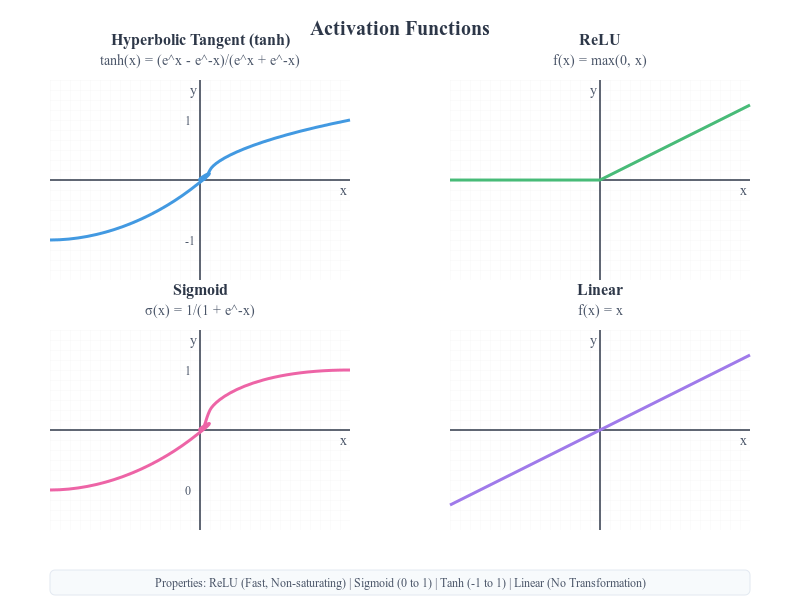

Activation Functions

Activation functions are mathematical functions applied to a neuron’s output to introduce non-linearity into the network. Without activation functions, FNNs would only be able to model linear relationships, limiting their usefulness for complex tasks.

Common activation functions include:

Sigmoid: Compresses outputs between 0 and 1. It is often used for binary classification as it provides a probability-like output.

ReLU (Rectified Linear Unit): Outputs zero for negative values and passes positive values unchanged, speeding up training and reducing the risk of vanishing gradients.

Tanh: Maps values between -1 and 1, centering the output around zero. It is often used in hidden layers to improve gradient flow.

These functions allow FNNs to capture non-linear relationships, making them more versatile for real-world data.
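The three functions are short enough to write out directly. The sketch below also checks the claim that, without an activation function, stacked layers stay linear: two weight matrices applied in sequence give the same result as a single combined matrix. The matrix shapes and random values are arbitrary.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0.0, z)

def tanh(z):
    return np.tanh(z)

z = np.array([-2.0, 0.0, 2.0])
print(sigmoid(z))  # values in (0, 1), exactly 0.5 at z = 0
print(relu(z))     # [0. 0. 2.]
print(tanh(z))     # values in (-1, 1), exactly 0 at z = 0

# Without activations, two layers collapse into one linear map.
rng = np.random.default_rng(0)
W1 = rng.normal(size=(3, 4))
W2 = rng.normal(size=(4, 2))
x = rng.normal(size=(5, 3))

two_linear_layers = (x @ W1) @ W2
one_linear_layer = x @ (W1 @ W2)
collapses = bool(np.allclose(two_linear_layers, one_linear_layer))
print(collapses)  # True
```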

Figure 5: Activation Functions

Backpropagation: Learning Through Error Correction

Backpropagation is the algorithm that computes how much each weight and bias in a neural network contributes to the prediction error. An optimizer then uses these gradients to adjust the parameters, working backward through the network, to reduce the error and improve accuracy. The process has several stages:

Calculate the Error: After the output layer produces a prediction, the network calculates the difference between the predicted output and the actual value (known as the error or loss). For classification tasks, cross-entropy loss is commonly used, while regression tasks often use mean squared error.

Backward Error Propagation: The error spreads layer by layer backward through the network. Backpropagation uses the calculus chain rule to compute the error gradient for each weight and bias.

Update the Weights and Biases: The gradients show how much, and in which direction, to change each weight and bias to reduce the error. Optimization techniques like Adam or Stochastic Gradient Descent (SGD) use them to update the parameters.

Repeat Until Convergence: This process repeats across multiple epochs, gradually reducing errors and improving accuracy.

Backpropagation enables the network to learn from mistakes and refine predictions with each iteration.
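The stages above can be sketched as a short training loop in NumPy. This is a minimal illustration under stated assumptions, not a production recipe: the XOR toy dataset, the 2-4-1 architecture with a tanh hidden layer, the mean squared error loss, the learning rate, and the use of plain full-batch gradient descent (rather than Adam or mini-batch SGD) are all choices made for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy dataset (XOR), chosen only for illustration.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Hypothetical architecture: 2 inputs -> 4 tanh hidden units -> 1 linear output.
W1 = rng.normal(0.0, 0.5, size=(2, 4))
b1 = np.zeros(4)
W2 = rng.normal(0.0, 0.5, size=(4, 1))
b2 = np.zeros(1)

lr = 0.1  # learning rate (assumed value)
losses = []

for epoch in range(2000):
    # Forward pass.
    h = np.tanh(X @ W1 + b1)
    y_hat = h @ W2 + b2

    # 1. Calculate the error (mean squared error).
    err = y_hat - y
    losses.append(float(np.mean(err ** 2)))

    # 2. Propagate the error backward using the chain rule.
    d_yhat = 2.0 * err / len(X)   # dLoss/dy_hat
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0)
    d_h = d_yhat @ W2.T
    d_z1 = d_h * (1.0 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)

    # 3. Update weights and biases (plain gradient descent).
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

# 4. Repeating over many epochs should drive the loss down.
print(losses[0], losses[-1])
```

In practice, frameworks compute these gradients automatically, but writing them out once shows where each term of the chain rule comes from.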

Figure 6: Backpropagation in Neural Networks

Comparison with Other Neural Networks

Each type of neural network—feedforward, recurrent, and convolutional—has advantages and disadvantages of its own. While CNNs and RNNs are made to address particular issues that FNNs aren't as well-suited for, FNNs are frequently used as a starting point for AI tasks.

| Feature | Feedforward Neural Networks (FNN) | Recurrent Neural Networks (RNN) | Convolutional Neural Networks (CNN) |
| --- | --- | --- | --- |
| Data Flow | One-directional flow from input to output | Cyclic, involving loops that allow data feedback | Primarily one-directional, with specialized spatial filters |
| Structure | Simple, layered with distinct input, hidden, and output layers | Layered, with temporal dependencies and memory cells | Uses convolutions and pooling layers for spatial data |
| Use Cases | Classification, regression, simple predictive tasks | Time series analysis, natural language processing (NLP) | Image recognition, object detection, spatial data |
| Memory | No memory of past inputs; each input is independent | Short-term memory allows the retention of previous inputs | No explicit memory; processes spatial data through layers |
| Complexity | Easier to implement, interpret, and train | More complex due to sequence handling | Complexity in design and computational requirements |
| Limitations | Limited contextual understanding, no memory | Susceptible to vanishing gradient problems in long sequences | Ineffective for non-spatial or sequence-based data |

Benefits and Challenges of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) have certain advantages, especially for simpler tasks that don’t rely on complex data structures or on remembering past inputs. Still, they have some limitations. Here’s a look at both aspects.

Benefits

Simplicity: FNNs have a straightforward design, processing data in one direction—from input to output. This makes them easy to understand and apply, even for beginners. With no looping or memory components, FNNs are effective for tasks where each input is processed independently.

Efficiency: Because data flows in one direction with no loops, FNNs are computationally efficient, especially compared to more intricate networks like RNNs. They are easier to train and use fewer resources, which makes them a good fit for real-time applications or systems with limited capacity.

Versatility: FNNs are adaptable and can support a range of tasks, including classification, regression, and prediction. They’re applied across finance, healthcare, and retail industries to handle diverse data types. For instance, FNNs are often used to classify images, assess text sentiment, and make reliable forecasts.

Challenges

Limited Contextual Understanding: FNNs handle each input separately, so they aren’t suited for tasks that rely on context or memory, like time series analysis or language translation, where previous inputs affect the output.

Overfitting Risk: FNNs can overfit, especially when training data is limited. Without proper regularization, they may memorize training data rather than generalize patterns, leading to poor performance on new data.

Lack of Memory Mechanism: Unlike RNNs, FNNs cannot remember past inputs, making them ineffective for tasks that need continuity, such as chatbots that respond based on prior conversation context.
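The overfitting point mentions regularization without naming a method. One common option is an L2 weight penalty, sketched below as an assumption rather than a recommendation from this article. The helper names, the example weights, and the regularization strength `lam` are hypothetical.

```python
import numpy as np

def mse(y_hat, y):
    return float(np.mean((y_hat - y) ** 2))

def l2_penalty(weights, lam):
    # lam is a hypothetical regularization strength; larger values
    # push the weights toward zero more strongly.
    return lam * sum(float(np.sum(W ** 2)) for W in weights)

# Made-up weight matrices with a few large entries.
weights = [np.array([[3.0, -4.0]]), np.array([[0.5], [0.5]])]

y_hat = np.array([1.0, 2.0])
y = np.array([1.0, 2.0])

data_loss = mse(y_hat, y)                # 0.0: predictions match exactly
penalty = l2_penalty(weights, lam=0.01)  # 0.01 * (9 + 16 + 0.25 + 0.25)
total_loss = data_loss + penalty

print(data_loss, round(penalty, 3))  # 0.0 0.255
```

Even when the training data is fit perfectly, the penalty keeps the total loss above zero, which discourages the large weights that often accompany memorization.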

Use Cases of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) have a variety of uses, especially in tasks where each data point can be treated independently, and context isn’t essential for decision-making. Here are some of their most common applications:

Image Classification

FNNs are widely used in image classification, where the network classifies images into predetermined groups by processing visual data. For instance, based on image features, FNNs can be trained to differentiate between vehicles, plants, dogs, and cats. While Convolutional Neural Networks (CNNs) are generally preferred for more complex image tasks because they capture spatial details, FNNs remain effective for simpler image classifications or situations with limited computational resources.

Sentiment Analysis of Text

FNNs can also handle natural language processing (NLP) tasks like sentiment analysis. In this case, the network is trained to label text as positive, negative, or neutral based on specific words or short phrases. Although RNNs and transformers are more adept at understanding sequences of words, FNNs perform reasonably well for sentiment analysis when the focus is on individual words or brief text snippets rather than longer passages.
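One simple way to turn text into the fixed-size input an FNN expects is a bag-of-words vector, which counts how often each vocabulary word appears. The sketch below assumes a tiny hand-picked vocabulary and whitespace tokenization. Real systems use far larger vocabularies and more careful preprocessing.

```python
import numpy as np

# Hypothetical five-word vocabulary, chosen only for illustration.
vocabulary = ["good", "great", "bad", "terrible", "not"]
index = {word: i for i, word in enumerate(vocabulary)}

def bag_of_words(text):
    """Count vocabulary words in the text, ignoring word order."""
    vec = np.zeros(len(vocabulary))
    for token in text.lower().split():
        if token in index:
            vec[index[token]] += 1.0
    return vec

x = bag_of_words("Great food but terrible service")
print(x)  # [0. 1. 0. 1. 0.]

# Word order is lost, which is why longer passages need sequence models.
same = bool(np.array_equal(bag_of_words("not good"), bag_of_words("good not")))
print(same)  # True
```

The resulting vector can be fed to the input layer directly, with one input neuron per vocabulary word.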

Fraud Detection

FNNs are frequently used in finance to detect fraudulent activity by analyzing transaction patterns. They can spot unusual patterns, like sudden spending spikes or transactions happening in unexpected places. Because they are good at classification and prediction on structured data, FNNs are an effective tool for detecting fraud promptly and managing risk.

FAQs about Feedforward Neural Networks

- Why are Feedforward Neural Networks efficient for certain applications?

FNNs' one-directional data flow and straightforward structure make them computationally efficient. They don’t require memory of past data, which simplifies their processing and makes them useful in real-time applications or scenarios with limited resources.

- What are hidden layers, and what role do they play in FNN?

Hidden layers sit between the input and output layers, transforming data through interconnected neurons. These layers apply weighted calculations and activation functions, progressively identifying patterns in the data.

- Why are activation functions used in FNNs?

Activation functions introduce non-linearity, allowing FNNs to model complex relationships in the data. Common functions include ReLU, Sigmoid, and Tanh, each suited to different types of tasks within the network.

- In what scenarios might an FNN not be the best choice?

FNNs might not be ideal for tasks that require memory or sequential context, like language translation or video processing, as they don’t retain past information.

- How do FNNs handle unstructured data, like images or text?

FNNs transform unstructured data into structured vector forms, capturing essential features. These vectors can be used for analysis, classification, or storage in vector databases for quick retrieval and comparison.
