SingleStore vs Pinecone: Choosing the Right Vector Database for Your AI Apps

What is a Vector Database?

Before we compare SingleStore and Pinecone, let's first explore the concept of vector databases.

A vector database is specifically designed to store and query high-dimensional vectors, which are numerical representations of unstructured data. These vectors encode complex information, such as the semantic meaning of text, the visual features of images, or product attributes. By enabling efficient similarity searches, vector databases play a pivotal role in AI applications, allowing for more advanced data analysis and retrieval.
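To make similarity search concrete, here is a minimal Python sketch. The three-dimensional vectors and item names are made up for illustration (real embedding models produce hundreds or thousands of dimensions), and cosine similarity is only one of several common metrics.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for this example.
embeddings = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "coffee maker": [0.0, 0.1, 0.9],
}


def most_similar(query, items):
    """Return the item name whose embedding is closest to the query."""
    return max(items, key=lambda name: cosine_similarity(query, items[name]))


query = [1.0, 0.0, 0.0]
best_match = most_similar(query, embeddings)
```

A vector database performs the same kind of comparison, but over millions or billions of stored vectors and with indexes that avoid scanning every one of them.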

Common use cases for vector databases include e-commerce product recommendations, content discovery platforms, anomaly detection in cybersecurity, medical image analysis, and natural language processing (NLP) tasks. They also play a crucial role in Retrieval Augmented Generation (RAG), a technique that enhances the performance of large language models (LLMs) by providing external knowledge to reduce issues like AI hallucinations.

There are many types of vector databases available in the market, including:

- Purpose-built vector databases such as Milvus and Zilliz Cloud (fully managed Milvus).

- Vector search libraries such as Faiss and Annoy.

- Lightweight vector databases such as Chroma and Milvus Lite.

- Traditional databases with vector search add-ons capable of performing small-scale vector searches.

SingleStore is a distributed, relational SQL database management system with vector search as an add-on, while Pinecone is a purpose-built vector database. This post compares their vector search capabilities.

SingleStore: Overview and Core Technology

SingleStore builds vector search into the database itself, so you don’t need a separate vector database in your tech stack. Vectors are stored in regular database tables and searched with standard SQL queries. For example, you can search for similar product images while filtering by price range, or explore document embeddings while limiting results to specific departments. For vector indexes, the system supports FLAT, IVF_FLAT, IVF_PQ, IVF_PQFS, HNSW_FLAT, and HNSW_PQ; for similarity matching, it supports dot product and Euclidean distance. This is useful for applications like recommendation systems, image recognition, and AI chatbots, where similarity matching needs to be fast.
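The sketch below emulates the "similar products within a price range" example in plain Python, as a rough illustration of what a combined filter-plus-similarity query computes. It does not use SingleStore; the rows, field names, and `filtered_search` function are hypothetical.

```python
import math


def dot_product(a, b):
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical product rows: a structured column plus a vector column.
products = [
    {"id": 1, "price": 25.0, "embedding": [0.9, 0.1]},
    {"id": 2, "price": 120.0, "embedding": [0.95, 0.05]},
    {"id": 3, "price": 40.0, "embedding": [0.1, 0.9]},
    {"id": 4, "price": 35.0, "embedding": [0.7, 0.3]},
]


def filtered_search(rows, query, min_price, max_price, k, metric="dot"):
    """Apply the structured filter, then rank the survivors by similarity."""
    candidates = [r for r in rows if min_price <= r["price"] <= max_price]
    if metric == "dot":
        # Larger dot product means more similar, so sort descending.
        ranked = sorted(candidates, key=lambda r: dot_product(r["embedding"], query), reverse=True)
    else:
        # Smaller Euclidean distance means more similar, so sort ascending.
        ranked = sorted(candidates, key=lambda r: euclidean_distance(r["embedding"], query))
    return [r["id"] for r in ranked[:k]]


top_ids = filtered_search(products, [1.0, 0.0], 20.0, 50.0, 2)
```

Product 2 has the most similar embedding but falls outside the price range, so it never reaches the ranking step. In a SQL database, the filter and the ranking would be expressed together in one statement.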

At its core, SingleStore is built for performance and scale. The database distributes data across multiple nodes so you can handle large-scale vector operations, and as your data grows you can add more nodes. The query processor can combine vector search with SQL operations, so you don’t need to issue multiple separate queries. Unlike vector-only databases, SingleStore provides these capabilities as part of a full database, so you can build AI features without managing multiple systems or dealing with complex data transfers.

For vector search, SingleStore has two options. The first is exact k-nearest neighbors (kNN) search, which finds the exact set of k nearest neighbors for a query vector. For very large datasets or high concurrency, SingleStore also supports Approximate Nearest Neighbor (ANN) search using vector indexing. ANN search can find k near neighbors much faster than exact kNN search, sometimes by orders of magnitude. The trade-off is between speed and accuracy: ANN is faster but may not return the exact set of k nearest neighbors. For applications with billions of vectors that need interactive response times and can tolerate approximate results, ANN search is the better fit.
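The speed versus accuracy trade-off can be sketched with a toy inverted-file (IVF-style) index in plain Python. This is an illustration of the general technique, not SingleStore's implementation: the centroids are picked at random instead of being trained, and `nprobe` (how many clusters to scan) is a name borrowed from common ANN terminology.

```python
import math
import random


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_knn(vectors, query, k):
    """Scan every vector and return the indices of the k nearest."""
    order = sorted(range(len(vectors)), key=lambda i: euclidean(vectors[i], query))
    return order[:k]


def build_ivf(vectors, centroids):
    """Assign each vector to its nearest centroid (one list per centroid)."""
    lists = [[] for _ in centroids]
    for i, v in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: euclidean(v, centroids[c]))
        lists[nearest].append(i)
    return lists


def ann_search(vectors, centroids, lists, query, k, nprobe):
    """Scan only the nprobe lists whose centroids are closest to the query."""
    probe = sorted(range(len(centroids)), key=lambda c: euclidean(query, centroids[c]))[:nprobe]
    candidates = [i for c in probe for i in lists[c]]
    candidates.sort(key=lambda i: euclidean(vectors[i], query))
    return candidates[:k]


random.seed(0)
vectors = [[random.random(), random.random()] for _ in range(200)]
centroids = random.sample(vectors, 8)  # crude stand-in for k-means training
lists = build_ivf(vectors, centroids)
query = [0.5, 0.5]

exact = exact_knn(vectors, query, 5)
approx = ann_search(vectors, centroids, lists, query, 5, nprobe=2)
full = ann_search(vectors, centroids, lists, query, 5, nprobe=len(centroids))

# Fraction of the true nearest neighbors that the approximate search found.
recall = len(set(exact) & set(approx)) / len(exact)
```

With `nprobe` equal to the number of clusters, the search degenerates into a full scan and matches the exact result. Lower values scan fewer vectors, which is where the speedup comes from, at the risk of missing neighbors that sit in unscanned clusters.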

The technical implementation of vector indices in SingleStore has specific requirements. These indices can only be created on columnstore tables, and each index must be created on a single column that stores the vector data. The system currently supports the Vector Type(dimensions[, F32]) format, and F32 is the only supported element type. These capabilities make SingleStore a good fit for applications like semantic search using vectors from large language models, retrieval-augmented generation (RAG) for focused text generation, and image matching based on vector embeddings. By combining them with traditional database features, SingleStore allows developers to build complex AI applications using SQL syntax while maintaining performance and scale.
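As a rough sketch of how these requirements translate into SQL, the Python helpers below assemble the statements as strings. The keywords (`VECTOR(n, F32)`, `ADD VECTOR INDEX`, `INDEX_OPTIONS`, and the `<*>` dot product operator) follow SingleStore's documented syntax as we understand it, so treat them as assumptions and check them against the current SingleStore documentation. The table, column, and index names are hypothetical.

```python
import json

# Index types and metrics named in this post.
SUPPORTED_INDEX_TYPES = {"FLAT", "IVF_FLAT", "IVF_PQ", "IVF_PQFS", "HNSW_FLAT", "HNSW_PQ"}
SUPPORTED_METRICS = {"DOT_PRODUCT", "EUCLIDEAN_DISTANCE"}


def create_table_sql(table, column, dimensions):
    """Table with one vector column; F32 is the only supported element type."""
    return f"CREATE TABLE {table} (id BIGINT, {column} VECTOR({dimensions}, F32) NOT NULL);"


def add_vector_index_sql(table, column, index_type, metric):
    """A vector index covers exactly one vector column."""
    if index_type not in SUPPORTED_INDEX_TYPES:
        raise ValueError(f"unsupported index type: {index_type}")
    if metric not in SUPPORTED_METRICS:
        raise ValueError(f"unsupported metric: {metric}")
    options = json.dumps({"index_type": index_type, "metric_type": metric})
    return (
        f"ALTER TABLE {table} ADD VECTOR INDEX idx_{column} ({column}) "
        f"INDEX_OPTIONS '{options}';"
    )


def top_k_sql(table, column, k):
    """Rank rows by dot product against a session variable holding the query vector."""
    return (
        f"SELECT id, {column} <*> @query_vec AS score "
        f"FROM {table} ORDER BY score DESC LIMIT {k};"
    )


ddl = create_table_sql("products", "embedding", 4)
index_ddl = add_vector_index_sql("products", "embedding", "IVF_PQFS", "DOT_PRODUCT")
query_sql = top_k_sql("products", "embedding", 5)
```

Building the statements through small functions keeps the index type and metric validated in one place. The strings can then be run through whichever SQL client you already use.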

Pinecone: The Basics

Pinecone is a SaaS product built for vector search in machine learning applications. As a managed service, Pinecone handles the infrastructure so you can focus on building applications, not databases. It’s a scalable platform for storing and querying large amounts of vector embeddings for tasks like semantic search and recommendation systems.

Key features of Pinecone include real-time updates, machine learning model compatibility and a proprietary indexing technique that makes vector search fast even with billions of vectors. Namespaces allow you to divide records within an index for faster queries and multitenancy. Pinecone also supports metadata filtering, so you can add context to each record and filter search results for speed and relevance.
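Namespaces and metadata filtering can be pictured with a small in-memory model. The `ToyIndex` class below is hypothetical and is not the Pinecone client. It only mimics the behavior described above: each query sees a single namespace, and a metadata filter narrows the candidates before scoring.

```python
from collections import defaultdict


class ToyIndex:
    """In-memory stand-in for an index partitioned into namespaces."""

    def __init__(self):
        # namespace -> {record_id: (values, metadata)}
        self._namespaces = defaultdict(dict)

    def upsert(self, namespace, record_id, values, metadata):
        self._namespaces[namespace][record_id] = (values, metadata)

    def query(self, namespace, vector, top_k, metadata_filter=None):
        """Score only records in one namespace that match every filter key."""
        metadata_filter = metadata_filter or {}
        scored = []
        for record_id, (values, metadata) in self._namespaces.get(namespace, {}).items():
            if all(metadata.get(key) == wanted for key, wanted in metadata_filter.items()):
                score = sum(x * y for x, y in zip(values, vector))
                scored.append((score, record_id))
        scored.sort(reverse=True)
        return [record_id for _, record_id in scored[:top_k]]


index = ToyIndex()
index.upsert("tenant-a", "doc1", [1.0, 0.0], {"lang": "en"})
index.upsert("tenant-a", "doc2", [0.9, 0.1], {"lang": "de"})
index.upsert("tenant-a", "doc3", [0.2, 0.8], {"lang": "en"})
index.upsert("tenant-b", "doc4", [1.0, 0.0], {"lang": "en"})

english_only = index.query("tenant-a", [1.0, 0.0], top_k=2, metadata_filter={"lang": "en"})
unfiltered = index.query("tenant-a", [1.0, 0.0], top_k=2)
other_tenant = index.query("tenant-b", [1.0, 0.0], top_k=2)
```

Because a query never leaves its namespace, one tenant's records cannot appear in another tenant's results, and the search has fewer vectors to consider.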

Pinecone’s serverless offering simplifies database management and includes efficient data ingestion methods. One such feature is the ability to import data from object storage, which is cost-effective for large-scale data ingestion. The import runs as an asynchronous, long-running operation that ingests and indexes data stored as Parquet files.

To improve search, Pinecone hosts the multilingual-e5-large model for vector generation and offers a two-stage retrieval process with reranking using the bge-reranker-v2-m3 model. Pinecone also supports hybrid search, which combines dense and sparse vector embeddings to balance semantic understanding with keyword matching. With integrations for popular machine learning frameworks, support for multiple languages, and auto-scaling, Pinecone offers a complete solution for vector search in AI applications, balancing performance and ease of use.
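The hybrid search and two-stage retrieval ideas can be sketched in a few lines of Python. This is a simplified illustration, not Pinecone's implementation: the weighting parameter `alpha`, the precomputed `dense` and `sparse` scores, and the `relevance` field standing in for a reranking model's output are all assumptions made for the example.

```python
def hybrid_score(dense_score, sparse_score, alpha):
    """Weighted blend: alpha=1.0 is purely semantic, alpha=0.0 purely keyword."""
    return alpha * dense_score + (1.0 - alpha) * sparse_score


def two_stage_search(candidates, alpha, first_stage_k, final_k, rerank_fn):
    """Stage 1: cheap hybrid ranking. Stage 2: rerank only the shortlist."""
    shortlist = sorted(
        candidates,
        key=lambda c: hybrid_score(c["dense"], c["sparse"], alpha),
        reverse=True,
    )[:first_stage_k]
    reranked = sorted(shortlist, key=rerank_fn, reverse=True)
    return [c["id"] for c in reranked[:final_k]]


# Hypothetical candidates with made-up scores.
candidates = [
    {"id": "a", "dense": 0.9, "sparse": 0.1, "relevance": 0.40},
    {"id": "b", "dense": 0.6, "sparse": 0.8, "relevance": 0.90},
    {"id": "c", "dense": 0.2, "sparse": 0.9, "relevance": 0.70},
    {"id": "d", "dense": 0.1, "sparse": 0.1, "relevance": 0.95},
]

results = two_stage_search(
    candidates,
    alpha=0.5,
    first_stage_k=3,
    final_k=2,
    rerank_fn=lambda c: c["relevance"],  # stand-in for a reranker model call
)
```

Candidate "d" has the highest reranker score but never reaches the second stage, because the first stage drops it. That is the usual cost of two-stage retrieval: the reranker can only reorder what the first stage retrieves, so the shortlist size has to be large enough to include the good candidates.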

Key Differences

Search Methodology and Implementation

SingleStore has vector search built into its SQL database, with both exact k-nearest neighbors (kNN) search and Approximate Nearest Neighbor (ANN) search. It supports multiple index types: FLAT, IVF_FLAT, IVF_PQ, IVF_PQFS, HNSW_FLAT, and HNSW_PQ. It supports dot product and Euclidean distance for similarity matching, which covers a wide range of vector search applications.

Pinecone has its own proprietary indexing technique optimized for vector search. It offers real-time updates and a two-stage retrieval process with reranking using the bge-reranker-v2-m3 model. Its hybrid search combines dense and sparse vector embeddings for semantic understanding and keyword matching. Pinecone also has built-in vector generation with its hosted multilingual-e5-large model.

Data and Architecture

SingleStore is a SQL database with vector capabilities. You need to create vector indices on columnstore tables and use the Vector Type(dimensions[, F32]) format. What’s unique about SingleStore is that you can combine vector search with regular SQL in a single query, with no need to run multiple queries. The architecture distributes data across multiple nodes so it can handle large-scale vector operations.

Pinecone is designed for vector search, using namespaces to partition and divide records within indices. It has robust metadata filtering so you can do context aware search and refine based on additional attributes. One of Pinecone’s strengths is its efficient data ingestion system that can ingest data directly from object storage using Parquet files. As a managed service with a serverless architecture, Pinecone handles the infrastructure complexity for you.

Scalability and Performance

SingleStore scales horizontally by adding more nodes to the system. The query processor can handle combined vector and SQL operations efficiently, distributing the workload across nodes. You can choose exact or approximate search, so you can balance precision and performance based on your needs. This is great for applications that need to scale both vector search and regular database operations.

Pinecone scales through auto-scaling infrastructure that adjusts resources based on demand. The managed service architecture handles the complexity of scaling so you can focus on application development, not infrastructure management. Pinecone’s namespace-based organization helps keep queries efficient across billions of vectors, and its specialized indexing techniques are designed to scale with data volume.

Integration and Development Experience

SingleStore has a SQL interface for vector operations so it’s familiar to teams with existing SQL expertise. You can build complex AI features within the database itself, combining vector search with regular database operations. This integration can simplify development for applications that need both vector search and regular database functionality.

Pinecone integrates with popular ML frameworks and supports multiple languages. It has a simple API for vector operations and pre-trained models for vector generation and reranking.

When to Choose SingleStore

SingleStore is for companies that need to combine traditional database operations with vector search in one system. It’s great for companies running complex applications that need both structured data queries and similarity search, like recommendation engines that factor in user transaction history, product catalogs that combine text search with image search, or financial apps that need to process time-series data and document embeddings. The SQL approach is especially good for teams with existing SQL expertise who want to have a unified data architecture without having to manage separate systems for vector and traditional data operations.

When to Choose Pinecone

Pinecone is for teams that are purely focused on vector search and want a managed service with minimal operational overhead. It’s great for applications that prioritize fast vector similarity search, like AI-powered content recommendation systems, semantic document search, or image similarity applications that don’t need to join with traditional database tables. Pinecone’s serverless architecture and built-in machine learning models make it a strong fit for startups and teams that want to move fast without managing infrastructure or implementing their own vector processing algorithms.

Conclusion

The choice between SingleStore and Pinecone comes down to your data and operational needs. SingleStore is a full solution that combines a traditional database with vector search, which suits applications that need both in one system. Its SQL approach and scalable architecture can handle complex queries across vector and structured data. Pinecone, with its specialized vector search and managed service, is a more focused solution that suits pure vector search scenarios and gets you to market faster for vector-specific applications. Your decision should be based on your existing tech stack, team expertise, operational needs, and the balance of vector and traditional database operations your app needs.

This post gives an overview of SingleStore and Pinecone, but to choose between them you need to evaluate both against your own use case. One tool that can help is VectorDBBench, an open-source benchmarking tool for vector database comparison. In the end, thorough benchmarking with your own datasets and query patterns will be key to deciding between these two powerful but different approaches to vector search.

Using Open-source VectorDBBench to Evaluate and Compare Vector Databases on Your Own

VectorDBBench is an open-source benchmarking tool for users who need high-performance data storage and retrieval systems, especially vector databases. This tool allows users to test and compare different vector database systems like Milvus and Zilliz Cloud (the managed Milvus) using their own datasets and find the one that fits their use cases. With VectorDBBench, users can make decisions based on actual vector database performance rather than marketing claims or hearsay.
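If you want a feel for what such a benchmark measures before running a full tool, the Python sketch below computes two common metrics: recall@k against a ground-truth result and per-query latency. It is a generic illustration and not VectorDBBench's code. The function names are hypothetical, and `search_fn` stands for whatever client call your database exposes.

```python
import time


def recall_at_k(ground_truth_ids, retrieved_ids, k):
    """Fraction of the true top-k results that appear in the retrieved top-k."""
    truth = set(ground_truth_ids[:k])
    if not truth:
        return 0.0
    hits = sum(1 for rid in retrieved_ids[:k] if rid in truth)
    return hits / len(truth)


def time_queries(search_fn, queries):
    """Run each query once and report mean and 95th-percentile latency in seconds."""
    latencies = []
    for q in queries:
        start = time.perf_counter()
        search_fn(q)
        latencies.append(time.perf_counter() - start)
    latencies.sort()
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {"mean": sum(latencies) / len(latencies), "p95": latencies[p95_index]}


# Toy usage: a fake search function standing in for a real database client.
def fake_search(query):
    return sorted(range(100), key=lambda i: abs(i - query))[:10]


stats = time_queries(fake_search, list(range(10)))
example_recall = recall_at_k([1, 2, 3, 4], [1, 9, 3, 8], 4)
```

Measuring recall and latency together matters because an approximate index can always be made faster by returning worse results. A fair comparison fixes one of the two and compares the other.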

VectorDBBench is written in Python and licensed under the MIT open-source license, meaning anyone can freely use, modify, and distribute it. The tool is actively maintained by a community of developers committed to improving its features and performance.

Download VectorDBBench from its GitHub repository to reproduce our benchmark results or obtain performance results on your own datasets.

Take a quick look at the performance of mainstream vector databases on the VectorDBBench Leaderboard.



Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Demystifying the Milvus Sizing Tool

Explore how to use the Sizing Tool to select the optimal configuration for your Milvus deployment.

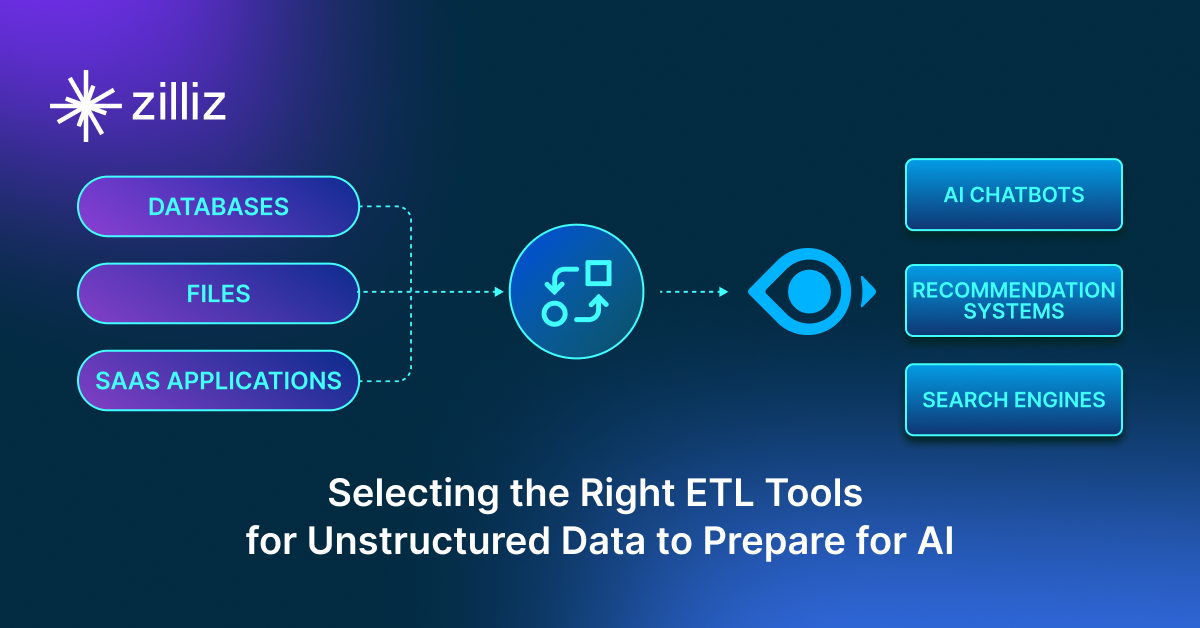

Selecting the Right ETL Tools for Unstructured Data to Prepare for AI

Learn the right ETL tools for unstructured data to power AI. Explore key challenges, tool comparisons, and integrations with Milvus for vector search.

How AI and Vector Databases Are Transforming the Consumer and Retail Sector

AI and vector databases are transforming retail, enhancing personalization, search, customer service, and operations. Discover how Zilliz Cloud helps drive growth and innovation.

The Definitive Guide to Choosing a Vector Database

Overwhelmed by all the options? Learn key features to look for & how to evaluate with your own data. Choose with confidence.