Zilliz Cloud Delivers Better Performance and Lower Costs with Arm Neoverse-based AWS Graviton

As cloud infrastructure advances to support AI’s exponential growth, Zilliz Cloud, the fully managed vector database built on Milvus, uses Arm Neoverse-based CPUs to deliver cost-efficiency and performance for enterprise-scale AI workloads. This blog explores how Zilliz Cloud moved its compute-intensive workloads from x86 to Arm CPUs, reducing operational costs and enabling billion-scale vector search for mission-critical AI applications such as RAG and multi-modal search.

Zilliz Cloud: Fully-managed Vector Database for Production Workloads

Zilliz Cloud is a fully managed vector database engineered for AI applications in production, delivering large-scale vector search at low cost. Built on the open-source Milvus, it supports up to 100 billion vectors derived from unstructured data (images, text, audio) and serves vector similarity searches with sub-20ms latency, making it well suited to applications like RAG, AI agents, visual search, recommendation systems, and anomaly detection. Key features of Zilliz Cloud include AutoIndex, which dynamically selects indexing strategies for high search accuracy without manual tuning; the Cardinal indexing engine, which delivers 10x faster search than open-source Milvus; and hybrid retrieval, which combines dense vector search with full-text search for better results.
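To make the idea of hybrid retrieval more concrete, here is a minimal sketch of one common way to merge a dense vector ranking with a full-text ranking: reciprocal rank fusion (RRF). This post does not say which fusion method Zilliz Cloud uses, so treat RRF, the constant `k = 60`, and the document IDs below as illustrative assumptions, not as the product's implementation.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one list.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked highly by more than one retriever rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from a dense vector search and a full-text search.
dense_hits = ["doc_a", "doc_b", "doc_c"]
text_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([dense_hits, text_hits])
print(fused)
```

Documents that appear in both lists (`doc_a` and `doc_b` here) outrank documents found by only one retriever, which is the intuition behind combining the two search modes.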

Core Benefits of Zilliz Cloud

High Performance: Serves billion-scale vector data at thousands of QPS, with a flexible choice of cluster types to balance latency, accuracy, and cost

Scalability: Scales horizontally to handle up to 100 billion vectors through its distributed, cloud-native architecture

Cost Efficiency: Reduces infrastructure costs by 70% via RaBitQ binary quantization, vector index optimizations, and tiered storage

Ease of Use: Simplifies deployment and operations with AutoIndex and a fully-managed service

Security & Reliability: Offers enterprise-grade security and a 99.95% uptime SLA
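The Cost Efficiency item above mentions binary quantization. As a rough illustration of why 1-bit codes save memory, the sketch below keeps only the sign of each dimension and compares codes with Hamming distance. This is the simplest sign-based scheme, not RaBitQ itself, which is more elaborate; the 768-dimension figure and the toy vectors are assumptions chosen for the example.

```python
from typing import List


def binarize(vector: List[float]) -> int:
    """Pack the sign of each dimension into one bit of a Python integer."""
    bits = 0
    for i, value in enumerate(vector):
        if value > 0:
            bits |= 1 << i
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two binary codes differ."""
    return bin(a ^ b).count("1")


def bytes_per_vector(dim: int, bits_per_dim: int) -> int:
    """Storage needed for one vector at the given precision."""
    return dim * bits_per_dim // 8


# Toy 4-dimensional vectors (hypothetical values).
code_1 = binarize([0.5, -0.2, 0.1, -0.9])
code_2 = binarize([0.4, -0.1, -0.3, -0.8])
distance = hamming_distance(code_1, code_2)

# Memory for an assumed 768-dimensional embedding.
float32_bytes = bytes_per_vector(768, 32)
binary_bytes = bytes_per_vector(768, 1)
print(distance, float32_bytes, binary_bytes, float32_bytes // binary_bytes)
```

The raw vector shrinks by a factor of 32 in this sketch. That figure covers vector storage only and is not meant to reproduce the 70% infrastructure saving quoted above, which also depends on index optimizations and tiered storage.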

Understanding Vector Databases

Vector databases specialize in storing high-dimensional vectors generated by embedding models. These vectors enable semantic search via approximate nearest neighbor (ANN) search, which is critical for AI workflows in natural language processing, computer vision, and recommender systems. By handling real-time indexing and multimodal querying, vector databases address the challenge of managing unstructured data efficiently. Zilliz Cloud builds on these capabilities to power AI applications with sub-20ms latency for production workloads.
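The core operation behind semantic search can be sketched in a few lines. The example below performs an exact, brute-force nearest neighbor search using cosine similarity. A production vector database replaces this linear scan with an approximate index so that it scales to billions of vectors. The three-dimensional "embeddings" and their labels are made up for illustration.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: List[float], vectors: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings: "cat" and "kitten" point in a similar direction.
toy_vectors = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}

results = top_k([1.0, 0.0, 0.0], toy_vectors, k=2)
for doc_id, score in results:
    print(doc_id, round(score, 3))
```

The scan costs time proportional to the number of stored vectors, which is why ANN indexes trade a small amount of accuracy for much faster lookups at scale.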

The Role of Milvus

Milvus, the most popular open-source vector database with 36K+ stars on GitHub, is the foundation of Zilliz Cloud with its scalable, cloud-native architecture. Its distributed design allows Zilliz Cloud to scale horizontally across billions of vectors while maintaining low latency, enabling efficient handling of massive datasets.

Since version 2.3, Milvus has supported Arm CPUs and shipped official Docker images for Arm64, simplifying installation and deployment on cost-effective, energy-efficient platforms. Running Milvus on Arm CPUs offers a 20% performance boost over traditional x86, which means faster queries for applications that depend on quick vector search and retrieval.
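When deploying to a mixed fleet, it can help to confirm which architecture a host actually reports before selecting an Arm64 image. The snippet below is a small sketch using Python's standard `platform` module; the helper name `is_arm64` is hypothetical and is not part of Milvus or Zilliz Cloud tooling.

```python
import platform
from typing import Optional

# Linux typically reports "aarch64"; macOS reports "arm64".
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine: Optional[str] = None) -> bool:
    """Return True if the given (or current) machine type is 64-bit Arm."""
    name = machine if machine is not None else platform.machine()
    return name.lower() in ARM64_NAMES


print("Running on Arm64:", is_arm64())
```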

Journey to Arm-based CPUs

Zilliz Cloud's adoption of Arm-based AWS Graviton3 processors marks a strategic leap in the performance and cost-efficiency of AI workloads. Graviton3 processors, built on the Arm Neoverse architecture, use Scalable Vector Extension (SVE) and BFloat16 instruction support to deliver strong performance and efficiency. Together with wider vector units and an improved software stack, these enhancements make Graviton3 a good fit for GenAI tasks like semantic search and RAG pipelines, which require high-throughput vector operations and real-time processing.

Cost is a major barrier to the adoption of large-scale AI search in enterprises. To address this, Zilliz Cloud has implemented numerous engineering optimizations that significantly reduce costs and improve efficiency, making vector search more accessible to its customers, even at billion scale.

After learning of Graviton3's computational power and its instruction design optimized for vector operations, the Zilliz Cloud team worked closely with the Arm and AWS teams to migrate its compute-heavy indexing workloads to AWS Graviton3.

One of the most notable benefits of adopting AWS Graviton3 processors is the substantial boost in performance and cost efficiency. Specifically, Zilliz Cloud has observed:

Index Building Performance: Index building performance has increased by 50% compared to the x86 implementation. Vector indexing is a critical step during data ingestion because it is what makes queries fast at retrieval time, so this gain speeds up data processing and makes billion-scale vector datasets more efficient to handle.

Search Operations: Search operations have seen a 10% efficiency gain when benchmarked against x86, resulting in faster query execution and more responsive data retrieval over large datasets.
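To put these percentages in terms of elapsed time, the short calculation below converts a throughput gain into the fraction of time saved for a fixed amount of work. It assumes the reported gains are throughput improvements (work completed per unit time), which is one reading of the figures above and not something this post states explicitly.

```python
def time_saved_fraction(throughput_gain: float) -> float:
    """Fraction of wall-clock time saved for the same amount of work.

    A gain of 0.50 means 1.5x the throughput, so the same job takes
    1 / 1.5 of the original time.
    """
    return 1.0 - 1.0 / (1.0 + throughput_gain)


index_build_saving = time_saved_fraction(0.50)
search_saving = time_saved_fraction(0.10)

print(f"Index build time saved: {index_build_saving:.1%}")
print(f"Search time saved: {search_saving:.1%}")
```

Under that assumption, a 50% throughput gain shortens an index build by about a third, and a 10% gain shortens search by about 9%.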

Beyond performance gains, adoption of AWS Graviton3-based cloud instances reduced Zilliz Cloud’s operational costs by 10%, driven by the processors’ energy efficiency and price-performance advantage compared to x86 instances. This upgrade aligns with Zilliz Cloud's mission to deliver high-performance, cost-effective solutions for AI applications. By leveraging AWS Graviton3 processors, Zilliz Cloud enhances its ability to handle demanding GenAI workloads while reducing total cost of ownership.

"AWS Graviton’s Arm Neoverse-based architecture delivers a cost-effective and energy-efficient solution for massive-scale vector search in production, ensuring that enterprise can increase AI search adoption without compromising performance," said James Luan, VP of Engineering at Zilliz. "By integrating Arm's innovative design, Zilliz Cloud empowers organizations to scale AI applications with greater efficiency, while maintaining the high performance required to remain competitive."

Conclusion

Zilliz Cloud’s integration of Arm-based AWS Graviton3 processors delivers a competitive edge in AI infrastructure, combining scalability with cost-optimized performance for critical workloads. By drawing on Graviton3's compute performance and energy efficiency, the platform accelerates AI/ML deployments through faster index construction, faster search, and reduced infrastructure costs. As organizations increasingly prioritize scalable, cost-effective solutions for AI-driven workloads, this move helps Zilliz Cloud remain a leader in delivering efficient cloud services and lets customers tackle complex data challenges while staying agile as the technology landscape evolves.

Visit the Arm Developer Hub for battle-tested tools, demos, and step-by-step guides to supercharge your AI applications on the Arm architecture.

Ready to revolutionize semantic search? Explore our tutorial on building a RAG-powered application using Zilliz Cloud on Arm-based servers.

Migrate seamlessly with Arm Cloud Migration – your shortcut to boosting efficiency and optimizing performance on Arm.

- Zilliz Cloud: Fully-managed Vector Database for Production Workloads

- Understanding Vector Databases

- The Role of Milvus

- Journey to Arm-based CPUs

- Conclusion

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Introducing Business Critical Plan: Enterprise-Grade Security and Compliance for Mission-Critical AI Applications

Discover Zilliz Cloud’s Business Critical Plan—offering advanced security, compliance, and uptime for mission-critical AI and vector database workloads.

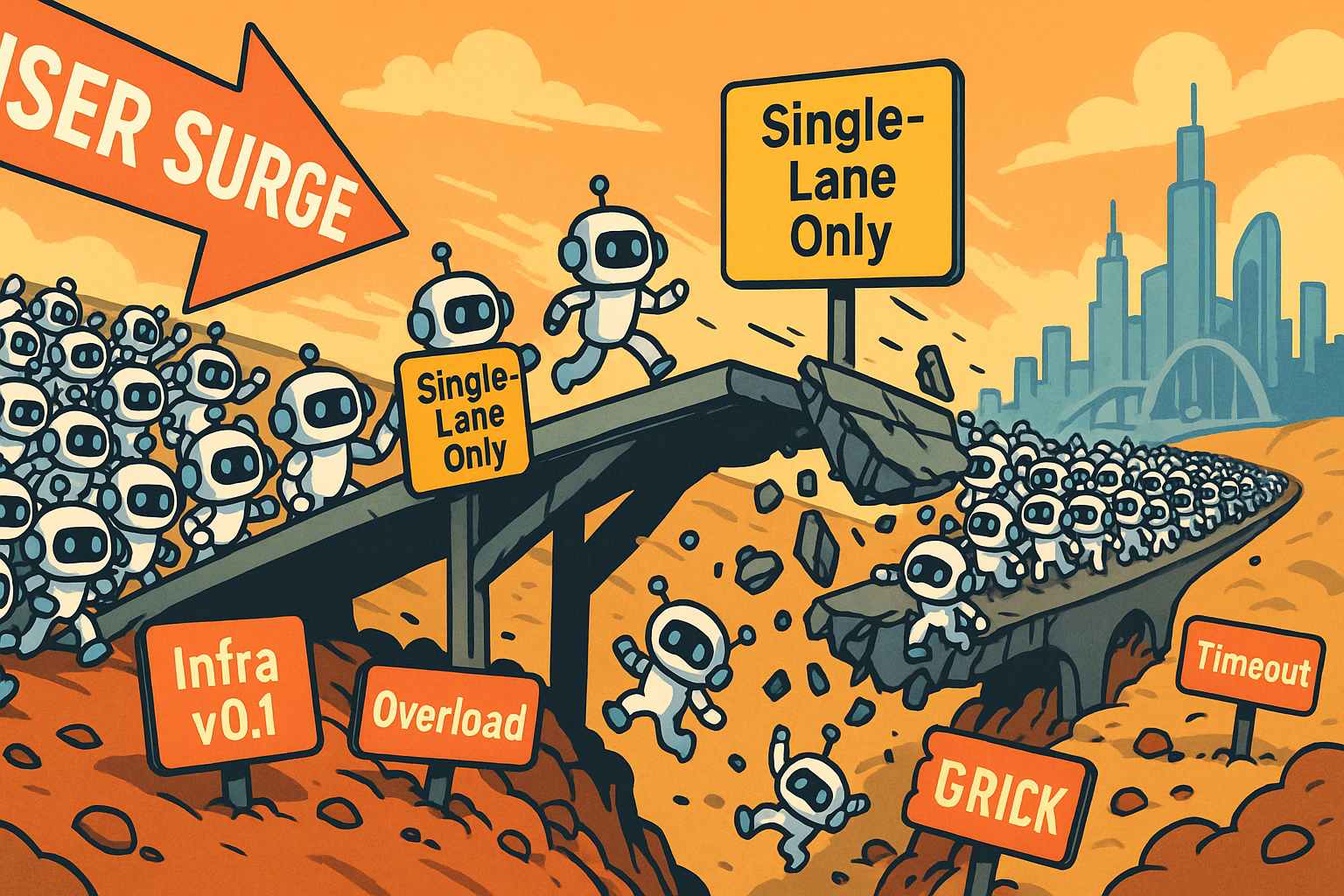

Build for the Boom: Why AI Agent Startups Should Build Scalable Infrastructure Early

Explore strategies for developing AI agents that can handle rapid growth. Don't let inadequate systems undermine your success during critical breakthrough moments.

Proactive Monitoring for Vector Database: Zilliz Cloud Integrates with Datadog

we're excited to announce Zilliz Cloud's integration with Datadog, enabling comprehensive monitoring and observability for your vector database deployments with your favorite monitoring tool.