Stop Waiting, Start Building: Voice Assistant With Milvus and Llama 3.2

"Sorry, this feature isn't available in your region yet."

If you're based in Europe, you've probably seen this message more times than you'd like to count. While the rest of the world celebrates each new AI breakthrough, many of us find ourselves pressing our noses against the metaphorical window, watching the party from outside. From Llama 3.2 to OpenAI's Advanced Voice Mode, we're caught in an endless cycle of "Coming to your region... soon™️".

Well, I got tired of waiting. If we can't join the party, let's throw our own!

In this blog post, we'll walk you through building a voice assistant: a specialized agentic RAG (Retrieval-Augmented Generation) system designed for voice interactions. Using open-source projects such as Milvus for the vector database and Llama 3.2, along with GenAI services including AssemblyAI, DuckDuckGo, and ElevenLabs, this voice assistant does more than process audio; it understands voice queries and responds to them intelligently.

Key Technologies We'll Use

- Milvus is an open-source, high-performance, highly scalable vector database that stores, indexes, and searches billion-scale unstructured data through high-dimensional vector embeddings. It is perfect for building modern AI applications such as Retrieval Augmented Generation (RAG), semantic search, multimodal search, and recommendation systems. Milvus runs efficiently across various environments, from laptops to large-scale distributed systems.

- Llama 3.2 is the latest large language model (LLM) provided by Meta. We'll use it to generate responses based on the retrieved information from Milvus.

- AssemblyAI provides advanced speech-to-text APIs that convert spoken language into written text with a high degree of accuracy.

- DuckDuckGo is a search engine that emphasizes user privacy and provides the same search results for all users, unlike traditional search engines that tailor results based on user data.

- ElevenLabs specializes in advanced voice synthesis, allowing users to create realistic voice clones from brief audio samples.

What We'll Build: An Agentic RAG System for Voice Interactions

There are several ways of building an agentic RAG system. For those interested, I've written a blog post walking through how to build a local agentic RAG system using Llama 3.2, LangChain, and LangGraph.

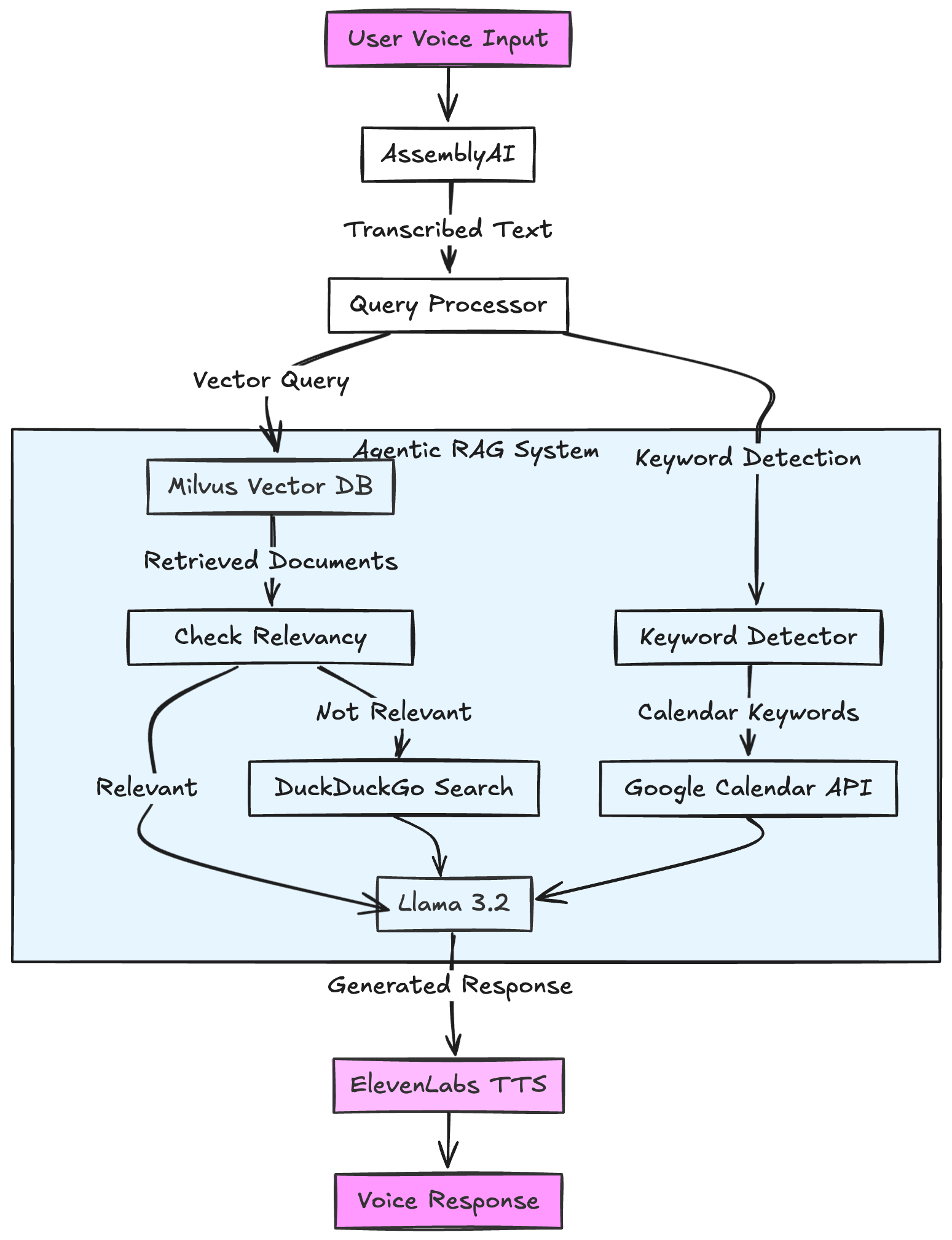

However, this time we're taking a different route by building an agent from scratch, without relying on any existing frameworks. The architecture of this RAG system is broken down into multiple components (as shown below), each handling a specific part of this process.


Figure: The architecture of this agentic RAG system

Below is an overview of how this agentic RAG system works.

- The user sends their voice query to the system, starting the interaction.

- AssemblyAI transcribes the user's voice to text in real time.

- The transcribed text is processed by the Query Processor, which prepares it for vector analysis and keyword detection in the agentic RAG system.

- The Milvus vector database runs a semantic similarity search and retrieves the documents most similar to the query.

- Concurrently, the Keyword Detector scans the text for keywords that should trigger specific actions, such as querying the Google Calendar API when calendar-related keywords are found.

- Our agentic system checks the documents retrieved from Milvus for relevance to the query. If they are relevant, they go on to response generation with Llama 3.2; if not, DuckDuckGo runs a web search instead.

- Llama 3.2 takes the information from Milvus, DuckDuckGo, or the Keyword Detector, depending on the relevancy check, and generates a coherent, context-appropriate response.

- The response generated by Llama 3.2 is converted into speech using ElevenLabs’ Text-to-Speech technology.

- Finally, the synthesized voice response is delivered back to the user, completing the interaction loop with an intelligent, context-aware answer.
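The flow above can be sketched as a small async pipeline. This is a minimal sketch, not the code from the post's repository: the class name VoicePipeline and all of its fields are hypothetical, each stage is an async callable you would back with AssemblyAI, Milvus, the Google Calendar API, DuckDuckGo, Llama 3.2, and ElevenLabs, and asyncio.gather is used here (as an assumption about how "concurrently" could be implemented) to run the vector search and the keyword detector at the same time.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable


@dataclass
class VoicePipeline:
    """Hypothetical wiring of the stages; every field is an async callable you supply."""
    transcribe: Callable[[bytes], Awaitable[str]]        # speech-to-text (e.g. AssemblyAI)
    vector_search: Callable[[str], Awaitable[list]]      # Milvus-style hits: {"text", "distance"}
    is_calendar_query: Callable[[str], Awaitable[bool]]  # keyword detector
    get_events: Callable[[], Awaitable[str]]             # calendar lookup
    web_search: Callable[[str], Awaitable[list]]         # web fallback (e.g. DuckDuckGo)
    generate: Callable[[str], Awaitable[str]]            # LLM call (e.g. Llama 3.2)
    synthesize: Callable[[str], Awaitable[bytes]]        # text-to-speech (e.g. ElevenLabs)
    threshold: float = 0.6                               # similarity cut-off for relevance

    async def build_prompt(self, text: str) -> str:
        # Run the vector search and the keyword detector concurrently
        hits, wants_calendar = await asyncio.gather(
            self.vector_search(text), self.is_calendar_query(text)
        )
        relevant = [h for h in hits if h["distance"] > self.threshold]
        if relevant:
            # 1) Relevant knowledge-base hits win
            context = "\n".join(h["text"] for h in relevant)
            return (f"Context: {context}\n\nUser Query: {text}\n\n"
                    "Please answer based on the given context.")
        if wants_calendar:
            # 2) Calendar questions go to the calendar
            events = await self.get_events()
            return (f"Calendar Events: {events}\n\nUser Query: {text}\n\n"
                    "Please answer based on their calendar events.")
        # 3) Otherwise fall back to the top three web results
        results = await self.web_search(text)
        context = "\n".join(results[:3])
        return (f"Web search results: {context}\n\nUser Query: {text}\n\n"
                "Please answer based on the web search results.")

    async def handle(self, audio: bytes) -> bytes:
        # Voice in -> text -> routed prompt -> answer -> voice out
        text = await self.transcribe(audio)
        prompt = await self.build_prompt(text)
        answer = await self.generate(prompt)
        return await self.synthesize(answer)
```

Because every stage is just a callable, swapping AssemblyAI for Whisper (or ElevenLabs for another TTS engine) means passing a different function, with no change to the routing logic.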

In the next section, we'll discuss how we built this agentic RAG system, and in particular how it decides which of several sources to search for each query.

Multi-Source Orchestration

Unlike traditional RAG systems that follow a linear query → retrieve → generate pattern, our system thinks for itself. Let's explore the key components of our implementation:

💡 Note: For clarity and readability, we'll focus on the most important code snippets here. The complete implementation is available in our GitHub repository.

First, Milvus Vector Search

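```python
# Excerpt from the assistant's async query handler (self is the assistant)
milvus_results = self.milvus_wrapper.search_similar_text(text)

# Keep only hits whose similarity score clears the 0.6 threshold
relevant_results = [result for result in milvus_results
                    if result["distance"] > 0.6]

if relevant_results:
    # Build the context from the relevant hits only
    context = "\n".join([result["text"] for result in relevant_results])
    augmented_query = f"""
    Context: {context}

    User Query: {text}

    Please answer based on the given context.
    """
```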

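When you ask a question, we first check our Milvus knowledge base for relevant results. If we find something relevant (a similarity score above 0.6), we use that to answer your query. The "distance > 0.6" check assumes a similarity metric such as COSINE or IP, where higher scores mean closer matches; with L2, smaller values are closer, so the comparison would need to be flipped.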
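To make the threshold concrete, here is a brute-force, standard-library stand-in for what Milvus does at scale with an index. It is a sketch under assumptions: the toy two-dimensional vectors replace real embeddings, the COSINE metric is assumed, and brute_force_search and build_context are hypothetical helpers that mimic the dicts (with "text" and "distance" keys) that the post's milvus_wrapper appears to return.

```python
import math


def cosine(a, b):
    """Cosine similarity; 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def brute_force_search(query_vec, corpus, limit=3):
    """Score every (text, vector) pair and return the best hits, highest first.

    Hypothetical stand-in for a Milvus COSINE search: the score is stored
    under "distance" because that is the key the snippet above reads.
    """
    hits = [{"text": text, "distance": cosine(query_vec, vec)} for text, vec in corpus]
    hits.sort(key=lambda hit: hit["distance"], reverse=True)
    return hits[:limit]


def build_context(hits, threshold=0.6):
    """Join the texts of hits whose similarity clears the threshold."""
    relevant = [hit for hit in hits if hit["distance"] > threshold]
    return "\n".join(hit["text"] for hit in relevant)
```

For example, with a corpus of ("Milvus is a vector database", [1.0, 0.0]) and ("Paris is in France", [0.0, 1.0]), a query vector of [0.9, 0.1] scores about 0.99 against the first entry and about 0.11 against the second, so only the Milvus sentence survives the 0.6 cut-off.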

Second, Calendar Integration

```python
# Next branch of the same if/elif chain: calendar-related keywords
elif any(keyword in text.lower() for keyword in
         ["calendar", "schedule", "events", "appointment"]):
    events = await self.calendar_service.get_upcoming_events()
    augmented_query = f"""
    Calendar Events: {events}

    User Query: {text}

    Please answer based on their calendar events.
    """
```

If nothing relevant turns up in Milvus and you're asking about your schedule, we go straight to your calendar instead of searching the web. No need to search the web when we know exactly where the information is!
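One thing to watch with the "keyword in text.lower()" check above is that it matches substrings, so a question containing "prevents" triggers on "events". A minimal alternative, assuming you want whole-word matches instead, uses regular-expression word boundaries; is_calendar_query is a hypothetical helper name, and the keyword list is the one from the snippet.

```python
import re

# Same keywords as the snippet above
CALENDAR_KEYWORDS = ("calendar", "schedule", "events", "appointment")


def is_calendar_query(text, keywords=CALENDAR_KEYWORDS):
    """Return True if any keyword appears as a whole word (case-insensitive)."""
    # \b anchors: "events" matches "events" but not the tail of "prevents"
    pattern = r"\b(?:" + "|".join(map(re.escape, keywords)) + r")\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None
```

The trade-off is that whole-word matching also skips inflections such as "scheduled" or the singular "event", so you may want to add those forms to the list.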

Third, Web Search Fallback

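```python
# Last branch of the chain: fall back to a DuckDuckGo web search
else:
    web_results = self.web_searcher.search(text)
    if web_results:
        # Use the top three results as context
        context = "\n".join(web_results[:3])
        augmented_query = f"""
        Web search results: {context}

        User Query: {text}

        Please answer based on the web search results.
        """
```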

If we can't find anything relevant in Milvus and it's not a calendar query, we fall back to DuckDuckGo for a web search. We then take the top results, extract the most relevant paragraphs, and feed them back to Llama 3.2.
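The post doesn't show how the most relevant paragraphs are picked from the web results. One simple approach, assumed here rather than taken from the repository, is to split each page into paragraphs and rank them by how many query terms they share; top_paragraphs is a hypothetical helper.

```python
import re


def _terms(text):
    """Lower-cased alphanumeric tokens of a string, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_paragraphs(query, pages, k=3):
    """Return up to k paragraphs sharing the most terms with the query.

    Paragraphs are separated by blank lines; paragraphs with no shared
    terms are dropped. Ties keep their original order (stable sort).
    """
    query_terms = _terms(query)
    scored = []
    for page in pages:
        for para in page.split("\n\n"):
            para = para.strip()
            if not para:
                continue
            overlap = len(query_terms & _terms(para))
            if overlap > 0:
                scored.append((overlap, para))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [para for _, para in scored[:k]]
```

Term overlap is crude (common words such as "is" count as matches), so a stopword list, or scoring paragraphs with the same embedding model used for Milvus, would be natural upgrades.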

Finally, Llama 3.2 takes the information as context to generate a coherent and accurate response.
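The post doesn't say how Llama 3.2 is served. Assuming it runs locally under Ollama (default port 11434, model tag llama3.2), a non-streaming call to Ollama's /api/generate endpoint needs only the standard library. This is a sketch; build_request and generate are hypothetical helper names, and augmented_query is the prompt assembled in the branches above.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(augmented_query, model="llama3.2"):
    """Build a POST request asking Ollama for a single, non-streamed reply."""
    payload = {"model": model, "prompt": augmented_query, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(augmented_query, model="llama3.2", timeout=60.0):
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(augmented_query, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With "stream" set to false, Ollama returns one JSON object whose "response" field holds the full answer. That is simpler to hand to the text-to-speech step than a token stream, at the cost of waiting for the whole reply before the assistant starts speaking.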

Why Milvus for RAG?

RAG systems are only as good as their retrieval: how fast it is and how relevant its results are. This is why Milvus is key here:

- Speed Matters: When you're building a voice assistant, nobody wants to wait 5 seconds for an answer. With a suitable index, Milvus can search through millions of vectors in milliseconds.

- Accuracy at Scale: Milvus handles high-dimensional vector search with Approximate Nearest Neighbor (ANN) algorithms. Index types such as IVF_FLAT, HNSW, and DiskANN let you balance speed, memory use, and recall, so search results stay accurate as the collection grows.
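"High recall" has a concrete meaning: of the true k nearest neighbors, what fraction did the approximate search actually return? A common way to check this while tuning an index (for HNSW in Milvus, the build parameters M and efConstruction and the search parameter ef) is to compare ANN results against a brute-force ground truth on a sample of queries. The helpers below are hypothetical, and the ANN ids are supplied by hand; in practice they would come from a Milvus search.

```python
import math


def exact_top_k(query, vectors, k):
    """Ground truth: indices of the k nearest vectors by Euclidean (L2) distance."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]


def recall_at_k(approx_ids, exact_ids, k):
    """Fraction of the true top-k neighbors that the ANN search also returned."""
    truth = set(exact_ids[:k])
    if not truth:
        raise ValueError("need at least one ground-truth id")
    found = set(approx_ids[:k])
    return len(truth & found) / len(truth)
```

For instance, if the true top 3 are ids [0, 1, 3] and an ANN search returns [0, 1, 2], recall@3 is 2/3. Raising HNSW's ef typically pushes this number up at the cost of some latency.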

The Results?

Our DIY voice assistant might not be the best one out there, but it's got some unique strengths:

- Modular design: Swap out any component (try Whisper instead of AssemblyAI, why not?)

- Full control: No black boxes, just transparent, hackable code

- Privacy-focused: Your data stays yours

Conclusion

While this project is obviously far from what OpenAI's Advanced Voice Mode can do, there's something satisfying about building your own AI assistant. It may not have Claude's charm or ChatGPT's polish, but it's got character. More importantly, it offers true ownership and control of your AI stack. By using open-source tools like the Milvus vector database, we've shown how we can build something that's:

- Truly Yours: Every component is transparent and modifiable

- Geographically Unrestricted: Deploy anywhere, serve anyone

- Forever Available: No sudden API changes or service discontinuations

- Fully Customizable: Add features, modify behavior, or optimize for specific needs

- Privacy-First: Your data stays on your infrastructure, under your control

And unlike some other AI tools I could mention, this one definitely knows where Europe is on a map!

The full code is available on our GitHub.

We'd Love to Hear What You Think!

If you like this blog post, please consider:

- ⭐ Giving us a star on GitHub

- 💬 Joining our Milvus Discord community to share your experiences

- 🔍 Exploring our Bootcamp repository for more examples of RAG applications with Milvus
