Approximate Dynamic Programming: Breaking the Curse of Dimensionality

Approximate Dynamic Programming (ADP) tackles decision-making problems that are too large for traditional dynamic programming. It finds near-optimal solutions by using approximation techniques instead of exact computations. These approximations help sidestep the "curse of dimensionality," which arises in problems with large or continuous state spaces. ADP is widely used in fields like robotics, finance, and logistics, providing practical solutions when exact methods are too slow or impractical.

Background

What is Dynamic Programming (DP)?

Dynamic Programming (DP) is a technique used to solve complex problems by breaking them into smaller, simpler subproblems. Think of it as solving a giant jigsaw puzzle one piece at a time, in a way that ensures you never repeat the same work twice. By storing intermediate results, DP saves time and effort, making it a standard method for tasks like shortest path calculations, inventory management, and even crafting AI game strategies.
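To make the idea of storing intermediate results concrete, here is a minimal sketch of exact DP on a tiny shortest-path problem. The grid, its costs, and the function name min_cost are hypothetical and chosen only for illustration; movement is assumed to be limited to right and down.

```python
from functools import lru_cache

# Hypothetical 3x3 grid of step costs; moves are limited to right or down.
GRID = (
    (1, 3, 1),
    (1, 5, 1),
    (4, 2, 1),
)

@lru_cache(maxsize=None)  # caches each subproblem so it is solved only once
def min_cost(row: int, col: int) -> int:
    """Cheapest total cost from (row, col) to the bottom-right cell."""
    last_row, last_col = len(GRID) - 1, len(GRID[0]) - 1
    if row == last_row and col == last_col:
        return GRID[row][col]
    options = []
    if row < last_row:
        options.append(min_cost(row + 1, col))
    if col < last_col:
        options.append(min_cost(row, col + 1))
    return GRID[row][col] + min(options)

best = min_cost(0, 0)
print(best)  # 7, via the path 1 -> 3 -> 1 -> 1 -> 1
```

Each of the nine cells is evaluated once and then served from the cache. That is manageable here, but the same table-per-state approach is what becomes infeasible as the state space grows.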

Challenges of Traditional DP

Despite its brilliance, traditional DP struggles when problems grow too large or complex. For example:

Scalability Issues: The number of possible scenarios to consider can become unmanageable.

Computational Complexity: Solving every detail precisely requires significant time and processing power.

Memory Limitations: Storing all intermediate results for large problems can quickly exceed available memory.

Why is Approximation Necessary?

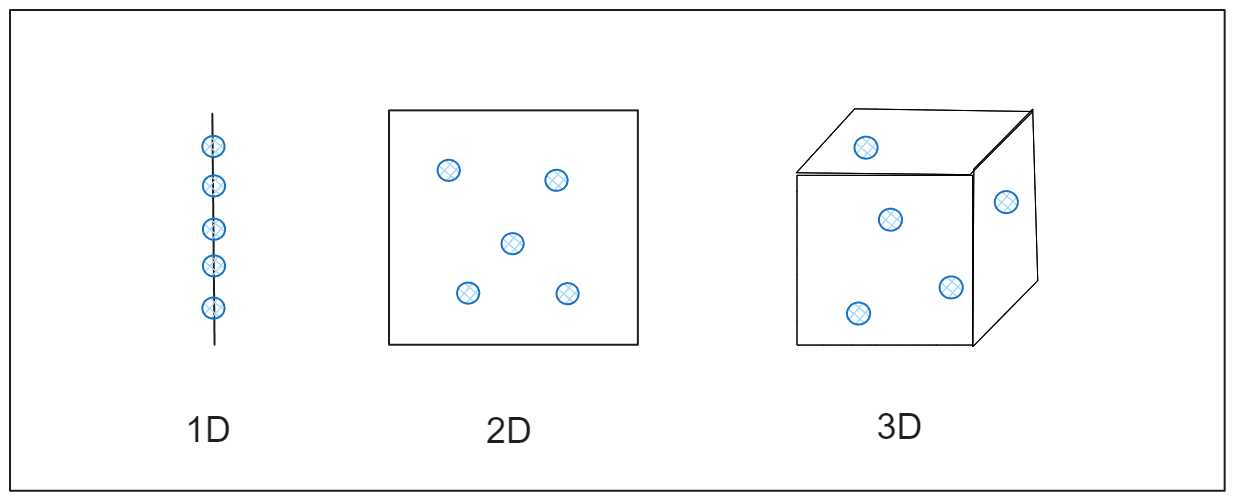

The need for approximation arises from the "curse of dimensionality," a term that describes how the number of possible states grows exponentially as the number of state variables increases. For example:

In real-world problems like managing a large warehouse or training a robot to navigate, the number of possible states can be in the millions or billions.

Solving each state exactly using traditional DP would take immense computational power and memory.

Figure: How Data Expands Across Dimensions

Approximation provides a way to simplify the problem without losing the essence of a good solution. It focuses on the most essential parts of the problem, ignoring less critical details to save time and resources.
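A rough back-of-the-envelope calculation shows how quickly an exact value table grows. The sketch below assumes each state variable is discretized into 10 levels and each stored value takes 8 bytes; both numbers are illustrative assumptions, not figures from a specific system.

```python
def num_states(levels_per_variable: int, num_variables: int) -> int:
    """Size of a state space where every variable takes the same number of levels."""
    return levels_per_variable ** num_variables

def table_gigabytes(levels_per_variable: int, num_variables: int,
                    bytes_per_value: int = 8) -> float:
    """Memory needed to store one value per state, in gigabytes (10^9 bytes)."""
    return num_states(levels_per_variable, num_variables) * bytes_per_value / 1e9

for n in (1, 2, 5, 10):
    print(f"{n:>2} variables: {num_states(10, n):>14,} states, "
          f"{table_gigabytes(10, n):.6f} GB")
```

With these assumptions, ten variables already produce 10 billion states and an 80 GB table, before any computation is done on it.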

Approximate Dynamic Programming (ADP): A Smarter Approach

Approximate Dynamic Programming (ADP) offers a practical solution by focusing on near-optimal results instead of exact ones. It uses approximation techniques to simplify calculations so that large and complex problems can be solved efficiently.

Think of ADP as using a simplified road map instead of a detailed satellite image: you still find your way, without the unnecessary detail. This approach provides faster decisions, reduces computational demands, and makes it possible to tackle challenges in robotics, logistics, finance, and beyond.

ADP strikes a balance between simplicity and accuracy. It simplifies problems enough to make them solvable in a reasonable timeframe while maintaining enough accuracy to produce high-quality results. By using these foundational ideas, ADP opens the door to solving large-scale problems that were previously out of reach with traditional methods.

Key Concepts of Approximate Dynamic Programming

ADP is built on the foundational principles of traditional dynamic programming, but modifies them to operate with approximations rather than exact computations. Here are the key ideas:

Value Functions: A value function represents the long-term benefit of being in a particular state, considering the future decisions that will follow. It’s like a scorecard that helps decide which choices lead to the best outcomes.

Policies: A policy is a set of rules or strategies that guide decisions in each state. ADP aims to find nearly optimal policies that balance efficiency and accuracy.

Bellman Equations: These equations are the backbone of DP and ADP, providing a framework to evaluate value functions. In ADP, these equations are solved approximately to save time and resources.
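For reference, the Bellman optimality equation can be written as follows. The notation is the standard textbook one and is an assumption here, since the article does not define symbols: s is a state, a an action, r(s, a) the immediate reward, \gamma a discount factor between 0 and 1, and P(s' | s, a) the probability of moving to state s'. The second line shows the ADP idea of replacing the exact value function with a parameterized estimate.

```latex
% Exact Bellman optimality equation
V^*(s) = \max_{a} \Big[ r(s,a) + \gamma \sum_{s'} P(s' \mid s, a)\, V^*(s') \Big]

% ADP: substitute an approximation with parameters \theta
\hat{V}(s;\theta) \approx V^*(s)
```

Exact DP solves the first equation for every state. ADP instead fits \theta so that the approximation roughly satisfies it on the states that are actually sampled or simulated.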

Key Components of Approximate Dynamic Programming

ADP works by combining several essential components to approximate solutions:

State Space: This represents all possible situations or configurations in a problem. For instance, in a supply chain, each state could represent inventory levels at a given moment.

Decision Space: This is the set of all possible actions or choices available at each state. For example, a robot might decide to move left, right, or stay in place.

Approximation Mechanisms:

Function Approximation: Instead of computing exact values for every state, ADP estimates value functions using parameterized mathematical functions (e.g., linear models or neural networks).

Sampling and Simulation: ADP often uses simulations to explore a subset of states and decisions, focusing on the most important ones.

Iterative Refinement: Approximate solutions are improved over time by refining estimates and updating policies based on feedback from simulations.

Techniques in Approximate Dynamic Programming

ADP employs various techniques to tackle large-scale, complex decision-making problems. These techniques reduce computational demands, improve scalability, and maintain solution quality. Below are the key techniques used in ADP.

1. Function Approximation

Function approximation is one of the core techniques in ADP. It estimates value functions or policies when it is impractical to compute them exactly for every state.

Linear Methods: Linear function approximation uses weighted combinations of features to estimate value functions. For example, in a warehouse problem, features like inventory levels or demand trends might be combined linearly to predict future costs or benefits. Linear methods are simple, computationally fast, and suitable for problems with well-behaved relationships between variables.

Non-Linear Methods: Nonlinear techniques are used for more complex problems where relationships are not linear. These methods include polynomial regression or other advanced mathematical models capable of capturing intricate patterns in the data.

Neural Networks for Complex Approximations: In cases where state spaces are vast and relationships are highly non-linear, neural networks are particularly effective. Given enough data and training, they can approximate complex value functions closely, which makes them well suited to applications like robotics or games where interactions are complex. For instance, deep reinforcement learning (a form of ADP) leverages neural networks to approximate policies or value functions in problems like autonomous driving.
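As a minimal sketch of the linear case, the example below fits a value estimate of the form w0 + w1 * feature from a handful of sampled states using ordinary least squares. The warehouse data is hypothetical and deliberately noise-free so the result is easy to check by hand; real sampled values would be noisy, and real problems would typically use several features.

```python
def fit_linear_value(samples):
    """Least-squares fit of V_hat(s) = w0 + w1 * x from (x, value) pairs."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in samples)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    w1 = cov_xy / var_x
    w0 = mean_y - w1 * mean_x
    return w0, w1

def predict(weights, x):
    """Evaluate the fitted linear value estimate at feature value x."""
    w0, w1 = weights
    return w0 + w1 * x

# Hypothetical samples: (inventory level, estimated long-term value).
sampled = [(0, 100.0), (5, 85.0), (10, 70.0), (15, 55.0), (20, 40.0)]
weights = fit_linear_value(sampled)

# Estimate the value of a state that was never sampled.
print(predict(weights, 12))
```

Only two numbers (w0 and w1) are stored, yet the model returns an estimate for any inventory level, including ones that were never visited. That generalization is the point of function approximation.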

2. Simulation-Based Methods

Simulation-based techniques allow ADP to explore large state and decision spaces without evaluating every possible scenario.

Monte Carlo Simulations: Monte Carlo methods use random sampling to estimate the outcomes of different decisions. These simulations are beneficial when the state space is too large to model exhaustively. For example, in financial portfolio optimization, Monte Carlo simulations can estimate the future performance of various investment strategies.

Approximate Policy Iteration: Policy iteration alternates between evaluating a policy and improving it. Approximate policy iteration adapts this process by estimating value functions and policies from simulations rather than exact calculations, trading some accuracy for much lower computation per iteration.
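The evaluation step can be sketched with plain Monte Carlo sampling. The toy process below is an assumption made for illustration: under a fixed policy, each period earns a reward of 1, after which the episode ends with probability 0.1. Its true expected return is 1 / 0.1 = 10, which gives a reference point for the estimate. This covers only policy evaluation, not the improvement step.

```python
import random

def simulate_episode(rng: random.Random, stop_prob: float = 0.1) -> float:
    """Run one episode and return the total reward collected."""
    total = 0.0
    while True:
        total += 1.0                   # reward earned this period
        if rng.random() < stop_prob:   # episode ends with probability stop_prob
            return total

def monte_carlo_value(num_episodes: int, seed: int = 0) -> float:
    """Estimate the expected return by averaging simulated episodes."""
    rng = random.Random(seed)          # seeded for reproducibility
    returns = [simulate_episode(rng) for _ in range(num_episodes)]
    return sum(returns) / num_episodes

estimate = monte_carlo_value(20_000)
print(f"estimated value: {estimate:.2f} (analytic value: 10.00)")
```

The estimate gets closer to the analytic value as the number of episodes grows, with error shrinking roughly in proportion to one over the square root of the sample size.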

3. Approximate Value Iteration

Value iteration is a method of finding the optimal policy by iteratively updating value functions. In ADP, approximate value iteration modifies this process to handle large-scale problems:

Truncation: Instead of calculating value functions for every possible state, truncation limits the computation to a subset of the state space. This subset is chosen based on its importance to the problem, reducing computation while still capturing the essence of the solution.

State Aggregation: Similar states are grouped into clusters, each treated as a single "meta-state," which shrinks the state space while preserving enough detail for good decisions. For example, in grid-world navigation problems, nearby states with similar values might be aggregated to speed up computations.
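State aggregation can be sketched on a one-dimensional corridor. Everything in the setup is a hypothetical choice for illustration: 100 cells, a goal at the right end, a reward of -1 per step, a discount factor of 0.95, and blocks of 10 neighboring cells sharing one value. Each sweep backs up every cell with the Bellman update and then averages the results within each block.

```python
NUM_STATES = 100         # hypothetical corridor, cells 0..99
GOAL = NUM_STATES - 1    # terminal cell, value fixed at 0
BLOCK = 10               # cells per aggregated meta-state
GAMMA = 0.95             # discount factor
STEP_REWARD = -1.0       # cost of every move

def cluster(state: int) -> int:
    """Index of the meta-state that contains this cell."""
    return state // BLOCK

def next_state(state: int, action: int) -> int:
    """Move left (-1) or right (+1); the corridor ends act as walls."""
    return min(max(state + action, 0), GOAL)

def aggregated_value_iteration(sweeps: int = 500) -> list:
    """Value iteration that stores one value per block instead of per cell."""
    num_clusters = NUM_STATES // BLOCK
    values = [0.0] * num_clusters
    for _ in range(sweeps):
        totals = [0.0] * num_clusters
        counts = [0] * num_clusters
        for s in range(GOAL):              # the goal cell itself is not updated
            backups = []
            for action in (-1, 1):
                s2 = next_state(s, action)
                future = 0.0 if s2 == GOAL else values[cluster(s2)]
                backups.append(STEP_REWARD + GAMMA * future)
            totals[cluster(s)] += max(backups)
            counts[cluster(s)] += 1
        # Each block's new value is the average backup over its member cells.
        values = [t / c for t, c in zip(totals, counts)]
    return values

block_values = aggregated_value_iteration()
print([round(v, 2) for v in block_values])
```

The table shrinks from 100 entries to 10, and the block values still increase toward the goal, so a greedy policy still heads right. The price is that cells within a block can no longer be told apart, which is the accuracy trade-off aggregation makes.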

4. Reinforcement Learning (RL) Connection

ADP shares a close relationship with reinforcement learning (RL), and the two often overlap in methodology and application:

Shared Foundations: Both ADP and RL are rooted in the principles of dynamic programming, especially in solving Markov Decision Processes (MDPs). They use value functions, policies, and iterative improvement to solve decision-making problems.

Approximation Techniques in RL: Many RL algorithms, such as Q-learning or actor-critic methods, utilize approximation techniques similar to those in ADP to handle large state spaces.

Differences: While ADP often uses simulations based on predefined models, RL typically learns directly from interactions with the environment. This makes RL more flexible for scenarios where the underlying model is unknown or difficult to define.
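The model-free side of this contrast can be sketched with tabular Q-learning, which updates its estimates only from observed transitions. The five-cell corridor, the reward of 1 at the goal, the purely random exploration, and the step-size and discount values are all illustrative assumptions.

```python
import random

NUM_STATES = 5           # hypothetical corridor 0..4; cell 4 is the goal
GOAL = NUM_STATES - 1
ACTIONS = (-1, +1)       # index 0 = move left, index 1 = move right
GAMMA, ALPHA = 0.9, 0.5  # discount factor and learning rate

def step(state: int, action_index: int):
    """Environment: returns (next_state, reward). The learner never inspects this code."""
    nxt = min(max(state + ACTIONS[action_index], 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward

def q_learning(episodes: int = 500, seed: int = 0):
    """Learn action values from sampled transitions only."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(NUM_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):                  # cap on episode length
            a = rng.randrange(2)              # purely random exploration
            nxt, reward = step(state, a)
            target = reward + GAMMA * max(q[nxt])   # q[GOAL] stays at zero
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
            if state == GOAL:
                break
    return q

def greedy_policy(q):
    """Best action index for each non-terminal state."""
    return [max(range(2), key=lambda a: q[s][a]) for s in range(GOAL)]

q_table = q_learning()
print(greedy_policy(q_table))  # action index 1 means "move right"
```

Because Q-learning is off-policy, it can learn the greedy policy while behaving randomly. A model-based ADP method would instead use the transition rule inside step directly when computing its backups.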

Applications of Approximate Dynamic Programming

ADP has a wide range of applications across industries. Below are some of the key areas where ADP is making a significant impact:

1. Robotics and Control Systems

In robotics and control systems, ADP addresses challenges related to real-time decision-making and adaptability in dynamic environments.

Path Planning: Robots often need to find the best route to a destination while avoiding obstacles. ADP helps by approximating the optimal policy for navigating complex environments while balancing speed and safety.

Decision-Making Under Uncertainty: Many robotic systems operate in environments where outcomes are uncertain, such as variable terrain or unpredictable interactions. ADP makes near-optimal decisions by modeling uncertainties and approximating the best actions in real time.

Industrial Automation: In manufacturing, ADP is used to control robotic arms, schedule tasks, and optimize production workflows for smoother operations.

2. Operations Research

Operations research focuses on optimizing processes and resource management, making it an ideal domain for ADP.

Supply Chain Optimization: Managing supply chains involves balancing inventory levels, transportation costs, and demand uncertainties. ADP provides scalable solutions to optimize these factors so that companies can reduce costs and improve efficiency.

Inventory Management: ADP helps businesses determine when to restock products, how much to order, and how to allocate resources across multiple locations. By approximating value functions, ADP can handle large-scale inventory systems with fluctuating demand.

Scheduling and Resource Allocation: From airline crew scheduling to hospital resource allocation, ADP is used to make decisions that maximize resource utilization while meeting constraints.

3. Finance and Economics

Decision-making in finance and economics often involves balancing risks and rewards over time, making ADP an invaluable tool.

Portfolio Optimization: ADP helps investors allocate assets to maximize returns while managing risk. By approximating value functions, it can account for market uncertainties and changing economic conditions.

Risk Management: Financial institutions use ADP to model and mitigate risks, such as credit defaults or market volatility. ADP’s ability to handle large state spaces allows for more accurate predictions and better strategies.

Pricing Strategies: ADP is used to determine dynamic pricing strategies, such as adjusting product prices based on demand, competition, and market trends.

4. Big Data and AI

As data-driven decision-making becomes increasingly essential, ADP’s ability to process and act on vast amounts of information has made it a critical component in artificial intelligence and big data applications.

Data-Driven Decision-Making: ADP helps businesses make intelligent decisions based on data patterns, such as optimizing marketing strategies, improving customer retention, or personalizing user experiences.

AI in Dynamic Environments: Many AI systems, such as autonomous vehicles or virtual assistants, rely on ADP techniques to make real-time decisions in changing conditions.

High-Dimensional Problems: In big data scenarios, ADP helps tackle problems with large state and action spaces, such as recommendation systems or predictive analytics.

Advantages of Approximate Dynamic Programming

As the preceding sections show, ADP offers several advantages that make it a practical and powerful approach to large-scale decision-making problems:

Scalability: Handles large and complex problems with vast state and action spaces efficiently.

Reduced Computational Costs: Uses approximations to save time and resources compared to exact dynamic programming.

Flexibility: Adapts to problems with uncertain or changing environments, such as real-time systems.

Memory Efficiency: Avoids storing detailed information for every state by leveraging function approximations.

Practical for Real-World Applications: Solves problems like supply chain optimization, robotics, and financial modeling where traditional methods are infeasible.

Improved Decision-Making: Delivers near-optimal solutions that balance accuracy and practicality.

Integration with AI: Compatible with machine learning and reinforcement learning techniques for data-driven decision-making.

Iterative Refinement: Allows continuous improvement of solutions through iterative updates and simulations.

Limitations of Approximate Dynamic Programming

Despite these advantages, ADP has limitations:

Approximation Errors: Solutions are not exact, which can lead to suboptimal decisions in critical scenarios.

Convergence Challenges: Iterative methods may not always converge to a stable solution, especially with poor approximations.

Complexity of Function Approximation: Designing and training effective approximation models (e.g., neural networks) can be challenging and resource-intensive.

Dependence on Problem Structure: Performance heavily relies on the structure of the problem and the quality of the approximation mechanisms.

Computational Overhead for Large Simulations: While less costly than exact DP, simulation and sampling in ADP can still require significant computational resources.

Model Dependency: Requires a reasonably accurate model of the problem to work effectively; errors in the model can propagate through the solution.

Trade-offs in Accuracy: Balancing computational performance with solution quality often requires compromises that may not suit all applications.

The Role of Vector Databases in Scaling Approximate Dynamic Programming

While Approximate Dynamic Programming (ADP) addresses the challenges of complex decision-making through approximations, its practical implementation often requires scalable data management solutions. Zilliz, with its flagship product Milvus and Zilliz Cloud (managed Milvus), offers a vector database that complements decision-making frameworks by efficiently managing high-dimensional data and addressing the computational challenges inherent in real-world applications.

Milvus leverages Approximate Nearest Neighbor (ANN) techniques to deliver a scalable and high-speed platform for similarity search and retrieval. While ANN and ADP solve different problems, Milvus’s capabilities align with ADP-based workflows by supporting data-intensive tasks. Here’s how Milvus adds value:

Accelerating State Representation in Decision Systems: ADP often relies on approximating value functions or policies in high-dimensional spaces. Milvus facilitates this process by quickly retrieving similar states through ANN search, enabling efficient generalization and value estimation.

Enabling Scalable Real-Time Applications: Real-world decision-making systems often operate on massive datasets in dynamic environments. Milvus’s ANN-based architecture ensures fast retrieval and scalability, making it ideal for logistics, finance, and robotics applications.

Supporting AI-Driven Optimization: Milvus plays a critical role in AI-driven workflows where embedding data is central. For example, in recommendation systems, state embeddings can be stored and queried in Milvus to optimize personalization through ADP-like approaches.
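The idea of estimating a state's value from similar stored states can be sketched without any database at all. The example below uses a brute-force nearest-neighbor search in plain Python as a stand-in for an ANN index; it does not use the Milvus API, and the stored vectors, values, and the function name knn_value_estimate are hypothetical. In a real system, the sorted search would be replaced by a query against the vector database.

```python
import math

def knn_value_estimate(query, memory, k=3):
    """Average the stored values of the k states closest to `query`.

    `memory` is a list of (state_vector, value) pairs. The exhaustive sort
    below is the part an ANN index would replace at scale.
    """
    nearest = sorted(memory, key=lambda item: math.dist(query, item[0]))[:k]
    return sum(value for _, value in nearest) / len(nearest)

# Hypothetical state embeddings with previously estimated values.
memory = [
    ((0.0, 0.0), 1.0),
    ((0.1, 0.0), 1.2),
    ((0.0, 0.1), 0.8),
    ((5.0, 5.0), 10.0),
    ((5.1, 4.9), 11.0),
]

estimate = knn_value_estimate((0.05, 0.05), memory, k=3)
print(round(estimate, 3))  # uses only the three nearby states
```

Brute-force search scans every stored state per query, which is fine for five vectors but not for millions. That per-query cost is what approximate nearest neighbor search is designed to reduce.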

Conclusion

ADP is a transformative approach to solving complex, large-scale decision-making problems. By leveraging approximation techniques, ADP balances computational speed and solution quality, addressing challenges like the curse of dimensionality. Its applications span diverse fields, including robotics, finance, operations research, and AI. Vector databases like Milvus and Zilliz Cloud complement decision-making frameworks by efficiently managing high-dimensional data and addressing the computational challenges inherent in real-world applications.

FAQs on Approximate Dynamic Programming

What is Approximate Dynamic Programming (ADP)? ADP is a method for solving complex decision-making problems by using approximations instead of exact calculations, which keeps solutions scalable and computationally tractable.

What are the main applications of ADP? ADP is widely used in robotics for path planning, operations research for supply chain optimization, finance for portfolio management, and AI for data-driven decision-making.

What are the limitations of ADP? ADP can introduce approximation errors, face convergence challenges, and require careful design of models and simulations to ensure reliable performance.

Why is ADP important for modern technology? ADP’s ability to solve large-scale problems efficiently makes it crucial for industries dealing with dynamic systems, high-dimensional data, and real-time optimization challenges.
