20 Popular Open Datasets for Natural Language Processing

Learn the key criteria for selecting the ideal dataset for your NLP projects and explore 20 popular open datasets.

Read the entire series

- An Introduction to Natural Language Processing

- Top 20 NLP Models to Empower Your ML Application

- Unveiling the Power of Natural Language Processing: Top 10 Real-World Applications

- Everything You Need to Know About Zero Shot Learning

- NLP Essentials: Understanding Transformers in AI

- Transforming Text: The Rise of Sentence Transformers in NLP

- NLP and Vector Databases: Creating a Synergy for Advanced Processing

- Top 10 Natural Language Processing Tools and Platforms

- 20 Popular Open Datasets for Natural Language Processing

- Top 10 NLP Techniques Every Data Scientist Should Know

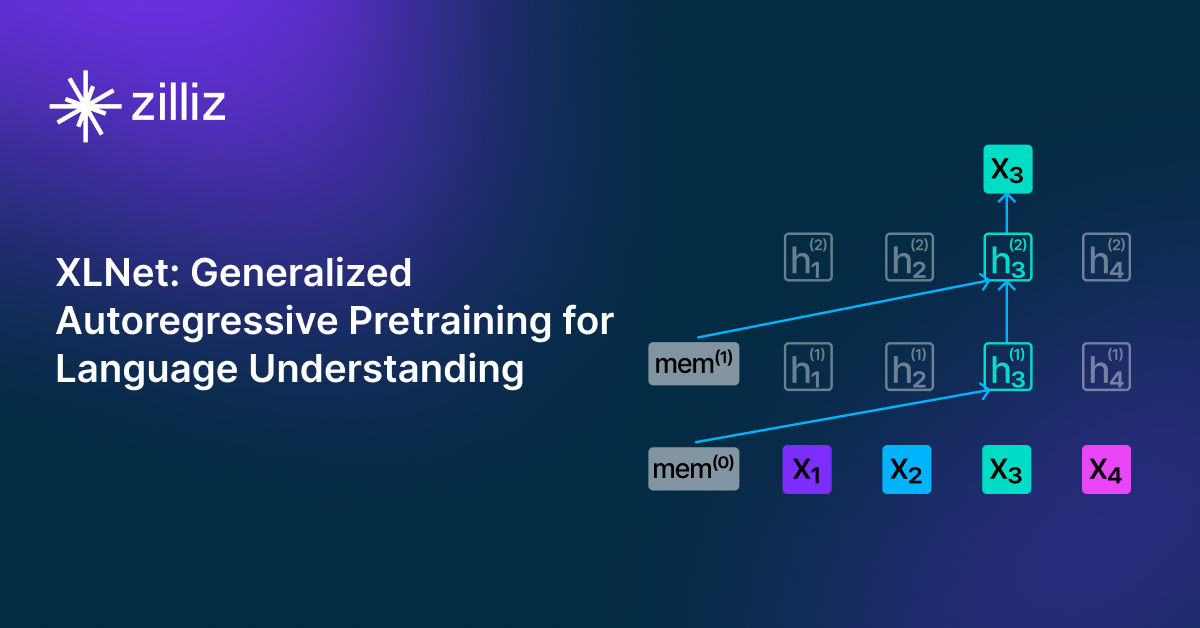

- XLNet Explained: Generalized Autoregressive Pretraining for Enhanced Language Understanding

Introduction

Natural Language Processing (NLP) is a field of machine learning where models learn to understand and derive meaning from human languages. NLP transforms unstructured data, like text and speech, into a structured format that can be used in classification tasks, summarization, machine translation, sentiment analysis, and many other applications.

Training models to perform these tasks requires large amounts of data. The more capable you want your model to be, the more data it needs. Fortunately, repositories like Hugging Face, Kaggle, GitHub, and Papers with Code offer vast, varied datasets that are readily available for public use.

In this post, we've compiled 20 of the most popular NLP datasets, categorized into general NLP tasks, sentiment analysis, text-based tasks, and speech recognition. We also explore the key criteria for selecting the ideal dataset for your project.

Criteria for Selecting NLP Datasets

When selecting an NLP dataset for training or fine-tuning your model, consider the specific goals of your project, the quality and size of the dataset, the diversity of the data, and how easily it can be accessed and used.

Purpose

The most critical factor is whether the dataset aligns with the purpose of your project. Even if a dataset has high quality, quantity, and diversity, it won't be useful if it's irrelevant to your target task or domain. For example, if you're building a sentiment analysis model for movie reviews, a dataset of IMDb movie reviews is far more effective than a larger but unrelated dataset like news articles from The New York Times. Ensuring that the dataset is fit for your specific purpose is essential for effectively training your model.

Data Quality

The quality of the dataset is also crucial because it directly impacts the performance of your model. Poor-quality data can lead to inaccurate predictions, unreliable outputs, and potentially misleading results. For instance, a model trained on a dataset filled with spelling errors, grammatical mistakes, and inconsistent labels will likely reproduce those errors. Noisy or factually incorrect data can also contribute to hallucinations in text generation tasks, where the model outputs incorrect information with confidence. Therefore, it's essential to choose datasets that have been carefully filtered for inaccuracies, biases, and redundancies, so the data is clean and reliable for training your model.
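As a rough illustration of this kind of filtering, the sketch below normalizes whitespace, drops very short examples, and removes duplicates that differ only in case. The function name `clean_corpus` and the `min_words` threshold are hypothetical choices for this example and do not come from any library. Real pipelines usually add steps such as language detection, near-duplicate detection, and label checks.

```python
import re
import unicodedata


def clean_corpus(texts, min_words=3):
    """Normalize, filter, and de-duplicate raw text examples.

    `min_words` is an assumed threshold for this sketch; tune it per task.
    """
    seen = set()
    cleaned = []
    for text in texts:
        # Normalize Unicode forms and collapse runs of whitespace.
        normalized = unicodedata.normalize("NFKC", text)
        normalized = re.sub(r"\s+", " ", normalized).strip()
        # Drop examples that are too short to carry a useful signal.
        if len(normalized.split()) < min_words:
            continue
        # Treat case-only variants as duplicates and keep the first one seen.
        key = normalized.casefold()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    "Great   movie, would watch again!",
    "great movie, would watch again!",
    "ok",
    "The plot was thin\nbut the acting was superb.",
]
print(clean_corpus(raw))
```

Keeping the first occurrence of each duplicate preserves the original casing of the text, which can matter for tasks such as named entity recognition.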

Dataset Size

For your model to learn linguistic and semantic relationships effectively, you'll need large amounts of data. Generally, the more parameters your model has, the larger the dataset it requires.

The research paper “Training Compute-Optimal Large Language Models” by Hoffmann et al. (2022) introduced the Chinchilla scaling laws, which suggest that compute-optimal training uses roughly 20 training tokens per model parameter (a parameter-to-token ratio of about 0.05). For example, a model with 1 billion parameters would need approximately 20 billion tokens for compute-optimal training. On the other hand, much smaller datasets can suffice for fine-tuning a sentiment analysis model if they accurately represent the linguistic features and domain of the target task. For instance, a sentiment analysis model with 100 million parameters might only need 10 million high-quality data points.

The table below lists example model sizes alongside their approximate compute-optimal dataset sizes under the Chinchilla rule of thumb of about 20 tokens per parameter:

| Model Size (parameters) | Optimal Dataset Size (tokens) |
| --- | --- |
| 70M | 1.4B |
| 1B | 20B |
| 10B | 200B |
| 70B | 1.4T |

Table: Example model sizes and their approximate compute-optimal dataset sizes
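The arithmetic behind such a table can be sketched in a few lines. This example assumes the commonly cited approximation of about 20 training tokens per parameter associated with Hoffmann et al. (2022). The `tokens_per_param` default and both function names are assumptions made for illustration, so adjust the ratio to whatever scaling rule you adopt.

```python
def chinchilla_tokens(n_params, tokens_per_param=20.0):
    """Estimate a compute-optimal token budget for a model size.

    The default ratio of 20 tokens per parameter is an assumed rule of thumb.
    """
    return n_params * tokens_per_param


def human_readable(count):
    """Format a large count with an M, B, or T suffix."""
    for threshold, suffix in ((1e12, "T"), (1e9, "B"), (1e6, "M")):
        if count >= threshold:
            return f"{count / threshold:g}{suffix}"
    return f"{count:g}"


for n_params in (70e6, 1e9, 10e9, 70e9):
    tokens = chinchilla_tokens(n_params)
    print(f"{human_readable(n_params)} parameters -> {human_readable(tokens)} tokens")
```

This estimate applies to pretraining from scratch. Fine-tuning an already pretrained model typically needs far less data.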

Diversity

A diverse dataset is essential for creating a well-rounded model capable of handling a wide range of tasks with greater accuracy. Just as diverse experiences enrich a human personality, diverse data allows an NLP model to perform better across different contexts. For example, if you're training a language translation model, relying solely on a dataset of formal academic texts will limit the model's ability to translate casual conversations or slang. However, by incorporating a mix of prose, academic writing, interviews, and coding samples across various languages, the model will be better equipped to handle different linguistic contexts and produce more accurate translations.
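One simple way to check diversity before training is to measure how much of the corpus comes from each source or genre. The sketch below assumes each record carries a `source` field, which is a hypothetical schema for this example. The 10% cutoff used to flag underrepresented sources is likewise an arbitrary assumption.

```python
from collections import Counter


def source_mix(records, key="source"):
    """Return the share of records contributed by each source."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


def underrepresented(mix, min_share=0.1):
    """List sources whose share falls below `min_share` (assumed cutoff)."""
    return sorted(name for name, share in mix.items() if share < min_share)


# Toy corpus: mostly academic text, with little conversational or code data.
corpus = (
    [{"source": "academic"}] * 12
    + [{"source": "prose"}] * 6
    + [{"source": "interview"}] * 1
    + [{"source": "code"}] * 1
)

mix = source_mix(corpus)
print(mix)
print(underrepresented(mix))
```

A skewed mix like this one suggests collecting or upsampling more data from the flagged sources before training.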

Accessibility

Accessibility refers to how easily you can obtain the data needed for your project. The easier it is to access the required data, the more efficient your training process will be. Open datasets available on platforms like Hugging Face, Kaggle, and Papers with Code are often the first choice for many researchers due to their ease of access and comprehensive documentation.

Figure: Hugging Face has a huge collection of easily accessible open datasets for NLP

For example, downloading a dataset like Project Gutenberg from Hugging Face is straightforward: install the datasets library and call the load_dataset function. This level of accessibility speeds up development and helps you get the right data to train your models.

pip install datasets

from datasets import load_dataset

training_dataset = load_dataset("manu/project_gutenberg")

20 Popular Open Datasets for NLP

Having covered key criteria for selecting the right datasets for your NLP projects, let's explore 20 of the most popular datasets. These datasets fall into four categories: general NLP tasks, sentiment analysis, text-based tasks, and speech recognition.

General NLP Projects

For general NLP tasks, datasets need to be extensive and diverse, capturing the complexities and nuances of language. This ensures that models can generalize well across different linguistic contexts.

Blog Authorship Corpus: A dataset of nearly 700,000 posts by 19,000 authors on Blogger.com, totaling 140 million English words. This dataset is valuable for stylistic and authorship analysis, but you should weigh the privacy and ethical concerns of working with personal blogs.

Recommender Systems and Personalization Datasets: This collection includes vast datasets from sources like Amazon, Google, Twitch, and Reddit, featuring user interactions, reviews, and ratings in various formats. These datasets offer diverse sources but may require aggregation and preprocessing.

Project Gutenberg: An extensive collection of over 50,000 public domain books in various languages, providing a rich resource for language modeling across different periods. The dataset includes text in various formats such as plain text and HTML.

Yelp Open Dataset: Includes nearly 7 million reviews for over 150,000 businesses, along with data like user tips and business attributes. While extensive, it focuses on business reviews and may not suit general NLP tasks outside that domain.

SQuAD (Stanford Question Answering Dataset): A large-scale dataset for question answering (QA) tasks, containing over 100,000 question-answer pairs where the answer is a segment of text extracted from a corresponding passage. It has become a standard benchmark for QA models and includes SQuAD 2.0, which introduces unanswerable questions.

Sentiment Analysis

Sentiment analysis is an NLP technique used to identify and classify the sentiment expressed in a piece of text. The primary goal is to determine whether the text conveys a positive, negative, neutral, or mixed sentiment.

Sentiment analysis datasets are crucial for capturing opinions, emotions, and the nuances of phrasing. They should include diverse labels and rating systems to help models learn how sentiment is expressed in different ways.

Sentiment140: A dataset of 1.6 million tweets, cleaned of emoticons, and labeled with polarity values from 0 (negative) to 4 (positive). It includes fields like polarity, date, user, and text. Note that the dataset may reflect characteristics of Twitter data, such as slang and informal language.

Multi-Domain Sentiment Analysis Dataset: Contains product reviews from Amazon across various categories, with star ratings that can be converted into binary labels. Before using it, check that the categories you need are proportionally represented and relevant to your use case.

SentimentDictionaries: Two dictionaries with over 80,000 entries tailored for sentiment analysis, one from IMDb movie reviews and the other from U.S. 8-K filings. They may require additional processing for specific applications.

OpinRank Dataset: A dataset of 300,000 car and hotel reviews from Edmunds and TripAdvisor, organized by car model or travel destination. Check how recent the reviews are and consider review authenticity before relying on it.

Stanford Sentiment Treebank: Contains sentiment annotations for over 10,000 entries from Rotten Tomatoes reviews, rated on a scale from 1 (most negative) to 25 (most positive). The dataset includes annotations at different levels of granularity.
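The datasets above use different label schemes, so a common preprocessing step is to map them onto a shared binary label. The sketch below shows one way to do that for Sentiment140-style polarity values and for star ratings. The function names are hypothetical, and treating 3 stars as neutral and leaving it unlabeled is an assumption for this example, not a rule defined by the datasets.

```python
def sentiment140_to_binary(polarity):
    """Map polarity 0 (negative) or 4 (positive) to 0 or 1.

    Any other value, such as a neutral 2, has no binary label and returns None.
    """
    return {0: 0, 4: 1}.get(polarity)


def stars_to_binary(stars, neutral=3):
    """Map a star rating to 1 (positive), 0 (negative), or None (neutral).

    The neutral midpoint of 3 is an assumed convention for this sketch.
    """
    if stars > neutral:
        return 1
    if stars < neutral:
        return 0
    return None


reviews = [("Loved it", 5), ("It was fine", 3), ("Broke in a week", 1)]
# Keep only reviews that received a binary label.
labeled = [
    (text, stars_to_binary(stars))
    for text, stars in reviews
    if stars_to_binary(stars) is not None
]
print(labeled)
```

Dropping neutral examples keeps the binary task clean, at the cost of discarding some data.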

Text-Based Tasks

Text-based NLP tasks require datasets that are both large and diverse, supporting use cases like machine translation, text summarization, text classification, named entity recognition (NER), and question answering (QA).

20 Newsgroups: A collection of 20,000 documents from 20 different newsgroups, often used for text classification and clustering tasks. Available in three versions: original, no duplicates, and dates removed. Note that it may require custom preprocessing.

Microsoft Research WikiQA Corpus: Ideal for QA tasks, containing over 3,000 questions and 29,000 answers from Bing query logs. Verify that its quality and coverage are relevant to your specific QA model.

Jeopardy: A dataset of over 200,000 questions from the TV show "Jeopardy!" with categories, values, and descriptors covering episodes from 1964 to 2012. Keep in mind potential biases arising from the nature of the questions and the context of the TV show.

Legal Case Reports Dataset: Includes summaries of over 4,000 Australian legal cases, making it an excellent resource for training models on text summarization. Check that it covers the range of case types your application needs.

WordNet: A large lexical database of English, with nouns, verbs, adjectives, and adverbs grouped into cognitive synonyms, or “synsets,” linked by conceptual-semantic and lexical relations. It’s a great resource for lexical semantics and can be integrated with various NLP tasks.

Speech Recognition

Speech recognition datasets must be of high quality to capture the complexities of verbal communication, including minimizing background noise and ensuring high signal quality. Diversity in speakers, accents, and contexts is also essential to train models that are robust and adaptable to various real-world scenarios.

Spoken Wikipedia Corpora: A collection of Wikipedia articles narrated in English, German, and Dutch, with hundreds of hours of aligned audio in each language. Coverage is limited to these three languages, so check the quality and coverage for the language you need.

LJ Speech Dataset: Contains 13,100 clips of human-verified transcriptions from audiobooks, featuring a single speaker for clarity. Because it features only one speaker, models trained on it may not generalize well to other voices.

M-AI Labs Speech Dataset: Nearly 1,000 hours of audio and transcriptions from LibriVox and Project Gutenberg, organized by gender and language. Check that it includes a sufficiently diverse range of speakers and contexts for your use case.

Noisy Speech Database: A parallel dataset of clean and noisy speech, useful for building speech enhancement and text-to-speech models. Review the types of noise it includes to confirm they match your target conditions.

TIMIT: An acoustic-phonetic speech dataset created by Texas Instruments and MIT, featuring 630 speakers reading phonetically rich sentences in eight American English dialects. Given advances in speech technology, check whether it is still relevant to your use case.


Other Sources of NLP Data

While publicly available datasets are invaluable for machine learning development and research, there are additional methods to obtain NLP training data that may better suit specific needs or provide unique advantages:

Private Datasets

Private datasets are personally curated collections of data, often created in-house or purchased from specialized dataset providers. These datasets are tailored to specific needs and are typically of high quality, with careful attention given to relevance, accuracy, and bias. However, acquiring private datasets can be costly and may require significant resources for data collection and management. They are especially useful for projects requiring domain-specific data or when working on proprietary applications.

Directly From the Internet

Scraping data directly from websites is another method to gather NLP data. This approach allows for the collection of large volumes of data quickly. However, it comes with significant challenges: scraped data is often unstructured, may contain inaccuracies or biases, and could include sensitive or confidential information. Additionally, scraping data from websites can raise legal and ethical concerns, especially if the data is copyrighted or if proper permissions are not obtained.
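Two of the challenges above, permissions and unstructured content, can be partly addressed in code. The sketch below uses only the Python standard library to check a URL against robots.txt rules and to strip markup from a page. The helper names `is_allowed` and `html_to_text` and the `my-nlp-bot` user agent are hypothetical. Passing a robots.txt check is a courtesy signal only and does not settle copyright or terms-of-service questions.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Check a URL against robots.txt rules supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class _TextExtractor(HTMLParser):
    """Collect text content, skipping <script> and <style> blocks."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def html_to_text(html):
    """Return the page text with tags removed and whitespace collapsed."""
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()
    return " ".join(" ".join(extractor.chunks).split())


robots = "User-agent: *\nDisallow: /private/\n"
page = "<p>Hello <b>world</b></p><script>var x = 1;</script>"

print(is_allowed(robots, "my-nlp-bot", "https://example.com/blog/post"))
print(is_allowed(robots, "my-nlp-bot", "https://example.com/private/data"))
print(html_to_text(page))
```

The robots.txt content is passed in as a string here so the example runs offline. In practice you would fetch it from the target site before requesting any pages.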

By exploring these additional sources, you can complement publicly available datasets and potentially find data that is more closely aligned with your project's requirements.

Summary

Choosing the right dataset is crucial for developing effective NLP models. Consider factors like the dataset's relevance to your project’s purpose, its quality, size, diversity, and accessibility to ensure optimal performance. This post has covered 20 popular open datasets across various NLP tasks, offering insights into their specific applications and benefits.

In addition to publicly available datasets, private datasets and web scraping offer alternative data sources. While private datasets can provide high-quality, domain-specific data, they can be expensive. Scraping data from the web allows for large-scale collection but comes with challenges such as data quality issues and legal concerns.

