ChatGPT+ Vector database + prompt-as-code - The CVP Stack

I am excited to share a demo application we have been working on here at Zilliz. We call it OSS Chat, a chatbot you can use to get technical knowledge about your favorite open-source projects. This inaugural release only supports Hugging Face, Pytorch, and Milvus, but we plan to expand it to several of your favorite open-source projects. We built OSS Chat using OpenAI's ChatGPT and a vector database, Zilliz! We are offering it as a free service to anyone who uses it. If you'd like your open-source project listed, let us know.

Background on why we built OSS Chat

ChatGPT has constraints due to its limited knowledge base, sometimes resulting in hallucinating answers when asked about unfamiliar topics. We are introducing the new AI stack, ChatGPT+Vector database+prompt-as-code, or the CVP Stack, to overcome this constraint.

ChatGPT is exceptionally good at answering natural language queries. When combined with a prompt that links the user's query and the retrieved text, ChatGPT generates a relevant and accurate response. This approach can alleviate ChatGPT from providing "hallucinating answers."

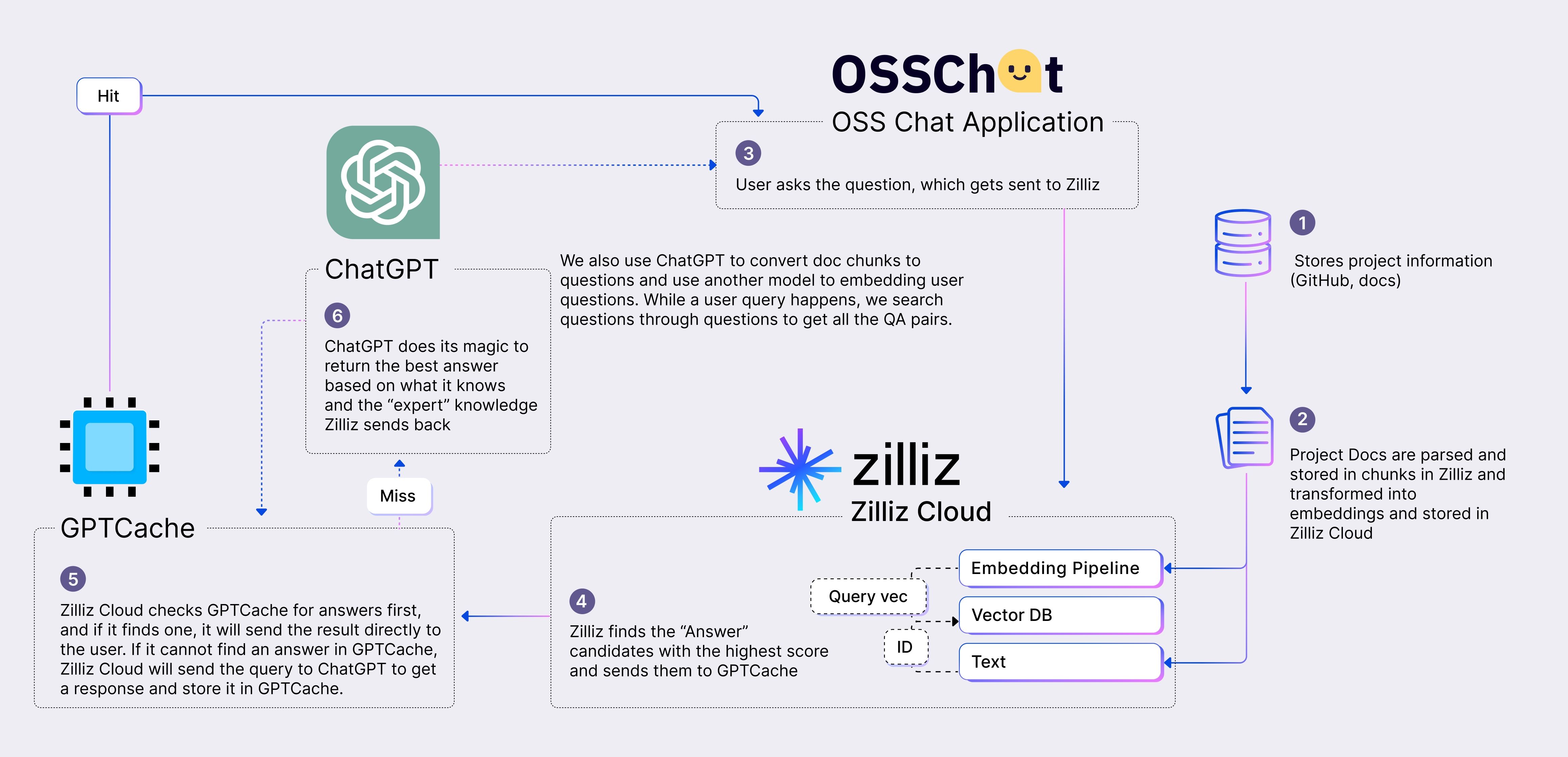

We have built OSS Chat as a working demonstration of the CVP stack. OSS Chat leverages various GitHub repositories of open-source projects and their associated docs pages as the source of truth. We convert this data into embeddings and store the embeddings in Zilliz and the related content in a separate data store. When the user interacts with OSS Chat by asking questions about any open-source project, we trigger a similarity search in Zilliz to find a relevant match. The retrieved data is fed into ChatGPT to generate a precise and accurate response.

OSS Chat Architecture

OSS Chat Architecture

Hallucinating the answer

I want to show you a few Q&A examples using ChatGPT and OSS Chat to demonstrate how you can remove some incorrect responses.

Q1 Does Hugging Face support OpenAI Whisper

When we asked ChatGPT this question, it admitted its limitation and gave us an accurate answer (it didn't have the data), which wasn't useful.

As of my knowledge cutoff of September 2021, Hugging Face has not announced support for OpenAI Whisper. However, it is always possible that they may add support in the future. OpenAI Whisper is a new language model from OpenAI, and it may take some time for other companies and developers to integrate it into their platforms and products.

With OSS Chat, because we are pulling from the Huggingface documentation site, we are able to get an accurate answer. Yes, Hugging Face supports OpenAI Whisper. The FlaxWhisperModel class is available in the transformers library, which is a wrapper around the OpenAI Whisper model. You can use this class to load the pre-trained OpenAI Whisper model and use it for various natural language processing tasks such as text classification, question answering, and text generation.

Q2 What does torch.compile API do?

We asked ChatGPT a question about a PyTorch API and got a long answer about what it is (which we didn't ask) and a response stating that PyTorch does not have a torch.compile API.

As of my knowledge cutoff of September 2021, there is no torch.compile API in PyTorch, which is a popular open-source machine learning library developed by Facebook AI Research.

However, PyTorch provides various APIs related to compiling and optimizing neural network models, such as torch.nn.Module, which is a base class for all neural network modules in PyTorch. torch.nn.Module provides various methods and hooks for defining and optimizing neural network models.

Additionally, PyTorch provides an interface to integrate with Just-In-Time (JIT) compilers, such as the TorchScript JIT compiler, which can be used to optimize and compile PyTorch models for efficient execution on various hardware platforms. The JIT compiler can be used to convert PyTorch models into a standalone, serialized representation that can be loaded and executed in other environments or languages. In summary, while PyTorch does not have a torch.compile API, it provides various APIs and tools for optimizing and compiling neural network models.

Here is what OSSChat told us:

The purpose of the torch.compile API is to speed up PyTorch code by compiling it ahead of time. It can handle arbitrary Python code with minimal changes to existing code and can handle data-dependent control flow. It is also able to handle non-PyTorch function calls, which TorchScript tracing treats as constants and can result in incorrect results.

Q3 What is TTL in Milvus and how do I set it

For this final question, we asked ChatGPT, and it provided us with a reasonable answer (it even had code snippets!), but it wasn't an answer to the question. Instead, it was one of those "hallucinations" where it must have compiled the answer.

In Milvus, TTL (Time To Live) is a feature that allows you to set an expiration time for your data. When you set a TTL for a collection or partition in Milvus, Milvus will automatically delete any data that has exceeded the specified TTL. To set TTL for a collection or partition in Milvus, you can use the set_collection_ttl or set_partition_ttl API respectively. Here is an example:

pythonCopy code

import datetime

from milvus import Milvus, DataType

milvus = Milvus()

# create a collection

collection_param = {

'collection_name': 'my_collection',

'dimension': 128,

'index_file_size': 1024,

'metric_type': DataType.FLOAT_VECTOR,

}

milvus.create_collection(collection_param)

# set TTL for the collection

now = datetime.datetime.now()

delta = datetime.timedelta(days=7) # TTL of 7 days

expiry_time = now + delta

milvus.set_collection_ttl('my_collection', expiry_time.timestamp())

# create a partition

partition_param = {

'collection_name': 'my_collection',

'partition_name': 'my_partition',

}

milvus.create_partition(partition_param)

# set TTL for the partition

milvus.set_partition_ttl('my_collection', 'my_partition', expiry_time.timestamp())

In this example, we create a collection and a partition in Milvus. We then set a TTL of 7 days for both the collection and partition using the set_collection_ttl and set_partition_ttl APIs respectively. The TTL is specified as a Unix timestamp, which is the number of seconds since January 1, 1970. We calculate the expiry time by adding the TTL to the current time using the datetime module.

Note that you need to enable the TTL feature when you start Milvus. This can be done by setting the TTL_CHECK_INTERVAL parameter in the Milvus configuration file. The default value is 60 seconds.

OSSchat, on the other hand, gave us the correct answer: a single line of code to set the desired seconds in the collection set property.

TTL stands for "Time to Live" in Milvus. It is a feature that allows you to set an expiration time for data in a collection. When data in a collection expires, it will be automatically cleaned up and will not be involved in searches or queries.

To set the TTL value for a collection in Milvus, you can use the collection.set_properties() method in Python. For example, to set the TTL value to 1800 seconds (30 minutes), you can use the following code:

collection.set_properties(properties={"collection.ttl.seconds": 1800})

You can also set the TTL value when creating a collection by specifying the collection.ttl.seconds property in the collection's properties. The value should be specified in seconds, and should be 0 or greater. A value of 0 means that TTL is disabled.

Get started now

In the coming days, we will add more functionality to OSS Chat and write up accompanying blogs to let you know how we build it. In the meantime, try playing with OSS Chat and let us know what capabilities you would like to see, or if you have an open-source project to add, let us know that too!

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

How to Build an Enterprise-Ready RAG Pipeline on AWS with Bedrock, Zilliz Cloud, and LangChain

Build production-ready enterprise RAG with AWS Bedrock, Nova models, Zilliz Cloud, and LangChain. Complete tutorial with deployable code.

Data Deduplication at Trillion Scale: How to Solve the Biggest Bottleneck of LLM Training

Explore how MinHash LSH and Milvus handle data deduplication at the trillion-scale level, solving key bottlenecks in LLM training for improved AI model performance.

Milvus WebUI: A Visual Management Tool for Your Vector Database

Milvus WebUI is a built-in GUI introduced in Milvus v2.5 for system observability. WebUI comes pre-installed with your Milvus instance and offers immediate access to critical system metrics and management features.