DeepSeek vs. OpenAI: A Battle of Innovation in Modern AI

Introduction

The rapid advancements in AI technology have led to models that not only excel in complex tasks but also seamlessly adapt to a wide range of applications, enhancing their utility across industries. OpenAI, a pioneer in this space, continues to push the boundaries with innovative models that redefine natural language processing and machine learning capabilities. These advancements have sparked a wave of innovation, thereby making AI more accessible, efficient, and capable of performing tasks that once seemed out of reach.

However, OpenAI's dominance is now being challenged by emerging competitors, such as DeepSeek, a Chinese AI company that has introduced DeepSeek R1, an open-source model that rivals some of the most advanced models available. DeepSeek R1 stands out due to its focus on cost efficiency and its ability to match the performance of high-end models while keeping operational costs significantly lower. This emerging player has begun to capture attention, especially for organizations and developers seeking a balance between performance and affordability.

In this blog, we will explore two standout models from OpenAI: OpenAI o1, which is renowned for its advanced reasoning capabilities and deliberate "thinking before responding" approach, and OpenAI o3-mini, a faster, more cost-efficient model optimized for STEM applications. Additionally, we will compare these models to DeepSeek R1, which offers similar performance and capabilities, but at a fraction of the cost. By examining their key features, performance benchmarks, and use cases, we aim to provide you with insights on how to choose the right AI model for your specific needs, whether you're working in research, software development, healthcare, or other fields where complex reasoning and cost efficiency are paramount.

OpenAI o1 Overview

OpenAI o1 launched as a preview in September 2024, with the full version following in December 2024, and represents a significant leap forward in AI reasoning. Unlike its predecessors, o1 is specifically designed to tackle complex, multi-step tasks using a chain-of-thought reasoning approach, which is enhanced by large-scale reinforcement learning. This method enables the model to think through problems step by step, improving its problem-solving capabilities and making it particularly effective for logical reasoning and decision-making in challenging scenarios.

Key Features

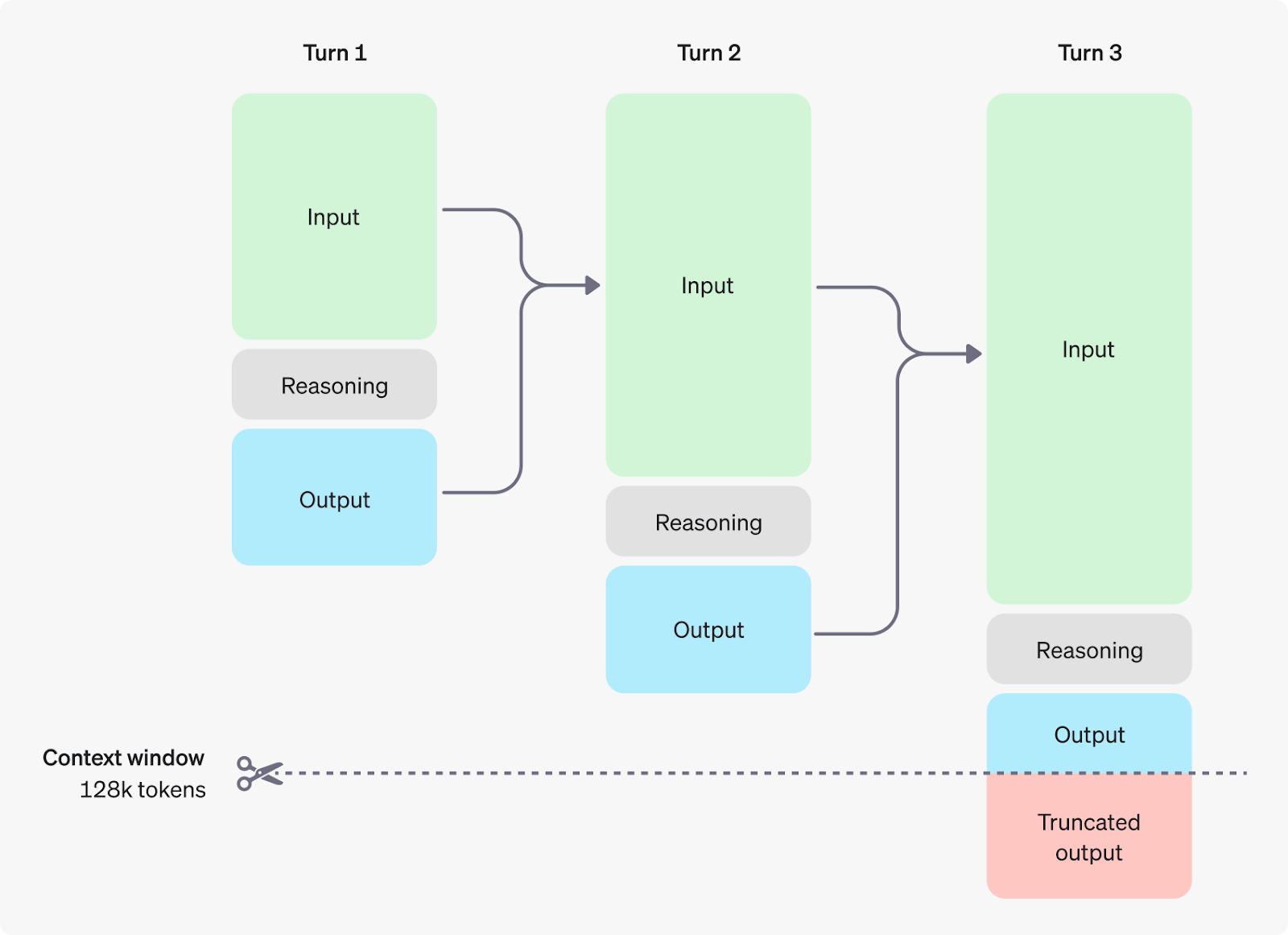

Model Architecture: OpenAI o1 is built on a transformer-based architecture optimized for reasoning and problem-solving. It employs a unique mechanism for generating extended chains-of-thought, allowing the model to perform deeper and more thorough analyses before providing an answer. This extended reasoning process enhances accuracy and reliability for complex queries.

Figure 1: Multi-step conversation with reasoning tokens (Source)
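To make the idea of reasoning tokens in a multi-step conversation more concrete, here is a minimal sketch of the token bookkeeping. It assumes that reasoning tokens occupy the context window while a turn is being generated and are dropped afterwards, so that only the user input and the visible answer carry over to the next turn. The function name, the turn sizes, and the 128,000-token window are hypothetical values chosen for illustration, not figures from this article.

```python
def simulate_context(turns, context_window):
    """Track context usage across turns of a reasoning-model conversation.

    Each turn is (user_tokens, reasoning_tokens, answer_tokens).
    Assumption: reasoning tokens count toward the window during the turn
    but are discarded afterwards; user and answer tokens carry over.
    """
    carried = 0   # tokens kept from earlier turns
    peaks = []    # peak context usage reached in each turn
    for user_tokens, reasoning_tokens, answer_tokens in turns:
        peak = carried + user_tokens + reasoning_tokens + answer_tokens
        if peak > context_window:
            raise ValueError("turn does not fit in the context window")
        peaks.append(peak)
        # Reasoning tokens are dropped; only input and visible output remain.
        carried += user_tokens + answer_tokens
    return peaks, carried


# Hypothetical three-turn conversation.
turns = [(200, 3000, 400), (150, 5000, 600), (100, 2000, 300)]
peaks, carried = simulate_context(turns, context_window=128_000)
print(peaks, carried)
```

Under these assumptions, the peak usage of a turn is dominated by how long the model reasons rather than by the visible conversation history, which is one reason extended reasoning affects both latency and cost.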

Training Data: OpenAI o1 was trained on a combination of filtered publicly available datasets and proprietary data from partnerships to enhance its reasoning and technical capabilities. Its training data includes web data, open-source datasets, reasoning datasets, paywalled content, specialized archives, and industry-specific resources, ensuring strong performance in both general knowledge and complex problem-solving.

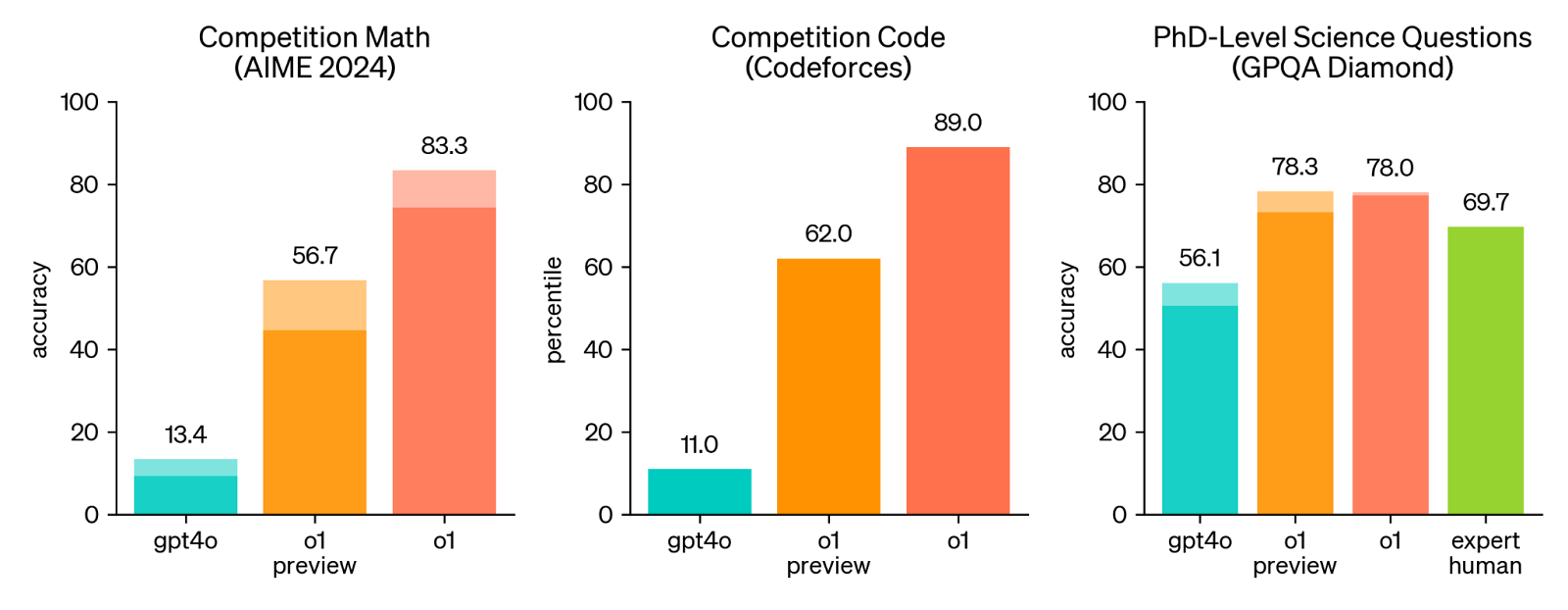

Performance Benchmarks: While OpenAI o1 is slower than earlier models like GPT-4o due to its reasoning processes, it consistently ranks higher in accuracy for complex tasks, particularly in STEM fields like science, mathematics, and coding. It achieved an impressive 83% on the American Invitational Mathematics Examination (AIME), and ranked in the 89th percentile on Codeforces competitive programming challenges. Additionally, it has demonstrated PhD-level accuracy in benchmarks for physics, biology, and chemistry problems.

Figure 2: Performance Benchmarks OpenAI o1 (Source)

Use Cases and Application Areas: OpenAI o1 is widely applicable in fields requiring advanced reasoning, such as scientific research (data analysis, hypothesis testing), software development (multi-step workflows, debugging), healthcare (diagnosis development), and educational applications (solving complex puzzles or crosswords).

OpenAI o3-mini Overview

The OpenAI o3-mini was officially released in late January 2025, following a preview in December 2024. This model continues OpenAI's progression in enhancing reasoning capabilities for complex tasks, offering notable improvements over previous models like o1, particularly in speed, efficiency, and performance across coding, mathematics, and science challenges.

Key Features

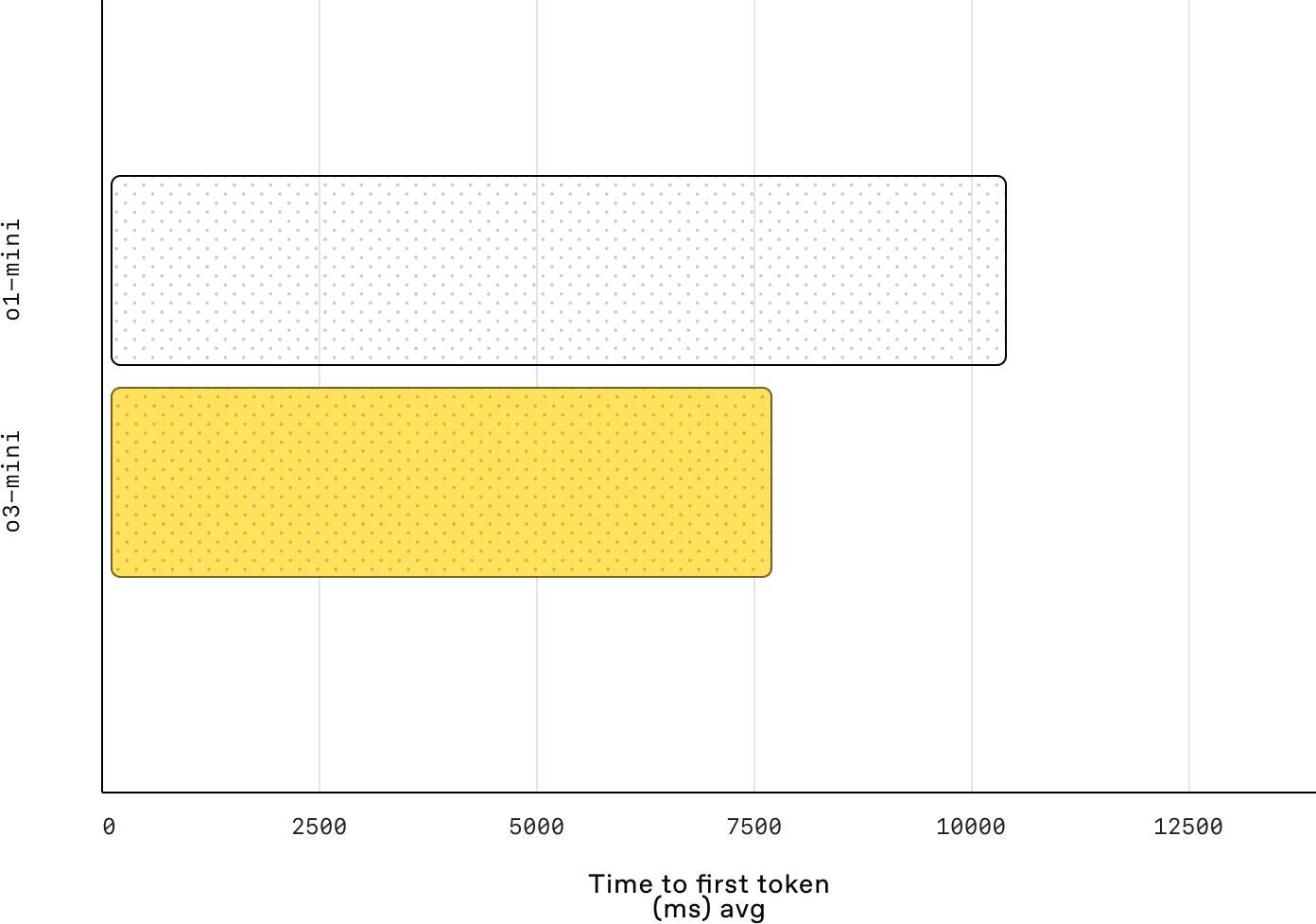

Model Architecture: Similar to OpenAI o1, o3-mini is based on a transformer architecture specifically optimized for advanced reasoning. It leverages the chain-of-thought technique to enable step-by-step problem-solving, combined with large-scale reinforcement learning to enhance reasoning. A standout feature of o3-mini is its reduced latency compared to o1, allowing for faster results while maintaining high accuracy in complex tasks.

Figure 3: Latency Comparison o3-mini vs. o1 (Source)

Training Data: Like its predecessor, o3-mini was trained on a combination of publicly available datasets, proprietary OpenAI data, and advanced filtering techniques to ensure a safe and effective training setup.

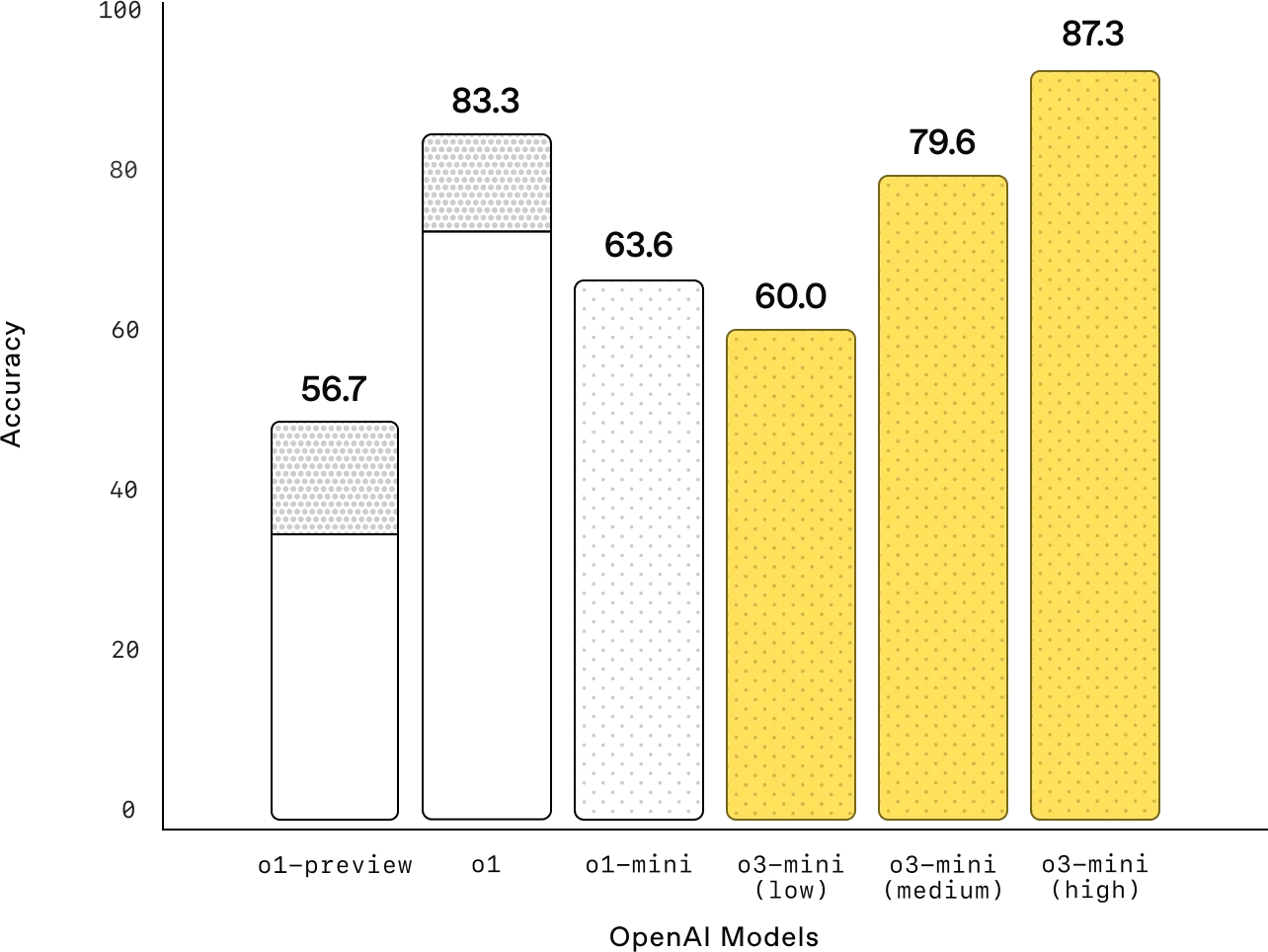

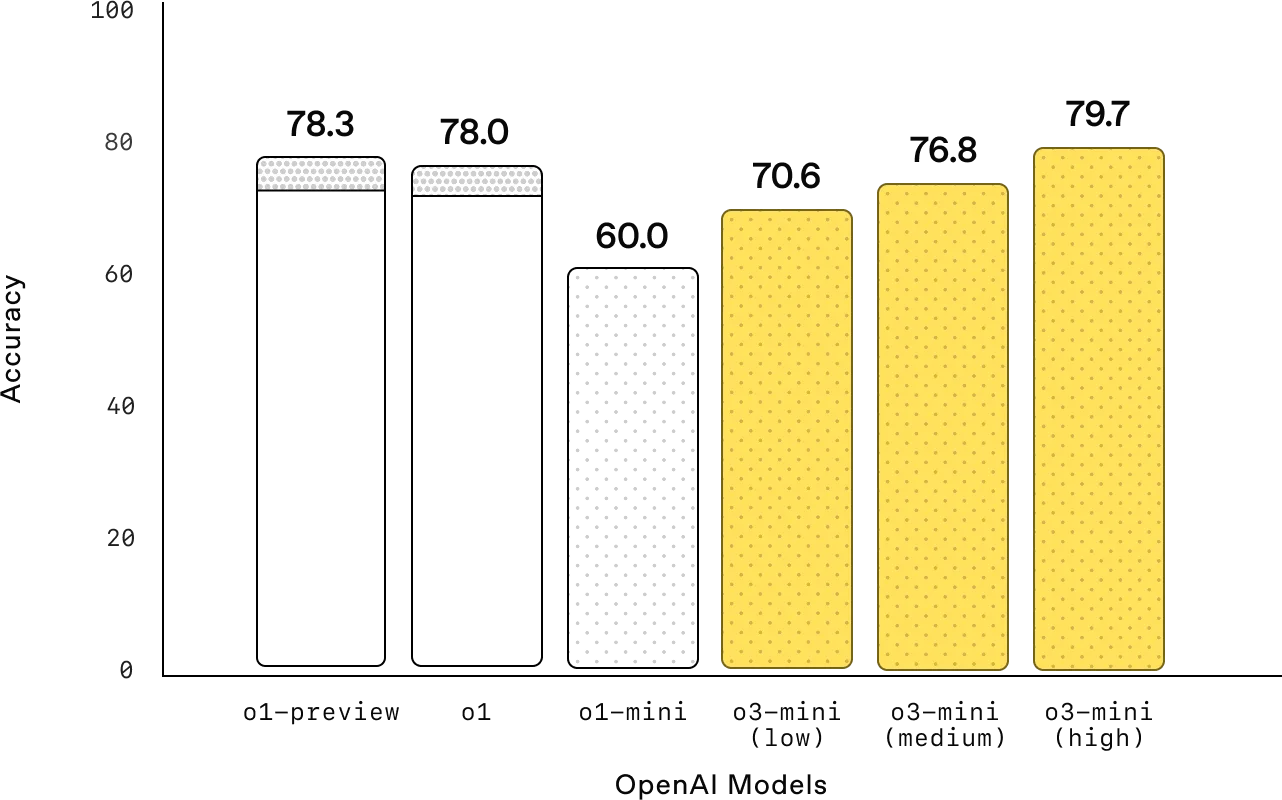

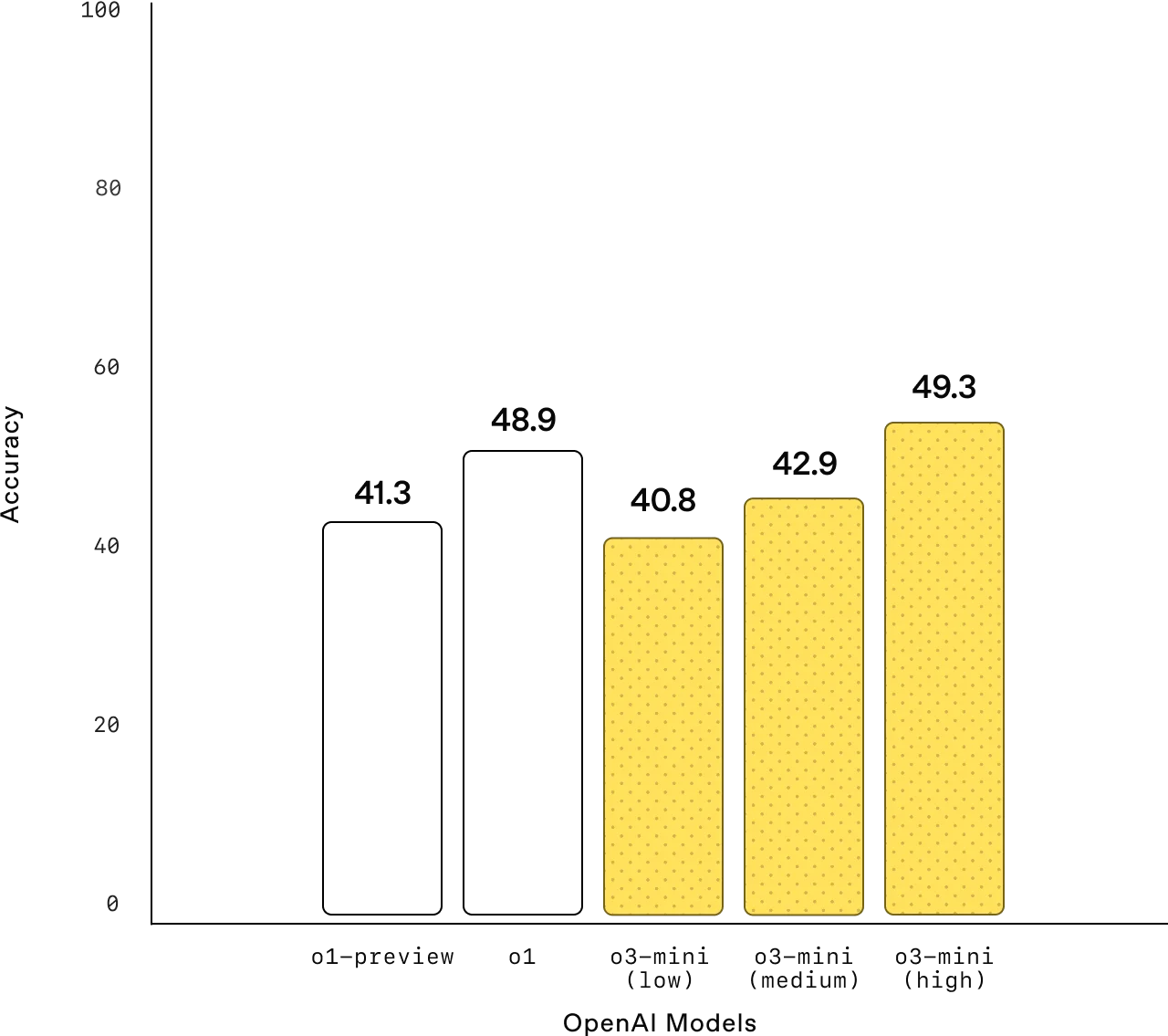

Performance Benchmarks: OpenAI o3-mini has demonstrated exceptional performance on benchmark datasets, achieving 87.3% accuracy in competition-level math problems, 79.7% accuracy on PhD-level science questions, and 49.3% accuracy in software programming, surpassing OpenAI o1 at higher reasoning levels.

Figure 4: Performance Benchmarks o3-mini vs. o1: Mathematics, AIME (Source)

Figure 5: Performance Benchmarks o3-mini vs. o1: PhD-level science (Source)

Figure 6: Performance Benchmarks o3-mini vs. o1: Software Engineering (Source)

Use Cases and Application Areas: While OpenAI o3-mini shares many use cases with o1, such as scientific research, software development, healthcare, and educational problem-solving, it stands out in domains where high-level reasoning with lower latency is essential. For instance, in financial analysis, o3-mini can efficiently handle complex risk forecasting, fraud detection, and investment strategy simulations, all while quickly processing large volumes of data.

DeepSeek R1 Overview

DeepSeek R1, released in January 2025, is an open-source AI model developed by the Chinese company DeepSeek. It is specifically designed for advanced reasoning and problem-solving, leveraging a combination of chain-of-thought reasoning, supervised fine-tuning, and reinforcement learning to enhance logical inference. One of its standout features is its ability to achieve performance levels comparable to leading AI models, such as OpenAI o1, while maintaining significantly lower operational costs.

Key Features

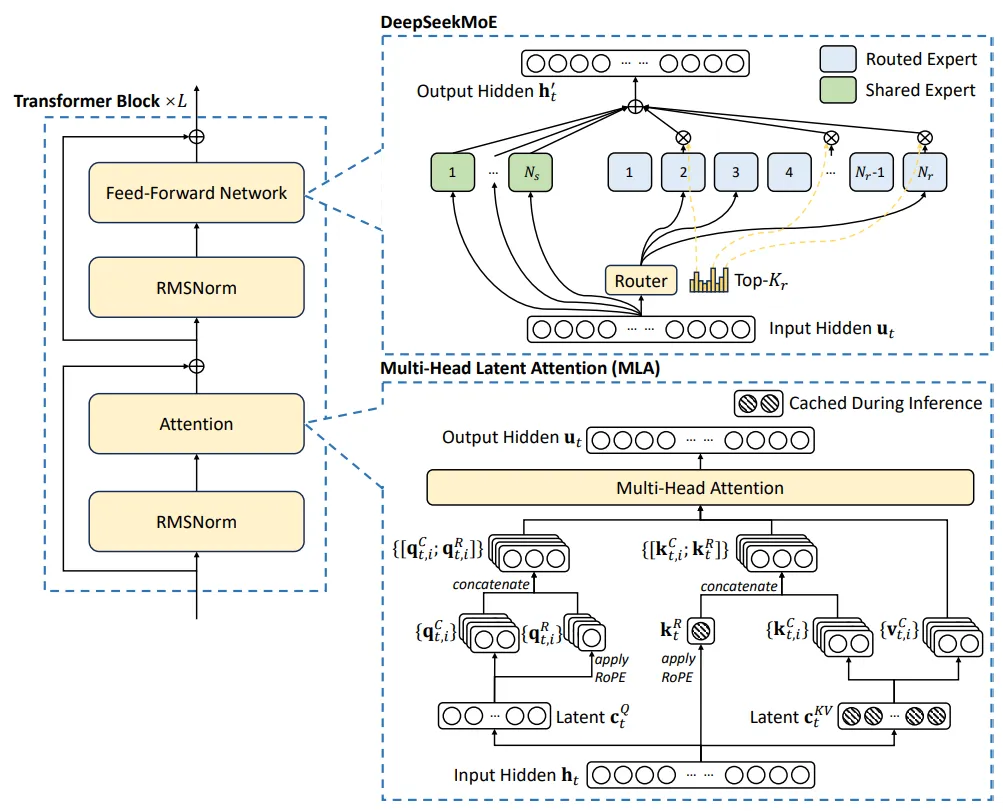

Model Architecture: DeepSeek R1 employs a transformer-based architecture optimized for reasoning tasks. Its training methodology builds on DeepSeek V3 and includes multiple steps: large-scale reinforcement learning, supervised fine-tuning, and a curated dataset of chain-of-thought examples. What sets it apart is the combination of three key elements, which significantly improve efficiency and problem-solving depth:

Mixture-of-Experts (MoE): This technique dynamically selects a subset of specialized neural network "experts" for each input, reducing computation while improving efficiency.

Multi-Head Latent Attention (MLA): The core idea behind MLA is low-rank joint compression for attention keys and values, which helps optimize the Key-Value (KV) cache during inference.

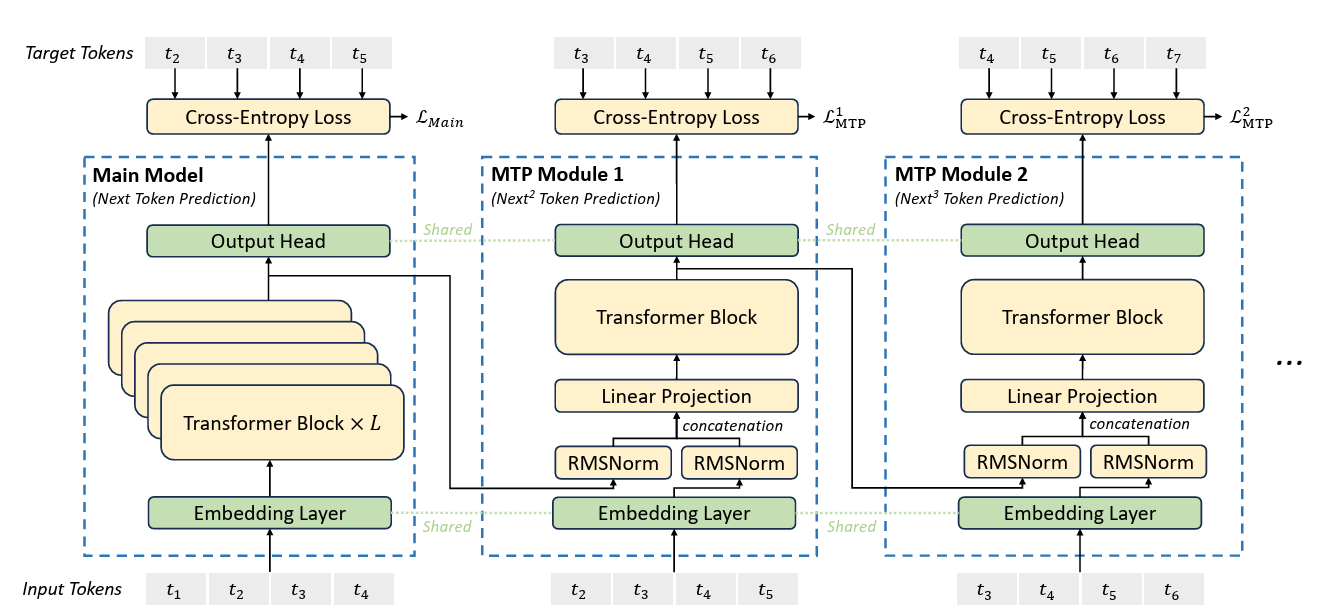

Multi-Token Prediction (MTP): Enables the model to predict multiple future tokens at once, rather than only the next token.
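The Mixture-of-Experts idea described above can be illustrated with a generic top-k router. This is a minimal sketch using softmax gating over toy experts; it is not DeepSeek's actual routing implementation, and the expert functions, gate logits, and function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_logits, k=2):
    """Run only the k selected experts and combine their outputs."""
    out = [0.0] * len(x)
    for idx, weight in route_top_k(gate_logits, k):
        y = experts[idx](x)  # the other experts are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Four toy "experts"; in a real model each would be a feed-forward network.
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
    lambda v: [a * a for a in v],
]
gate_logits = [0.1, 2.0, -1.0, 1.0]  # hypothetical router scores for one token

selected = route_top_k(gate_logits, k=2)
output = moe_forward([1.0, 3.0], experts, gate_logits, k=2)
print(selected, output)
```

Only two of the four experts run for this input, which is where the compute savings come from: the total parameter count can grow with the number of experts while the per-token work stays tied to k.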

Figure 7: Basic architecture of DeepSeek-V3 (Source)

Figure 8: Illustration of our Multi-Token Prediction (MTP) implementation (Source)
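To see why compressing keys and values matters for the KV cache, the following back-of-the-envelope sketch compares how many values are cached per token and per layer under standard multi-head attention and under a latent-compression scheme in the spirit of MLA. The dimensions (128 heads, head size 128, a 512-dimensional latent, plus a 64-dimensional positional key) are assumptions recalled from the DeepSeek-V3 technical report and should be treated as illustrative; the function names are hypothetical.

```python
def kv_elems_standard(n_heads, head_dim):
    """Standard multi-head attention caches a key and a value per head."""
    return 2 * n_heads * head_dim


def kv_elems_latent(latent_dim, rope_dim):
    """Latent compression caches one shared latent vector plus a small
    positional key, from which keys and values are re-projected."""
    return latent_dim + rope_dim


def cache_bytes(elems_per_token_layer, n_layers, n_tokens, bytes_per_elem=2):
    """Total cache size for one sequence (2 bytes per element assumes 16-bit storage)."""
    return elems_per_token_layer * n_layers * n_tokens * bytes_per_elem


# Assumed dimensions; verify against the model's published configuration.
N_HEADS, HEAD_DIM = 128, 128
LATENT_DIM, ROPE_DIM = 512, 64

standard = kv_elems_standard(N_HEADS, HEAD_DIM)   # 32768 values per token per layer
latent = kv_elems_latent(LATENT_DIM, ROPE_DIM)    # 576 values per token per layer
reduction = standard / latent

print(standard, latent, round(reduction, 1))
```

Under these assumed dimensions the cache shrinks by a factor of roughly 57 per token and per layer, which translates directly into longer contexts or larger batches on the same hardware.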

Training Data: DeepSeek R1 was trained on a combination of two proprietary datasets that are not publicly available. One dataset adds reasoning capabilities, while the other enhances general-purpose tasks:

Reasoning: cold-start chain-of-thought data used to fine-tune DeepSeek V3.

Non-reasoning: labeled data for the subsequent supervised fine-tuning step to enhance general-purpose tasks such as writing, translation or factual QA.

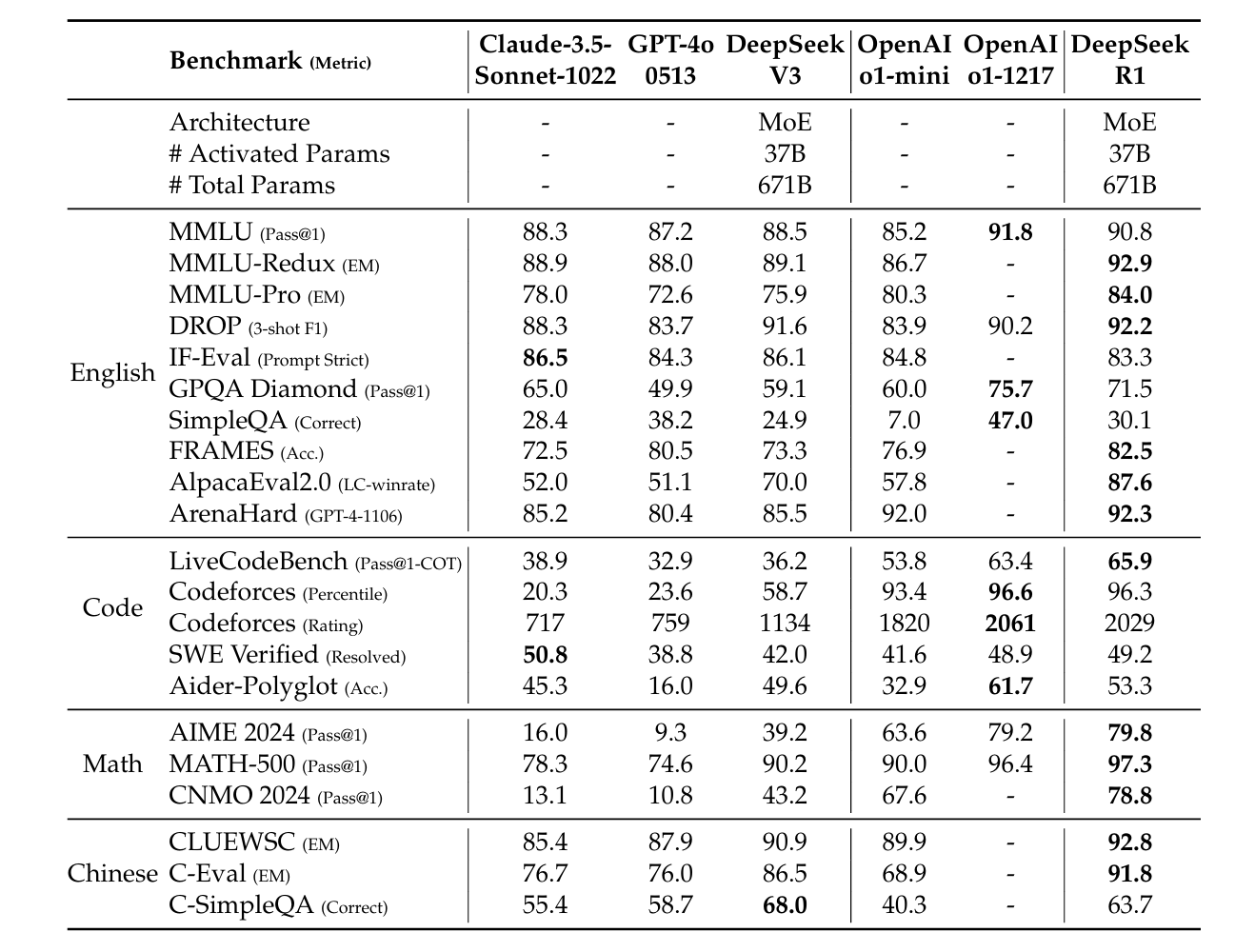

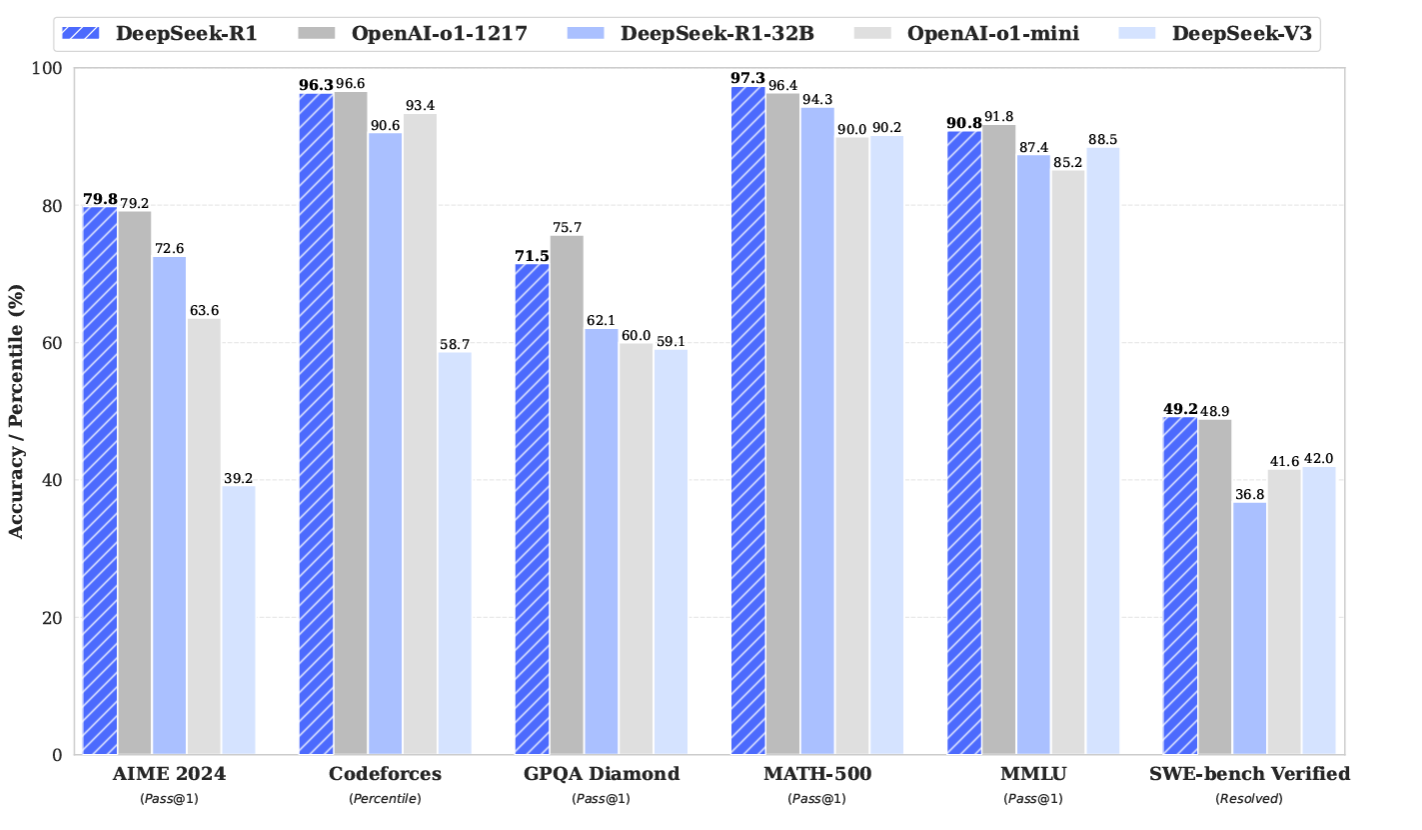

Performance Benchmarks: DeepSeek R1 excels in tasks requiring reasoning and deep analytical thinking, achieving 79.8% on AIME and 97.3% on MATH-500, and ranking in the 96.3rd percentile on Codeforces. It also performed strongly in general knowledge benchmarks, with 90.8% on MMLU and 71.5% on GPQA Diamond.

Figure 9: Performance Benchmarks DeepSeek (Source)

Use Cases and Application Areas: Due to its strong performance in math and coding tasks, DeepSeek R1 is well suited for scientific research, software development, and academic education. Its open-source nature makes it accessible to a wide range of users and industries at a low cost.

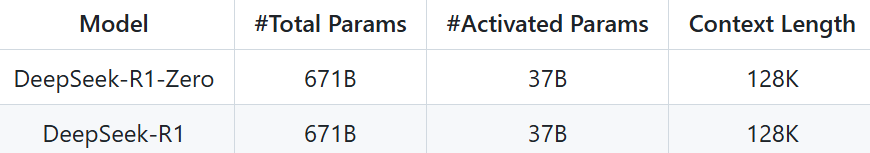

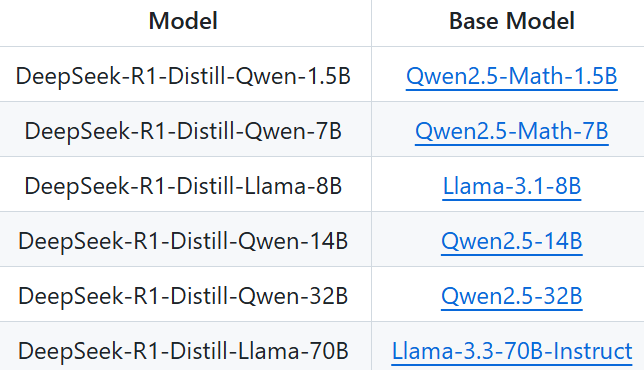

Model Versions

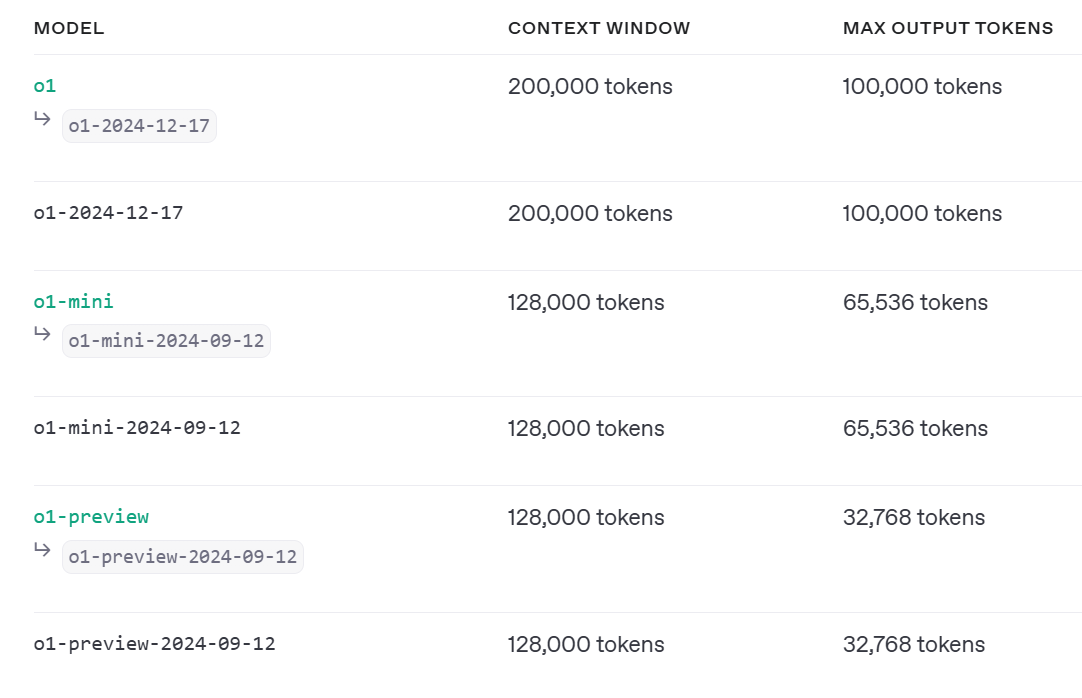

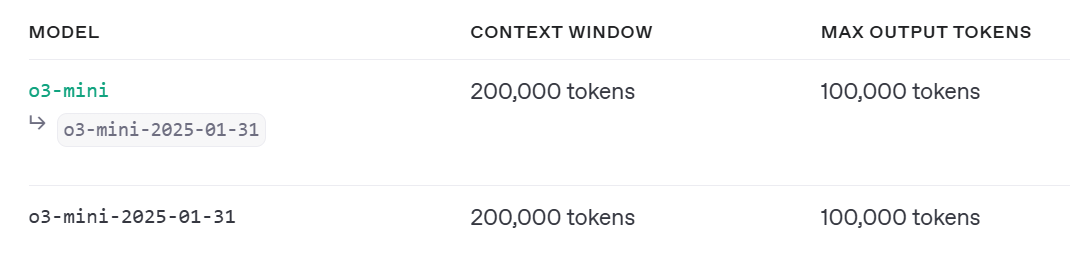

OpenAI has released three versions of o1 (preview, mini, and full) and one version of o3-mini; these differ in context window size and maximum output tokens. DeepSeek, meanwhile, has introduced two R1 models: DeepSeek R1-Zero and DeepSeek R1. The initial Zero version represented their first training attempt, excelling in reasoning benchmarks but facing challenges with readability and language mixing. DeepSeek has also developed distilled models by fine-tuning open-source models such as Qwen and Llama.

Figure 10: OpenAI o1 model versions (Source)

Figure 11: OpenAI o3-mini model versions (Source)

Figure 12: DeepSeek R1 model versions (Source)

Figure 13: DeepSeek R1 distill model versions (Source)

Cost Comparison

Pricing is a crucial factor when selecting an AI model for specific use cases. Below is a comparison of the costs associated with OpenAI o1, OpenAI o1-mini, OpenAI o3-mini, and DeepSeek R1, including input and output token pricing per 1 million tokens, as well as cached input prices.

| Model | Cached Input Price (per 1M tokens) | Input Token Price (per 1M tokens) | Output Token Price (per 1M tokens) |
| --- | --- | --- | --- |
| OpenAI o1 | $7.50 | $15.00 | $60.00 |
| OpenAI o1-mini | $0.55 | $1.10 | $4.40 |
| OpenAI o3-mini | $0.55 | $1.10 | $4.40 |
| DeepSeek R1 | $0.14 | $0.55 | $2.19 |

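This table highlights the cost differences between the models. DeepSeek R1 offers significantly lower pricing: its input and output tokens cost roughly half as much as those of OpenAI's most affordable models (o1-mini and o3-mini), and its cached input price is about a quarter of theirs. Compared with OpenAI o1, the gap is more than 25x on both input and output tokens, which makes DeepSeek R1 the more cost-efficient option at scale.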
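To turn the per-token prices into a budget figure, the following sketch estimates the cost of a workload from the prices listed in the table. The function name and the example workload (10 million input tokens and 2 million output tokens, with no cache hits) are hypothetical; for reasoning models, the output count should include any reasoning tokens that the provider bills as output.

```python
# Prices in USD per 1 million tokens, copied from the table in this section.
PRICES_PER_1M = {
    "OpenAI o1": {"cached": 7.50, "input": 15.00, "output": 60.00},
    "OpenAI o1-mini": {"cached": 0.55, "input": 1.10, "output": 4.40},
    "OpenAI o3-mini": {"cached": 0.55, "input": 1.10, "output": 4.40},
    "DeepSeek R1": {"cached": 0.14, "input": 0.55, "output": 2.19},
}


def estimate_cost(model, input_tokens, output_tokens, cached_tokens=0):
    """Estimate the USD cost of a workload.

    cached_tokens is the part of input_tokens served from the prompt cache
    and billed at the cached rate instead of the regular input rate.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price = PRICES_PER_1M[model]
    uncached = input_tokens - cached_tokens
    total = (
        uncached * price["input"]
        + cached_tokens * price["cached"]
        + output_tokens * price["output"]
    )
    return total / 1_000_000


# Hypothetical workload: 10M input tokens, 2M output tokens, no cache hits.
for model in PRICES_PER_1M:
    print(f"{model}: ${estimate_cost(model, 10_000_000, 2_000_000):.2f}")
```

For this example workload the estimate comes to about $270 for OpenAI o1, $19.80 for o3-mini, and $9.88 for DeepSeek R1, which matches the ratios described above.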

Comparison Table: DeepSeek R1 vs. OpenAI o1 vs. OpenAI o3-mini

To provide a clearer comparison, the table below outlines key features, performance benchmarks, areas of application, and cost considerations. OpenAI's key strength lies in the low latency of the o3-mini model, while DeepSeek R1 stands out for its cost efficiency.

| Feature | OpenAI o1 | OpenAI o3-mini | DeepSeek R1 |
| --- | --- | --- | --- |
| Release Date | Sep 2024 (preview), Dec 2024 (full) | Jan 2025 | Jan 2025 |
| Architecture | Transformer-based, chain-of-thought reasoning | Transformer-based, chain-of-thought optimized for low latency | Transformer-based, MoE, MLA, MTP |
| Training Data | Public + proprietary | Public + proprietary | Proprietary |
| Benchmarks | 83% (AIME), 89th percentile (Codeforces), 79.7% (PhD-level STEM accuracy) | 87.3% (AIME), 79.7% (Science), 49.3% (Coding) | 79.8% (AIME), 97.3% (MATH-500), 96.3rd percentile (Codeforces) |
| Latency | Higher due to extended reasoning | Lower latency, faster responses | Moderate latency |
| Use Cases | Scientific research, software development, healthcare, education | Financial analysis, real-time decision-making, software development, research | Scientific research, academic education, software development |
| Open-Source | No | No | Yes |
| Cost Efficiency | High-cost proprietary model | Lower-cost proprietary model | Lowest operational cost |

Figure 14: Benchmark performance comparison DeepSeek vs. OpenAI (Source)

Because of its open-source nature, DeepSeek R1 is available on multiple platforms, including Hugging Face and Ollama, while OpenAI models are integrated into various enterprise solutions and cloud platforms. OpenAI has not released the weights of its models, so users cannot download them, or a fine-tuned variant, from the OpenAI platform. DeepSeek also maintains a curated list of integrations in a public repository, including LiteLLM, Langfuse, and Ragflow. Additionally, DeepSeek R1 can be found on AWS and Azure.
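For hosted access, both vendors expose chat-style HTTP APIs, so switching between them can be mostly a matter of changing the endpoint and the model name. The sketch below only builds the request and does not send it. The endpoint URLs, the model identifiers (`o3-mini`, `deepseek-reasoner`), and the `reasoning_effort` field are assumptions based on each provider's public documentation and may change; the helper name `build_chat_request` is hypothetical.

```python
import json

# Assumed endpoints and model identifiers; verify against each provider's docs.
PROVIDERS = {
    "openai": {
        "url": "https://api.openai.com/v1/chat/completions",
        "model": "o3-mini",
    },
    "deepseek": {
        "url": "https://api.deepseek.com/chat/completions",
        "model": "deepseek-reasoner",
    },
}


def build_chat_request(provider, prompt, api_key, reasoning_effort=None):
    """Build (url, headers, json_body) for a single-turn chat request.

    Nothing is sent over the network; pass the result to any HTTP client.
    """
    cfg = PROVIDERS[provider]
    body = {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    if reasoning_effort is not None:
        # In this sketch the effort setting is only attached for OpenAI.
        if provider != "openai":
            raise ValueError("reasoning_effort is only set for 'openai' here")
        body["reasoning_effort"] = reasoning_effort
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return cfg["url"], headers, json.dumps(body)


url, headers, body = build_chat_request(
    "openai", "Prove that 17 is prime.", "YOUR_API_KEY", reasoning_effort="high"
)
print(url)
print(body)
```

Keeping the provider details in one table makes it easy to run the same prompts against both models when comparing quality, latency, and cost for a specific use case.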

Conclusion

As we move further into the age of AI, the competition between leading models like OpenAI's o1 and o3-mini, and newcomers like DeepSeek R1, is only going to intensify. OpenAI's models have proven their effectiveness in a wide variety of applications, especially where high-level reasoning, scalability, and robust performance are essential. Their innovation, speed, and advanced features set a high bar for the industry.

However, DeepSeek R1 offers a compelling alternative, especially for those seeking to leverage advanced AI capabilities without the high operational costs associated with other models. Its open-source nature and impressive performance benchmarks make it an attractive option for organizations and developers looking for a cost-effective solution without sacrificing the depth of reasoning and problem-solving capabilities.

Ultimately, the choice between these models comes down to your specific use case. If you're working on high-complexity tasks that demand rigorous, step-by-step reasoning, OpenAI o1 might be the best fit. If speed and cost-efficiency are more important, especially in STEM-related fields or financial applications, OpenAI o3-mini might be the right choice. For those seeking an open-source, budget-friendly solution that still delivers exceptional performance in tasks like math and software development, DeepSeek R1 presents an excellent alternative.
