Semantic Search with Milvus and OpenAI

In this guide, we’ll explore semantic search capabilities through the integration of Milvus and OpenAI’s Embedding API, using a book title search as an example use case.

Read the entire series

- Effortless AI Workflows: A Beginner's Guide to Hugging Face and PyMilvus

- Building a RAG Pipeline with Milvus and Haystack 2.0

- How to Pick a Vector Index in Your Milvus Instance: A Visual Guide

- Semantic Search with Milvus and OpenAI

- Efficiently Deploying Milvus on GCP Kubernetes: A Guide to Open Source Database Management

- Building RAG with Snowflake Arctic and Transformers on Milvus

- Vectorizing JSON Data with Milvus for Similarity Search

- Building a Multimodal RAG with Gemini 1.5, BGE-M3, Milvus Lite, and LangChain

Introduction

Semantic, or similarity, search has quietly emerged as one of the most exciting digital innovations of recent times. While many people are unfamiliar with the concept, they benefit from semantic search technology daily, as it’s been incorporated into leading search engines, such as Google, and increasing numbers of eCommerce sites and web applications.

A semantic search works by matching the semantic similarity, i.e., likeness, of a query against data points instead of simply matching keywords with conventional search methods. This generates more comprehensive search results than traditional keyword search because it returns content that’s contextually relevant to the search terms instead of just results that are a literal match.

By interpreting natural language, semantic search enables applications to better understand the intent behind a user’s query and return better results. This efficiency allows users to find what they’re looking for with greater accuracy, saving time, preventing frustration, and enhancing the overall user experience.

In this guide, we’ll explore semantic search capabilities through the integration of Milvus and OpenAI’s Embedding API, using a book title search as an example use case. We’ll take you through how to create a semantic search application step-by-step, including entering data into a vector database, creating embeddings for a dataset, data indexing for efficient retrieval, and querying Milvus.

Prerequisites

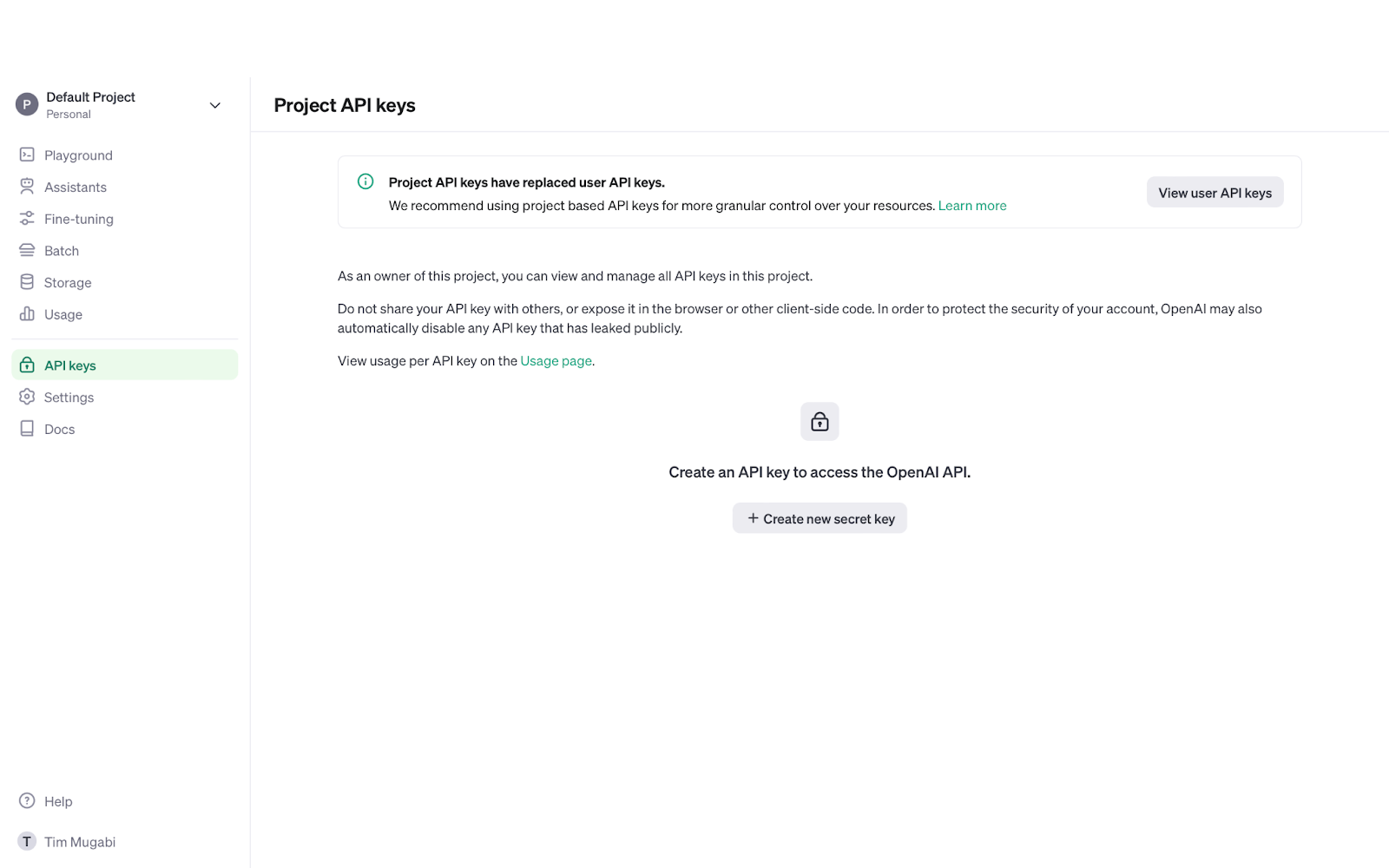

The first step in building a semantic search application is initializing an instance of Milvus and obtaining an embedding API key from OpenAI.

To run a Milvus instance, first start docker (if you don’t have docker installed, download it from their documentation), and then download and start Milvus as follows:

wget https://raw.githubusercontent.com/Milvus-io/Milvus/master/scripts/standalone_embed.sh

bash standalone_embed.sh start

Next, to get your embedding API key, visit the OpenAI website, create an account (or log into an existing account), select API Keys from the menu, and then click the Create new secret key button.

Setting Up Your Environment

Having downloaded Milvus and obtained an API key, the next step is setting up your development environment by installing the necessary Python programming packages.

For the semantic search book title search project, you’ll need to install the PyMilvus SDK, to connect to your Milvus database, and the OpenAI library, to connect to the embedding API.

You can do this with the code below:

pip install pymilvus openai

Step 1: Preparing the Data

With your environment set up, it is time to prepare your data for embedding and indexing.

In this example, we’ll import the data from a CSV file; you can download a CSV with the book title search data.

Let's create a function to load book titles:

import csv

def csv_load(file):

with open(file, newline='') as f:

reader = csv.reader(f, delimiter=',')

return [row[1] for row in reader]

Step 2: Generating Embeddings

Now that you’ve loaded the book titles from the CSV file, you need to generate embeddings for them through OpenAI’s embeddings API. For this, you’ll need the embedding API key you obtained earlier, inserting it where indicated in the code below.

Vector embeddings are mathematical representations of data that machines can interpret and process more efficiently. Each embedding has a certain number of dimensions ( in this case, 1536 as that’s the number of dimensions of the embeddings produced by chosen embedding model - text-embedding-3-small) that represent different characteristics that are used to determine its semantic relevance to other embeddings.

For example, in the title “The White Tiger”, the word “Tiger” is not only an animal but also a type of big cat, so this will be represented by one of its vector values. Additionally, the embedding will encode other features such as the fact it's a mammal, has 4 legs, stripes, etc. Consequently, the title will appear in the results for queries related to big cats, animals, mammals, etc.

An embedding model determines the similarity between vectors by measuring the distance between them in a high-dimensional vector space; the closer the distance between two vectors, the greater the similarity between them.

You can generate embeddings using OpenAI’s embedding API as follows:

import openai

client = OpenAI()

client.api_key = 'your_api_key_here'

def embed(text):

response = client.embeddings.create(

input=text,

model='text-embedding-3-small'

)

return response.data[0].embedding

We’ve opted for text-embedding-3-smallas our embedding model because it’s the most cost and resource-efficient of OpenAI’s embedding models. Please refer to OpenAI’s documentation for more information on alternative embedding models and when you might use them.

Step 3: Indexing and Searching with Milvus

After generating your embeddings, the next step is inserting the dataset into the vector database, which requires two steps:

Creating a collection

Creating an index

A collection is akin to a table in a conventional relational database and is how Milvus groups vector embeddings for storage and management. To create a collection, we need to define its schema, the data’s structure or format, which is composed of:

ID (the primary field, which is assigned automatically)

Book title

The title’s embedding

Once you’ve created a collection, you can create an index: a data structure that pre-calculates the semantic relevance between vectors and stores similar embeddings closer together for faster querying. Data indexing prevents the vector database from having to compare a query against every embedding to determine similarity - which is crucial as a collection grows increasingly larger.

The code snippet below inserts and indexes the dataset in Milvus:

from pymilvus import connections, Collection, FieldSchema, CollectionSchema, DataType

# Connect to Milvus

connections.connect(host='localhost', port='19530')

fields = [

FieldSchema(name='id', dtype=DataType.INT64, is_primary=True, auto_id=True),

FieldSchema(name='title', dtype=DataType.VARCHAR, max_length=255),

FieldSchema(name='embedding', dtype=DataType.FLOAT_VECTOR, dim=1536)

]

schema = CollectionSchema(fields, description='Book Titles')

collection = Collection(name='book_titles', schema=schema)

# Create an index

index_params = {

'index_type': 'IVF_FLAT',

'metric_type': 'L2',

'params': {'nlist': 1024}

}

collection.create_index(field_name="embedding", index_params=index_params)

# Insert data

collection.insert([{'title': title, 'embedding': embed(title)} for title in collection])

In the code snippet above, we’ve initialized the index with several parameters: the most important of which are index_type and metric_type.

For index type, we’ve chosen IVF_FLAT because it strikes a good balance between speed and accuracy while consuming less memory than other data indexing types. It divides the vector space in which the embeddings are stored into a number of clusters (defined by the nlist parameter, in this case, 1024), which allows for efficient approximate nearest neighbor (ANN) searching.

metric type defines the algorithm used to measure the similarity between embeddings when data indexing, which in our example is L2 (Euclidean distance). L2 is the most commonly used distance metric because, much like IVF_FLAT, it’s efficient and simple to interpret.

Advanced Querying Techniques

With the dataset successfully indexed, you can now perform semantic searches on the book titles in your vector database.

Just as we embedded the data, we also have to embed the query for Milvus to perform a semantic search. Additionally, as with data indexing, you have to define a few search parameters. The metric type of L2 matches that of the index, while nprobe defines how many clusters the vector database will search through during the query. Subsequently, the higher the value of nprobe, the more comprehensive the search - but the higher the computational load.

You also have the option of setting a limit on the number of results returned by the search operation, which we’ve set as 5. Lastly, we have output_fields, which is an essential parameter as it determines the data returned by the search operation; in this case, we’ve configured it to return the data point’s score and title.

Here’s an example search operation:

collection.load()

def search(query):

embedded_query = embed(query)

search_params = {"metric_type": "L2", "params": {"nprobe": 10}}

results = collection.search([embedded_query], "embedding", search_params, limit=5, output_fields=['score', 'title'])

return results

search_term ='self-improvement’

print("Search term" + search_term + + ":")

for result in search(search_term):

print(result)

Which should produce output similar to below:

Search term: self-improvement

[ 0.37948882579803467, 'The Road Less Traveled: A New Psychology of Love Traditional Values and Spiritual Growth']

[0.39301538467407227, 'The Leader In You: How to Win Friends Influence People and Succeed in a Changing World']

[0.4081816077232361, 'Think and Grow Rich: The Landmark Bestseller Now Revised and Updated for the 21st Century']

[ 0.4174671173095703, 'Great Expectations']

[0.41889268159866333, 'Nicomachean Ethics']

The floating point value that precedes the book title is the embedding’s score, i.e., the distance from the data point from the query embedding. The lower the score, the shorter the distance between the vectors and the greater their semantic relevance.

Conclusion

To recap, the steps for creating a semantic search application with Milvus and OpenAI are as follows:

Prerequisites: install and start an instance of Milvus and obtain an embedding API key from OpenAI

Setting Up Your Environment: install the pymilvus and openai libraries

Step 1: Preparing the Data: download and load the CSV file containing the book titles

Step 2: Generating Embeddings: generate vector embeddings through the OpenAI API

Step 3: Indexing and Searching with Milvus: create a collection and index in which to insert the embedding data

Advanced Querying Techniques: query the vector database

While semantic search has been employed for over a decade, we’re only scratching the surface of its vast potential. As the AI field continues to rapidly progress, semantic search capabilities will grow more precise and efficient - enabling superior user experiences, increasing productivity, and allowing for faster decision-making.

To increase your understanding of semantic search, and better tailor it to your specific use case, we encourage you to experiment with different parameters, i.e., embedding model, indexing type, similarity metric, etc.

To learn more about vector bases, learning language models (LLMs), and other key AI and machine learning concepts, visit the Zilliz Learn knowledge base.

Further Resources

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

How to Pick a Vector Index in Your Milvus Instance: A Visual Guide

In this post, we'll explore several vector indexing strategies that can be used to efficiently perform similarity search, even in scenarios where we have large amounts of data and multiple constraints to consider.

Building RAG with Snowflake Arctic and Transformers on Milvus

This article explored the integration of Snowflake Arctic with Milvus.

Building a Multimodal RAG with Gemini 1.5, BGE-M3, Milvus Lite, and LangChain

Multimodal RAG extends RAG by accepting data from different modalities as context. Learn how to build one with Gemini 1.5, BGE-M3, Milvus, and LangChain.