Practical Tips and Tricks for Developers Building RAG Applications

Vector Search is Not Effortless!

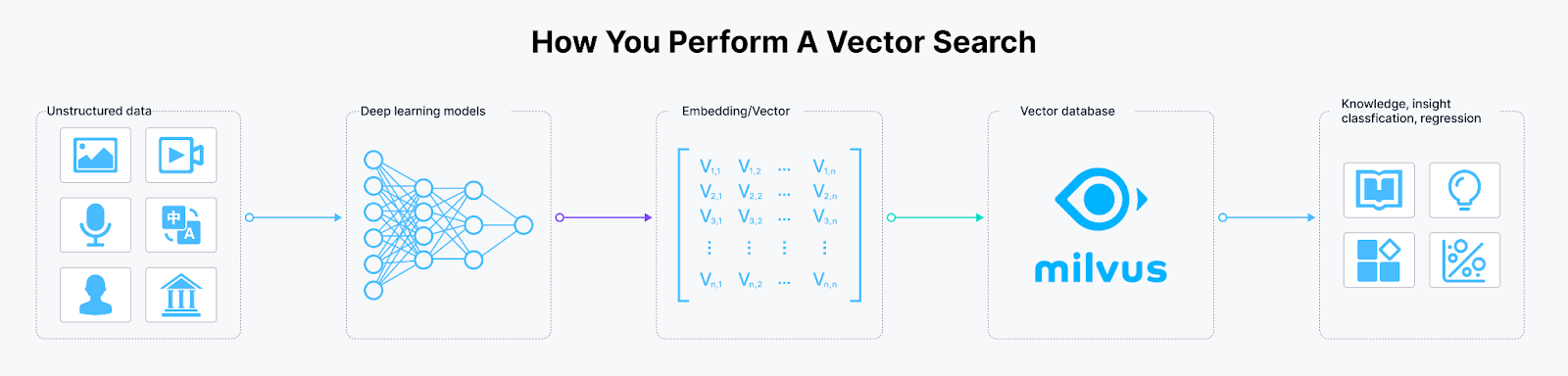

Vector search, also known as vector similarity search or nearest neighbor search, is a technique used in data retrieval for RAG applications and information retrieval systems to find items or data points that are similar or closely related to a given query vector. It is often marketed as straightforward when handling large datasets. The general perception is that you can simply feed data into an embedding model to generate vector embeddings and then transfer these vectors into your vector database to retrieve the desired results.

how to perform a vector search

how to perform a vector search

Many vector database providers promote their capabilities with descriptors like "easy," "user-friendly," and "simple." They assert that you can achieve significant outcomes with just a few lines of code, sidestepping the complexities of machine learning, AI, ETL processes, or detailed system tuning.

And they are right; vector search is as effortless as using a basic numerical library like NumPy. To demonstrate this concept, I wrote a short demo in just ten lines of Python code using the k-nearest neighbors algorithm (KNN). This straightforward approach is effective and accurate for small-scale applications with datasets of up to a thousand or ten thousand vectors.

import numpy as np

# Function to calculate Euclidean distance

def euclidean_distance(a, b):

return np.linalg.norm(a - b)

# Function to perform KNN

def knn(data, target, k):

# Calculate distances between target and all points in data

distances = [euclidean_distance(d, target) for d in data]

# Combine distances with data indices

distances = np.array(list(zip(distances, range(len(data)))))

# Sort by distance

sorted_distances = distances[distances[:, 0].argsort()]

# Get the top k closest indices

closest_k_indices = sorted_distances[:k, 1].astype(int)

# Return the top k closest vectors

return data[closest_k_indices]

However, this approach won't work when your dataset grows to a modest level of over a million or ten million vectors. That is simply because real-world applications must interface with users, be available, and are always way more complicated. Building a scalable real-world application requires thoroughly considering various factors beyond the coding, including search quality, scalability, availability, multi-tenancy, cost, security, and more!

So, let's be honest. Remember the adage, "It works on my machine?" Vector search is no different, where your prototype always works; vector search in production is often complex, so what are the best practices for building a vector search-powered application in production?

To help you navigate these challenges, we'll share three essential tips for effectively deploying your vector database in your RAG application production environment with Milvus:

Design an effective schema: Carefully consider your data structure and how it will be queried to create a schema that optimizes performance and scalability.

Plan for scalability: Anticipate future growth and design your architecture to accommodate increasing data volumes and user traffic.

Select the optimal index and fine-tune performance: Choose the most suitable indexing method for your use case and continuously monitor and adjust performance settings.

By following these best practices, you'll be well on your way to building a robust and efficient vector search-powered application. In the comments below, share your experiences and any additional tips you've found helpful!

Designing an Effective Schema Strategy

A schema defines the structure of a database, including the tables, fields, relationships, and data types. This organized framework ensures data is stored consistently and predictably, simplifying management, querying, and maintenance. Selecting an appropriate schema is particularly critical for vector databases like Milvus, which handle vectors and various structured data types, including metadata and scalar data. This data can enhance filtered search and improve overall search outcomes. This section will explore key factors to consider when choosing the most effective schema strategy.

Dynamic vs. Fixed Schema

In database systems, dynamic and fixed schemas represent two primary approaches to structuring data. Dynamic schemas offer flexibility, simplifying data insertion and retrieval without the need for extensive data alignment or ETL processes. This approach is perfect for applications requiring rapid data structure changes. On the other hand, developers value fixed schemas for their performance efficiency and memory conservation, thanks to their compact storage formats.

A hybrid schema approach can benefit developers working on efficient vector database applications. This method combines the robustness of fixed schemas for essential data pathways with the flexibility of dynamic schemas to accommodate diverse use cases. For example, in a recommender system, elements like product names and product IDs may vary in importance depending on the context. By employing a hybrid schema, developers can ensure optimal performance where needed while maintaining the ability to adapt to changing data requirements.

Setting Primary Keys and Partition Keys

Primary and partition keys are two important concepts in vector databases. Using the Milvus vector database as an example, we can delve deeper into how these keys function within vector databases.

The Milvus architecture segments data into several components: there are fixed and dynamic fields (collectively referred to as the payload), a compulsory vector field, and system fields like timestamps and universally unique identifiers (UUIDs), which are similar to those found in conventional relational databases.

Primary Keys: In Milvus, the primary key often serves as a unique identifier, which in a RAG use case may be applied to a chunk ID. This key is accessed frequently and can be configured to auto-generate. It plays a role in quickly locating and retrieving specific data entries within the database.

Partition Keys: When you create a collection in Milvus, you can specify a partition key. This key allows Milvus to store data entities in different partitions based on their key values, effectively organizing data into manageable segments. A simple way to think about partition keys is to consider using a partition key if there are data sets you want to filter on. For example, data isolation and efficient distribution are necessary in multi-tenant situations, so storing them in separate partitions can help achieve this. Partition keys are also useful for scalability, as partitioning data into shards via hashing enables the database to manage large-scale user bases and multi-tenancy more efficiently.

Both primary and partition keys are fundamental in maintaining the structural integrity and operational efficiency of vector databases, making them indispensable for handling extensive datasets and ensuring swift data access and retrieval.

Choosing Vector Embedding Types

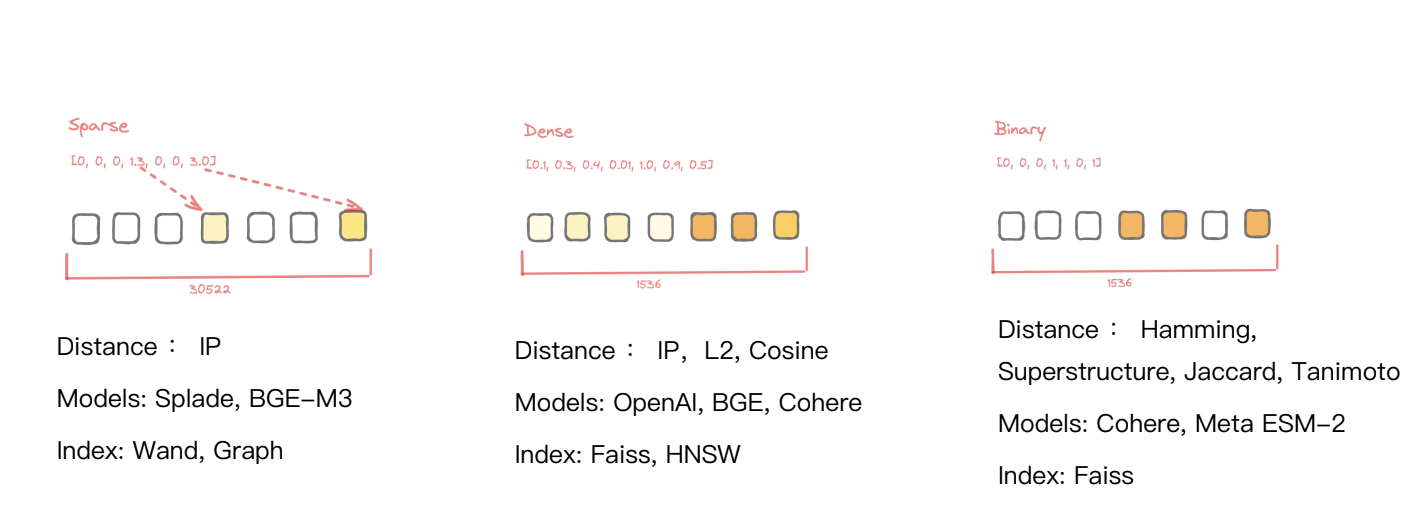

When choosing vector embeddings for RAG applications, it's a must to choose the right ML model for vector creation and understand the different embedding types available: dense, sparse, and binary embeddings.

Three popular categories of vector embeddings

Three popular categories of vector embeddings

Dense Embeddings are the most commonly used type in vector database applications for semantic similarity search. They are known for their robustness and general applicability across various data types. Popular dense embedding models include OpenAI, BGE, and Cohere.

Sparse embeddings are gaining popularity for their efficiency in searching out-of-domain data. Recent advancements in models such as Splade and BGE M3 have enhanced their utility in heterogeneous searches, making them a versatile choice for diverse applications.

Binary embeddings, characterized by their binary format (zeros and ones), are designed to be memory-efficient, making them ideal for special use cases like protein sequencing. Models like Meta ESM-2 are typically used to generate these embeddings, providing targeted solutions for specific search needs.

To ensure the accuracy of search results for RAG applications, we need to use more than just dense embeddings. Therefore, we need to find solutions that support different indexing algorithms to efficiently and effectively search different vector embedding types. Milvus supports various indices for managing dense, sparse, binary, and even hybrid sparse and dense embeddings, enabling efficient searches across various data dimensions and ensuring optimal performance in vector database applications.

Designing Your Schema: A Practical Example

Let's bring these elements together that we just reviewed to help us effectively design a schema architecture that will increase the accuracy of our search results:

| Field Name | Type | Description | Example Value |

|---|---|---|---|

| chunkID | Int64 | Primary key, uniquely identifies different parts of a document | 123456789 |

| userID | Int64 | Partition key, data partitioning is based on userID to ensure searches occur within a single userID | 987654321 |

| docID | Int64 | Unique identifier for a document, used to associate different chunks of the same document | 555666777 |

| chunkData | varchar | A part of the document, containing several hundred bytes of text | "This is a part of the document..." |

| dynamicParams | JSON | Stores dynamic parameters of the document, such as name, source URL, etc. | {"name": "Example Document", "source": "example.com"} |

| sparseVector | Specific format | Data representing a sparse vector. Specific format will have non-zero values only in certain positions to represent sparsity. | [0.1, 0, 0, 0.8, 0.4] |

| denseVector | Specific format | Data representing a dense vector. Specific format will have a fixed number of dimensions with values in each. | [0.2, 0.3, 0.4, 0.1] |

A schema demo for a typical Retrieval Augmented Generation (RAG) application

Examining this schema, it's crucial to note the presence of additional fields, extending beyond the primary key and vector field. These extra fields play a role in constructing and utilizing your vector database. Let's dig in:

The basics: These include your primary key (chunkID) and your embeddings (denseVector), which every entry in the database needs.

Multi-tenancy support: We've added a field to partition data by the tenants of our solution with the addition of a userID. This addition helps segregate and manage data access on a per-user basis, enhancing security and personalization.

Refining your search results: We have added a few other fields to help us with this refinement, including:

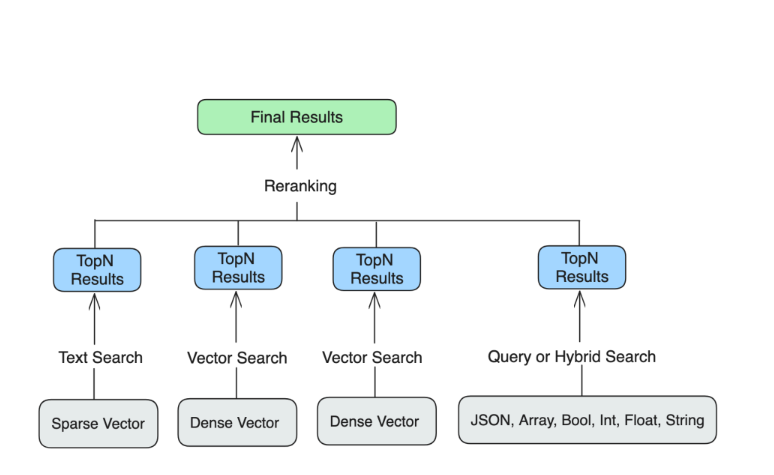

docID: This field indicates the origin of the chunk and can be used to leverage Milvus's grouping search feature. In our example, we split our document into chunks and stored the representative vector embedding in the denseVector field, and in this field (docID), we store the associated document information. You can include thegroup_by_fieldargument in the search() operation to group results by the document ID to find relevant documents instead of similar passages or chunks. This helps return the relevant documents rather than separate chunks from the same document.dynamicParams: This field can be filtered, allowing you to manage and retrieve data that suits your needs. It is a treasure trove of metadata, such as the document name, source URL, etc. We set the field json to store multiple key-value pairs in one field.sparseVector: This field holds the chunk's sparse embedding, which allows us to perform an ANN search and retrieve results based on a scalar value associated with the sparse vector.

The diagram shows that we can gather results from separate queries and then rerank them to refine the search results.

By designing a schema that includes these additional fields, you can create a more robust and flexible vector database that caters to your RAG application requirements. It allows you to leverage the strengths of vector search while incorporating traditional data management and retrieval techniques.

Plan for Scalability

Once you have found success with your MVP RAG application, it's time to start preparing for production deployment. This involves anticipating future growth and designing your architecture to accommodate increasing data volumes and user traffic.

To ensure your application can scale effectively, you need to be aware that scalability in vector databases poses unique challenges compared to traditional relational databases because data is stored in a large, centralized index. This setup can lead to two main issues: slow index speeds and degraded index quality due to frequent updates, which in turn can reduce search quality.

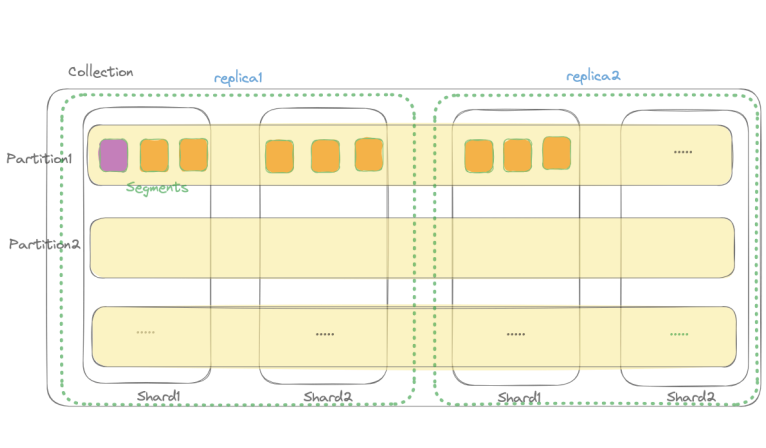

The sharding strategy of Milvus

The sharding strategy of Milvus

Milvus can handle these challenges by dividing the entire dataset into manageable segments, we can perform delayed updates or compact segments once they become unstable, maintaining consistent search quality. This segmentation facilitates effective load balancing, allowing us to distribute queries evenly across all processing cores.

Using partitions for multi-tenancy can also help with scalability and performance since searches are confined to partition-relevant data. This approach effectively organizes the data and enhances security and privacy by restricting visibility to suitable users. Furthermore, Milvus can efficiently manage up to ten billion data points in a single collection. That's a lot!

For multi-tenant applications with fewer than 10,000 tenants, managing data by collection provides greater data control. However, partition keys can effectively support unlimited tenants by dynamically segmenting data for services with millions of users.

Milvus is a distributed system that's designed to handle large query volumes with ease. And the best part? Simply adding more nodes can significantly boost performance, opening up a world of possibilities for your applications. For smaller datasets that don't initially require extensive resources, scaling up memory reserves—typically two to three times the current allocation—can effectively double the query per second (QPS). This scalable framework ensures that as your data grows, your database capabilities can grow along with it, ensuring efficient and reliable performance across the board.

Picking, Evaluating, and Tuning Your Index

During the prototype phase, loading all the data into memory is common for faster processing and easier development. However, as you move to production and your data grows, it becomes infeasible to store everything in memory. This is because:

Memory is limited and expensive compared to disk storage.

Large datasets may exceed the available memory capacity.

Loading all data into memory can significantly increase startup time and resource consumption.

To handle larger datasets efficiently in production, you need to choose an appropriate indexing strategy. The right index can optimize the performance of your RAG application in terms of query speed, storage requirements, and latency.

Indices Milvus supports

Indices Milvus supports

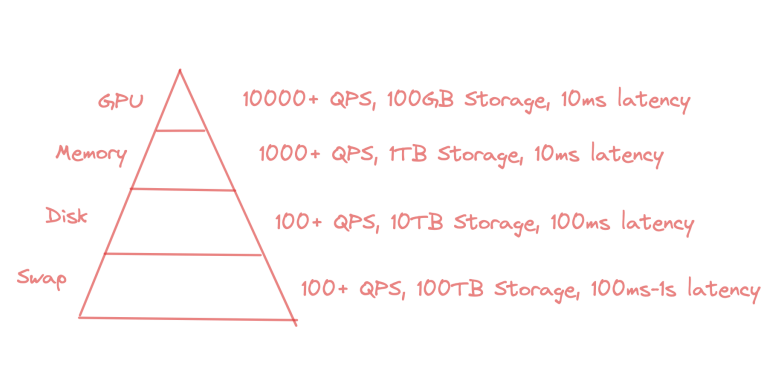

This diagram helps visualize the differences between various indexes based on three key metrics:

Queries per second (QPS): This measures how many search queries the index can handle per second, reflecting its throughput and efficiency.

Storage: This represents the amount of disk space required to store the index, which can impact infrastructure costs and scalability.

Latency refers to the time it takes to process a single query and return the results, which affects your application's responsiveness.

By comparing these metrics across different indexes, you can decide which index best suits your specific use case and performance requirements.

In Milvus, we provide a flexible index selection framework tailored to various storage and performance needs:

GPU Index is our premier option for high-performance environments, which supports rapid data processing and retrieval.

Memory Index is a middle-tier option that balances performance with capacity, offering solid query-per-second (QPS) rates and the ability to scale up to terabytes of storage with an average latency of around 10 milliseconds.

Disk Index can manage tens of terabytes with a reasonable latency of about 100 milliseconds, suitable for larger, less time-sensitive datasets. Milvus is the only open-source vector database that supports disk index.

Swap Index facilitates data swapping between S3 or other object storage solutions and memory. This approach significantly reduces costs—by approximately ten times—while effectively managing latency. Typical access times are around 100 milliseconds, but they may extend to a few seconds for less frequently accessed ("colder") data, making it viable for offline use cases and cost-sensitive applications.

After selecting an index, you can evaluate its performance based on its build time, accuracy, performance, and resource consumption. For instance, a non-optimized index might only support 20 queries per second without any construction required. Optimizing an index could enhance QPS significantly, potentially increasing it by tenfold with each iteration of tuning, albeit at the cost of increased view time.

To effectively select and fine-tune your index, you should:

Choose the appropriate index type based on your specific needs.

Tune the index parameters to optimize performance.

Benchmark your use cases to ensure the index performs as expected.

Adjust search parameters to further enhance performance.

If you find yourself unsure about the optimization process, leverage the power of benchmarking tools like VectorDBBench. This tool, developed and open-sourced by Zilliz, can evaluate all mainstream vector databases. It allows you to conduct comprehensive experiments and fine-tune your system for optimal performance.

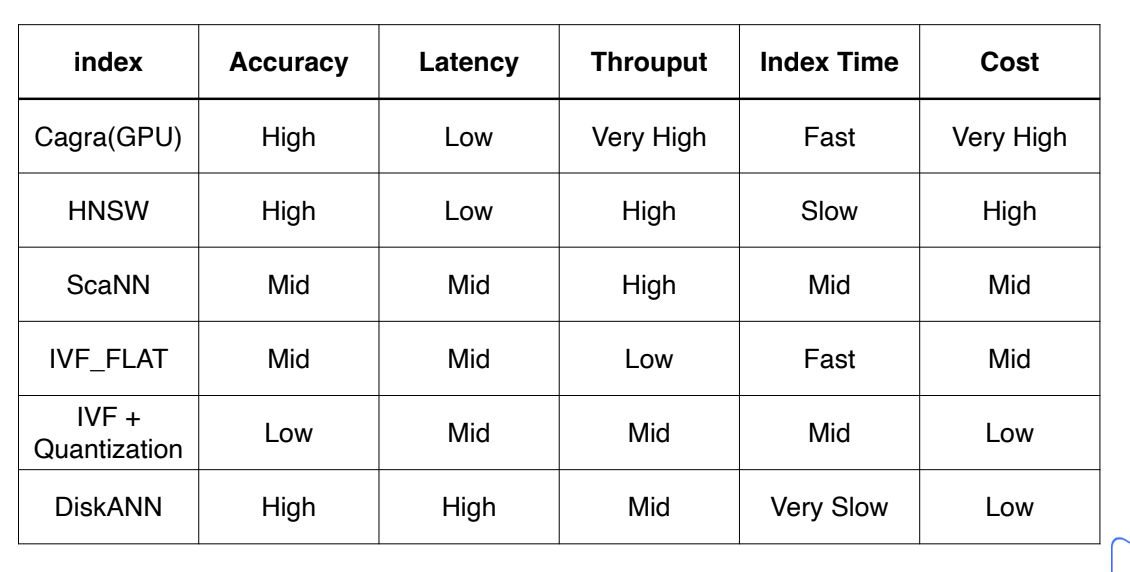

For a quick reference, we've prepared a handy cheat sheet that outlines the performance of each index in our GPU index catalog. This resource can help you optimize performance and cost-efficiency by guiding you to the best index match for your application's needs.

An index cheat sheet

An index cheat sheet

Summary

In this comprehensive guide, we've explored the multifaceted world of vector databases and the practical approaches required to maximize their efficiency and scalability. From the basics of designing a schema to the complexities of managing large data sets, we've covered the essential strategies and best practices that developers need to know when working with vector databases.

As vector databases evolve, staying informed about these aspects will empower developers to build more robust, efficient, and scalable applications. Whether you're a seasoned database professional or a newcomer to this field, the insights provided here will help you navigate the complexities of vector databases with greater confidence and competence.

- Vector Search is Not Effortless!

- Designing an Effective Schema Strategy

- Plan for Scalability

- Picking, Evaluating, and Tuning Your Index

- Summary

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Zilliz Cloud BYOC Now Available Across AWS, GCP, and Azure

Zilliz Cloud BYOC is now generally available on all three major clouds. Deploy fully managed vector search in your own AWS, GCP, or Azure account — your data never leaves your VPC.

Why Teams Are Migrating from Weaviate to Zilliz Cloud — and How to Do It Seamlessly

Explore how Milvus scales for large datasets and complex queries with advanced features, and discover how to migrate from Weaviate to Zilliz Cloud.

AI Integration in Video Surveillance Tools: Transforming the Industry with Vector Databases

Discover how AI and vector databases are revolutionizing video surveillance with real-time analysis, faster threat detection, and intelligent search capabilities for enhanced security.