Are CPUs Enough? A Review Of Vector Search Running On Novel Hardware

Vector search has emerged as a pivotal technology, enabling rapid and accurate retrieval of information in numerous applications, from recommendation systems to image recognition. Traditionally, CPUs have been the workhorses driving these operations. However, as data volumes soar and demands for speed and efficiency escalate, the question arises: Are CPUs enough to meet the burgeoning needs of modern AI-powered search?

In his recent talk at the Unstructured Data Meetup 2024, organized by Zilliz, George Williams, a computer vision expert at Smile Identity and NeurIPS BigANN Challenge organizer (2021, 2023), addressed whether CPUs are sufficient for vector search/ANN. He explored how new hardware solutions could revolutionize vector search, highlighting the intersection of advanced search algorithms and cutting-edge hardware, and offering insights into the future of data retrieval technologies.

In this blog, we will distill the key insights from the talk, examining the goals and outcomes of the NeurIPS BigANN competitions, where a Zilliz-based approach was one of the winners. We'll also delve into the practical challenges of implementing vector search, the innovations driving efficiency and performance, and the exciting new era of hardware that promises to transform the field.

NeurIPS BigANN competition and Zilliz's role and contributions

The NeurIPS BigANN competitions aim to push the boundaries of vector search technology, encouraging innovation and collaboration among researchers and industry leaders. Participants are tasked with developing solutions that maximize recall, Queries Per Second (QPS), power efficiency, and cost-effectiveness, providing a comprehensive evaluation of hardware performance in vector search tasks. The competitions are organized in collaboration with initiatives like the ANN-Benchmarks project, which provides a platform for submitting and evaluating vector search implementations, including prominent solutions like Milvus.
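To make the two headline metrics concrete, here is a minimal sketch of how recall and QPS are commonly computed. It is an illustration only, not the competition's evaluation harness; the function names `recall_at_k` and `queries_per_second` and the sample data are assumptions made for this example.

```python
import time


def recall_at_k(retrieved, ground_truth, k):
    """Average fraction of the true top-k neighbors that appear in the returned top-k."""
    hits = 0
    for found, truth in zip(retrieved, ground_truth):
        hits += len(set(found[:k]) & set(truth[:k]))
    return hits / (k * len(ground_truth))


def queries_per_second(search_fn, queries):
    """Run search_fn over all queries sequentially and report throughput."""
    start = time.perf_counter()
    results = [search_fn(q) for q in queries]
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return results, len(queries) / max(elapsed, 1e-12)


# Hypothetical data: two queries, k = 3. The first query misses one true neighbor.
retrieved = [[1, 2, 3], [4, 5, 6]]
ground_truth = [[1, 2, 9], [4, 5, 6]]
recall = recall_at_k(retrieved, ground_truth, k=3)  # 5 hits out of 6
```

Recall measures accuracy against exact nearest neighbors, while QPS measures throughput; a real benchmark would typically report one at a fixed threshold of the other.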

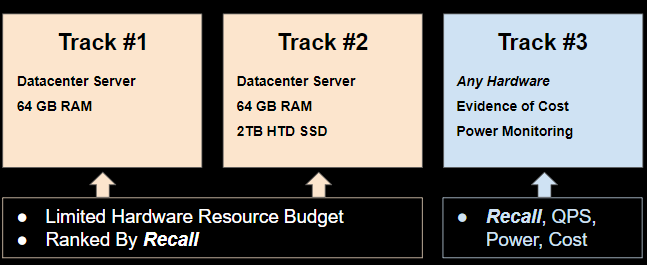

The competitions feature three tracks, each designed to test a different aspect of vector search performance, as shown in Figure 1.

Fig 1. The three tracks of the NeurIPS BigANN competition


Track 1: Limited Hardware Resource Budget

Specifications: Participants are limited to a datacenter server with 64 GB of RAM.

Criteria: Solutions are ranked based on recall, emphasizing the accuracy of retrieving the nearest neighbors within the constraints of limited hardware resources.

Track 2: Enhanced Storage Capabilities

Specifications: Participants use a datacenter server with 64 GB of RAM and a 2 TB high-throughput SSD.

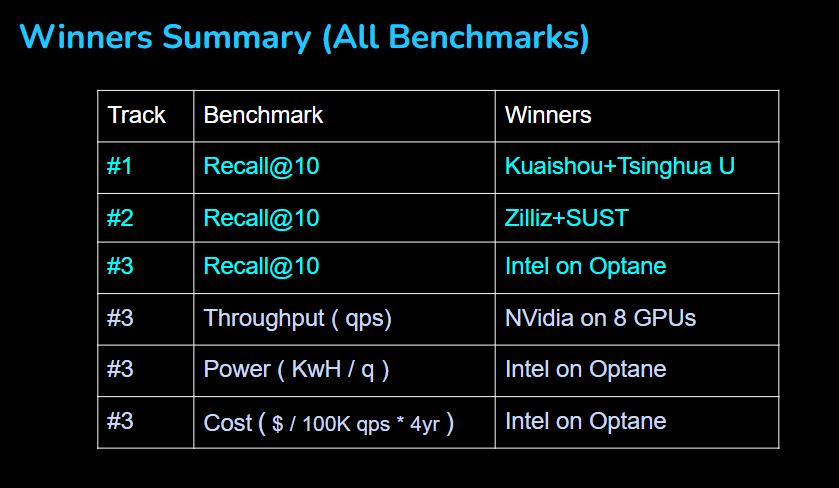

Criteria: This track also focuses on recall but allows for more extensive storage, challenging participants to optimize both memory usage and storage performance. Zilliz won this track, as shown in Figure 2: the Zilliz+SUST approach achieved the highest recall score on the range search dataset (SSNPP). This win highlights Zilliz's expertise and innovation in developing efficient vector search algorithms tailored to modern hardware constraints. We'll describe the Zilliz approach in the next section.

Fig 2. Zilliz+SUST team wins the second track


Track 3: Open Hardware

Specifications: Participants can use any hardware, provided they present evidence of cost and monitor power usage.

Criteria: Solutions are evaluated based on a combination of recall, QPS, power efficiency, and cost, making this track the most comprehensive and challenging.

These tracks allow the competition to address a wide range of practical considerations, from optimizing limited resources to leveraging advanced hardware setups. Through these evaluations, the NeurIPS BigANN competitions provide insights into the state of vector search technology and highlight the most promising advancements in the field.

Optimization and Evaluation in Vector Search

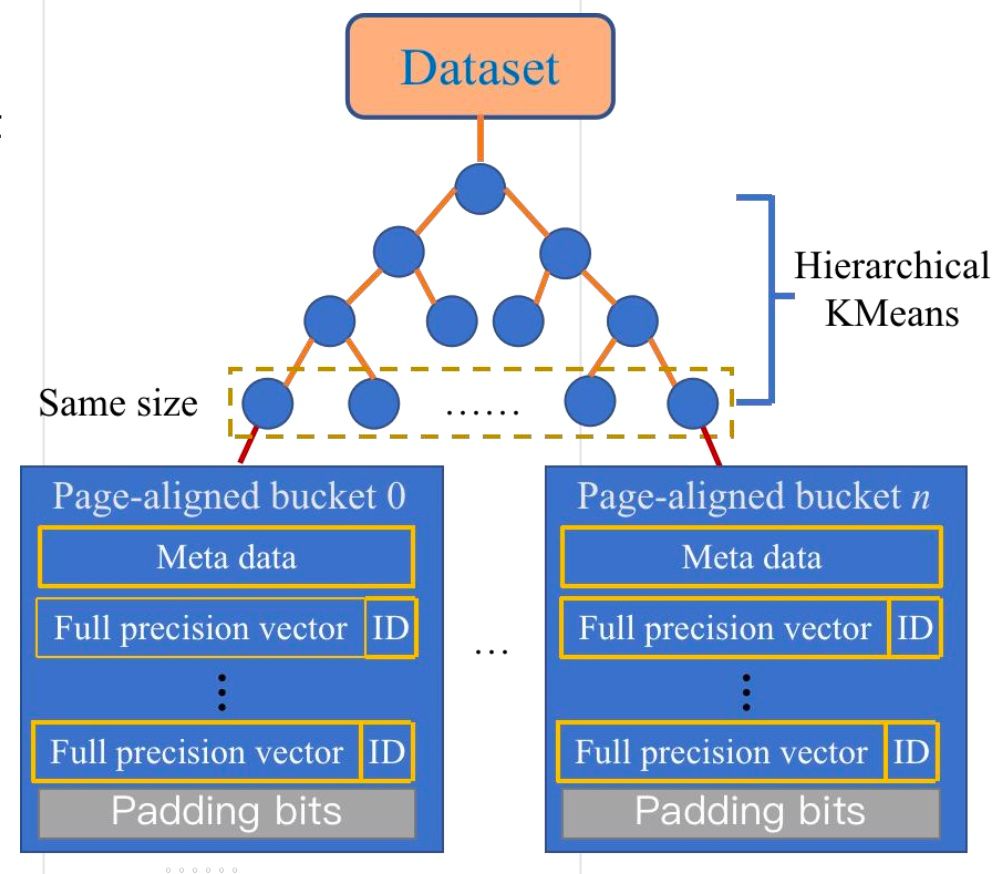

Optimizing vector search requires a multifaceted approach, particularly when it comes to memory and SSD usage. One of the key innovations introduced by Zilliz is the Hybrid Graph Clustering Index, shown in Figure 3. This method structures data so that the memory layout and access patterns suit SSDs. By organizing data into page-aligned buckets, Zilliz stores full-precision vectors alongside their metadata, which reduces latency and improves retrieval speed. This approach not only boosts performance but also makes fuller use of SSD storage, which is crucial for handling large datasets. Zilliz has also tuned the memory layout so that data access matches the capabilities of the underlying hardware, minimizing access times and improving overall throughput for large-scale vector search tasks.
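As a rough illustration of the page-aligned bucket idea, the sketch below packs a bucket of vectors and their IDs into a buffer padded to a whole number of pages, so that a bucket could be fetched with whole-page reads. This is not Zilliz's actual on-disk format: the 4096-byte page size, the record layout (a count header, then an int64 ID followed by float32 values), and the names `pack_bucket` and `unpack_bucket` are all assumptions made for this example.

```python
import struct

PAGE_SIZE = 4096  # assumed SSD page size in bytes


def pack_bucket(records, dim, page_size=PAGE_SIZE):
    """Serialize (vector_id, vector) pairs into a buffer padded to whole pages."""
    record_fmt = f"<q{dim}f"  # hypothetical layout: int64 id + dim float32 values
    header = struct.pack("<I", len(records))  # number of records in the bucket
    body = b"".join(struct.pack(record_fmt, vid, *vec) for vid, vec in records)
    payload = header + body
    # Round the length up to the next multiple of page_size.
    padded_len = -(-len(payload) // page_size) * page_size
    return payload.ljust(padded_len, b"\x00")


def unpack_bucket(buf, dim):
    """Read back the (vector_id, vector) pairs written by pack_bucket."""
    (count,) = struct.unpack_from("<I", buf, 0)
    record_fmt = f"<q{dim}f"
    record_size = struct.calcsize(record_fmt)
    records = []
    for i in range(count):
        fields = struct.unpack_from(record_fmt, buf, 4 + i * record_size)
        records.append((fields[0], list(fields[1:])))
    return records


# Hypothetical bucket with two 4-dimensional vectors.
bucket = [(7, [1.0, 2.0, 3.0, 4.0]), (9, [0.5, 0.25, 0.0, -1.0])]
page = pack_bucket(bucket, dim=4)
```

Keeping the ID next to the full-precision vector means one read returns everything needed to score and identify a candidate, which is the property the paragraph above attributes to the layout.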

The release of Milvus 2.4, the latest version of Zilliz's open-source vector database, also introduces several notable features such as Multi-vector support, Grouping Search, and GPU Indexing with NVIDIA's CUDA-Accelerated Graph Index for Vector Retrieval (CAGRA). These additions further demonstrate Zilliz's commitment to enhancing vector search performance and efficiency.

Fig 3. Hybrid Graph Clustering Index and Memory Layout and Access Optimized For SSD


Future directions

According to the speaker, vector search may soon shift from approximation techniques to brute-force methods that still return results in a short time. This shift would be driven by the growing ability to scale search over raw vectors without building indexes, a change that marks a significant evolution in the memory market.

While specialized chips are expensive to produce and require substantial investment and time to develop toolkits (often taking over a year to bring to market), commoditized hardware may become the norm. In this scenario, software would need significant tuning to optimize performance. The speaker also introduced the concept of chiplets, in which different companies design the best semiconductor technologies as modules, much as software is built from components. This modular approach allows software to be reused on existing chips and lets people without deep chip expertise design their own chips that are cheaper and faster. It could serve as an entry point for many companies, making advanced chip design more accessible and cost-effective.
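For readers unfamiliar with the term, brute-force search means comparing the query against every stored vector, with no index at all. The sketch below shows the idea in plain Python; it is an illustration under simple assumptions (squared Euclidean distance, in-memory lists, the hypothetical names `squared_l2` and `brute_force_search`) and says nothing about how future hardware would execute it.

```python
import heapq


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def brute_force_search(vectors, query, k):
    """Return the indices of the k vectors closest to query, nearest first."""
    return heapq.nsmallest(
        k,
        range(len(vectors)),
        key=lambda i: squared_l2(vectors[i], query),
    )


# Hypothetical raw vectors; no index is built before searching.
vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.9, 1.2]]
nearest = brute_force_search(vectors, query=[1.0, 1.0], k=2)
```

The result is exact rather than approximate, at the cost of touching every vector on every query, which is why the approach depends on memory and compute scaling as the speaker describes.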

Conclusion

The rapid advancements in hardware technology are paving the way for more efficient and powerful vector search capabilities. As illustrated by the NeurIPS BigANN competition and Zilliz's contributions, the intersection of advanced hardware and innovative algorithms is key to the future of data retrieval technologies. While CPUs have been the backbone of computing for decades, the emergence of specialized hardware solutions signifies a new era in which efficiency and performance are paramount. Zilliz, the company behind Milvus, one of the most widely adopted vector databases, is focused on optimizing memory efficiency and maximizing data retrieval speed, leveraging advanced hardware capabilities to push the boundaries of what is possible in vector search technology. Follow Zilliz on Twitter at @zilliz_universe and @milvusio, and watch video talks and tutorials on the Zilliz YouTube channel.

Resources

Enhancing App Functionality: Optimizing Search with Vector Databases - Zilliz blog

Milvus 2.4 Unveils Game-Changing Features for Enhanced Vector Search

Milvus 2.4 Unveils CAGRA: Elevating Vector Search with Next-Gen GPU Indexing - Zilliz blog

How to Evaluate and Optimize the Performance of Milvus Storage - Zilliz blog

