What is AI Hardware?

Artificial intelligence relies on both software and hardware to function effectively. While algorithms often get the spotlight, hardware for AI is crucial for performance. This glossary explores the key components and concepts of AI hardware, emphasizing how AI hardware solutions impact AI performance across various applications.

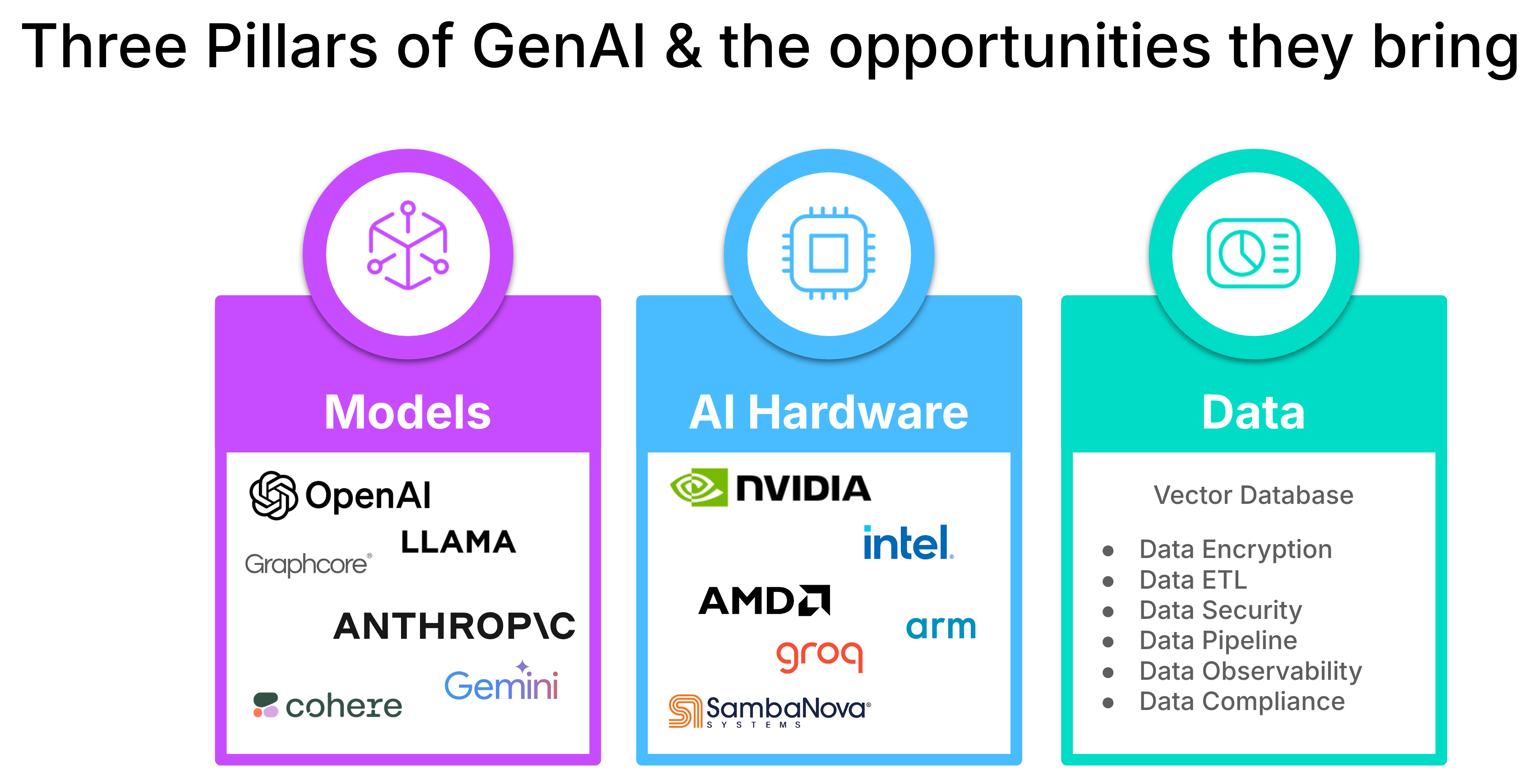

Three Pillars of AI

Overview

AI hardware refers to the specialized computational devices and components designed to handle the processing demands of artificial intelligence tasks. These AI hardware solutions work alongside algorithms and software in the AI ecosystem, enabling faster model training, more efficient inference, and the ability to tackle complex problems. The choice of hardware for AI can significantly influence AI performance, especially in data-intensive AI workloads.

Specialized AI hardware is particularly important for advancing medical and scientific research, enabling faster computations and processing of large datasets. The growth of Transformer models since 2017 has increased demand for advanced AI hardware, driving continuous improvements in the field. While AI hardware focuses on computational power, its environmental impact is a growing concern, requiring a balance between AI benefits and energy consumption.

Key Components

AI hardware consists of several critical components that work together to enable efficient AI computations. These components can be broadly categorized into processors, memory and storage, and interconnects. Each plays a vital role in the overall performance of AI systems.

Processors

The heart of AI hardware lies in its processing units. Different types of processors are designed to handle various aspects of AI workloads, from general-purpose computing to specialized AI tasks. Here are the main types of processors used in AI hardware:

CPU (Central Processing Unit): General-purpose processor, now with some AI acceleration capabilities.

GPU (Graphics Processing Unit): Excels at parallel processing, ideal for many AI tasks, especially deep learning.

TPU (Tensor Processing Unit): Google's custom-designed chip for tensor operations in machine learning.

NPU (Neural Processing Unit): Specialized for neural network computations and AI acceleration.

FPGA (Field-Programmable Gate Array): Reconfigurable hardware for flexible AI applications.

ASIC (Application-Specific Integrated Circuit): Custom-designed chips for specific AI tasks, offering high performance and efficiency.

Memory and Storage

Efficient data access and storage are crucial for AI systems to function effectively. AI workloads often involve processing large amounts of data, making memory and storage solutions a critical component of AI hardware. Here are the key memory and storage technologies used in AI systems:

RAM (Random Access Memory): Fast, volatile memory for active data during AI computations.

Cache: Ultra-fast memory close to the processor for quick data access.

SSD (Solid State Drive): Fast non-volatile storage, generally preferred over hard disk drives in AI setups for loading datasets and saving model checkpoints.

Petascale Storage: Large-scale solutions for vast AI datasets.

Object Storage: Scalable storage solution for unstructured data, ideal for AI and machine learning workloads.

Object storage deserves special mention in the context of AI and machine learning. It offers several advantages:

Scalability: Can easily handle petabytes of data, essential for large AI datasets.

Cost-effectiveness: Often more economical for storing vast amounts of unstructured data.

Data durability: Built-in redundancy ensures data integrity.

API accessibility: Allows direct access to data objects, beneficial for distributed AI workloads.

Metadata management: Enhances data organization and retrieval for AI applications.

Many cloud providers offer object storage solutions optimized for AI workloads, making it easier to implement and scale AI projects.

Interconnects

Interconnects are the communication highways of AI hardware systems. They move data between components such as processors, memory, and storage, and between the devices in a multi-device system. Because an accelerator that is waiting for data does no useful work, high-speed interconnects are crucial for minimizing bottlenecks and for scaling AI systems across multiple devices or nodes.
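To make the bottleneck concrete, the time to move data over a link is often approximated as a fixed latency plus the payload size divided by the link bandwidth. The sketch below applies that approximation; the function name `transfer_time_seconds` and all of the numbers are illustrative assumptions, not specifications of any real interconnect.

```python
def transfer_time_seconds(payload_bytes: float,
                          bandwidth_bytes_per_s: float,
                          latency_s: float = 0.0) -> float:
    """Estimate the time to move a payload over one link.

    Simple model: fixed link latency plus payload size / bandwidth.
    Real links also have protocol overhead and contention, ignored here.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return latency_s + payload_bytes / bandwidth_bytes_per_s


# Hypothetical numbers chosen only for illustration.
payload = 2 * 10**9        # 2 GB of data to exchange between devices
slow_link = 10 * 10**9     # assumed 10 GB/s link
fast_link = 100 * 10**9    # assumed 100 GB/s link

slow = transfer_time_seconds(payload, slow_link, latency_s=5e-6)
fast = transfer_time_seconds(payload, fast_link, latency_s=5e-6)
print(f"slow link: {slow * 1e3:.1f} ms, fast link: {fast * 1e3:.1f} ms")
```

Under this model, large transfers are dominated by bandwidth, while many small transfers are dominated by latency, which is why both figures matter when comparing interconnects.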

AI Hardware Architectures

AI hardware architectures define how different components are organized and interact within a system. These architectures are designed to optimize AI workloads and can significantly impact AI performance. The choice of architecture can profoundly influence an AI system's efficiency, scalability, and suitability for specific tasks. Here are some key AI hardware architectures:

Von Neumann Architecture: Traditional computing architecture used in many AI systems. While versatile, it can face bottlenecks in data-intensive AI workloads because processing and memory are separate, so data must be moved between them before it can be used.

Neuromorphic Architecture: Hardware designed to mimic biological neural networks. This architecture offers potential for extremely low power consumption and efficient processing of unstructured data, making it promising for edge AI applications.

Dataflow Architecture: Optimized for data flow, useful for certain AI algorithms. This architecture excels in handling large-scale parallel computations, making it particularly effective for deep learning tasks with massive datasets.

Interestingly, many modern systems employ hybrid architectures, combining elements from different approaches to balance performance, efficiency, and flexibility. For instance, some systems might use a Von Neumann architecture for general computing tasks while incorporating neuromorphic elements for specific AI functions.
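The memory bottleneck described for the Von Neumann architecture can be estimated with the roofline model, a widely used rule of thumb that is not part of the original text: attainable performance is the smaller of the processor's peak compute rate and the memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The device figures below are hypothetical, and the matrix-multiply intensity assumes each matrix is moved to or from memory exactly once.

```python
def attainable_flops(peak_flops: float,
                     mem_bandwidth_bytes_per_s: float,
                     flops_per_byte: float) -> float:
    """Roofline estimate: performance is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth_bytes_per_s * flops_per_byte)


# Hypothetical device, for illustration only.
PEAK = 100e12        # assumed 100 TFLOPS peak compute
BANDWIDTH = 1e12     # assumed 1 TB/s memory bandwidth

# FP32 vector add: 1 operation per element, 12 bytes moved (2 reads + 1 write).
vector_add_intensity = 1 / 12

# FP32 n x n matrix multiply: 2*n^3 operations over 3*n^2 values of 4 bytes each
# (idealized: every matrix crosses the memory interface exactly once).
n = 4096
matmul_intensity = (2 * n**3) / (3 * n**2 * 4)

for name, intensity in [("vector add", vector_add_intensity),
                        ("matmul", matmul_intensity)]:
    perf = attainable_flops(PEAK, BANDWIDTH, intensity)
    bound = "compute-bound" if perf == PEAK else "memory-bound"
    print(f"{name}: {perf / 1e12:.2f} TFLOPS attainable ({bound})")
```

In this sketch the vector add is limited by memory traffic no matter how fast the processor is, while the matrix multiply can reach peak compute, which is one reason architectures that keep data close to the compute units suit data-intensive AI workloads.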

Performance Metrics

Understanding AI hardware performance is crucial for selecting the right solutions and optimizing AI systems. Various metrics are used to measure different aspects of AI hardware performance:

FLOPS (Floating Point Operations Per Second): Measures floating-point calculations per second.

TOPS (Tera Operations Per Second): Measures trillions of operations per second; commonly quoted for AI accelerators, often for low-precision integer operations.

Latency: Time delay between input and output.

Throughput: Amount of data processed in a given time.

Efficiency: Often measured in performance per watt.

MLPerf: Industry-standard benchmark suite, maintained by MLCommons, for comparing AI training and inference performance across hardware.
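As a rough illustration of how several of these metrics relate, the sketch below times a naive pure-Python matrix multiplication and derives latency, throughput, FLOPS, and performance per watt from the same measurement. The helper names and the `assumed_power_watts` value are assumptions for the example; actual power draw has to be measured on the device, and pure-Python timings are far below what real AI hardware achieves.

```python
import time


def matmul(a, b):
    """Naive matrix multiply on lists of lists (stand-in for an AI workload)."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(inner)) for j in range(cols)]
            for row in a]


def benchmark(n=64, runs=5, assumed_power_watts=50.0):
    """Time `runs` n x n multiplications and derive common metrics."""
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    flop_per_run = 2 * n**3  # roughly n^3 multiplies and n^3 additions

    start = time.perf_counter()
    for _ in range(runs):
        matmul(a, b)
    elapsed = time.perf_counter() - start

    flops = flop_per_run * runs / elapsed
    return {
        "latency_s": elapsed / runs,            # time per run
        "throughput_runs_per_s": runs / elapsed,
        "flops": flops,                         # floating-point ops per second
        # Power is an assumed constant here, not a measurement.
        "flops_per_watt": flops / assumed_power_watts,
    }


result = benchmark()
for metric, value in result.items():
    print(f"{metric}: {value:.4g}")
```

Latency and throughput are reciprocal in this single-stream sketch; on real hardware, batching usually raises throughput at the cost of higher latency, so the two are reported separately.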

Numerical Representations

Numerical representations in AI hardware affect both performance and accuracy. Different precision levels are used depending on the specific requirements of AI tasks:

FP64 (Double Precision Floating Point): High precision for scientific computations.

FP32 (Single Precision Floating Point): Standard precision for many applications.

FP16 (Half Precision Floating Point): Lower precision, often used in deep learning.

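bfloat16 (Brain Floating Point): 16-bit format tailored for AI that keeps the 8-bit exponent of FP32, and therefore its range, while reducing the mantissa to 7 bits, trading precision for range.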
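The trade-off between range and precision can be seen by round-tripping values through each 16-bit format. The sketch below uses only Python's standard `struct` module; the helper names are made up for this example, and the bfloat16 conversion truncates the lower 16 bits of the FP32 encoding, which is a simplification (hardware typically rounds to nearest).

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round-trip x through IEEE 754 half precision (struct format 'e')."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses values beyond the FP16 range; report them as infinity.
        return math.copysign(math.inf, x)


def to_bfloat16(x: float) -> float:
    """Round-trip x through bfloat16 by keeping the top 16 bits of FP32.

    Simplification: truncates instead of rounding to nearest.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


for value in (0.1, 1e5):
    print(f"{value!r}: fp16={to_fp16(value)!r}, bfloat16={to_bfloat16(value)!r}")
```

For a small value such as 0.1, FP16 is closer to the original because it has more mantissa bits; for a large value such as 100000, FP16 overflows while bfloat16 still represents it approximately.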

Applications and Use Cases

AI-optimized hardware finds applications across various sectors, enabling advanced computational tasks and driving innovation:

Data Centers: AI hardware powers large-scale training of complex models and enables high-volume inference operations, supporting a wide range of AI applications and services.

Edge Devices: Specialized AI chips in smartphones, IoT devices, and autonomous vehicles enable on-device AI processing, improving response times and data privacy for tasks like voice recognition and real-time decision making.

Research Institutions: Advanced AI hardware accelerates the development and testing of novel algorithms, facilitating breakthroughs in areas such as natural language processing, computer vision, and reinforcement learning.

Industry-specific Solutions: In sectors like finance, manufacturing, and retail, AI hardware drives predictive analytics, process optimization, and personalized customer experiences through tailored AI applications.

Medical and Scientific Research: AI hardware accelerates disease analysis through medical imaging, virtual molecule screening, and protein structure analysis. It enhances drug discovery, predicts protein changes, and enables multi-modal medical data modeling for personalized healthcare insights.

Challenges and Considerations

While AI hardware solutions offer significant benefits, they also present several challenges that need to be addressed:

Power Consumption: Hardware for AI often requires substantial energy, leading to high operational costs and environmental concerns. This challenge drives research into more energy-efficient designs and cooling solutions.

Heat Management: Intensive computations generate significant heat, necessitating advanced cooling systems. Efficient heat dissipation is crucial for maintaining performance and extending hardware lifespan.

Scalability: As AI models grow in complexity, hardware must adapt to various scales. This involves designing systems that can efficiently handle both small-scale edge deployments and massive data center operations.

Cost: High-performance AI hardware can be expensive, potentially limiting accessibility. Balancing performance with affordability remains a key challenge in democratizing AI technology.

Supply Chain Issues: Global demand for AI hardware components can lead to shortages and production bottlenecks. Ensuring a stable and diverse supply chain is crucial for sustained AI hardware development.

Security: As AI systems handle sensitive data, ensuring hardware-level security, data integrity, and confidentiality becomes paramount. This includes protection against both physical tampering and cyber threats.

Data Challenges for AI

Data management is a crucial aspect of AI systems, presenting its own set of challenges:

Data Location: With data often spread across various platforms and geographical locations, integrating and accessing it efficiently poses significant challenges for AI systems.

Data Formats: The diversity of data formats complicates preparation and processing. AI systems must be able to handle and normalize a wide range of structured and unstructured data types.

Data Quality: Inconsistent or poor-quality data can significantly impact AI model performance. Ensuring data accuracy, completeness, and relevance is crucial for reliable AI outcomes.

Data Curation: AI and Business Intelligence often require different approaches to data preparation. Developing tools and methodologies that can effectively curate data for various AI applications is an ongoing challenge.

Advantages of Specialized AI Hardware

Specialized AI hardware offers several advantages over general-purpose computing solutions, particularly in enhancing AI performance:

Optimized Computations: These systems are designed specifically for tensor operations and matrix multiplications, the core computations in many AI algorithms, resulting in significantly faster processing and improved AI performance.

Cost-Effectiveness: Despite higher upfront costs, specialized AI hardware often provides a better price-performance ratio for AI workloads compared to general-purpose systems.

Efficient Model Training: Particularly effective for training complex models like Transformers, specialized hardware can dramatically reduce training times and resource requirements.

Improved Scalability: With features like all-to-all connectivity, AI hardware allows for better scaling of models and workloads across multiple devices or nodes.

Parallel Processing: Designed for distributed data processing, AI hardware excels at handling the parallel nature of many AI algorithms, further enhancing performance.
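The parallel structure that this hardware exploits can be sketched in a few lines: a matrix-vector product is split into independent row chunks, each chunk is handled by a separate worker, and the partial results are concatenated. This is only an illustration of the decomposition, with hypothetical helper names; Python threads do not give a real speed-up for this kind of CPU-bound arithmetic, whereas a GPU or other accelerator runs such chunks on many hardware units at once.

```python
from concurrent.futures import ThreadPoolExecutor


def matvec_chunk(rows, vec):
    """Multiply a block of matrix rows by a vector (independent of other blocks)."""
    return [sum(r * v for r, v in zip(row, vec)) for row in rows]


def parallel_matvec(matrix, vec, workers=4):
    """Split the rows into chunks, process each chunk in a worker, then combine."""
    chunk = max(1, (len(matrix) + workers - 1) // workers)
    chunks = [matrix[i:i + chunk] for i in range(0, len(matrix), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map preserves chunk order, so results line up with the original rows.
        parts = list(pool.map(matvec_chunk, chunks, [vec] * len(chunks)))
    return [y for part in parts for y in part]


matrix = [[1, 2], [3, 4], [5, 6]]
vector = [1, 1]
print(parallel_matvec(matrix, vector, workers=2))
```

Because no chunk depends on another, the work scales across as many workers as there are chunks, which is the property that lets AI hardware spread tensor operations over many processing units or devices.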

Future Developments

The field of AI hardware is continuously evolving, with several exciting developments on the horizon that promise to further enhance performance:

Quantum Computing: This emerging technology promises unprecedented computational power for certain AI tasks, potentially revolutionizing fields like cryptography and complex system modeling.

Edge AI: As AI capabilities expand to end-user devices, specialized hardware for AI is being developed to enable more powerful and efficient on-device AI processing, enhancing privacy and reducing latency.

Sustainable Designs: With growing awareness of AI's environmental impact, future hardware designs are focusing on energy efficiency and sustainable materials to reduce the carbon footprint of AI operations.

Neuromorphic Computing: By mimicking the structure and function of biological neural networks, neuromorphic hardware aims to achieve higher efficiency and novel computational capabilities for AI systems.

Scalability and Future-Proofing: As AI models and applications continue to grow in complexity, hardware designers are focusing on creating adaptable solutions that can scale effectively and remain relevant as the field evolves.

Continuous Improvement: Ongoing advancements in materials science, chip design, and manufacturing processes are driving continuous improvements in AI hardware capabilities, enabling more complex problem-solving and expanding the boundaries of AI applications.

Interdisciplinary Impact

AI hardware's influence extends beyond computer science, affecting various fields and driving the need for innovative AI hardware solutions:

Neuroscience: The development of AI hardware, particularly neuromorphic computing, is both inspired by and contributing to our understanding of brain function, potentially leading to breakthroughs in neuroscience and cognitive research.

Physics: The intersection of AI hardware with quantum computing is pushing the boundaries of quantum physics, while also benefiting from advancements in materials science and quantum mechanics.

Environmental Science: As AI is increasingly applied to climate modeling and environmental monitoring, the development of energy-efficient AI hardware is becoming crucial in addressing and mitigating AI's ecological impact.

Ethics: The capabilities and limitations of AI hardware play a significant role in discussions around AI ethics, influencing debates on privacy, bias, and the equitable access to AI technologies.

Conclusion

AI hardware forms the backbone of modern artificial intelligence systems, playing a crucial role in advancing the field and enabling breakthrough applications across various sectors. From specialized processors like GPUs and TPUs to innovative memory solutions and interconnects, the hardware components work in concert to tackle the computational challenges posed by complex AI algorithms and data-intensive workloads.

The evolution of hardware architectures, including neuromorphic and dataflow designs, continues to push the boundaries of AI performance and efficiency. These advancements are essential for addressing the growing demands of AI applications, from edge devices to large-scale data centers.

While AI hardware solutions offer significant advantages in terms of performance and specialized capabilities, they also present challenges related to power consumption, heat management, and scalability. Ongoing research and development in areas such as quantum computing, edge AI, and sustainable designs promise to address these challenges and open new frontiers in AI capabilities.

The interdisciplinary impact of AI hardware extends beyond computer science, influencing fields like neuroscience, physics, and environmental science. As AI continues to permeate various aspects of society, the ethical implications of hardware development, including issues of accessibility and environmental impact, become increasingly important.

In conclusion, the field of AI hardware is dynamic and rapidly evolving, with continuous innovations driving the future of artificial intelligence and its applications across industries and scientific domains.
