VidTok: Rethinking Video Processing with Compact Tokenization

Consider watching a video of a busy street: the buildings, trees, and roads remain almost the same in every frame, while only the people and vehicles move. Traditional video processing methods analyze each frame as an independent image, which means they end up handling a lot of repetitive information without taking advantage of the natural flow from one frame to the next. This redundancy makes video processing inefficient, requiring more storage, memory, and computation than necessary.

To address this, VidTok, introduced in the paper VidTok: A Versatile and Open-Source Video Tokenizer, presents a new approach to video compression and representation. Instead of processing each frame separately, VidTok transforms raw video into compact tokens that capture both visual details and motion. This reduces redundancy while preserving the essential structure of the video, making tasks like video generation, editing, and retrieval more efficient.

In this article, we will look at the limitations of conventional video processing and how VidTok provides a more efficient alternative. We will break down its architecture, explain its approach to spatial and temporal feature extraction, and explore how it quantizes video data.

The Limitations of Traditional Video Processing

In many video scenarios, especially with static backgrounds or minimal changes, the content between consecutive frames remains similar. Instead of leveraging this redundancy, conventional methods treat each frame as an isolated image. This approach does not account for the continuity in video data and leads to inefficiencies that affect both performance and resource usage.

The primary issues include:

Excessive Computational Load: Similar information is processed repeatedly across frames, leading to redundant calculations. This increases processing time and energy consumption, which becomes critical for high-resolution videos and real-time applications.

High Storage and Memory Overhead: Storing each frame as a unique unit results in the accumulation of redundant data. Over the duration of a video, the repeated storage of nearly identical background information significantly increases data volume, placing strain on storage systems and memory resources.

Loss of Temporal Coherence: The continuity between frames is necessary for accurately capturing motion. Isolating each frame can cause the system to miss subtle changes that convey continuity, potentially leading to a loss of detail in dynamic scenes and artifacts in video reconstruction.

Inefficient Data Utilization: Processing static portions of a video repeatedly means that computational resources are not focused on actual changes. This results in wasted processing power on redundant information rather than on elements that define the scene’s dynamics.

These challenges underscore the need for a method that compresses video data into a compact, meaningful representation, preserving both spatial detail and the flow of motion. VidTok addresses these issues by transforming raw video data into an efficient tokenized format that targets meaningful changes while minimizing redundancy.

How VidTok Transforms Raw Video Data

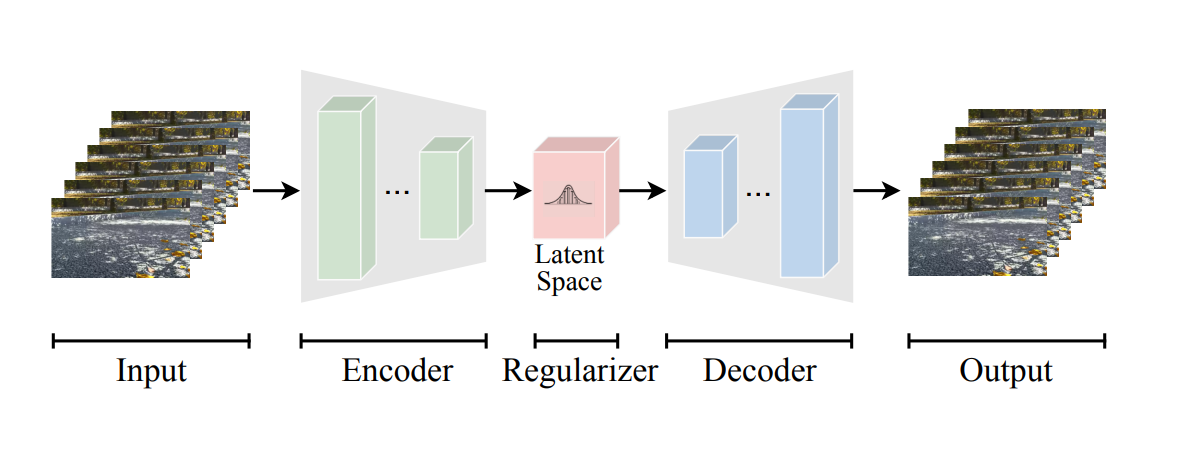

VidTok converts high-dimensional video into a compact representation through a sequence of steps: encoding, regularization, quantization, and decoding.

Figure 1. Overview of the VidTok Pipeline

The process begins with an encoder, a neural network using convolutional layers to extract essential spatial features from each frame. This network identifies key details like edges, textures, and shapes. By leveraging similarities between consecutive frames, the encoder focuses on changes that capture motion rather than repeatedly processing static information.

After encoding, a regularization step organizes the extracted features into a structured latent space: a compressed, abstract representation of the data in which similar inputs have similar encoded features. This ensures that similar frames yield similar representations, which is vital for consistent reconstruction later on.

Following regularization, VidTok applies Finite Scalar Quantization (FSQ) to the latent features, creating a discrete representation. FSQ maps each element of the representation to one of a fixed set of values, avoiding issues like codebook collapse seen in traditional vector quantization. VidTok is not limited to discrete tokenization, however. It also supports continuous tokenization, where video is mapped into a smooth latent space instead of fixed discrete values. Continuous tokenization is useful for tasks like video generation and diffusion-based models, whereas discrete tokenization is more efficient for compression and retrieval tasks. VidTok supports both approaches by using FSQ for discrete tokens and KL regularization for continuous tokens, making it more flexible than models that specialize in only one type.

The decoder then reconstructs the video when needed, reassembling the tokenized representation to preserve both spatial details and temporal motion dynamics. By compressing video data into this tokenized format, VidTok reduces redundancy while retaining critical information, making video editing, generation, and retrieval more efficient. Now that we’ve seen how VidTok encodes and quantizes video data, let’s explore its architectural components in more detail.

Inside the VidTok Architecture

VidTok is engineered to convert raw video data into a compact set of tokens that capture both spatial details and motion information. The architecture is composed of several interlinked modules that work together to reduce redundancy and preserve essential content.

Spatial Feature Extraction with 2D Convolutions

The process begins with an encoder that applies 2D convolutional layers to individual video frames. These layers extract critical spatial features, such as edges, textures, and shapes, from each image. For instance, in a city street scene, the network learns to detect building outlines, road markings, and signage while minimizing the repetitive processing of static background elements.

Temporal Feature Extraction with 3D Convolutions

To capture motion, VidTok employs 3D convolutional layers that process multiple frames simultaneously. By considering both spatial and temporal dimensions, these layers identify movement patterns and transitions over time. In a scenario like a busy intersection, 3D convolutions focus on the dynamic aspects, such as moving vehicles and pedestrians, while ignoring the largely static surroundings.
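To make the idea of a kernel that spans time as well as space concrete, here is a minimal pure-Python sketch of a 3D convolution. It is not VidTok's implementation: the kernel below is a hand-picked temporal-difference filter chosen for illustration, whereas a real network learns its kernels, and the function name `conv3d_valid` is hypothetical.

```python
def conv3d_valid(video, kernel):
    """Naive 'valid' 3D convolution (cross-correlation) over [T][H][W] nested lists."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                # Sum over a small window that extends across time AND space.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += kernel[dt][dy][dx] * video[t + dt][y + dy][x + dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


# Three 2x3 frames: the left two columns are static, the right column brightens.
video = [
    [[5, 5, 0], [5, 5, 0]],
    [[5, 5, 1], [5, 5, 1]],
    [[5, 5, 3], [5, 5, 3]],
]
# Illustrative kernel: size 2 in time, 1x1 in space (next frame minus current frame).
kernel = [[[-1.0]], [[1.0]]]
response = conv3d_valid(video, kernel)
```

With this particular kernel, the static columns produce a response of zero and only the changing column produces non-zero values, which mirrors the intuition that temporal filters can respond to motion rather than to unchanging background.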

Decoupled Spatial and Temporal Sampling

A key design decision in VidTok is to handle spatial and temporal information separately. Spatial sampling is performed using dedicated 2D operations that efficiently extract details from each frame, while temporal sampling is managed independently to track changes over time. This separation allows the network to allocate resources where they are most needed, reducing redundant computations on static content.
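The separation described above can be sketched in a few lines of pure Python. This is an illustration under stated assumptions, not the paper's code: average pooling, the factor of 2, and the helper names `spatial_downsample` and `temporal_downsample` are all choices made for this example.

```python
def spatial_downsample(frame, factor=2):
    """Average-pool one [H][W] frame by `factor` in both spatial directions."""
    H, W = len(frame), len(frame[0])
    return [
        [
            sum(frame[y + dy][x + dx] for dy in range(factor) for dx in range(factor))
            / factor ** 2
            for x in range(0, W - factor + 1, factor)
        ]
        for y in range(0, H - factor + 1, factor)
    ]


def temporal_downsample(frames, factor=2):
    """Average groups of `factor` consecutive frames element-wise."""
    out = []
    for t in range(0, len(frames) - factor + 1, factor):
        group = frames[t:t + factor]
        H, W = len(group[0]), len(group[0][0])
        out.append(
            [[sum(f[y][x] for f in group) / factor for x in range(W)] for y in range(H)]
        )
    return out


# Four 4x4 frames, each filled with its own frame index.
video = [[[float(t)] * 4 for _ in range(4)] for t in range(4)]

# Step 1: spatial reduction, applied to every frame independently (4 x 2 x 2).
spatially_reduced = [spatial_downsample(f) for f in video]
# Step 2: temporal reduction, applied across frames only (2 x 2 x 2).
latent = temporal_downsample(spatially_reduced)
```

Because the two steps are independent functions, the spatial and temporal reduction factors can be chosen separately, which is the practical benefit of decoupling them.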

Temporal Blending with the AlphaBlender Operator

Maintaining smooth transitions between frames is essential for capturing motion accurately. VidTok integrates the AlphaBlender operator to blend features from consecutive frames. The operator computes a weighted sum according to the formula:

x = α · x₁ + (1 − α) · x₂

In this equation, x₁ and x₂ represent feature maps from two consecutive time steps, while α controls the balance between them. This blending process is crucial for preserving gradual changes, such as the shifting hues of a sunset, by effectively combining the information from adjacent frames.

To better understand how VidTok processes spatial and temporal information, take a look at the following diagram, which illustrates its architecture and shows the different components involved in feature extraction and blending.

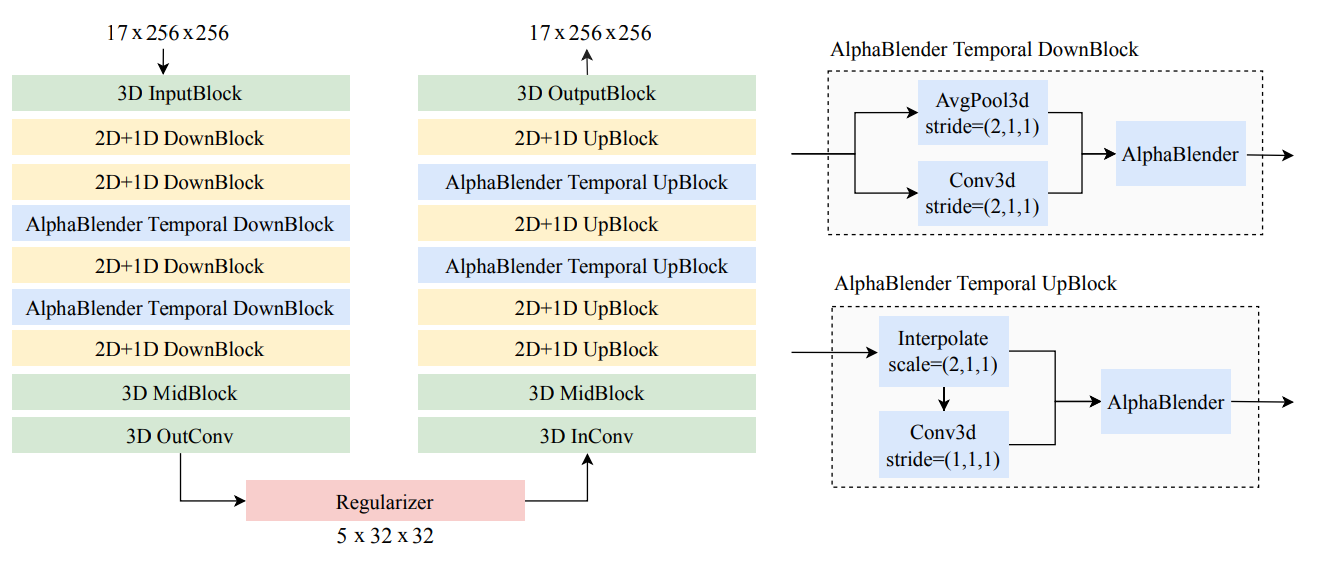

Figure 2. The model’s spatial and temporal processing, including the 2D+1D DownBlocks, AlphaBlender Temporal DownBlocks, and 3D convolutions

VidTok processes video input using a mix of 3D convolutions for spatial-temporal feature extraction, 2D+1D blocks for efficient spatial processing, and AlphaBlender for temporal blending. The right side of the diagram details how AlphaBlender integrates pooling and interpolation to maintain smooth motion representation. This structure ensures that static details are preserved while temporal information is efficiently captured without introducing unnecessary artifacts.
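The weighted sum used by AlphaBlender is simple enough to write out directly. The sketch below applies it to flat lists of feature values. The function name `alpha_blend` is hypothetical, and α is passed in as a plain number here because this article does not specify how VidTok sets it.

```python
def alpha_blend(x1, x2, alpha):
    """Element-wise x = alpha * x1 + (1 - alpha) * x2 for flat feature lists."""
    if len(x1) != len(x2):
        raise ValueError("feature maps must have the same length")
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(x1, x2)]


x1 = [0.0, 2.0, 4.0]   # features from one time step
x2 = [4.0, 2.0, 0.0]   # features from the adjacent time step
blended = alpha_blend(x1, x2, alpha=0.25)   # weights x2 three times as heavily as x1
```

Setting α to 1 returns x1 unchanged and setting it to 0 returns x2, so intermediate values move smoothly between the two feature maps.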

Finite Scalar Quantization (FSQ)

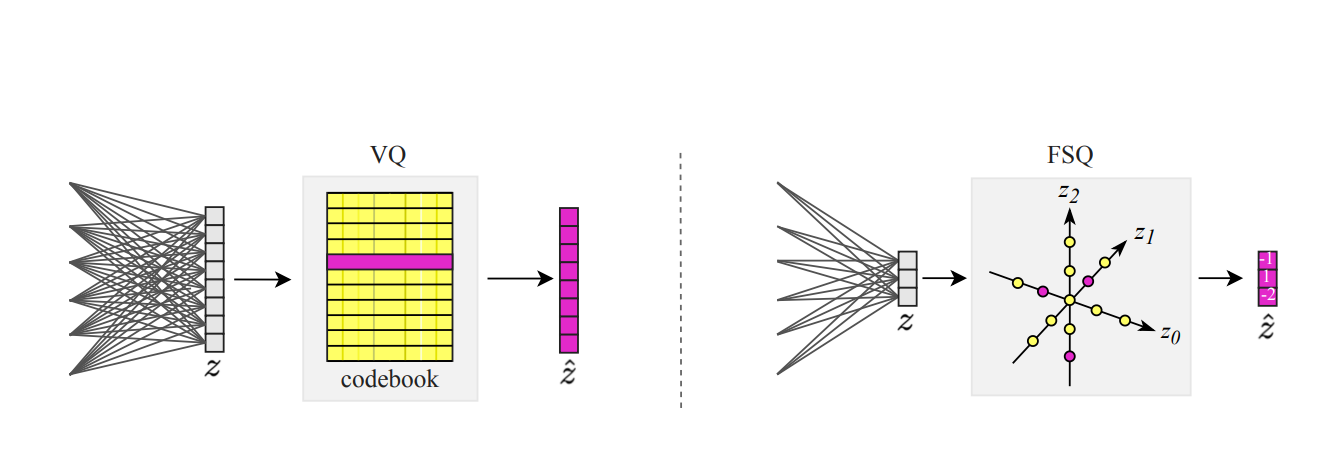

Following feature extraction and blending, VidTok compresses the latent representation using Finite Scalar Quantization (FSQ). FSQ quantizes each scalar element of the latent vector independently by mapping it to one of a fixed set of predetermined values. This independent quantization avoids issues like codebook collapse that can occur in traditional vector quantization methods. The result is a set of tokens where almost every token carries meaningful information.

Figure 3. Visual comparison between traditional vector quantization and FSQ

The above figure shows how VQ replaces the entire latent vector with the nearest entry from a learned codebook, which can lead to instability. In contrast, FSQ quantizes each dimension independently along predefined axes (z₀, z₁, z₂), eliminating the need for a codebook and ensuring more efficient and stable tokenization. This method results in a more reliable discrete representation where each token carries meaningful information.
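The per-dimension quantization described above can be sketched as follows. This is a simplified illustration rather than VidTok's code: it assumes a `tanh` bound followed by rounding, handles only odd level counts, and the level configuration `[5, 5, 3]` and function names are made up for the example. Training-time details, such as how gradients pass through the rounding step, are omitted.

```python
import math


def fsq_quantize(z, levels):
    """Quantize each scalar of z independently.

    z: list of floats; levels: list of odd ints (number of values per dimension).
    Returns integer codes in [-(L - 1) / 2, (L - 1) / 2] for each dimension.
    """
    codes = []
    for value, L in zip(z, levels):
        if L % 2 == 0:
            raise ValueError("this sketch only handles odd level counts")
        half = (L - 1) // 2
        bounded = half * math.tanh(value)   # squash into (-half, half)
        codes.append(round(bounded))        # snap to the nearest integer level
    return codes


def codes_to_token(codes, levels):
    """Pack per-dimension codes into one integer token id (mixed radix)."""
    token, base = 0, 1
    for code, L in zip(codes, levels):
        token += (code + (L - 1) // 2) * base
        base *= L
    return token


levels = [5, 5, 3]   # implicit vocabulary of 5 * 5 * 3 = 75 tokens, no codebook stored
codes = fsq_quantize([0.1, -2.0, 3.0], levels)
token = codes_to_token(codes, levels)
```

Note that the vocabulary is defined entirely by the level counts: there is no learned table of vectors to look up, which is why there is no codebook that could collapse.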

The Decoder and Reconstruction Process

The decoder reverses the encoding process by reconstructing the video from the compact tokens. It reassembles the tokenized representation to reproduce both the spatial details and the temporal flow of the original video. This reconstruction is similar to piecing together a mosaic, where each token contributes to the complete image.

Training Strategy and Regularization

VidTok is trained using a two-stage approach that balances computational efficiency with high-quality video reconstruction. In the first stage, the model is trained on low-resolution videos, allowing it to capture structural patterns without excessive computational cost. This helps the network learn general video features before dealing with finer details. In the second stage, only the decoder is fine-tuned using high-resolution data, refining the reconstructed frames while keeping training efficient. Additionally, reducing the frame rate during training helps the model focus on meaningful temporal changes rather than processing redundant frame-by-frame updates. This improves motion representation by prioritizing the changes that actually matter in video dynamics.

To maintain a structured latent space, VidTok applies regularization techniques that improve stability and prevent overfitting. One key method used for continuous tokens is KL divergence, which measures how much the learned distribution deviates from an expected distribution. By minimizing KL divergence, the model ensures that latent representations remain smooth and do not collapse into overly concentrated values, which could otherwise limit the diversity of learned features.
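As a rough illustration of the KL term, the sketch below computes the closed-form KL divergence between a diagonal Gaussian and a standard normal. Both distribution choices are assumptions borrowed from common variational autoencoder practice; this article does not state which distributions VidTok uses, and the function name is hypothetical.

```python
import math


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over dimensions."""
    return 0.5 * sum(
        m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar)
    )


# A latent that already matches the target distribution incurs no penalty.
matched = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])
# Shifting the mean away from zero increases the penalty.
shifted = kl_to_standard_normal([1.0, -1.0], [0.0, 0.0])
```

In training, a term like this would typically be added to the reconstruction loss with a small weight, so that the latent space stays well behaved without overwhelming reconstruction quality.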

For discrete tokens, entropy penalties are applied to encourage a more uniform and diverse usage of available quantization levels. Without these penalties, the model might overuse only a small subset of tokens, leading to inefficient compression and loss of information. By applying entropy regularization, VidTok ensures that the entire token space is utilized effectively, improving the quality of video representation. Together, these techniques help VidTok optimize video tokenization, preserving spatial and temporal information while enabling efficient video generation, editing, and retrieval.
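The quantity an entropy penalty tries to keep high can be illustrated by measuring the entropy of token usage. The sketch below is a diagnostic on already-quantized token ids, not the training loss itself, which would need a differentiable formulation; the function name `usage_entropy` is hypothetical.

```python
import math
from collections import Counter


def usage_entropy(tokens):
    """Shannon entropy (in bits) of the empirical token distribution."""
    counts = Counter(tokens)
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


balanced = usage_entropy([0, 1, 2, 3, 0, 1, 2, 3])   # all four tokens used equally
skewed = usage_entropy([0, 0, 0, 0, 0, 0, 0, 1])     # one token dominates
```

The balanced sequence reaches the maximum of 2 bits for four tokens, while the skewed sequence scores far lower. A penalty that rewards higher usage entropy therefore pushes the model away from relying on a small subset of tokens.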

Let’s now examine how these architectural choices translate into performance improvements on key benchmarks and practical applications.

VidTok’s Performance on Benchmarks

After refining its training strategy and applying Finite Scalar Quantization, VidTok shows strong performance on video reconstruction benchmarks. The evaluation measures how well it preserves spatial details and captures motion across various video datasets, each with its own challenges in scene complexity and motion dynamics.

VidTok was tested on datasets such as MCL-JCV and WebVid-Val. The MCL-JCV dataset consists of videos with varying motion patterns and levels of detail, while WebVid-Val contains natural videos from real-world scenarios. Under these conditions, VidTok achieved a Peak Signal-to-Noise Ratio (PSNR) of 29.82 dB, indicating that the reconstructed videos closely match the original content with minimal distortion. It also reached a Structural Similarity Index (SSIM) of 0.867, reflecting its ability to maintain spatial structures, textures, and contrast between frames. A higher SSIM means the reconstructed video looks more like the original.

Further evaluation using the Learned Perceptual Image Patch Similarity (LPIPS) metric resulted in a score of 0.106, suggesting that the perceptual differences between the original and reconstructed videos remain low. VidTok also recorded a Fréchet Video Distance (FVD) of 160.1, a measure of how well the model preserves temporal consistency and motion across frames. For both LPIPS and FVD, lower values indicate a closer match to the original.
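Of these metrics, PSNR is the easiest to compute by hand. The sketch below uses the standard definition on flat lists of 8-bit pixel values. How the reported figure is averaged across frames and videos is not covered in this article, so the example only shows the per-image calculation, and the sample pixel values are made up.

```python
import math


def psnr(original, reconstructed, max_value=255.0):
    """PSNR in dB between two equally sized flat lists of pixel values."""
    if len(original) != len(reconstructed):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf   # identical inputs: no noise at all
    return 10.0 * math.log10(max_value ** 2 / mse)


original = [0, 64, 128, 255]
reconstructed = [2, 62, 130, 253]   # every pixel is off by 2, so MSE = 4
score = psnr(original, reconstructed)
```

Because PSNR is logarithmic in the mean squared error, each halving of the error adds roughly 3 dB, which is why differences of a few decibels between tokenizers are meaningful.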

These results highlight VidTok’s ability to balance compression efficiency with reconstruction quality. The tokenized representation retains essential information while reducing redundancy, making it useful for applications in video generation, editing, and retrieval. While these results demonstrate VidTok’s efficiency, there are still areas where further improvements can be made.

Future Directions for VidTok and Video Tokenization

VidTok provides an efficient approach to video representation, improving retrieval, storage, and generation. While the current model achieves strong results, several areas could be explored to enhance its capabilities further.

Improving Motion Representation

VidTok captures temporal dynamics using 3D convolutions and the AlphaBlender operator, but handling long-range dependencies in video remains challenging. Future work could explore transformer-based video architectures that explicitly track long-range dependencies across frames. These improvements would enhance motion continuity, particularly in scenes with fast-moving objects, occlusions, or rapid transitions.

Higher Resolution and Multi-Scale Encoding

Handling higher-resolution videos efficiently remains an open challenge. Multi-scale encoding techniques, where different parts of a video are compressed at varying levels of detail, could allow for better preservation of fine textures without increasing computational costs significantly. This would be particularly useful for applications requiring high-quality video reconstruction, such as media production and medical imaging.

Adaptive Quantization for Better Compression

Finite Scalar Quantization (FSQ) provides stability in tokenization, but adaptive quantization could improve efficiency further. By dynamically adjusting the bit allocation based on scene complexity, the system could assign more detail to areas with intricate textures or high motion while reducing redundancy in static regions. This would optimize storage while maintaining video quality.

Cross-Modal Learning for Video Understanding

VidTok’s tokenized representations could be combined with other modalities, such as text and audio, to enhance video understanding. Future work could explore joint embeddings where video tokens are mapped alongside text descriptions and sound features. This would improve tasks such as automatic captioning, multimodal search, and video-based question answering, expanding VidTok’s potential applications beyond retrieval and compression.

By focusing on these areas, VidTok can continue evolving into a more powerful tool for video processing, improving efficiency in storage, retrieval, and analysis across various industries.

Conclusion

VidTok improves video processing by reducing redundancy and preserving both spatial and temporal details. Instead of treating each frame as an isolated image, it converts video data into compact tokens, making storage, compression, and reconstruction more efficient. By applying convolutional encoders, Finite Scalar Quantization (FSQ), and temporal blending, it focuses on meaningful changes while minimizing unnecessary processing.

This approach makes tasks like video generation, editing, and retrieval more efficient without sacrificing important details. Looking ahead, improvements in motion tracking, multi-scale encoding, and adaptive quantization could further refine its performance. As demand for efficient video processing grows, VidTok provides a structured and scalable method to manage video data while maintaining accuracy and efficiency.

