Couchbase vs Neo4j: Choosing the Right Vector Database for Your AI Apps

What is a Vector Database?

Before we compare Couchbase and Neo4j, let’s first explore the concept of vector databases.

A vector database is specifically designed to store and query high-dimensional vectors, which are numerical representations of unstructured data. These vectors encode complex information, such as the semantic meaning of text, the visual features of images, or product attributes. By enabling efficient similarity searches, vector databases play a pivotal role in AI applications, allowing for more advanced data analysis and retrieval.

Common use cases for vector databases include e-commerce product recommendations, content discovery platforms, anomaly detection in cybersecurity, medical image analysis, and natural language processing (NLP) tasks. They also play a crucial role in Retrieval Augmented Generation (RAG), a technique that enhances the performance of large language models (LLMs) by providing external knowledge to reduce issues like AI hallucinations.
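To make the RAG step concrete, here is a minimal Python sketch of how retrieved passages are placed in front of the question sent to an LLM. The retriever type, the toy corpus, and its two-dimensional "embeddings" are hypothetical stand-ins. In practice the retriever would query a vector database, and the embeddings would come from a model.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical retriever type: (query embedding, k) -> k most similar passages.
Retriever = Callable[[Sequence[float], int], List[str]]


def build_rag_prompt(question: str, query_embedding: Sequence[float],
                     retrieve: Retriever, k: int = 3) -> str:
    """Fetch k supporting passages and place them before the question."""
    passages = retrieve(query_embedding, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n\n"
            f"Question: {question}")


# Toy corpus with hand-made 2-D "embeddings" (illustrative values only).
CORPUS: List[Tuple[str, List[float]]] = [
    ("Neo4j stores data as nodes and relationships.", [1.0, 0.0]),
    ("Couchbase stores JSON documents.", [0.0, 1.0]),
    ("Neo4j supports HNSW vector indexes.", [0.9, 0.1]),
]


def toy_retrieve(query: Sequence[float], k: int) -> List[str]:
    """Rank passages by dot product with the query, highest first."""
    def score(item: Tuple[str, List[float]]) -> float:
        return sum(a * b for a, b in zip(query, item[1]))
    return [text for text, _ in sorted(CORPUS, key=score, reverse=True)[:k]]


if __name__ == "__main__":
    print(build_rag_prompt("What index does Neo4j use?", [1.0, 0.0], toy_retrieve, k=2))
```

The retriever is passed in as a function so the same prompt-building code works regardless of which vector database sits behind it.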

There are many types of vector databases available in the market, including:

- Purpose-built vector databases such as Milvus and Zilliz Cloud (fully managed Milvus).

- Vector search libraries such as Faiss and Annoy.

- Lightweight vector databases such as Chroma and Milvus Lite.

- Traditional databases with vector search add-ons capable of performing small-scale vector searches.

Couchbase is a distributed, multi-model, document-oriented NoSQL database, and Neo4j is a graph database. Both offer vector search as an addition to their core data model rather than as their primary purpose. This post compares their vector search capabilities.

Couchbase: Overview and Core Technology

Couchbase is a distributed, open-source NoSQL database that can be used to build applications for cloud, mobile, AI, and edge computing. It combines the strengths of relational databases with the versatility of JSON. Couchbase also offers the flexibility to implement vector search despite not having native support for vector indexes. Developers can store vector embeddings (numerical representations generated by machine learning models) inside Couchbase documents as part of their JSON structure. These vectors can then support similarity search use cases such as recommendation systems or retrieval-augmented generation, both of which rely on semantic search, where finding data points that are close to each other in a high-dimensional space is what matters.
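As a minimal sketch of storing embeddings inline, the Python below builds a product document whose embedding is a plain JSON array and, optionally, writes it with the Couchbase Python SDK (4.x). The schema, the `product::` key prefix, the bucket name, and the connection details are hypothetical placeholders. `store_doc` needs the `couchbase` package and a running cluster, so it imports the SDK lazily and is not called in the example.

```python
import json
from typing import Dict, List


def make_product_doc(product_id: str, title: str, price: float,
                     embedding: List[float]) -> Dict:
    """Build a JSON-serializable document with the embedding stored inline."""
    return {
        "type": "product",       # hypothetical schema
        "id": product_id,
        "title": title,
        "price": price,
        "embedding": embedding,  # plain JSON array of floats
    }


def store_doc(doc: Dict) -> None:
    """Upsert the document with the Couchbase Python SDK; needs a live cluster."""
    from couchbase.auth import PasswordAuthenticator
    from couchbase.cluster import Cluster
    from couchbase.options import ClusterOptions

    # Connection string, credentials, bucket, scope, and collection are placeholders.
    cluster = Cluster("couchbase://localhost",
                      ClusterOptions(PasswordAuthenticator("user", "password")))
    collection = cluster.bucket("catalog").scope("_default").collection("_default")
    collection.upsert(f"product::{doc['id']}", doc)


if __name__ == "__main__":
    doc = make_product_doc("1001", "Trail running shoe", 89.99, [0.12, -0.53, 0.88])
    print(json.dumps(doc))
```

Keeping the vector next to the other attributes means a single document read returns both, at the cost of larger documents.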

One approach to enabling vector search in Couchbase is to use Full Text Search (FTS). Although FTS is designed primarily for text search, it can be adapted to vector search by converting vector data into searchable fields. For instance, vectors can be tokenized into text-like terms, allowing FTS to index and match on those terms. This enables a coarse form of approximate vector search: documents whose vectors share many terms with the query vector are likely to be close to it.
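One way to picture the tokenization idea is the Python sketch below. It is a hypothetical scheme, not a built-in Couchbase feature: each dimension's value is bucketed, and the bucket becomes a word such as `d0b3` that a text index could store. At query time, the query vector's words would be sent as a text query, and documents sharing more words would score higher. Here `token_overlap` stands in for that text-engine scoring.

```python
from typing import List, Sequence


def vector_to_tokens(vec: Sequence[float], buckets: int = 4,
                     lo: float = -1.0, hi: float = 1.0) -> List[str]:
    """Map each dimension to a bucket id and emit a word like 'd0b2'."""
    width = (hi - lo) / buckets
    tokens = []
    for i, x in enumerate(vec):
        # Clamp into [lo, hi]; the top edge falls into the last bucket.
        x = min(max(x, lo), hi)
        b = min(int((x - lo) / width), buckets - 1)
        tokens.append(f"d{i}b{b}")
    return tokens


def token_overlap(a: Sequence[float], b: Sequence[float], **kw) -> int:
    """Crude similarity: the number of dimension-bucket words two vectors share."""
    return len(set(vector_to_tokens(a, **kw)) & set(vector_to_tokens(b, **kw)))


if __name__ == "__main__":
    doc_vec, query_vec = [0.9, -0.2, 0.1], [0.8, -0.1, -0.6]
    print(" ".join(vector_to_tokens(doc_vec)))  # words a text index would store
    print(token_overlap(doc_vec, query_vec))
```

Bucketing is lossy: two values just either side of a bucket edge share no word, which is why this approach only approximates true distance.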

Alternatively, developers can store the raw vector embeddings in Couchbase and perform the vector similarity calculations at the application level. This involves retrieving documents and computing metrics such as cosine similarity or Euclidean distance between vectors to identify the closest matches. This method allows Couchbase to serve as a storage solution for vectors while the application handles the mathematical comparison logic.
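A minimal Python sketch of application-level similarity, assuming the documents have already been fetched from Couchbase as dicts with hypothetical `id` and `embedding` fields. It supports the two metrics mentioned above: cosine similarity (higher is closer) and Euclidean distance (lower is closer).

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], docs: List[Dict], k: int = 3,
          metric: str = "cosine") -> List[Tuple[str, float]]:
    """Brute-force nearest neighbors over documents already fetched from the database."""
    if metric == "cosine":
        scored = [(d["id"], cosine_similarity(query, d["embedding"])) for d in docs]
        return heapq.nlargest(k, scored, key=lambda pair: pair[1])   # higher = closer
    if metric == "euclidean":
        scored = [(d["id"], math.dist(query, d["embedding"])) for d in docs]
        return heapq.nsmallest(k, scored, key=lambda pair: pair[1])  # lower = closer
    raise ValueError(f"unknown metric: {metric}")


if __name__ == "__main__":
    docs = [{"id": "a", "embedding": [1.0, 0.0]},
            {"id": "b", "embedding": [0.0, 1.0]},
            {"id": "c", "embedding": [1.0, 1.0]}]
    print(top_k([1.0, 0.0], docs, k=2))
    print(top_k([1.0, 0.0], docs, k=2, metric="euclidean"))
```

This scan is exact, but it scores every document, so the cost grows linearly with the number of documents and their dimensionality. It also requires pulling every candidate to the application, which is why it suits small collections or pre-filtered candidate sets.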

For more advanced use cases, some developers integrate Couchbase with specialized libraries or algorithms (like FAISS or HNSW) that enable efficient vector search. These integrations allow Couchbase to manage the document store while the external libraries perform the actual vector comparisons. In this way, Couchbase can still be part of a solution that supports vector search.
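The integration pattern can be sketched as a sidecar index that holds vectors keyed by Couchbase document key and is updated whenever a document changes. The class below is hypothetical and uses brute-force search as a stand-in for an external library. With FAISS, a common approach is to L2-normalize vectors and use an inner-product index such as `IndexFlatIP` wrapped in `IndexIDMap`; that requires integer IDs, so you would also keep an integer-to-key map. The keys returned by `search` would then be fetched from Couchbase to get the full documents.

```python
import math
from typing import Dict, List, Sequence, Tuple


class SidecarVectorIndex:
    """Vectors kept outside Couchbase, keyed by document key (hypothetical design).

    Brute-force stand-in for an ANN library such as FAISS or an HNSW
    implementation; only the body of search() would change.
    """

    def __init__(self, dims: int):
        self.dims = dims
        self._vectors: Dict[str, List[float]] = {}

    @staticmethod
    def _normalize(vec: Sequence[float]) -> List[float]:
        norm = math.hypot(*vec)
        if norm == 0:
            raise ValueError("a zero vector cannot be normalized")
        return [x / norm for x in vec]

    def upsert(self, doc_key: str, vec: Sequence[float]) -> None:
        # Call whenever the Couchbase document's embedding is written or changed.
        if len(vec) != self.dims:
            raise ValueError(f"expected {self.dims} dims, got {len(vec)}")
        self._vectors[doc_key] = self._normalize(vec)

    def delete(self, doc_key: str) -> None:
        # Call when the Couchbase document is removed, so results stay consistent.
        self._vectors.pop(doc_key, None)

    def search(self, query: Sequence[float], k: int) -> List[Tuple[str, float]]:
        # The inner product of unit vectors equals their cosine similarity.
        q = self._normalize(query)
        scored = [(key, sum(a * b for a, b in zip(q, v)))
                  for key, v in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    idx = SidecarVectorIndex(dims=2)
    idx.upsert("product::1", [1.0, 0.0])
    idx.upsert("product::2", [0.0, 1.0])
    idx.upsert("product::3", [3.0, 3.0])
    idx.delete("product::2")
    print(idx.search([1.0, 0.1], k=2))  # keys to fetch from Couchbase next
```

The hard part in production is keeping the sidecar in sync with the database. Every write path that touches an embedding must also call `upsert` or `delete`.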

By using these approaches, Couchbase can be adapted to handle vector search functionality, making it a flexible option for various AI and machine learning tasks that rely on similarity searches.

Neo4j: The Basics

Neo4j’s vector search allows developers to create vector indexes to search for similar data across their graph. These indexes work on node properties that contain vector embeddings: numerical representations of data such as text, images, or audio that capture its meaning. Neo4j supports vectors of up to 4096 dimensions and both cosine and Euclidean similarity functions.

The implementation uses Hierarchical Navigable Small World (HNSW) graphs to perform fast approximate k-nearest neighbor searches. When querying a vector index, you specify how many neighbors to retrieve, and the system returns matching nodes ordered by similarity score. Scores range from 0 to 1, with higher values meaning more similar. HNSW works well because it keeps links between similar vectors across several layers, so a search can jump quickly across the vector space in the sparse upper layers before refining its results in the denser lower layers.
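To see what the 0 to 1 scores mean, the Python below reproduces the normalization we understand Neo4j to apply, based on the documentation of its `vector.similarity.cosine()` and `vector.similarity.euclidean()` functions. Cosine similarity is mapped to (1 + cos) / 2, and squared Euclidean distance d² is mapped to 1 / (1 + d²). Treat this as an illustration and confirm the formulas against the docs for your Neo4j version.

```python
import math
from typing import Sequence


def neo4j_style_cosine_score(a: Sequence[float], b: Sequence[float]) -> float:
    """Map cosine similarity from [-1, 1] onto [0, 1] via (1 + cos) / 2."""
    norm = math.hypot(*a) * math.hypot(*b)
    if norm == 0:
        raise ValueError("cosine is undefined for zero vectors")
    cos = sum(x * y for x, y in zip(a, b)) / norm
    return (1 + cos) / 2


def neo4j_style_euclidean_score(a: Sequence[float], b: Sequence[float]) -> float:
    """Map squared Euclidean distance from [0, inf) onto (0, 1] via 1 / (1 + d^2)."""
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return 1 / (1 + d2)


if __name__ == "__main__":
    print(neo4j_style_cosine_score([1.0, 0.0], [0.0, 1.0]))     # orthogonal vectors
    print(neo4j_style_euclidean_score([0.0, 0.0], [1.0, 1.0]))  # d^2 = 2
```

One consequence of this mapping is that two orthogonal (unrelated) vectors still score 0.5 under cosine, so a score around 0.5 does not indicate partial similarity.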

Vector indexes are created and queried through Cypher, Neo4j’s query language. You create an index with the CREATE VECTOR INDEX command and specify parameters such as the vector dimensions and the similarity function. Only vectors with the configured number of dimensions are indexed. You query an index with the db.index.vector.queryNodes procedure, which takes the index name, the number of nearest neighbors to return, and the query vector as input.
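A minimal sketch of creating and querying a node vector index from Python with the official `neo4j` driver (5.x). The index name `moviePlots`, the `Movie` label, and the `plotEmbedding` and `title` properties are hypothetical. The dimension check mirrors the 4096 limit mentioned above. Only `run_search` talks to a database; it imports the driver lazily, so the Cypher builder can be inspected without one.

```python
from typing import List, Sequence, Tuple

QUERY_NODES = (
    "CALL db.index.vector.queryNodes('moviePlots', $k, $embedding)\n"
    "YIELD node, score\n"
    "RETURN node.title AS title, score"
)


def create_index_cypher(dims: int, similarity: str = "cosine") -> str:
    """Build a CREATE VECTOR INDEX statement for Movie.plotEmbedding."""
    if not 1 <= dims <= 4096:
        raise ValueError("vector.dimensions must be between 1 and 4096")
    if similarity not in ("cosine", "euclidean"):
        raise ValueError("similarity must be 'cosine' or 'euclidean'")
    return (
        "CREATE VECTOR INDEX moviePlots IF NOT EXISTS\n"
        "FOR (m:Movie) ON m.plotEmbedding\n"
        "OPTIONS {indexConfig: {\n"
        f"  `vector.dimensions`: {dims},\n"
        f"  `vector.similarity_function`: '{similarity}'\n"
        "}}"
    )


def run_search(uri: str, user: str, password: str,
               query_vector: Sequence[float], k: int = 5) -> List[Tuple[str, float]]:
    """Create the index if needed, wait for it, then return the k nearest movies."""
    from neo4j import GraphDatabase  # imported here so the builder works without the driver

    with GraphDatabase.driver(uri, auth=(user, password)) as driver:
        driver.execute_query(create_index_cypher(len(query_vector)))
        # Index population is asynchronous; wait until indexes are online.
        driver.execute_query("CALL db.awaitIndexes()")
        records, _, _ = driver.execute_query(
            QUERY_NODES, k=k, embedding=list(query_vector))
        return [(r["title"], r["score"]) for r in records]


if __name__ == "__main__":
    print(create_index_cypher(1536))
```

Each statement runs in its own transaction through `execute_query`, which keeps the schema change separate from the read query.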

Neo4j’s vector indexing includes performance optimizations such as quantization, which reduces memory usage by compressing the stored vectors. You can tune index behavior with parameters such as the maximum number of connections per node (M) and the number of nearest neighbors tracked during insertion (ef_construction). These parameters let you trade accuracy against performance, although the defaults work well for most use cases. From version 5.18, Neo4j also supports vector indexes on relationships, so you can search for similar data stored in relationship properties.
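The tuning parameters and relationship indexes described above can be sketched as Cypher generated from Python. The option keys `vector.hnsw.m`, `vector.hnsw.ef_construction`, and `vector.quantization.enabled` follow our reading of the Neo4j documentation. However, which keys are accepted depends on your Neo4j version and index provider, so check them before use. The `REVIEWED` relationship type, its `embedding` property, and the `reviewEmbeddings` index name are hypothetical.

```python
from typing import Dict, Optional, Union

Value = Union[bool, int, str]


def _cypher_literal(value: Value) -> str:
    # bool must be checked before int, because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return str(value)
    return f"'{value}'"


def index_options(dims: int, similarity: str = "cosine",
                  m: Optional[int] = None,
                  ef_construction: Optional[int] = None,
                  quantization: Optional[bool] = None) -> str:
    """Render an OPTIONS clause; unset tuning knobs fall back to Neo4j's defaults."""
    config: Dict[str, Value] = {
        "vector.dimensions": dims,
        "vector.similarity_function": similarity,
    }
    if m is not None:
        config["vector.hnsw.m"] = m  # max connections per node
    if ef_construction is not None:
        config["vector.hnsw.ef_construction"] = ef_construction  # neighbors tracked on insert
    if quantization is not None:
        config["vector.quantization.enabled"] = quantization
    body = ", ".join(f"`{key}`: {_cypher_literal(val)}" for key, val in config.items())
    return "OPTIONS {indexConfig: {" + body + "}}"


def relationship_index_cypher(dims: int, **tuning) -> str:
    """Vector index on a relationship property (Neo4j 5.18+)."""
    return ("CREATE VECTOR INDEX reviewEmbeddings IF NOT EXISTS\n"
            "FOR ()-[r:REVIEWED]-() ON r.embedding\n"
            + index_options(dims, **tuning))


# Relationship indexes are queried with a separate procedure.
QUERY_RELATIONSHIPS = (
    "CALL db.index.vector.queryRelationships('reviewEmbeddings', $k, $embedding)\n"
    "YIELD relationship, score\n"
    "RETURN relationship, score"
)

if __name__ == "__main__":
    print(relationship_index_cypher(768, m=32, ef_construction=200, quantization=True))
```

Raising M or ef_construction generally improves recall at the cost of memory and indexing time, which is the accuracy-versus-performance trade-off described above.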

This makes it possible to build AI-powered applications. By combining graph queries with vector similarity search, applications can find related data based on semantic meaning rather than exact matches. For example, a movie recommendation system could use plot embeddings to find similar movies while using the graph structure to keep recommendations within the genres or eras the user prefers.
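The movie example can be sketched in two forms. The Cypher string shows one way the combined query might look, assuming a hypothetical schema in which `Movie` nodes link to `Genre` nodes via `IN_GENRE` and users link to genres via `LIKES_GENRE`. The pure-Python `recommend` function simulates the same logic on toy data with hand-made two-dimensional embeddings, so it runs without a database. First, vector search over-fetches candidates; then the graph constraint filters them.

```python
import math
from typing import Dict, List, Sequence, Set

# Hypothetical schema: (:Movie)-[:IN_GENRE]->(:Genre)<-[:LIKES_GENRE]-(:User)
RECOMMEND_CYPHER = (
    "CALL db.index.vector.queryNodes('moviePlots', $candidates, $embedding)\n"
    "YIELD node AS movie, score\n"
    "MATCH (movie)-[:IN_GENRE]->(:Genre)<-[:LIKES_GENRE]-(:User {id: $userId})\n"
    "RETURN DISTINCT movie.title AS title, score\n"
    "ORDER BY score DESC\n"
    "LIMIT $k"
)

# Toy stand-in for the graph: each movie's genre and plot embedding.
MOVIES: List[Dict] = [
    {"title": "Alien", "genre": "sci-fi", "embedding": [0.9, 0.1]},
    {"title": "Aliens", "genre": "action", "embedding": [0.85, 0.2]},
    {"title": "Solaris", "genre": "sci-fi", "embedding": [0.7, 0.3]},
    {"title": "Amelie", "genre": "romance", "embedding": [0.05, 0.95]},
]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def recommend(query_vec: Sequence[float], movies: List[Dict],
              liked_genres: Set[str], k: int = 2, candidates: int = 10) -> List[str]:
    # Step 1: vector search (brute force here) returns the nearest candidates.
    ranked = sorted(movies, key=lambda m: cosine(query_vec, m["embedding"]),
                    reverse=True)[:candidates]
    # Step 2: graph constraint keeps only movies in a genre the user likes.
    return [m["title"] for m in ranked if m["genre"] in liked_genres][:k]


if __name__ == "__main__":
    print(recommend([1.0, 0.0], MOVIES, {"sci-fi"}))
    print(recommend([1.0, 0.0], MOVIES, {"sci-fi"}, candidates=2))
```

Because the filter runs after the nearest-neighbor step, the candidate count must be larger than the number of results you want. With `candidates=2`, the second call returns only `Alien`, because `Aliens` takes the other candidate slot and is then filtered out.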

Key Differences

Search Methodology

Couchbase: Couchbase doesn’t have native vector search but supports several workarounds. Developers can use Full Text Search (FTS) to tokenize vectors into searchable fields, or store raw vector embeddings in JSON documents and calculate similarity at the application level. Integrating external libraries such as FAISS or an HNSW implementation can improve vector search but requires extra setup.

Neo4j: Neo4j has native vector search through vector indexes built on HNSW graphs, which enable fast approximate k-nearest neighbor search. Developers can specify the vector dimensions and similarity function (cosine or Euclidean) and fine-tune index performance parameters, making Neo4j a strong option for semantic search directly within the graph.

Data Handling

Couchbase: Couchbase is a distributed NoSQL database for structured, semi-structured, and unstructured data. It excels at storing JSON documents, so you can embed vectors alongside other attributes. However, adapting it for vector search often requires extra computation or integrations outside the database itself.

Neo4j: Neo4j is a graph database for highly connected data that combines graph relationships with vector embeddings. This lets vector search and graph queries work together in a single query, yielding richer insights by combining contextual and semantic data.

Scalability and Performance

Couchbase: Couchbase scales horizontally. However, because vector similarity calculations are often done at the application level or in external tools, the scalability of vector search depends on those extra components rather than on Couchbase itself.

Neo4j: Neo4j’s vector search is optimized for performance through HNSW graph-based indexing. Quantization and configurable index parameters help keep it fast and memory efficient for applications with large vector datasets or frequent similarity queries.
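A back-of-the-envelope calculation shows why quantization matters at scale. The Python below assumes 1 million 1536-dimensional float32 embeddings (4 bytes per component). As an assumption, it compares them with 8-bit scalar quantization (1 byte per component); the exact scheme Neo4j uses may differ. It counts only the raw vector payload, not HNSW links or other index overhead.

```python
def vector_payload_bytes(n_vectors: int, dims: int, bytes_per_component: int) -> int:
    """Raw storage for the vector components only (no index structure)."""
    return n_vectors * dims * bytes_per_component


N, DIMS = 1_000_000, 1536
float32_bytes = vector_payload_bytes(N, DIMS, 4)  # 6,144,000,000 bytes
int8_bytes = vector_payload_bytes(N, DIMS, 1)     # 1,536,000,000 bytes

if __name__ == "__main__":
    print(f"float32: {float32_bytes / 1e9:.2f} GB")
    print(f"int8:    {int8_bytes / 1e9:.2f} GB")
    print(f"reduction: {float32_bytes // int8_bytes}x")
```

Under these assumptions, roughly 6.1 GB of vector data shrinks to about 1.5 GB, a 4x reduction. This can be the difference between an index that fits in memory and one that does not.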

Flexibility and Customization

Couchbase: Couchbase is very flexible for data modeling, supports JSON documents, and integrates well with other tools and frameworks. For vector search, developers are free to implement custom solutions using external libraries or application-level logic.

Neo4j: Neo4j provides flexibility in query design by combining graph traversal with vector similarity search. The ability to create indexes on node and relationship properties adds another layer of customization so developers can align data structures with application requirements.

Integration and Ecosystem

Couchbase: Couchbase integrates with many application frameworks and libraries, including those for AI and ML workloads. However, it relies heavily on external libraries such as FAISS for advanced vector search, which adds integration complexity.

Neo4j: Neo4j’s ecosystem includes native graph analytics and AI-focused features. Relationship vector indexes and built-in procedures for vector queries make it easier to develop AI-driven applications that combine graph and semantic data.

Ease of Use

Couchbase: Although Couchbase is developer friendly, vector search requires significant customization or external tools, which adds complexity. Its documentation helps, but vector search use cases still need more initial setup.

Neo4j: Neo4j’s native vector indexing makes vector search easier to adopt for developers familiar with graph databases. Its declarative query language, Cypher, lowers the learning curve for combined graph and vector queries.

Cost

Couchbase: Couchbase pricing depends on the deployment model (self-hosted or managed). Custom vector search solutions typically require extra infrastructure, which adds to the overall cost.

Neo4j: Neo4j’s native vector search reduces tooling costs. However, its licensing and the resources required for large-scale graph and vector queries also affect the overall cost.

Security

Couchbase: Couchbase has many security features, including encryption, role-based access control, and enterprise authentication.

Neo4j: Neo4j has many security features, including encryption, granular access control, and enterprise authentication.

When to use Couchbase

Couchbase is a good choice for applications that need a distributed NoSQL database that can handle structured, semi-structured, and unstructured data at scale. It is a strong fit when flexibility is key, such as storing JSON documents with embedded vector data for recommendation systems or AI-driven search. Because Couchbase can integrate with external vector search libraries, you can customize the solution. It works well when the primary use case is large-scale data storage with occasional vector similarity search.

When to use Neo4j

Neo4j is a good fit for applications that need to combine graph analytics with semantic similarity, such as recommendation engines, fraud detection, or knowledge graphs. Its native vector indexing, optimized for fast k-nearest neighbor search, suits AI applications that use both graph structures and high-dimensional vector data. If your use case requires graph relationships and vector search to be tightly integrated, Neo4j is the more out-of-the-box solution, with little need for additional tooling or complex setup.

Summary

Couchbase and Neo4j excel at different things. Couchbase is strong at distributed data management and flexibility, making it a good fit for developers who need a general-purpose NoSQL database with optional vector search. Neo4j is the better choice when vector search is central to the application and must be combined with graph-based queries. The right choice depends on your use case, the type of data you store, and the performance and integration requirements of your application.

This post gives an overview of Couchbase and Neo4j, but you should evaluate them against your own use case. One tool that can help is VectorDBBench, an open-source benchmarking tool for comparing vector databases. In the end, thorough benchmarking with your own datasets and query patterns will be key to choosing between these two powerful but different approaches to vector search.

Using Open-source VectorDBBench to Evaluate and Compare Vector Databases on Your Own

VectorDBBench is an open-source benchmarking tool for users who need high-performance data storage and retrieval systems, especially vector databases. This tool allows users to test and compare different vector database systems like Milvus and Zilliz Cloud (the managed Milvus) using their own datasets and find the one that fits their use cases. With VectorDBBench, users can make decisions based on actual vector database performance rather than marketing claims or hearsay.

VectorDBBench is written in Python and licensed under the MIT open-source license, meaning anyone can freely use, modify, and distribute it. The tool is actively maintained by a community of developers committed to improving its features and performance.

Download VectorDBBench from its GitHub repository to reproduce our benchmark results or obtain performance results on your own datasets.

Take a quick look at the performance of mainstream vector databases on the VectorDBBench Leaderboard.

Read the following blogs to learn more about vector database evaluation.

Further Resources about VectorDB, GenAI, and ML


Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Why Not All VectorDBs Are Agent-Ready

Explore why choosing the right vector database is critical for scaling AI agents, and why traditional solutions fall short in production.

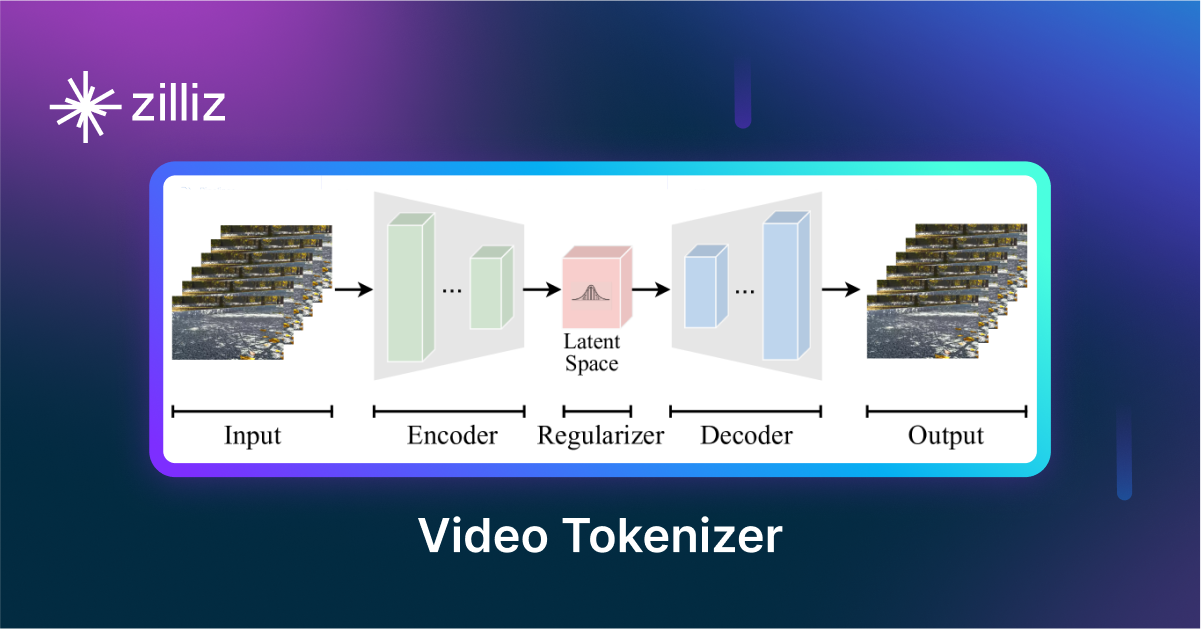

VidTok: Rethinking Video Processing with Compact Tokenization

VidTok tokenizes videos to reduce redundancy while preserving spatial and temporal details for efficient processing.

Vector Databases vs. Graph Databases

Use a vector database for AI-powered similarity search; use a graph database for complex relationship-based queries and network analysis.

The Definitive Guide to Choosing a Vector Database

Overwhelmed by all the options? Learn key features to look for & how to evaluate with your own data. Choose with confidence.