All You Need to Know About ANN Machine Learning

To understand what an ANN (Artificial Neural Network) is, one first needs to understand what a neural network is. Just like your brain, neural networks are made up of numerous interconnected neurons. Many applications use neural networks to model unknown relationships between various parameters based on a large number of examples.

Classification of handwritten digits, speech recognition, and forecasting stock prices are a few prominent examples of successful neural network applications.

In 1943, American neurophysiologist and cybernetician Warren S. McCulloch, working with logician Walter Pitts, proposed the first conceptual model of an artificial neural network in the paper "A Logical Calculus of the Ideas Immanent in Nervous Activity." In this model, a neuron is a single cell in a network of cells that receives an input, processes it, and generates an output.

This article from Zilliz, the leading provider of vector database and AI technologies, provides a comprehensive understanding of ANN machine learning and how it works.

What is an Artificial Neural Network?

Artificial Neural Network Definition

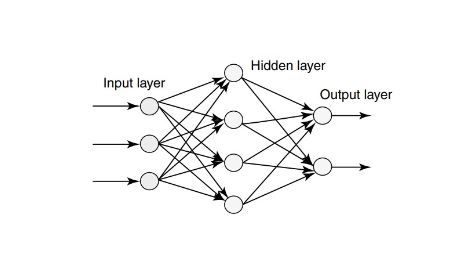

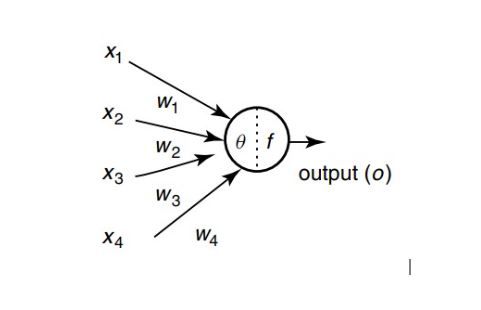

An artificial neural network (ANN) is made up of an input layer, one or more hidden layers, and an output layer, which together form the basic structure of the network. In a fully connected network, every node in one layer is linked to every node in the following layer. Each node applies a nonlinear activation function to the weighted sum of its inputs. The result is the node's output, which becomes an input to the nodes in the next layer.

The signal flows from left to right, and the final output is computed by repeating this procedure for each node. Training the network means learning the weights associated with all the edges.
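The forward flow described above can be sketched in a few lines of Python. This is only an illustration: the function names, the bias terms, and all weight values are assumptions made up for the example, and the sigmoid is used as the activation function.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer.

    weights[j][i] is the (hypothetical) weight on the edge from input i to node j.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

def forward(inputs, layers):
    """Pass the signal through each layer in turn, left to right."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation

# Illustrative, made-up parameters: 2 inputs -> 2 hidden nodes -> 1 output node.
example_layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),
    ([[1.2, -0.7]], [0.05]),
]
prediction = forward([1.0, 0.0], example_layers)
print(prediction)
```

Each hidden node's output becomes an input to the output node, so `forward` is just the per-node procedure repeated layer by layer.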

Figure 2 depicts a typical artificial neuron as well as the modeling of a multilayered neural network. In this figure, the signal flow from inputs x1,...,xn is considered unidirectional, as indicated by arrows, as is the signal flow from a neuron's output (O).

The Backpropagation Algorithm

Feed forward ANNs are typically trained with the backpropagation algorithm. The artificial neurons are organized in layers and send signals "forward," while errors are propagated backward. Neurons in the input layer provide input to the network, and neurons in the output layer provide the network's output.

There could be one or more hidden intermediate layers. The backpropagation algorithm employs supervised learning, which means we feed the algorithm examples of the inputs and outputs we want the network to compute, and the error (the difference between actual and expected results) is calculated.

The backpropagation algorithm is designed to reduce this error until the ANN learns the training data.

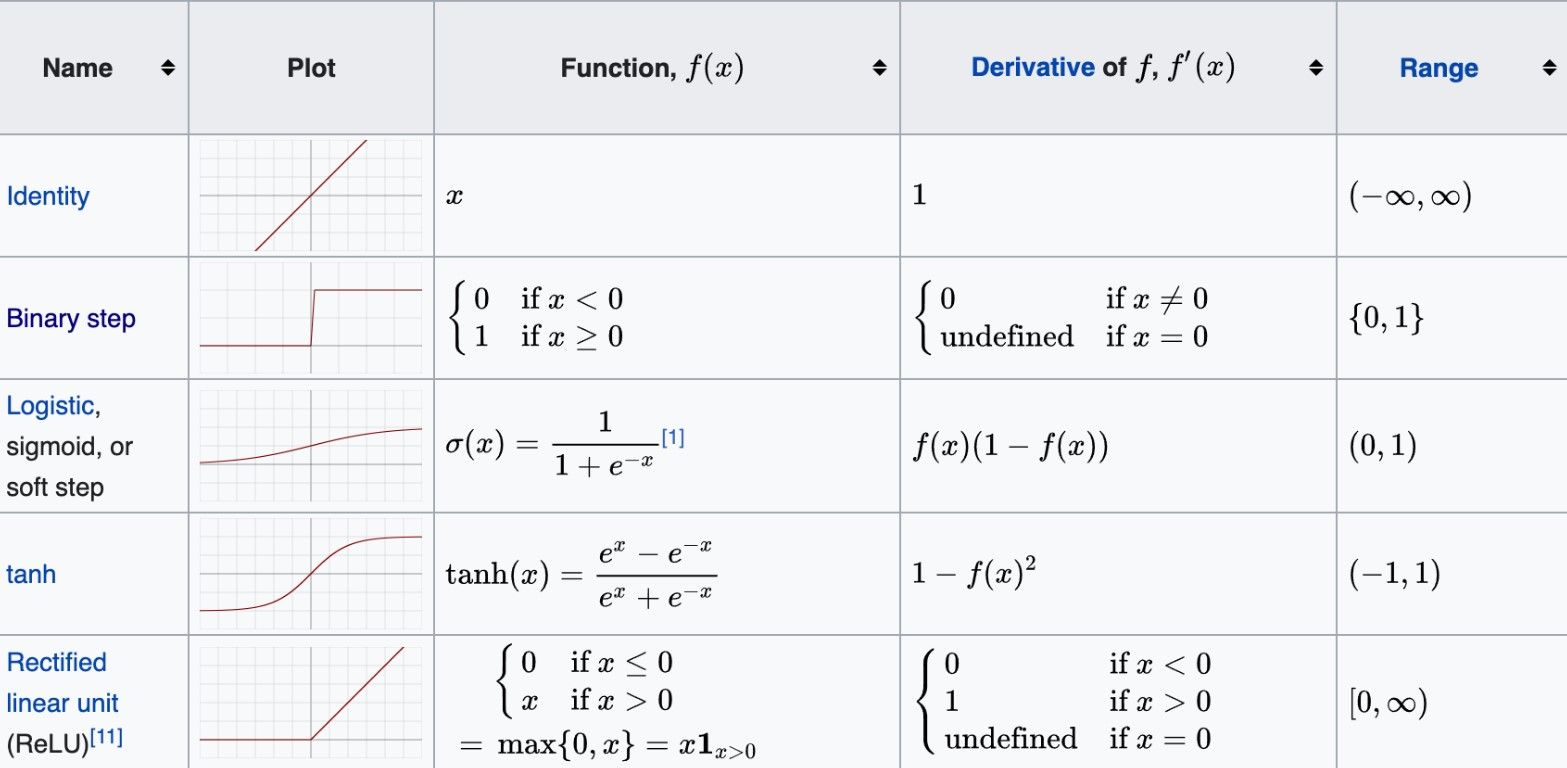

The sigmoid function is one of the most common output functions. It is very close to one for large positive inputs, exactly 0.5 at zero, and very close to zero for large negative inputs. This allows for a smooth transition between the neuron's low and high output (close to zero or close to one).
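For reference, the sigmoid (logistic) function is usually written as follows. The symbols σ and z are notation introduced here for illustration; the last line gives its derivative, which is the quantity a gradient-based training method needs.

```latex
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\sigma(0) = \tfrac{1}{2}, \qquad
\lim_{z \to +\infty} \sigma(z) = 1, \qquad
\lim_{z \to -\infty} \sigma(z) = 0

\sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr)
```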

The backpropagation algorithm then computes how the error depends on the outputs, inputs, and weights. Once we've determined this, we can use the gradient descent method to adjust the weights.
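As a minimal sketch of this idea, the example below trains a single sigmoid neuron with gradient descent. With only one neuron, the backward pass reduces to one application of the chain rule; a multilayer network repeats the same step layer by layer. The training set (the logical OR function), the learning rate, and the number of epochs are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy training set (assumed for illustration): the logical OR function.
samples = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5  # hypothetical step size

def mean_squared_error():
    """Average squared difference between actual and expected outputs."""
    total = 0.0
    for inputs, target in samples:
        output = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        total += (output - target) ** 2
    return total / len(samples)

initial_error = mean_squared_error()

for epoch in range(2000):
    for inputs, target in samples:
        # Forward pass: weighted sum followed by the activation function.
        output = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        # Backward pass (chain rule): error term times the sigmoid derivative,
        # where sigmoid'(z) = output * (1 - output).
        delta = (output - target) * output * (1.0 - output)
        # Gradient descent: move each weight against its gradient delta * x_i.
        for i, x in enumerate(inputs):
            weights[i] -= learning_rate * delta * x
        bias -= learning_rate * delta

final_error = mean_squared_error()
print(initial_error, final_error)
```

The error after training should be well below the starting error, which is what "reducing the error until the ANN learns the training data" means in practice.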

Most common activation functions. Source: Wikipedia

To represent more complex features and "read" increasingly complex models for prediction and classification of data based on thousands or even millions of features, ANNs needed to evolve into deeper networks with many hidden layers.

Deep learning is a subfield of machine learning that focuses on learning successive "layers" of increasingly meaningful representations from data.

Artificial neural networks come in a variety of forms. The networks are constructed using a set of parameters and mathematical operations that determine the output.

Prominent Types of Artificial Neural Networks

An ANN is a data processing system composed of a large number of simple, highly interconnected processing elements (artificial neurons) in an architecture inspired by the structure of the cerebral cortex of the brain.

There are various types of neural network architecture. Let's take a close look at the most commonly used ones:

1. Feed forward Neural Networks

The most basic type of neural network is the feed forward neural network (FNN). An FNN sends data in one direction, from the input nodes, through any hidden layers, to the output nodes. The network may or may not have hidden layers; without them, its operation is easier to interpret. It can also tolerate a fair amount of noise in the input.

A classifying activation function is typically applied at the output. Signals only propagate forward: there are no feedback connections that route a node's output back to an earlier layer. Errors are still propagated backward during training, as described in the backpropagation section, but that does not change the one-way flow of data through the network.

Feed forward neural networks are at the core of tasks such as computer vision and natural language processing. A feed forward neural network is fairly simple and hugely beneficial for some machine learning applications due to its streamlined architecture.

2. Convolutional Neural Networks

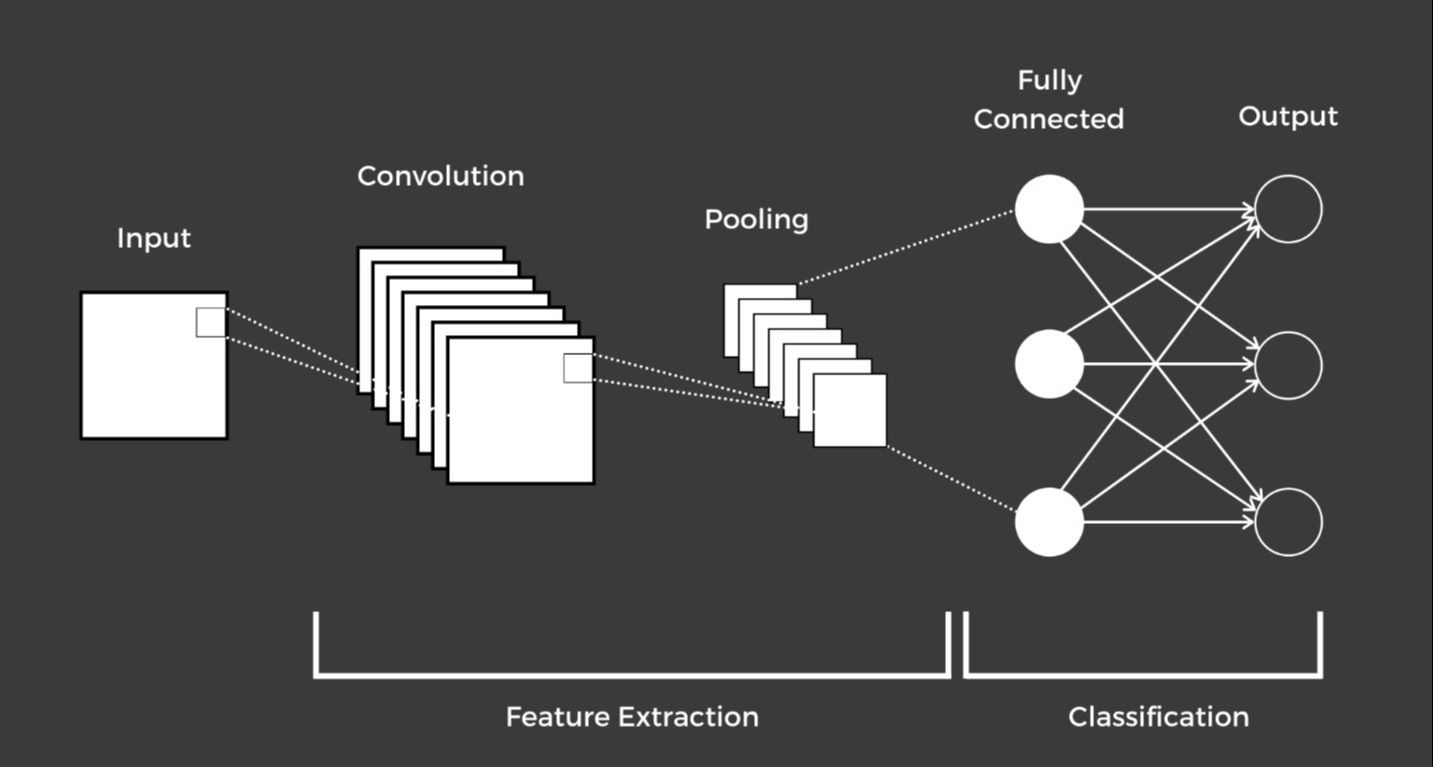

Convolutional neural network example. Source: https://studentsxstudents.com/training-a-convolutional-neural-network-cnn-on-cifar-10-dataset-cde439b67bf3

A convolutional neural network (CNN or ConvNet) is one of the most common types of deep neural network. A CNN convolves learned features with input data, making the architecture well-suited to processing 2D data, such as images. CNNs eliminate the need for manual feature extraction, so you don't have to identify the features used for image classification yourself.

The CNN extracts features from images directly. The relevant features are not pre-trained. Instead, they are learned as the network trains on a set of images.

There are several reasons why convolutional neural networks are often preferred over other neural network models. To begin with, CNNs use weight sharing, which reduces the number of parameters that need to be trained and tends to improve generalization. With fewer parameters, CNNs are easier to train and less prone to overfitting. Next, the classification stage is combined with the feature extraction stage, and both stages are learned during training. Finally, implementing large networks with general ANN models is much more difficult than with CNNs.
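To make weight sharing concrete, here is a small sketch of a 2D convolution written in plain Python. The image, the kernel values, and the 28x28 size used for the parameter count are all made-up illustrations; in a real CNN the kernel values are learned during training rather than picked by hand.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for row in range(out_h):
        output_row = []
        for col in range(out_w):
            total = 0.0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            output_row.append(total)
        output.append(output_row)
    return output

# A made-up 4x4 image containing a vertical edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-picked kernel that responds to vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)

# Parameter counts for a 28x28 input producing a 26x26 output map:
shared_kernel_parameters = 3 * 3                     # one kernel reused at every position
fully_connected_parameters = (28 * 28) * (26 * 26)   # one weight per input-output pair
print(shared_kernel_parameters, fully_connected_parameters)
```

The same nine kernel weights are reused at every image position, which is why the convolutional layer needs far fewer parameters than a fully connected layer producing an output of the same size.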

CNNs are widely used in a variety of domains due to their exceptional performance, including image classification, object detection, face detection, speech recognition, and real-time automotive recognition.

3. Recurrent Neural Networks

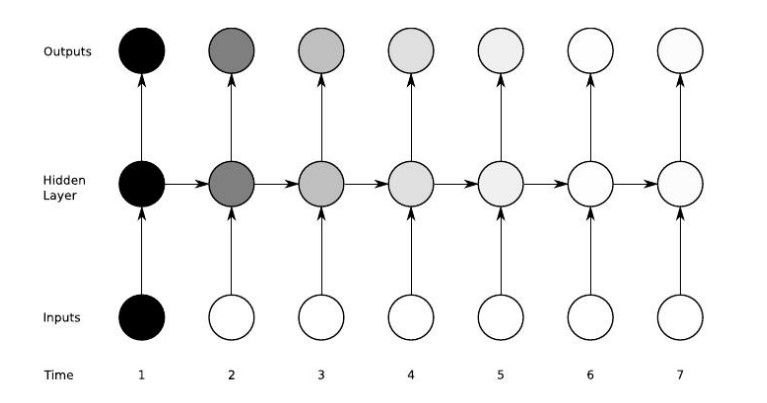

A diagram showing a recurrent neural network architecture. Source: https://www.cs.toronto.edu/~tingwuwang/rnn_tutorial.pdf

Recurrent neural networks (RNNs), like FNNs, have hidden layers, but with an additional self-looped connection that allows the hidden state at one time step to be used as input at the next time step. Each hidden node in this model acts like a memory cell: it processes the information it receives and carries part of it forward. This capacity to retain and reuse previously processed data is what makes the model unique.
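The self-looped connection can be illustrated with a scalar recurrent unit. This is a sketch under stated assumptions: the weights are made-up values, the hidden state is a single number rather than a vector, and tanh is assumed as the activation function.

```python
import math

def rnn_step(x_t, h_prev, w_input, w_hidden, bias):
    """One time step: the new state mixes the current input with the previous state."""
    return math.tanh(w_input * x_t + w_hidden * h_prev + bias)

def run_rnn(sequence, w_input=0.8, w_hidden=0.5, bias=0.0):
    """Apply the same weights at every time step, carrying the hidden state forward."""
    hidden_state = 0.0
    history = []
    for x_t in sequence:
        hidden_state = rnn_step(x_t, hidden_state, w_input, w_hidden, bias)
        history.append(hidden_state)
    return history

# Only the first input is non-zero, yet later states are non-zero too:
# the hidden state "remembers" a fading trace of what it saw earlier.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```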

One of the essential components of a recurrent neural network is this feedback loop. During training, it lets the network learn from its errors: when data is run through the network again after an incorrect prediction, the system attempts to make the correct prediction while taking that feedback into account.

Recurrent neural networks are frequently employed in applications such as text-to-speech, sales forecasting, and stock market forecasting.

But the vanilla RNN model has a serious flaw, known as the vanishing gradient problem, which limits its accuracy on long sequences. It means the network has trouble remembering words from earlier in the sequence and instead bases its predictions mostly on the most recent ones.

To address this issue, successor models such as LSTM and GRU were introduced. These networks outperform traditional RNNs because they can maintain long-term dependencies as well as nonlinear dynamics in time series input data. Their gating mechanisms let gradients flow across many time steps with much less shrinkage, which mitigates the vanishing gradient problem.
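To see why gradients vanish in a vanilla RNN, consider how much the final hidden state depends on the initial one. The sketch below uses a scalar tanh unit with made-up weights and a constant input (all assumptions for illustration) and multiplies the per-step derivatives together, as the chain rule requires.

```python
import math

def gradient_through_time(num_steps, w_hidden=0.5, w_input=0.8, x_value=0.5):
    """Return d h_T / d h_0 for a scalar tanh RNN fed a constant input."""
    hidden_state = 0.0
    gradient = 1.0
    for _ in range(num_steps):
        hidden_state = math.tanh(w_input * x_value + w_hidden * hidden_state)
        # Chain rule: each step multiplies by d h_t / d h_{t-1} = w_hidden * (1 - h_t^2).
        gradient *= w_hidden * (1.0 - hidden_state ** 2)
    return gradient

for steps in (1, 5, 20, 50):
    print(steps, gradient_through_time(steps))
```

Because every factor here is smaller than one, the product shrinks geometrically with the number of time steps, so inputs far back in the sequence barely influence the weight updates.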

Advantages of Using ANN

Let's take a quick look at the three key benefits of artificial neural networks:

Parallel processing capability: The many nodes in an artificial neural network can compute at the same time, which lets the network handle multiple inputs or tasks at once.

Wide applications: Because neural networks are designed to let machines perform tasks that normally require human judgment, they have a wide range of applications. The technology benefits fields such as medicine, engineering, mining, and agriculture.

No data loss: Since what the network has learned is stored across the network itself rather than in a database, the loss of a few pieces of information in one place has little effect on its operation.

Challenges of Using ANN

Scalability, testing, verification, and integration of neural network systems into modern environments are major issues today. When applied to larger problems, neural network programs can become unstable. Testing and verification are a source of concern for sensitive industries such as defense, nuclear, and space research.

There is no single structured methodology for selecting, developing, training, or verifying an ANN. It is well known that an ANN's solution quality depends on the size of the training set, the number of layers, and the number of neurons in each layer.

Many people assume that the output will be more accurate when there is more data in the training set. However, this is not always true. A training set that is too small will prevent the network from learning generalized patterns from the inputs, whereas one that is large but noisy or unrepresentative can obscure those patterns and make the network susceptible to input noise.

A neural network model is often called the "ultimate black box." The lack of explanation behind its solutions is a significant disadvantage: the inability to explain why or how a solution was reached breeds distrust in the network. Because of this problem, some industries, especially the medical community, hesitate to use neural network models.

Application of ANN in Machine Learning

Today, neural networks are used in a wide range of applications across multiple industries, leveraging the power of machine learning. Their use in businesses has witnessed a sharp growth of 270% in the past few years. Here are some areas in which neural networks are extremely effective.

Speech Recognition: Artificial neural networks are now routinely used in speech recognition technology. Earlier on, statistical models, such as the Hidden Markov model, were used in speech recognition tech.

Image Recognition: This application comes under the wider category of pattern recognition. Many neural networks have been developed to recognize handwritten characters, whether letters or digits. With numerous other applications, including facial recognition in social media and the processing of satellite imagery, image recognition is a rapidly expanding field.

Text Classification: Many applications, including web search, information filtering, language identification, readability testing, and sentiment analysis, depend on text classification.

Forecasting: A neural network can forecast outcomes by examining historical trends. Neural networks are routinely used in applications in the fields of banking, stock markets, and weather forecasting. More research is underway in this area.

Social media: Neural networks are commonly used to analyze user behavior. Massive amounts of user-generated content can be processed and analyzed by a neural network. Every tap a user makes within an app is meant to yield insightful information. Using that data, targeted advertisements are then sent out based on user activity, preferences, and purchasing behavior.

ANN Machine Learning Conclusion

The ability of neural networks to examine a wide range of relationships allows users to quickly model phenomena that would otherwise be difficult, if not impossible, to comprehend.

Managing the massive embedding vectors generated by deep neural networks and other machine learning models is crucial. Searching this high-dimensional data is extremely slow in a traditional database, so it is better stored in a vector database.

This is one of the many use cases of vector databases. And this is precisely where Zilliz steps in, especially if you're wondering 'what is a vector database?' and whether to explore it.

There's no denying that vector databases are the need of the hour in the era of AI. Zilliz offers a one-stop solution for challenges in handling unstructured data, especially for enterprises that build AI/ML applications that leverage vector similarity search.
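As a rough illustration of what vector similarity search means, the sketch below compares a query embedding against a handful of stored embeddings using cosine similarity. It is a brute-force toy with made-up three-dimensional vectors and hypothetical function names, not how any particular vector database is implemented; production systems rely on specialized indexes to avoid scoring every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query, embeddings):
    """Brute-force search: score every stored vector against the query."""
    return max(embeddings, key=lambda name: cosine_similarity(query, embeddings[name]))

# Made-up 3-dimensional embeddings; real models produce hundreds or thousands of dimensions.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.3, 0.1],
    "car": [0.0, 0.2, 0.9],
}
best_match = most_similar([0.0, 0.1, 1.0], embeddings)
print(best_match)
```

The cost of this approach grows with both the number of stored vectors and their dimensionality, which is the slowdown the paragraphs above refer to.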

If you want to bring the power of AI to your enterprise, get in touch with Zilliz and discuss the way ahead!
