VectorDB 101

What Is a Vector Database and How Does It Work? Implementation, Optimization & Scaling for Production Applications

A vector database stores, indexes, and searches vector embeddings generated by machine learning models for fast information retrieval and similarity search.
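To make "similarity search" concrete, here is a minimal sketch of exact (brute-force) nearest-neighbor search in plain Python. It assumes cosine similarity as the metric and uses hypothetical 3-dimensional toy embeddings. A production vector database replaces this linear scan with an approximate index such as HNSW, but the question it answers is the same: which stored vectors point in the most similar direction to the query?

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact k-nearest-neighbor search: score every stored vector, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Hypothetical toy embeddings; real models produce hundreds or thousands of dimensions.
store = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.0, 0.2, 0.95],
}
print(top_k([1.0, 0.1, 0.0], store))
```

The scan costs one similarity computation per stored vector, which is exactly the cost that ANN indexes are designed to avoid at scale.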

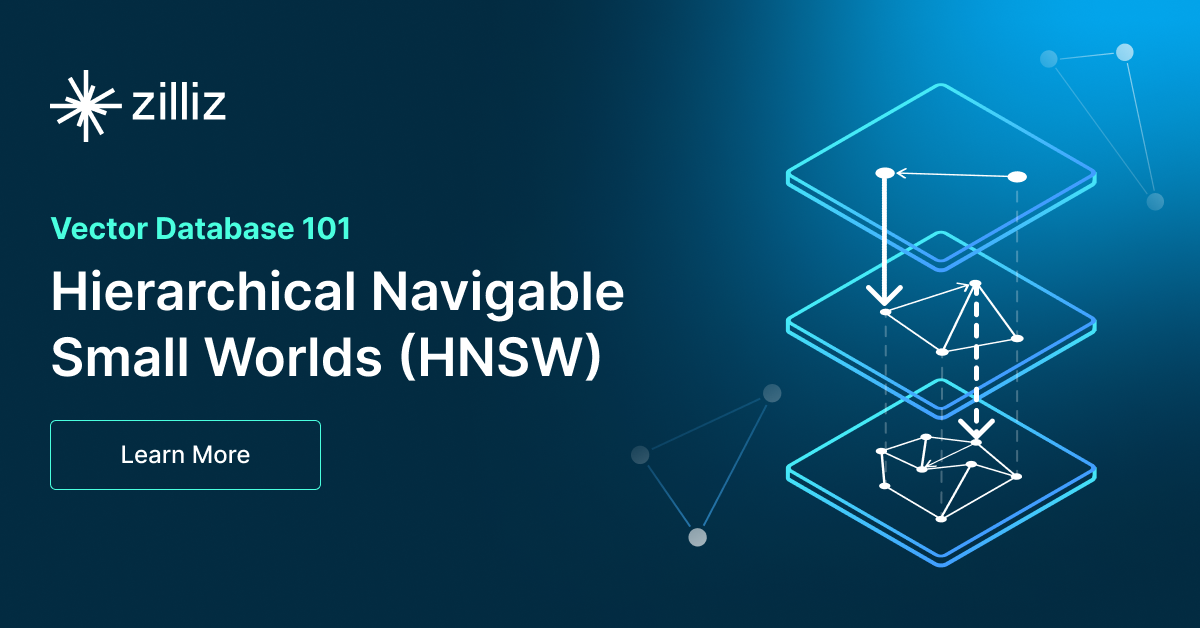

VectorDB 101

Hierarchical Navigable Small Worlds (HNSW)

Hierarchical Navigable Small World (HNSW) is a graph-based algorithm that performs approximate nearest neighbor (ANN) searches in vector databases.
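As a rough illustration of how an HNSW query proceeds, the sketch below hand-builds a two-layer graph over six 2-D points and runs only the search phase: a greedy descent through the sparse upper layer (candidate list of size 1), followed by a best-first search with a candidate list of size `ef` on the dense bottom layer. Graph construction (random level assignment, neighbor selection) is omitted, and the names `search_layer`, `hnsw_search`, and the toy graph are hypothetical, not any library's API.

```python
import heapq
import math

def search_layer(query, entry, graph, points, ef):
    """Best-first search on one layer, keeping the ef closest nodes seen so far."""
    d0 = math.dist(query, points[entry])
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap: closest unexpanded node first
    results = [(-d0, entry)]     # max-heap via negation: farthest kept result on top
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:
            break                # closest candidate is farther than the worst kept result: stop
        for nb in graph.get(node, []):
            if nb in visited:
                continue
            visited.add(nb)
            d_nb = math.dist(query, points[nb])
            if len(results) < ef or d_nb < -results[0][0]:
                heapq.heappush(candidates, (d_nb, nb))
                heapq.heappush(results, (-d_nb, nb))
                if len(results) > ef:
                    heapq.heappop(results)   # drop the farthest kept result
    return sorted((-neg_d, n) for neg_d, n in results)

def hnsw_search(query, layers, points, entry, k=1, ef=4):
    """Greedy descent through upper layers (ef=1), then a wider search on layer 0."""
    for graph in layers[:-1]:    # layers ordered from top (sparsest) to bottom (layer 0)
        entry = search_layer(query, entry, graph, points, ef=1)[0][1]
    return search_layer(query, entry, layers[-1], points, ef=max(ef, k))[:k]

# Hypothetical toy index: six points on a line.
points = {i: (float(i), 0.0) for i in range(6)}
top_layer = {0: [3], 3: [0]}                          # long-range "express" links
bottom_layer = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
layers = [top_layer, bottom_layer]

print(hnsw_search((4.2, 0.0), layers, points, entry=0, k=2))
```

The upper layer lets the query jump from node 0 straight to node 3, so the bottom-layer search starts near the answer instead of walking the whole chain; this is the intuition behind HNSW's layered design.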

Engineering

Evaluating Your Embedding Model

We'll review key considerations for selecting a model and walk through a practical example of using Arize Phoenix and RAGAS to evaluate different text embedding models.

VectorDB 101

Deploying Vector Databases in Multi-Cloud Environments

Multi-cloud deployment has become increasingly popular among services that need maximum uptime, as organizations use multiple cloud providers to optimize performance, reliability, and cost-efficiency.

Engineering

Hybrid Search: Combining Text and Image for Enhanced Search Capabilities

Milvus supports hybrid search across sparse and dense vectors, as well as multi-vector search, simplifying the vectorization and search process.
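A hybrid search runs several searches (for example, a sparse keyword search and a dense embedding search, or a text-vector search and an image-vector search) and then merges their ranked results. One common merging technique is Reciprocal Rank Fusion (RRF). The sketch below implements RRF in plain Python with the conventional constant `k=60`; it illustrates the technique only and is not Milvus's API, and the document IDs are hypothetical.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked ID lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):   # ranks are 1-based
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

sparse_hits = ["doc_a", "doc_b", "doc_c"]   # e.g. results of a sparse (keyword) vector search
dense_hits = ["doc_b", "doc_d", "doc_a"]    # e.g. results of a dense (embedding) vector search
print(reciprocal_rank_fusion([sparse_hits, dense_hits]))
```

RRF uses only ranks, not raw scores, so it can combine searches whose similarity scores live on different scales. Documents that rank well in both lists (here `doc_b` and `doc_a`) rise to the top.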

Engineering

Introduction to the Falcon 180B Large Language Model (LLM)

Falcon 180B is an open-source large language model (LLM) with 180 billion parameters, trained on 3.5 trillion tokens. Learn about its architecture and benefits in this post.

Engineering

Training Your Own Text Embedding Model

Explore how to train your own text embedding model with the `sentence-transformers` library, using a pre-trained LLM to generate the training data.

Engineering

Sentence Transformers for Long-Form Text

Learn about sentence transformers for long-form text and the Sentence-BERT architecture, and see how to use the IMDB dataset to evaluate different embedding models.

Engineering

Sparse and Dense Embeddings

Learn about sparse and dense embeddings, their use cases, and a text classification example using these embeddings.
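The difference between the two representations can be sketched in a few lines: a sparse embedding stores only its non-zero dimensions (here, simple term counts keyed by word), while a dense embedding stores a value for every dimension. The bag-of-words scheme and the dense values below are illustrative assumptions, not the output of a real embedding model.

```python
from collections import Counter

def sparse_embed(text: str) -> dict[str, float]:
    """Bag-of-words sparse vector: only terms that occur get a (non-zero) entry."""
    return {term: float(count) for term, count in Counter(text.lower().split()).items()}

def sparse_dot(a: dict[str, float], b: dict[str, float]) -> float:
    """Dot product over shared non-zero dimensions only."""
    if len(a) > len(b):
        a, b = b, a                      # iterate over the smaller vector
    return sum(weight * b.get(term, 0.0) for term, weight in a.items())

def dense_dot(a: list[float], b: list[float]) -> float:
    """Dense vectors store every dimension, so the dot product touches all of them."""
    return sum(x * y for x, y in zip(a, b))

query = sparse_embed("vector database search")
doc = sparse_embed("a vector database stores vectors for search")
print(sparse_dot(query, doc))

# Hypothetical 4-dimensional dense embeddings; real models use hundreds to thousands of dimensions.
print(dense_dot([0.1, 0.3, -0.2, 0.7], [0.2, 0.1, -0.4, 0.6]))
```

Note that "vectors" in the document does not match "vector" in the query: exact-term sparse vectors capture lexical overlap, whereas dense embeddings are intended to capture semantic similarity even when the wording differs.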