Building RAG with Zilliz Cloud and AWS Bedrock: A Narrative Guide

A comprehensive guide on how to use Zilliz Cloud and AWS Bedrock to build RAG applications

Retrieval-Augmented Generation (RAG) is an AI framework that combines information retrieval with natural language processing (NLP) to enhance text generation. In this system, a language model is augmented with a retrieval mechanism that enables it to search a knowledge base or external database in response to a query. This way, it can incorporate up-to-date and relevant information into its responses, resulting in more accurate and contextually rich outputs.

Zilliz Cloud is a platform that provides tools for managing, analyzing, and retrieving data efficiently. Available on the AWS Marketplace and built on top of the open source Milvus vector database, Zilliz Cloud offers solutions for storing and processing large-scale vectorized data. In the context of RAG, Zilliz Cloud's vector database capabilities can be leveraged to store and search through large sets of vector embeddings, facilitating the retrieval component of the RAG framework.

AWS Bedrock is a cloud service that provides access to a variety of pre-trained foundation models. It offers managed infrastructure for deploying and scaling NLP solutions, allowing developers to integrate models for language generation, comprehension, and translation into their projects. In RAG implementations, the language models available in AWS Bedrock handle the generation step: they take the retrieved information and produce text responses that are coherent and contextually relevant.
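To make the retrieve-then-generate flow concrete before bringing in either service, here is a minimal, dependency-free sketch. It does not reflect how Zilliz Cloud or AWS Bedrock work internally: simple word overlap stands in for vector search, and every name in it (`KNOWLEDGE_BASE`, `retrieve`, `build_prompt`, `fake_generate`) is a hypothetical placeholder.

```python
# Toy illustration of the RAG flow: retrieve relevant text, then build an augmented prompt.
# Word overlap is a stand-in for vector similarity; all names here are hypothetical.

KNOWLEDGE_BASE = [
    "Milvus is an open source vector database.",
    "Zilliz Cloud is a managed service built on Milvus.",
    "Bedrock provides access to pre-trained foundation models.",
]


def tokenize(text):
    """Lowercase the text and return its words as a set, without edge punctuation."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(query, documents, k=2):
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, context_docs):
    """Combine the retrieved documents and the question into one prompt string."""
    context = "\n".join(context_docs)
    return f"Context:\n{context}\n\nQuestion: {query}"


def fake_generate(prompt):
    """Placeholder for a model call; a real system would send the prompt to an LLM."""
    return "[model response grounded in the supplied context]"


question = "What is Zilliz Cloud built on?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE, k=1))
print(prompt)
print(fake_generate(prompt))
```

The rest of this guide replaces each placeholder with a real component: an embedding model and Zilliz Cloud for retrieval, and a Bedrock-hosted model for generation.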

Let's see an example of how Zilliz Cloud integrates with AWS Bedrock

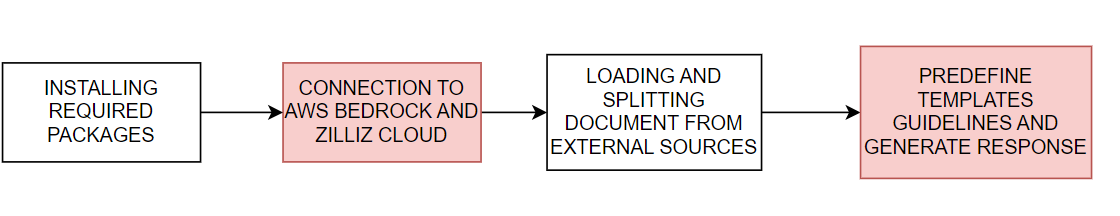

We will go through this code example that demonstrates the integration of Zilliz Cloud with AWS Bedrock to implement a RAG application as summarized on figure one.

Fig 1. Main steps in implementing a RAG application using Zilliz Cloud and AWS Bedrock

# Install the required packages (pymilvus is needed by the Zilliz vector store), then import them

! pip install --upgrade --quiet langchain langchain-core langchain-text-splitters langchain-community langchain-aws bs4 boto3 pymilvus

import bs4

import boto3

Configure the connection to AWS Bedrock and Zilliz Cloud

The next step configures the environment variables needed to connect to AWS and Zilliz Cloud services. The region and access keys for AWS are set to provide the necessary credentials and region configuration, ensuring smooth integration with AWS Bedrock services. Similarly, the Zilliz Cloud URI and API key are configured, allowing the application to securely connect to the Zilliz Cloud platform, which provides vector database functionalities essential for retrieval operations in a RAG system.

import os

# Set the AWS region and read the access keys from environment variables

REGION_NAME = "us-east-1"

AWS_ACCESS_KEY_ID = os.getenv("AWS_ACCESS_KEY_ID")

AWS_SECRET_ACCESS_KEY = os.getenv("AWS_SECRET_ACCESS_KEY")

# Set ZILLIZ cloud environment variables

ZILLIZ_CLOUD_URI = os.getenv("ZILLIZ_CLOUD_URI")

ZILLIZ_CLOUD_API_KEY = os.getenv("ZILLIZ_CLOUD_API_KEY")
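Because `os.getenv` returns `None` for a variable that is not set, a missing value would otherwise only surface later as a connection or authentication error. A small check like the following sketch can catch that early. The helper names `missing_settings` and `check_settings` are hypothetical and not part of boto3 or any Zilliz library.

```python
import os

# Names of the environment variables this guide reads.
REQUIRED_VARS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "ZILLIZ_CLOUD_URI",
    "ZILLIZ_CLOUD_API_KEY",
)


def missing_settings(env=os.environ, required=REQUIRED_VARS):
    """Return the names of required variables that are unset or empty."""
    return [name for name in required if not env.get(name)]


def check_settings(env=os.environ):
    """Raise a descriptive error if any required variable is missing."""
    missing = missing_settings(env)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))


# Example with an explicit mapping instead of the real environment.
print(missing_settings({"AWS_ACCESS_KEY_ID": "example"}))
```

Calling `check_settings()` once, before creating any clients, turns a confusing downstream failure into a clear message about which variable is missing.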

A boto3 client is created to connect to the AWS Bedrock Runtime service using the specified credentials, allowing the integration of the language models in AWS Bedrock. A ChatBedrock instance is initialized, linking it to the client and configuring it to use a specific language model, in this case `anthropic.claude-3-sonnet-20240229-v1:0`. This setup provides the necessary infrastructure for generating text responses, with model-specific settings such as a low temperature parameter to control response variability. A BedrockEmbeddings instance enables the conversion of unstructured data such as text into vector representations.

from langchain_aws import BedrockEmbeddings, ChatBedrock

# Create a boto3 client with the specified credentials

client = boto3.client(

"bedrock-runtime",

region_name=REGION_NAME,

aws_access_key_id=AWS_ACCESS_KEY_ID,

aws_secret_access_key=AWS_SECRET_ACCESS_KEY,

)

# Initialize the ChatBedrock instance for language model operations

llm = ChatBedrock(

client=client,

model_id="anthropic.claude-3-sonnet-20240229-v1:0",

region_name=REGION_NAME,

model_kwargs={"temperature": 0.1},

)

# Initialize the BedrockEmbeddings instance for handling text embeddings

embeddings = BedrockEmbeddings(client=client, region_name=REGION_NAME)
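The effect of a low temperature is easier to see with numbers. Sampling temperature is commonly described as dividing a model's raw scores (logits) by the temperature before applying a softmax. The sketch below illustrates that general idea with made-up logits; it is not a description of how Bedrock or any particular model implements sampling.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, dividing by temperature first."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]

for temperature in (1.0, 0.1):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At a temperature of 0.1 almost all of the probability mass lands on the highest-scoring candidate, which is why low values give more repeatable answers, a sensible default when responses should stick closely to retrieved context.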

Gathering and Processing Information

With the embedding model instantiated, the next step is to load data from external sources. A WebBaseLoader instance fetches content from specified web pages; here it is configured to load a blog post about AI agents. The loader uses BeautifulSoup's `SoupStrainer` to parse only the elements with the classes "post-content," "post-title," and "post-header," so that navigation and other unrelated page content is left out. Calling `load()` returns the page as a collection of documents. A RecursiveCharacterTextSplitter instance then splits those documents into chunks of up to 2,000 characters with a 200-character overlap, which keeps each piece small enough to embed and pass to the language model while preserving some context across chunk boundaries.

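from langchain_community.document_loaders import WebBaseLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

# Create a WebBaseLoader instance to load documents from web sources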

loader = WebBaseLoader(

web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),

bs_kwargs=dict(

parse_only=bs4.SoupStrainer(

class_=("post-content", "post-title", "post-header")

)

),

)

# Load documents from web sources using the loader

documents = loader.load()

# Initialize a RecursiveCharacterTextSplitter for splitting text into chunks

text_splitter = RecursiveCharacterTextSplitter(chunk_size=2000, chunk_overlap=200)

# Split the documents into chunks using the text_splitter

docs = text_splitter.split_documents(documents)
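To show what `chunk_size` and `chunk_overlap` mean, here is a deliberately simplified splitter. It cuts purely by character position, whereas RecursiveCharacterTextSplitter also tries to break on separators such as paragraph and line boundaries, so treat this as an illustration of overlap rather than a reimplementation. The function name `split_with_overlap` is hypothetical.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    """Split text into fixed-size character chunks that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
for chunk in split_with_overlap(sample, chunk_size=10, chunk_overlap=3):
    print(chunk)
```

Each chunk begins with the last three characters of the previous one. In the real pipeline the same idea applies at a larger scale: the 200-character overlap reduces the chance that a sentence needed to answer a question is cut in half at a chunk boundary.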

Generating Responses

A predefined prompt template guides the structure of each response, instructing the AI to use statistical information and numbers wherever possible, and to avoid fabricating answers when it lacks the necessary information.

from langchain_core.prompts import PromptTemplate

# Define the prompt template for generating AI responses

PROMPT_TEMPLATE = """

Human: You are a financial advisor AI system that answers questions using fact-based and statistical information when possible.

Use the following pieces of information to provide a concise answer to the question enclosed in <question> tags.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

<context>

{context}

</context>

<question>

{question}

</question>

The response should be specific and use statistics or numbers when possible.

Assistant:"""

# Create a PromptTemplate instance with the defined template and input variables

prompt = PromptTemplate(

template=PROMPT_TEMPLATE, input_variables=["context", "question"]

)
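It can help to see what the model actually receives once the placeholders are filled in. The sketch below uses plain `str.format` on a shortened, hypothetical template to mimic the substitution step. The context and question strings are invented for illustration, and LangChain's PromptTemplate does more than this (for example, validating input variables).

```python
# Shortened, hypothetical version of the prompt used in this guide.
TEMPLATE = """Use the following context to answer the question.

<context>
{context}
</context>

<question>
{question}
</question>"""


def render_prompt(context, question):
    """Fill the {context} and {question} placeholders in the template."""
    return TEMPLATE.format(context=context, question=question)


rendered = render_prompt(
    context="Agents can reflect on past actions to improve future ones.",
    question="What is self-reflection?",
)
print(rendered)
```

Printing the rendered prompt this way is a quick debugging aid: if an answer looks wrong, checking whether the relevant passage appears inside the `<context>` tags shows whether the problem lies in retrieval or in generation.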

A Zilliz vector store is then initialized from the document chunks and the embeddings instance, connecting to Zilliz Cloud with the URI and API key configured earlier. `from_documents` embeds each chunk and stores the resulting vectors so that chunks similar to a query can be retrieved quickly. Note that `drop_old=True` drops any existing collection with the same name before inserting. The retriever returned by `as_retriever()` fetches the chunks most relevant to a question, and `format_docs` joins their text, separated by blank lines, into a single context string for the prompt.

from langchain_community.vectorstores import Zilliz

# Initialize Zilliz vector store from the loaded documents and embeddings

vectorstore = Zilliz.from_documents(

documents=docs,

embedding=embeddings,

connection_args={

"uri": ZILLIZ_CLOUD_URI,

"token": ZILLIZ_CLOUD_API_KEY,

"secure": True,

},

auto_id=True,

drop_old=True,

)

# Create a retriever for document retrieval and generation

retriever = vectorstore.as_retriever()

# Define a function to format the retrieved documents

def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)
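Conceptually, the retriever embeds the question and returns the stored chunks whose vectors are closest to it. The sketch below shows that idea with brute-force cosine similarity over tiny, hand-written vectors. The vectors, texts, and the `top_k` helper are hypothetical, and a vector database such as Zilliz Cloud would normally use an index and whichever similarity metric the collection is configured with rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, indexed_chunks, k=2):
    """Return the text of the k chunks whose vectors are most similar to the query."""
    ranked = sorted(
        indexed_chunks,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Hypothetical 3-dimensional embeddings; real embedding models produce far larger vectors.
indexed_chunks = [
    ("Agents plan by decomposing tasks.", [0.9, 0.1, 0.0]),
    ("Self-reflection lets agents learn from mistakes.", [0.1, 0.9, 0.1]),
    ("Vector stores index embeddings.", [0.0, 0.1, 0.9]),
]
query_vector = [0.2, 0.8, 0.1]

# Join the retrieved chunks the same way format_docs does.
context = "\n\n".join(top_k(query_vector, indexed_chunks, k=2))
print(context)
```

The joined string plays the role of the `{context}` value in the prompt, with the most similar chunk first.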

Finally, we define a complete RAG chain and demonstrate its usage. The chain first retrieves the documents relevant to the user's query from the vector store and formats them with `format_docs`, then inserts them, together with the question, into the prompt template. The filled-in prompt is passed to the language model, and the model's output is parsed into a plain string and returned to the user.

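from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

# Define the RAG (Retrieval-Augmented Generation) chain for AI response generation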

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)

# rag_chain.get_graph().print_ascii()

# Invoke the RAG chain with a specific question and retrieve the response

res = rag_chain.invoke("What is self-reflection of an AI Agent?")

print(res)

A response from the model will look something like this.

Self-reflection is a vital capability that allows autonomous AI agents to improve iteratively by analyzing and refining their past actions, decisions, and mistakes. Some key aspects of self-reflection for AI agents include:

1. Evaluating the efficiency and effectiveness of past reasoning trajectories and action sequences to identify potential issues like inefficient planning or hallucinations (generating consecutive identical actions without progress).

2. Synthesizing observations and memories from past experiences into higher-level inferences or summaries to guide future behavior.
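The `|` syntax used to build `rag_chain` may look unusual if you have not seen it before. LangChain overloads the operator so that the output of one step becomes the input of the next. The following sketch imitates that behavior with a tiny hypothetical `Step` class and stand-in functions. It is a simplification for illustration and not LangChain's actual implementation.

```python
class Step:
    """Wrap a function so that steps can be chained with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # Build a new step that runs self first, then feeds its result to other.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value):
        """Run the wrapped function (or composed pipeline) on one input."""
        return self.func(value)


# Hypothetical stand-ins for the retriever, prompt, model, and output parser.
gather = Step(lambda question: {"context": "retrieved text", "question": question})
fill_prompt = Step(
    lambda inputs: f"Context: {inputs['context']}\nQuestion: {inputs['question']}"
)
fake_llm = Step(
    lambda prompt: {"content": f"Answer derived from a {len(prompt)}-character prompt"}
)
to_text = Step(lambda message: message["content"])

chain = gather | fill_prompt | fake_llm | to_text
print(chain.invoke("What is self-reflection of an AI Agent?"))
```

Reading the real chain the same way, left to right, gives the order of operations: gather context and question, fill the prompt, call the model, and extract the text.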

Benefits of Zilliz Cloud Integration and AWS Bedrock

As shown in Table 1, Zilliz Cloud and AWS Bedrock integrate seamlessly, providing efficiency, scalability, and accuracy for RAG applications. This synergy enables the development of comprehensive solutions that handle large datasets, offering more accurate responses and simplifying the implementation of RAG chains.

| Aspect | Zilliz Cloud | AWS Bedrock | Synergy & Benefits |
| --- | --- | --- | --- |
| Storage and Retrieval | Provides a managed vector database, built on Milvus, that stores and organizes data as vectors, facilitating quick retrieval. | Offers integration with various language models, which can utilize retrieved data to generate responses. | The combination of Zilliz Cloud's vector storage and AWS Bedrock's NLP capabilities enhances the efficiency of AI-driven queries, enabling rapid access to relevant information for generation. |
| Scalability | Zilliz Cloud's infrastructure supports large-scale data storage and retrieval, allowing efficient management of vast datasets. | AWS Bedrock offers scalable NLP solutions, accommodating diverse models and handling large volumes of queries. | This integration provides scalability in both data storage and language generation, making it well suited to projects that handle substantial datasets and require frequent retrieval and processing. |
| Accuracy | Zilliz Cloud enables precise data retrieval through vector embeddings, so that relevant information is fetched accurately. | AWS Bedrock's language models can incorporate retrieved information into responses, providing coherent and contextually accurate outputs. | Grounding generation in retrieved data helps reduce hallucinations and supports accurate, fact-based responses, improving the quality of AI-driven queries and data retrieval. |
| Implementation Ease | Zilliz Cloud offers a straightforward API and integration with Python libraries, simplifying the setup of a vector store. | AWS Bedrock provides easy integration with pre-trained models and flexible configurations for NLP tasks. | This combination offers a simplified and efficient approach to implementing RAG chains, making it easier to build comprehensive solutions for various applications. |

Table 1. Benefits of integrating Zilliz Cloud and AWS Bedrock

Conclusion

This post demonstrated how to integrate Zilliz Cloud and AWS Bedrock to implement a RAG system. We saw how Zilliz Cloud's vector database, built on Milvus, provides scalable storage and retrieval for vector embeddings, while AWS Bedrock offers pre-trained models for language generation. The code example walked through configuring connections to both services, loading data from an external source, splitting it into chunks, and constructing a complete RAG chain that retrieves relevant information and integrates it into coherent responses. Grounding the model's answers in retrieved content helps reduce hallucinations and inaccuracies, and shows how language models and vector databases complement each other.

We encourage you to try the example and consider how you might apply similar techniques in your own projects, in domains ranging from financial advising to customer support and beyond.
