Smarter Autoscaling in Zilliz Cloud: Always Optimized for Every Workload

AI workloads don’t follow smooth, predictable curves. One moment, your vector database is comfortably serving queries; the next, a viral campaign, a fresh data ingestion, or a routine 9 AM login surge pushes usage into the red zone. Teams are forced to choose: over-provision and waste budget, or under-provision and risk bottlenecks.

With the latest upgrade, Zilliz Cloud introduces smarter autoscaling—a fully automated, more streamlined, elastic resource management system. It scales up instantly to maintain performance and scales down automatically to cut waste, eliminating guesswork and keeping your database right-sized under any workload.

What’s New in Autoscaling and How It Works

Zilliz Cloud has long supported automatic scale-up, ensuring performance during sudden traffic spikes. Now, with this release, scaling down is automatic too—closing the loop on cost optimization. Instead of tuning thresholds or guessing when to adjust, you simply set a minimum and maximum CU range, and Zilliz Cloud continuously optimizes capacity in real time. That means:

- Lower costs – resources contract automatically when demand falls.

- Consistent performance – resources expand instantly when demand surges.

- Simpler operations – no more manual threshold management or constant reconfiguration.

Under the hood, autoscaling is powered by two complementary modes covering the full spectrum of real-world workload patterns:

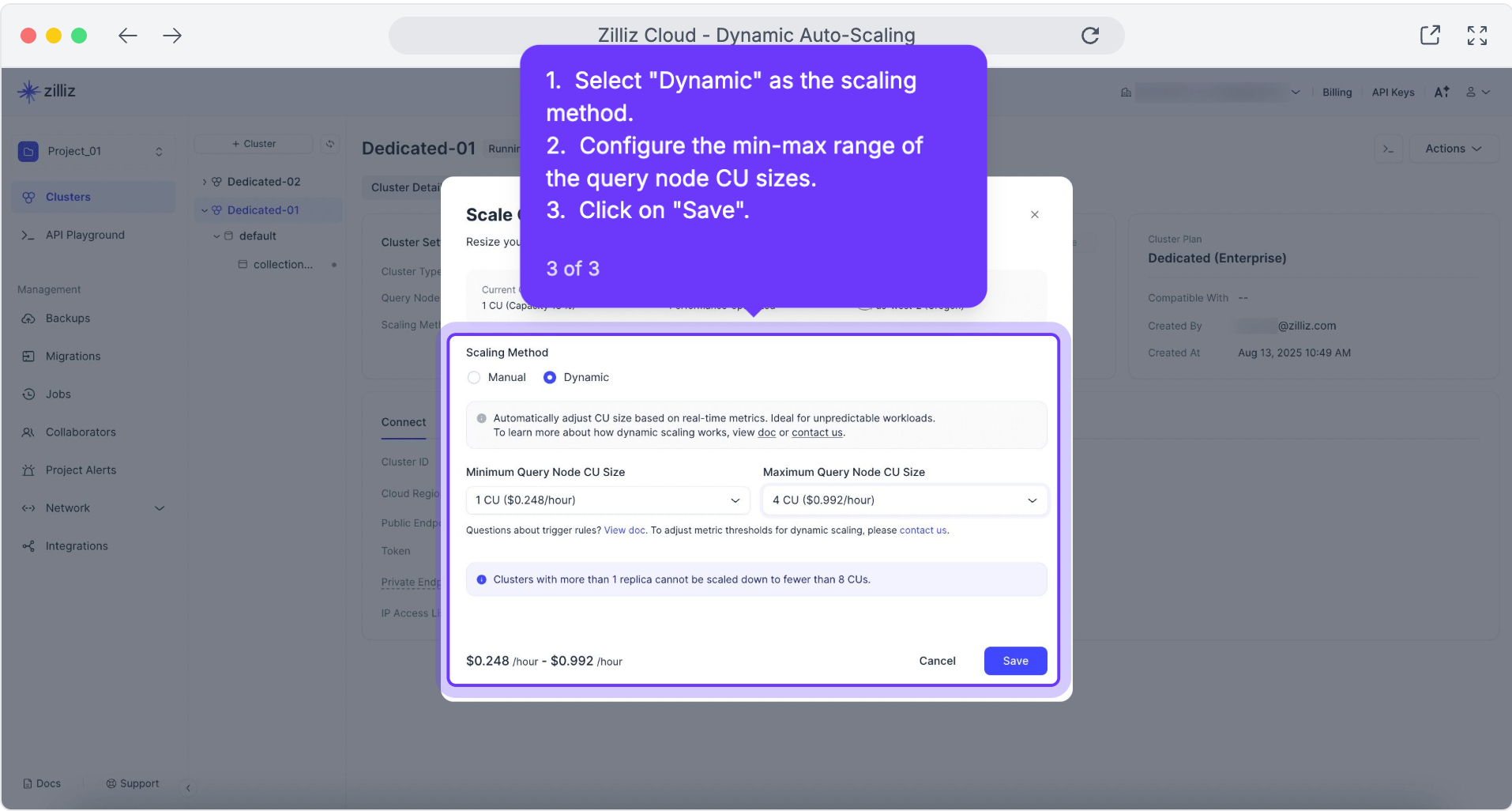

Dynamic Scaling – This is the system’s reactive intelligence. By continuously monitoring real-time CU (Compute Unit) utilization, Zilliz Cloud automatically scales resources up during unpredictable spikes such as heavy ingestion or complex query loads and scales back down when demand subsides. The result is consistent performance without the waste of permanent over-provisioning.
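To make the reactive loop concrete, here is a minimal sketch of a utilization-driven scaling decision. It is not Zilliz Cloud's actual algorithm, which this post does not describe: the function names, the 70%/30% thresholds, and the doubling/halving step are all assumptions chosen for illustration. The only part taken from the text is that capacity always stays inside the configured minimum and maximum CU range.

```python
def next_cu(current_cu: int, utilization: float, min_cu: int, max_cu: int,
            scale_up_at: float = 0.70, scale_down_at: float = 0.30) -> int:
    """Pick the CU count for the next interval.

    `utilization` is the fraction (0.0 to 1.0) of current capacity in use.
    Thresholds and the doubling/halving step are hypothetical values.
    """
    if utilization >= scale_up_at:
        target = current_cu * 2      # demand is high: grow
    elif utilization <= scale_down_at:
        target = current_cu // 2     # demand is low: shrink
    else:
        target = current_cu          # inside the comfortable band: hold
    # Never leave the user-defined CU range.
    return max(min_cu, min(max_cu, target))


def simulate(demand_in_cu, min_cu: int, max_cu: int):
    """Replay a demand trace (expressed in CUs) and record the CU count chosen."""
    cu = min_cu
    history = []
    for demand in demand_in_cu:
        utilization = min(1.0, demand / cu)
        cu = next_cu(cu, utilization, min_cu, max_cu)
        history.append(cu)
    return history


if __name__ == "__main__":
    # A spike (for example, heavy ingestion) followed by a quiet period.
    print(simulate([1, 3, 6, 6, 2, 1, 1], min_cu=2, max_cu=8))
```

In this toy trace the cluster grows while demand climbs, holds at the configured maximum, and then shrinks back to the minimum once demand subsides.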

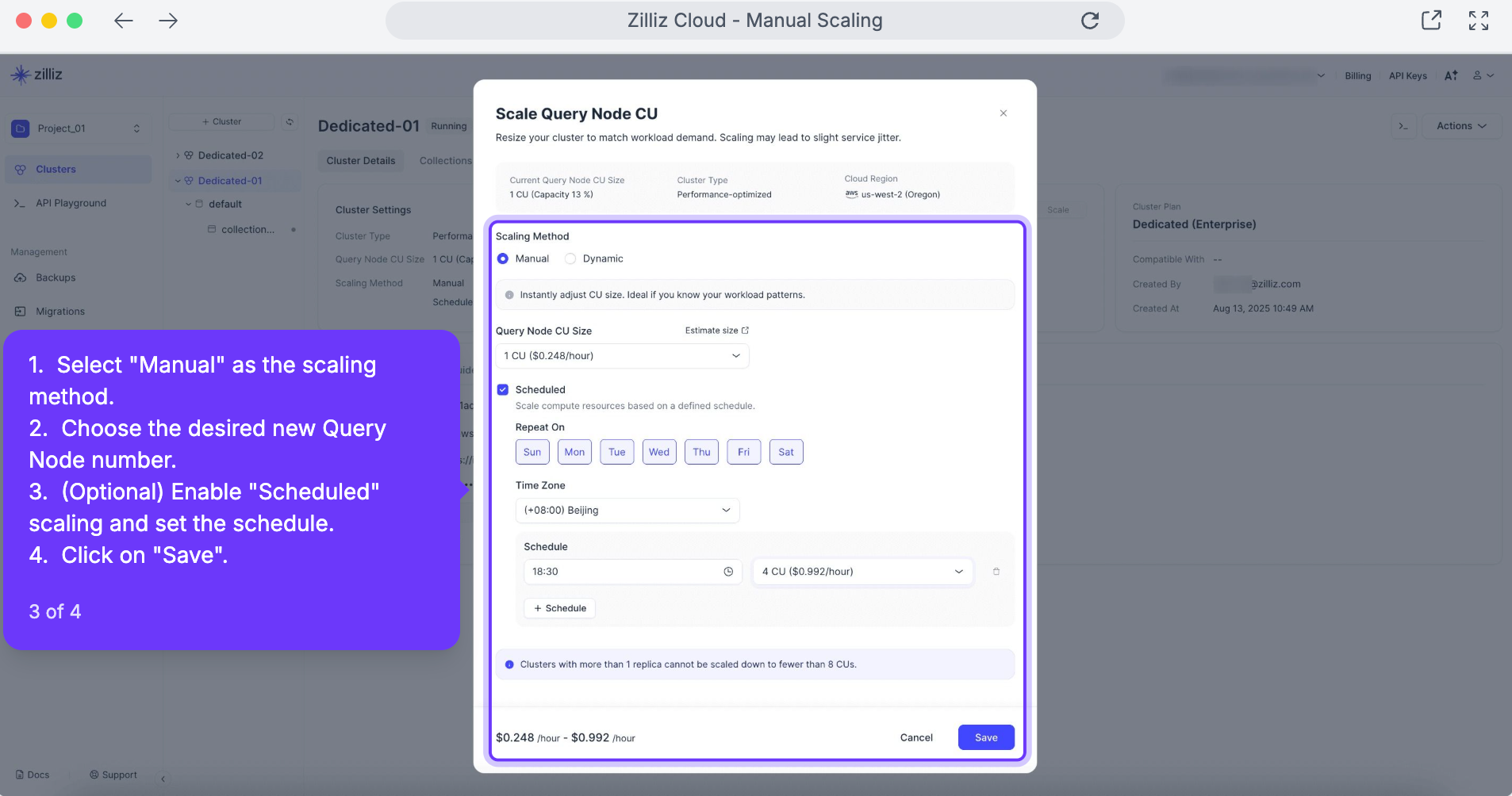

Scheduled Scaling – This is the proactive counterpart. Many workloads follow predictable patterns, such as morning login surges or peak business hours. With scheduled scaling, you can pre-define adjustments for both CUs and Replicas based on time windows. This ensures your system is fully prepared for expected traffic while avoiding unnecessary costs during off-peak hours.
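One way to picture a schedule is as a list of time windows, each mapped to a replica count, with a baseline used outside every window. The sketch below models only that idea; the `Window` type, the `replicas_at` helper, the 10:00 end time, and the replica counts are hypothetical and do not represent the Zilliz Cloud console or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Window:
    """A daily time window and the replica count to run during it (illustrative)."""
    start: time
    end: time
    replicas: int


def replicas_at(now: time, windows: list[Window], baseline: int) -> int:
    """Return the replica count that applies at `now`.

    The first matching window wins; outside all windows the baseline applies.
    Windows are half-open: start is inclusive, end is exclusive.
    """
    for window in windows:
        if window.start <= now < window.end:
            return window.replicas
    return baseline


# Assumed schedule: extra replicas shortly before a 9 AM surge, back to baseline at 10:00.
morning_rush = [Window(start=time(8, 50), end=time(10, 0), replicas=3)]

if __name__ == "__main__":
    for t in (time(8, 49), time(9, 0), time(10, 0)):
        print(t, replicas_at(t, morning_rush, baseline=1))
```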

Note: You can still scale manually at any time.

Together, these capabilities ensure your vector database always has the right resources at the right time—without wasted spend or performance risks.

Practical Use Cases for Autoscaling

To make this feature concrete, let’s look at three common use cases where autoscaling delivers measurable business value.

1. Flash Sale Frenzy

An e-commerce platform launches a two-hour flash sale at noon. Traffic floods in, putting heavy pressure on vector-powered “similar products” and recommendation APIs. Without scaling, the system may falter at the very moment revenue depends on it.

Solution: With Scheduled Replica Scaling, replicas can be increased 4× at 11:50 AM—just before the event begins—and return to baseline at 2:05 PM. This ensures smooth performance during the peak window, while guaranteeing you only pay for capacity when it’s needed.
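A quick back-of-the-envelope calculation shows why the schedule matters. The sketch below compares replica-hours for one day when the 4× boost runs only from 11:50 AM to 2:05 PM versus running at 4× all day. The baseline of one replica, the calendar date, and the assumption that cost is proportional to replica-hours are illustrative, not pricing facts.

```python
from datetime import datetime


def replica_hours(baseline: int, multiplier: int,
                  start: datetime, end: datetime,
                  day_hours: float = 24.0) -> float:
    """Replica-hours for one day with a single boosted window."""
    boosted_hours = (end - start).total_seconds() / 3600
    boosted = baseline * multiplier * boosted_hours
    normal = baseline * (day_hours - boosted_hours)
    return boosted + normal


# The date is arbitrary; only the times of day come from the scenario above.
start = datetime(2025, 1, 1, 11, 50)
end = datetime(2025, 1, 1, 14, 5)

scheduled = replica_hours(baseline=1, multiplier=4, start=start, end=end)
always_on = 1 * 4 * 24.0

if __name__ == "__main__":
    print(f"scheduled: {scheduled} replica-hours")   # 30.75
    print(f"always 4x: {always_on} replica-hours")   # 96.0
```

Under these assumptions the scheduled boost uses 30.75 replica-hours for the day, against 96 if the cluster stayed at 4× around the clock.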

2. Morning Login Surge

A company’s AI knowledge base sees hundreds of employees logging in at 9 AM each weekday. The surge overwhelms query capacity, slowing down RAG chatbot responses and creating a frustrating start to the workday.

Solution: Scheduled Scaling allows query node replicas to expand automatically at 8:50 AM and contract again after the rush. It’s like opening extra lanes on a highway before rush hour—keeping response times fast without wasting resources once traffic clears.

3. Midnight Catalog Refresh

A data pipeline initiates a large, unscheduled reindexing job overnight. The process consumes memory and competes with live traffic, slowing searches and even risking timeouts.

Solution: With Dynamic CU Scaling, Zilliz Cloud responds in real time as CU utilization rises, automatically adding CUs to absorb the workload. Once indexing completes, resources scale back down. Live queries remain fast, ingestion completes smoothly, and no manual intervention is required.

What’s Next?

This release is a major step forward, but it’s also the foundation for something bigger: a vector database that manages resources entirely on its own.

Currently, we are actively exploring more granular scaling strategies in Zilliz Cloud. Different workloads demand different trade-offs. Some prioritize cost efficiency, others require performance headroom at all times. We’re envisioning scaling “profiles” that let you define a preferred strategy—conservative for background jobs, balanced for general workloads, or aggressive for high-stakes launches. This would give teams fine-grained control while reducing operational guesswork.

Our long-term goal is to develop a resource management framework that is both autonomous and adaptable, providing you with confidence that your vector database will always match capacity to demand, with zero manual effort.

Getting Started with Autoscaling in Zilliz Cloud

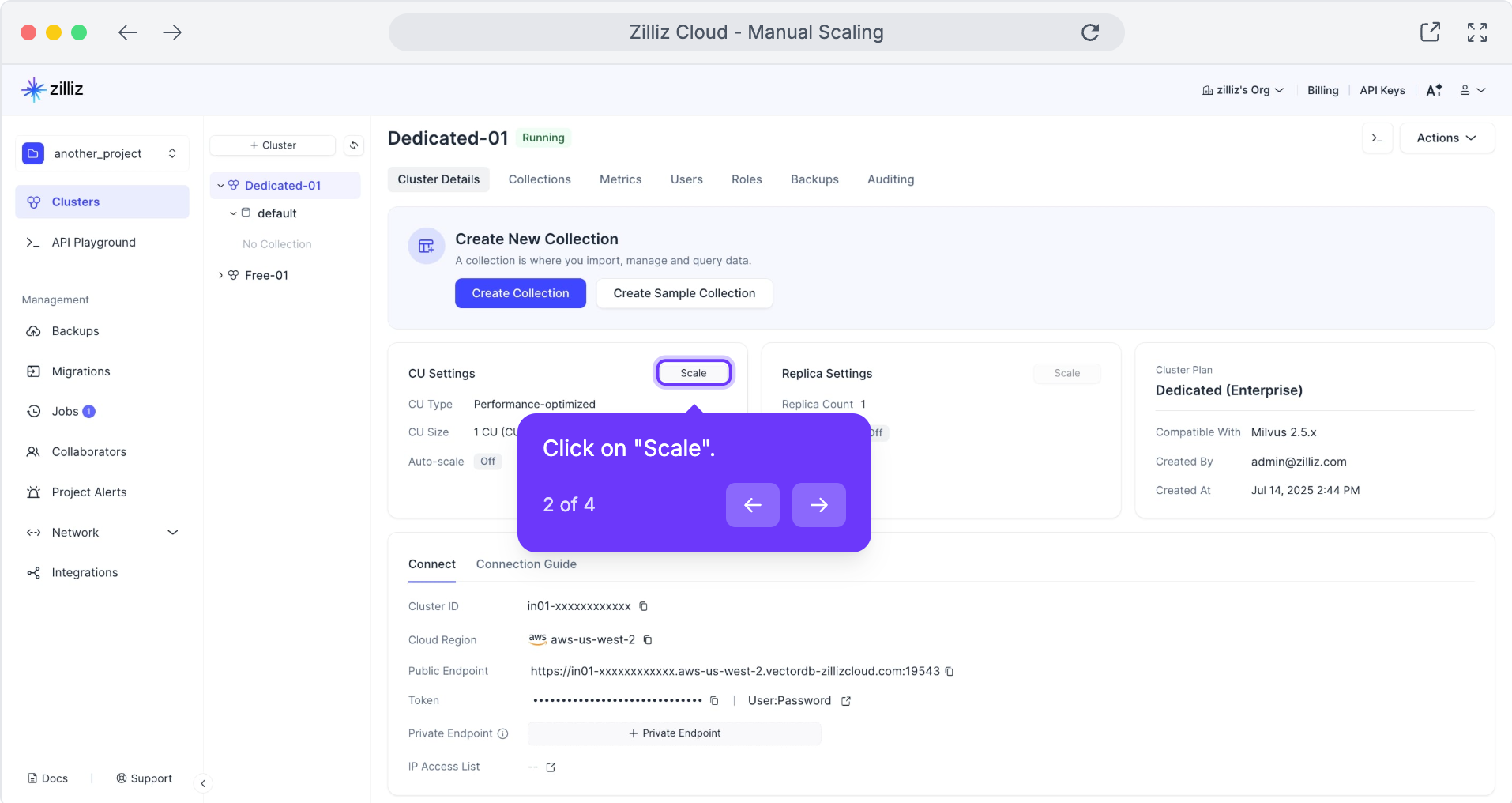

Autoscaling is live in Zilliz Cloud, and you can start using it right now. Here’s how to try it for yourself:

1. Log in to the Zilliz Cloud console. Go to your project and select the cluster you want to manage.

2. Set your CU range. Define the minimum and maximum Compute Units (CUs) for your workload. Autoscaling will automatically expand and contract resources within this range.

3. Choose your scaling mode.

   - Use Dynamic Scaling for unpredictable workloads (e.g., data ingestion, sudden query spikes).

   - Use Scheduled Scaling for predictable cycles (e.g., morning login surges, flash sales).

   - You can still scale manually at any time if you want immediate adjustments.

4. Watch it in action. Monitor how your cluster scales up and down in real time, and fine-tune as you learn more about your workload.

With just a few clicks, your database will stay right-sized without constant tuning. You’ll see faster performance during peaks, lower costs during lulls, and fewer operational headaches along the way.

Tip: Start with a wider CU range to observe how autoscaling behaves, then fine-tune the limits as you gain a deeper understanding of your workload.
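As one possible way to act on that tip, the sketch below derives a tighter CU range from CU counts observed while running with a wide range. This is a simple heuristic, not official sizing guidance: the `suggest_cu_range` helper and the 20% headroom factor are assumptions, and it ignores any restrictions the console may place on allowed CU values.

```python
import math


def suggest_cu_range(observed_cu, headroom: float = 1.2):
    """Suggest (min_cu, max_cu) from CU counts seen during an observation period.

    min_cu is the lowest observed value (at least 1);
    max_cu is the observed peak plus a safety margin, rounded up.
    """
    if not observed_cu:
        raise ValueError("need at least one observation")
    low = min(observed_cu)
    peak = max(observed_cu)
    min_cu = max(1, math.floor(low))
    max_cu = max(min_cu, math.ceil(peak * headroom))
    return min_cu, max_cu


if __name__ == "__main__":
    # Hypothetical CU counts sampled over a week of autoscaling.
    print(suggest_cu_range([2, 2, 3, 6, 7, 3, 2]))
```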

👉 For more information, check our autoscaling documentation.

👉 Have questions? Our support team is here to help.

More Than Autoscaling: Enterprise-Ready Capabilities in Zilliz Cloud

Autoscaling is just one piece of what makes Zilliz Cloud the most complete and production-ready vector database service. In recent releases, we’ve also introduced SSO GA, audit logs, the Milvus 2.6 private preview, and a growing set of enterprise-grade features designed to meet the needs of teams building AI at scale. (See our launch blog for details.)

Built on Milvus, Zilliz Cloud delivers a fully managed experience with:

- Elastic scaling & cost efficiency – One-click deployment, serverless autoscaling, and pay-as-you-go pricing.

- Advanced AI search – Vector, full-text, and hybrid (sparse + dense) search with metadata filtering, dynamic schema, and multi-tenancy.

- Natural language querying – MCP server support for intuitive queries without complex APIs.

- Enterprise-grade reliability & security – 99.95% SLA, SOC 2 Type II and ISO 27001 certifications, GDPR compliance, HIPAA readiness, RBAC, BYOC, and now audit logs.

- Global availability – Deployments across AWS, GCP, and Azure with sub-100ms latency worldwide.

- Seamless migration – Built-in tools to move from Pinecone, Qdrant, Elasticsearch, PostgreSQL, OpenSearch, or on-prem Milvus.

Together, these capabilities ensure that Zilliz Cloud isn’t just a vector database service—it’s a production-ready foundation for enterprise AI applications.
