Apache Cassandra vs. Aerospike: Choosing the Right Vector Database for Your AI Applications

As AI-driven applications become more prevalent, developers and engineers face the challenge of selecting the right database to handle vector data efficiently. Two popular options in this space are Apache Cassandra and Aerospike. This article compares the two to help you decide which one better fits your vector database needs.

What is a Vector Database?

Before we compare Apache Cassandra and Aerospike, let's first explore the concept of vector databases.

A vector database is specifically designed to store and query high-dimensional vector embeddings, which are numerical representations of unstructured data. These vectors encode complex information, such as text's semantic meaning, images' visual features, or product attributes. By enabling efficient similarity searches, vector databases play a pivotal role in AI applications, allowing for more advanced data analysis and retrieval.
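At its core, similarity search scores a query embedding against stored embeddings and returns the closest matches. The sketch below does this exactly, by brute force, using cosine similarity over hand-written toy vectors; the product names and three-dimensional embeddings are made up for illustration. Vector databases replace this linear scan with approximate indexes such as HNSW so that query cost does not grow with every stored item.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], corpus: Dict[str, Sequence[float]], k: int) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k most similar."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in corpus.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real models emit hundreds of dimensions.
corpus = {
    "red running shoe": [0.9, 0.1, 0.0],
    "blue running shoe": [0.8, 0.2, 0.1],
    "cast-iron skillet": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.1, 0.0], corpus, k=2))
```

The two shoes come back first because their vectors point in nearly the same direction as the query, while the skillet's vector is almost orthogonal to it.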

Vector databases are adopted in many use cases, including e-commerce product recommendations, content discovery platforms, anomaly detection in cybersecurity, medical image analysis, and natural language processing (NLP) tasks. They also play a crucial role in Retrieval Augmented Generation (RAG), a technique that enhances the performance of large language models (LLMs) by providing external knowledge to reduce issues like AI hallucinations.

There are many types of vector databases available in the market, including:

- Purpose-built vector databases such as Milvus and Zilliz Cloud (fully managed Milvus).

- Vector search libraries such as Faiss and Annoy.

- Lightweight vector databases such as Chroma and Milvus Lite.

- Traditional databases with vector search add-ons capable of performing small-scale vector searches.

Apache Cassandra is a traditional NoSQL database that has evolved to include vector search capabilities as an add-on. Aerospike is a distributed NoSQL database that has also evolved to include vector search capabilities.

Apache Cassandra: Overview and Core Technology

Apache Cassandra is an open-source, distributed NoSQL database known for its scalability and availability. Its features include a masterless architecture that provides high availability and scalability, tunable consistency, and a flexible data model. With the release of Cassandra 5.0, it supports vector embeddings and vector similarity search through its Storage-Attached Indexes (SAI) feature. While this integration allows Cassandra to handle vector data, it's important to note that vector search is implemented as an extension of Cassandra's existing architecture rather than as a native feature.

Because vector search builds on that existing architecture, users can store vector embeddings alongside other data in the same tables and run similarity searches over them. This lets Cassandra support AI-driven applications while keeping its strengths in handling large-scale, distributed data.

A key component of Cassandra's vector search is SAI, a highly scalable, globally distributed index that adds column-level indexes to any vector data type column. It provides high I/O throughput for vector search as well as for other search indexing. SAI offers extensive indexing functionality: it can index queries and content (including large inputs such as documents, words, and images) to capture their semantics.

Vector search is the first feature to validate SAI's extensibility, taking advantage of its new modular design. The combination of vector search and SAI strengthens Cassandra's ability to handle AI and machine learning workloads, making it a strong contender in the vector database space.
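To make the storage-plus-index model concrete, the helper functions below assemble the three CQL statements involved in Cassandra 5.0 vector search: a table with a `vector<float, N>` column, an SAI index on that column, and an `ORDER BY ... ANN OF ... LIMIT` query. The keyspace, table, index, and function names are hypothetical. In an application you would send these statements through a driver (for example, `session.execute` in the DataStax Python driver) and bind the query vector as a parameter instead of inlining it as this sketch does.

```python
from typing import Sequence

# Hypothetical names used only for this illustration.
KEYSPACE = "shop"
TABLE = "products"
DIMENSION = 3  # must match the embedding model's output size
SIMILARITY_FUNCTIONS = {"COSINE", "DOT_PRODUCT", "EUCLIDEAN"}


def create_table_cql() -> str:
    # The vector<float, N> column keeps the embedding next to ordinary columns.
    return (
        f"CREATE TABLE IF NOT EXISTS {KEYSPACE}.{TABLE} "
        f"(id uuid PRIMARY KEY, name text, embedding vector<float, {DIMENSION}>)"
    )


def create_index_cql(similarity: str = "COSINE") -> str:
    # An SAI index on the vector column is what makes ANN queries on it possible.
    if similarity not in SIMILARITY_FUNCTIONS:
        raise ValueError(f"unsupported similarity function: {similarity}")
    return (
        f"CREATE INDEX IF NOT EXISTS {TABLE}_embedding_idx "
        f"ON {KEYSPACE}.{TABLE} (embedding) USING 'sai' "
        f"WITH OPTIONS = {{'similarity_function': '{similarity}'}}"
    )


def ann_query_cql(query_vector: Sequence[float], limit: int) -> str:
    # ORDER BY ... ANN OF returns the rows whose embeddings are closest to the query.
    if len(query_vector) != DIMENSION:
        raise ValueError(f"expected {DIMENSION} dimensions, got {len(query_vector)}")
    literal = ", ".join(repr(float(x)) for x in query_vector)
    return (
        f"SELECT id, name FROM {KEYSPACE}.{TABLE} "
        f"ORDER BY embedding ANN OF [{literal}] LIMIT {limit}"
    )


for statement in (create_table_cql(), create_index_cql(), ann_query_cql([0.1, 0.2, 0.3], 5)):
    print(statement)
```

The similarity function is fixed when the index is created, so it is worth matching it to the embedding model; DOT_PRODUCT, for instance, ranks results like cosine only when vectors are normalized to unit length.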

Aerospike: Overview and Core Technology

Aerospike is a distributed NoSQL database designed for high-performance, real-time applications. It has evolved to include support for vector indexing and searching, making it suitable for vector database use cases. This vector capability, called Aerospike Vector Search (AVS), is in Preview, and users can request early access from Aerospike.

AVS supports only Hierarchical Navigable Small World (HNSW) indexes for vector search. When records are inserted or updated through AVS, the record data, including the vector, is first written to the Aerospike Database (ASDB) and is immediately visible. To be indexed, a record must contain at least one vector in the vector field specified by an index. A single record can carry multiple vectors and belong to multiple indexes, enabling different search approaches over the same data. Aerospike recommends assigning upserted records to a specific set to simplify monitoring and operations.
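The indexing rules above can be modeled in a few lines. This sketch is not the AVS client API: `VectorIndex`, `Record`, and `indexes_for` are hypothetical names, and a record's bins are modeled as a plain dictionary. It shows that a record joins an index only when it carries a vector in that index's vector field, and that one record holding several vectors can join several indexes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class VectorIndex:
    # Hypothetical index definition: a name plus the bin (field) that holds its vector.
    name: str
    vector_field: str


@dataclass
class Record:
    key: str
    set_name: str  # Aerospike recommends a dedicated set for upserted vector records
    bins: Dict[str, object] = field(default_factory=dict)


def indexes_for(record: Record, indexes: Sequence[VectorIndex]) -> List[str]:
    """Return the names of every index this record can join.

    A record qualifies for an index only if it holds a non-empty list of floats
    in that index's vector field; one record may qualify for several indexes.
    """
    eligible = []
    for index in indexes:
        vector = record.bins.get(index.vector_field)
        if isinstance(vector, list) and vector and all(isinstance(x, float) for x in vector):
            eligible.append(index.name)
    return eligible


indexes = [
    VectorIndex("title_idx", "title_vec"),
    VectorIndex("image_idx", "image_vec"),
    VectorIndex("audio_idx", "audio_vec"),
]
product = Record(
    key="sku-42",
    set_name="products",
    bins={"title": "trail shoe", "title_vec": [0.1, 0.7, 0.2], "image_vec": [0.3, 0.1, 0.5, 0.1]},
)
print(indexes_for(product, indexes))  # ['title_idx', 'image_idx']
```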

AVS takes a distinctive approach to index construction, performing it concurrently across all AVS nodes. While vector record updates are committed directly to ASDB, index records are processed asynchronously from an indexing queue. This processing happens in batches distributed across all AVS nodes, which maximizes CPU core usage in the AVS cluster and allows ingestion to scale. Ingestion performance depends heavily on host memory and on the storage layer configuration.
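The write path and the asynchronous, batched indexing can be sketched with in-memory stand-ins. This is an illustration under stated assumptions, not Aerospike code: a dictionary plays the role of ASDB, a deque plays the indexing queue, and Python threads stand in for separate AVS nodes (in CPython they add no CPU parallelism, unlike real nodes). The point is the ordering: a record is readable as soon as `upsert` returns, while indexing happens later, in batches spread round-robin across nodes.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor
from typing import Deque, Dict, List, Sequence

# Hypothetical in-memory stand-ins for the storage layer and the indexing queue.
storage: Dict[str, List[float]] = {}
index_queue: Deque[str] = deque()


def upsert(key: str, vector: List[float]) -> None:
    """Commit the record first (immediately readable), then queue it for indexing."""
    storage[key] = vector
    index_queue.append(key)


def drain_in_batches(batch_size: int) -> List[List[str]]:
    """Pull queued keys off the indexing queue in fixed-size batches."""
    batches = []
    while index_queue:
        batches.append([index_queue.popleft() for _ in range(min(batch_size, len(index_queue)))])
    return batches


def index_batch(node: str, batch: Sequence[str]) -> Dict[str, str]:
    # Placeholder for real HNSW insertion work; records which node indexed each key.
    return {key: node for key in batch}


def build_index(nodes: Sequence[str], batch_size: int) -> Dict[str, str]:
    """Assign batches round-robin to nodes and process them concurrently."""
    batches = drain_in_batches(batch_size)
    assignments: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        futures = [
            pool.submit(index_batch, nodes[i % len(nodes)], batch)
            for i, batch in enumerate(batches)
        ]
        for future in futures:
            assignments.update(future.result())
    return assignments


for i in range(10):
    upsert(f"doc-{i}", [float(i), 1.0])
assert "doc-3" in storage  # readable before any indexing has happened
print(build_index(["avs-1", "avs-2", "avs-3"], batch_size=4))
```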

For each item in the indexing queue, AVS processes the vector for indexing, assembles the clusters for that vector, and commits them to ASDB. An index record contains a copy of the vector itself and the associated clusters for that vector at a given layer of the HNSW graph. Index construction uses Advanced Vector Extensions (AVX) for single instruction, multiple data (SIMD) parallel processing, which improves efficiency.
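To picture what "one index record per layer" means, the sketch below assigns each vector a top layer using the standard HNSW level distribution and emits one record per layer, each holding a copy of the vector plus a neighbor list. Treating Aerospike's per-layer "clusters" as neighbor lists is our assumption, and for brevity neighbors are chosen by an exact nearest-neighbor scan rather than HNSW's greedy insertion search and neighbor-selection heuristic. `M`, `IndexRecord`, and `build_index_records` are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

M = 4                         # hypothetical max neighbors kept per node per layer
LEVEL_MULT = 1 / math.log(M)  # standard HNSW level multiplier m_L = 1 / ln(M)


@dataclass
class IndexRecord:
    key: str
    layer: int
    vector: Tuple[float, ...]  # copy of the vector stored in the index record
    neighbors: List[str]       # assumed stand-in for the "clusters" at this layer


def random_level(rng: random.Random) -> int:
    # Top layer is floor(-ln(U) * m_L) with U uniform in (0, 1]: most nodes stay on layer 0.
    return int(-math.log(1.0 - rng.random()) * LEVEL_MULT)


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_index_records(vectors: Dict[str, Sequence[float]], seed: int = 7) -> List[IndexRecord]:
    rng = random.Random(seed)
    levels = {key: random_level(rng) for key in vectors}
    records = []
    for key, vec in vectors.items():
        for layer in range(levels[key] + 1):
            # Only nodes whose top layer reaches this layer can be neighbors here.
            peers = [k for k in vectors if k != key and levels[k] >= layer]
            # Exact scan for brevity; real HNSW finds candidates with a greedy graph search.
            peers.sort(key=lambda k: squared_l2(vec, vectors[k]))
            records.append(IndexRecord(key, layer, tuple(vec), peers[:M]))
    return records


vectors = {f"v{i}": [math.cos(i), math.sin(i)] for i in range(8)}
for record in build_index_records(vectors):
    print(record.key, record.layer, record.neighbors)
```

Because each node's top layer decays exponentially, upper layers hold few nodes and long-range links, which is what lets HNSW searches start coarse and refine downward.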

Because records are interconnected through their clusters, AVS issues queries during ingestion to "pre-hydrate" the index cache. These queries are not reported as requests but as reads against the storage layer. Populating the cache with relevant data in advance can improve query performance. Together, these mechanisms show how AVS builds and serves HNSW indexes so that similarity search can scale across high-dimensional vectors.
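Pre-hydration can be illustrated with a small least-recently-used (LRU) cache in front of a storage layer that counts reads. Both classes are hypothetical stand-ins rather than AVS internals; the sketch only shows the effect described above: lookups issued while ingesting a node and its neighbors appear as storage reads, and later query-time lookups for those records are served from cache.

```python
from collections import OrderedDict
from typing import Dict, List


class StorageLayer:
    """Hypothetical stand-in for ASDB that counts every read."""

    def __init__(self, records: Dict[str, List[str]]):
        self._records = records  # key -> neighbor keys held in the index record
        self.reads = 0

    def read(self, key: str) -> List[str]:
        self.reads += 1
        return self._records[key]


class IndexCache:
    """Small LRU cache in front of the storage layer."""

    def __init__(self, storage: StorageLayer, capacity: int):
        self.storage = storage
        self.capacity = capacity
        self.entries: "OrderedDict[str, List[str]]" = OrderedDict()
        self.hits = 0

    def get(self, key: str) -> List[str]:
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        value = self.storage.read(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value

    def prehydrate(self, key: str) -> None:
        # During ingestion, load the new node and its neighbors so later queries hit cache.
        for neighbor in self.get(key):
            self.get(neighbor)


asdb = StorageLayer({"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b"]})
cache = IndexCache(asdb, capacity=8)
cache.prehydrate("a")  # ingestion-time reads: a, b, c
cache.get("b")         # query-time lookups are now cache hits
cache.get("c")
print(asdb.reads, cache.hits)  # 3 2
```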

Key Differences

Apache Cassandra and Aerospike have distinct approaches to implementing vector search capabilities. While Cassandra integrates vector search into its core database using Storage-Attached Indexes (SAI), Aerospike introduces it as a separate layer (AVS) on top of its core database. This fundamental difference impacts their indexing methodologies, data handling, scalability approaches, and query optimization techniques.

In terms of data handling and storage, Cassandra leverages its wide-column store model, allowing flexible schema design: vector data is stored alongside other attributes and indexed with SAI. Aerospike uses a hybrid memory architecture that stores data in DRAM, SSD, or both, with vector data kept in the core Aerospike Database (ASDB) and index data managed separately by the AVS layer.

Both databases scale, but with different emphases. Cassandra provides linear scalability for write operations and relies on its distributed architecture to spread vector search work across nodes. In contrast, Aerospike's search layer (AVS) can be scaled independently of the storage layer to meet specific query and ingestion requirements.

The databases also differ in their approach to caching and query optimization. Cassandra utilizes its existing caching mechanisms, with SAI potentially providing additional optimizations for vector searches. Aerospike implements a dedicated caching system in the AVS layer, including pre-hydration of the index cache during ingestion to optimize query performance.

It's worth noting that the maturity of these vector search features differs between the two databases. Cassandra's vector search is part of the core database as of version 5.0, indicating a stable feature ready for production use. Aerospike's vector search (AVS) is currently in Preview, suggesting it's still evolving and may undergo changes before the final release.

Conclusion

The evolution of Apache Cassandra and Aerospike to include vector search capabilities represents a step forward in distributed databases. Both systems have approached this challenge in ways that leverage their existing strengths while addressing the growing demand for efficient handling of high-dimensional vector data. Cassandra's integration of vector search directly into its core database offers a seamless experience for users familiar with its ecosystem. In contrast, Aerospike's dedicated vector search layer promises high performance for real-time applications.

The choice between these two databases for vector search applications largely depends on specific use case requirements. Cassandra's maturity as a distributed database and its ability to handle vast amounts of distributed data make it attractive for large-scale deployments where flexibility and scalability are paramount. Its integration of vector operations with traditional database functionality could be particularly beneficial for complex, hybrid query scenarios. With its focus on low-latency, high-throughput operations, Aerospike may be better suited to use cases that demand real-time vector search. However, its Preview status means its feature set is likely to change.

When deciding between Cassandra and Aerospike, consider the following steps:

- Assess your current and future data scale and complexity.

- Evaluate your performance requirements, especially in terms of latency and throughput.

- Consider your team's expertise and familiarity with each system.

- Conduct proof-of-concept tests with your specific datasets and query patterns.

- Assess the maturity of each system's vector search capabilities and how they align with your production timeline.
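For the proof-of-concept step, two numbers per candidate usually matter most: how many of the true nearest neighbors the approximate index returns (recall@k) and how long queries take. The harness below is a minimal sketch, assuming you have already computed exact ground-truth neighbors (for example, with a brute-force scan) and can wrap each database client in a `search(query, k)` function; `fake_search` is a hypothetical placeholder for that wrapper.

```python
import statistics
import time
from typing import Callable, Dict, List, Sequence

SearchFn = Callable[[List[float], int], List[str]]


def recall_at_k(approx: Sequence[str], exact: Sequence[str], k: int) -> float:
    """Share of the true top-k neighbors that the approximate search returned."""
    return len(set(approx[:k]) & set(exact[:k])) / k


def evaluate(search: SearchFn, queries: List[List[float]],
             ground_truth: Dict[int, List[str]], k: int) -> Dict[str, float]:
    """Run every query once, recording recall@k and wall-clock latency."""
    recalls, latencies_ms = [], []
    for i, query in enumerate(queries):
        start = time.perf_counter()
        result = search(query, k)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        recalls.append(recall_at_k(result, ground_truth[i], k))
    # quantiles() needs at least two samples; fall back to the single value otherwise.
    if len(latencies_ms) > 1:
        p99 = statistics.quantiles(latencies_ms, n=100, method="inclusive")[98]
    else:
        p99 = latencies_ms[0]
    return {
        "mean_recall": statistics.mean(recalls),
        "p50_ms": statistics.median(latencies_ms),
        "p99_ms": p99,
    }


def fake_search(query: List[float], k: int) -> List[str]:
    # Hypothetical placeholder: wrap your Cassandra or AVS client call here.
    return ["a", "b", "x"][:k]


queries = [[0.1, 0.2], [0.3, 0.4]]
ground_truth = {0: ["a", "b", "c"], 1: ["a", "x", "y"]}
print(evaluate(fake_search, queries, ground_truth, k=3))
```

Running the same harness against each candidate with your own embeddings and query mix makes the recall/latency trade-off of each system directly comparable.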

As vector search becomes increasingly crucial in AI and machine learning applications, Cassandra and Aerospike are positioning themselves as viable solutions. However, the rapid pace of development in this field means that these technologies will likely continue evolving. Organizations considering either database for vector search should weigh their current needs, their future scalability requirements, and likely advances in vector search technology.

While this article provides an overview of Cassandra and Aerospike, it's crucial to evaluate these databases based on your specific use case. One tool that can assist in this process is VectorDBBench, an open-source benchmarking tool designed for comparing vector database performance. Ultimately, thorough benchmarking with specific datasets and query patterns will be essential in making an informed decision between these two powerful yet distinct approaches to vector search in distributed database systems.

Using Open-source VectorDBBench to Evaluate and Compare Vector Databases on Your Own

VectorDBBench is an open-source benchmarking tool designed for users who require high-performance data storage and retrieval systems, particularly vector databases. This tool allows users to test and compare the performance of different vector database systems such as Milvus and Zilliz Cloud (the managed Milvus) using their own datasets and determine the most suitable one for their use cases. Using VectorDBBench, users can make informed decisions based on the actual vector database performance rather than relying on marketing claims or anecdotal evidence.

VectorDBBench is written in Python and licensed under the MIT open-source license, meaning anyone can freely use, modify, and distribute it. The tool is actively maintained by a community of developers committed to improving its features and performance.

Download VectorDBBench from its GitHub repository to reproduce our benchmark results or obtain performance results on your own datasets.

Take a quick look at the performance of mainstream vector databases on the VectorDBBench Leaderboard.


- What is a Vector Database?

- Apache Cassandra: Overview and Core Technology

- Aerospike: Overview and Core Technology

- Key Differences

- Conclusion

- Using Open-source VectorDBBench to Evaluate and Compare Vector Databases on Your Own

- Further Resources about VectorDB, GenAI, and ML

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Build for the Boom: Why AI Agent Startups Should Build Scalable Infrastructure Early

Explore strategies for developing AI agents that can handle rapid growth. Don't let inadequate systems undermine your success during critical breakthrough moments.

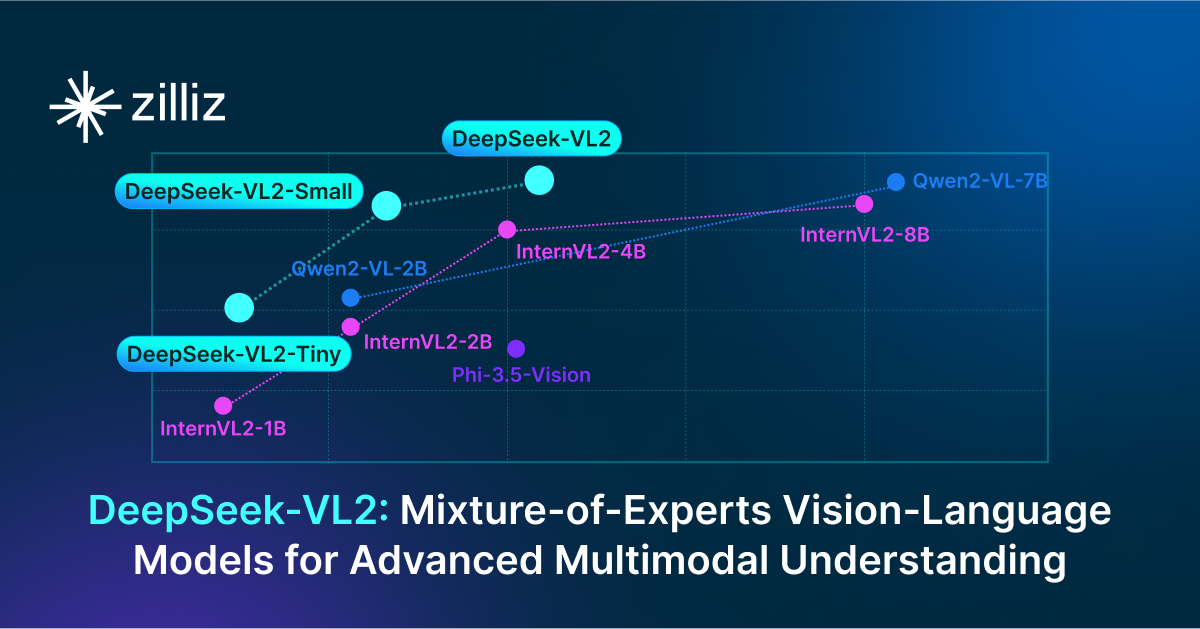

DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding

Explore DeepSeek-VL2, the open-source MoE vision-language model. Discover its architecture, efficient training pipeline, and top-tier performance.

Knowledge Injection in LLMs: Fine-Tuning and RAG

Explore knowledge injection techniques like fine-tuning and RAG. Compare their effectiveness in improving accuracy, knowledge retention, and task performance.

The Definitive Guide to Choosing a Vector Database

Overwhelmed by all the options? Learn key features to look for & how to evaluate with your own data. Choose with confidence.