Real-Time Data Streams


Have you ever wondered how some companies quickly adapt to changing business conditions and consumer expectations? The answer lies in understanding real-time data streams. With rising data volume and variety, organizations need the most up-to-date information to remain competitive and ensure a smooth customer experience.

Real-time data streaming allows users to quickly ingest the latest data and perform analysis to reveal actionable insights. Due to its versatility, real-time streaming is valuable in multiple domains, including financial services, supply chain, and e-commerce.

This post explains what real-time data streams are and how they work. It also covers their benefits, challenges, and use cases.

What is Real-time Data Streaming?

Real-time data streaming ingests and processes a continuous data flow as soon as it is generated from a source. Instead of capturing data in batches for later analysis, real-time streaming allows users to instantly store and analyze incoming data streams, delivering actionable insights.

For instance, a stock trading platform that relies on the latest market trends needs timely data on multiple financial and economic indicators. Instead of waiting for a daily or hourly summary, the platform can use real-time updates to provide the most relevant and accurate information on stock price movements, helping traders make immediate decisions.

Other examples of streaming data include:

Log files generated when a user signs in to a web or mobile application

Purchase history from e-commerce platforms

Data sent from edge devices, such as sensors and cameras, to Internet-of-Things (IoT) systems

How Does Real-Time Data Streaming Work?

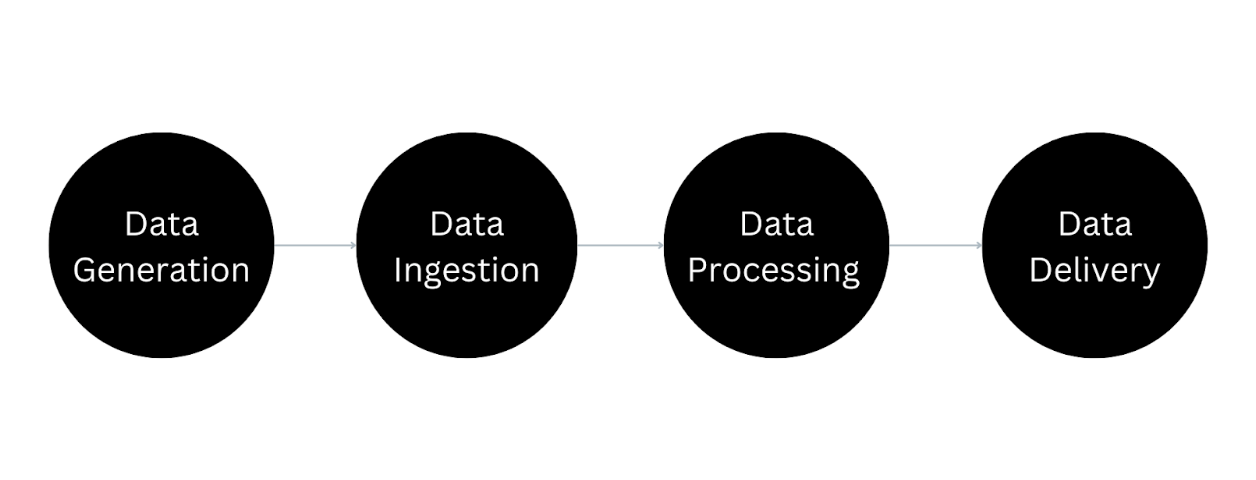

Real-time data streaming consists of multiple stages to generate, ingest, store, process, and deliver the stream to a particular destination. The following list explains these phases in more detail to help you understand how the process works.


Figure: Real-time Data Streaming

Data Generation: The first step in real-time data streaming is data generation. Data comes from different sources, such as IoT sensors, mobile devices, financial systems, or user interactions on websites. These sources produce a continuous stream of events or messages.

Data Ingestion: After generation, a streaming platform collects the incoming data flows. This is where the data enters the pipeline for further processing. Popular technologies for data ingestion include Apache Kafka, Amazon Kinesis, and Google Cloud Pub/Sub. These tools collect, store, and manage extensive data volumes generated in real time. The ingestion process ends by transferring the data from multiple sources to a storage repository such as a data warehouse, data lake, or database.

Data Processing: Automated pipelines fetch raw data from the repository and apply relevant transformations to make it usable for domain-specific applications. Transformations can include filtration, aggregation, and normalization processes. The goal is to extract meaningful insights quickly.

Data Delivery: Finally, data pipelines deliver the processed data to dashboards, alerting systems, and other management systems so that teams can take immediate action. For example, a fraud detection system can flag suspicious transactions as they happen, preventing potential financial losses.
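The four stages above can be sketched in a few lines of Python. This is only an illustrative, in-memory model: the sensor readings, the `threshold_c` parameter, and all function names are assumptions made for the example, and a standard-library `queue.Queue` stands in for a real broker such as Apache Kafka or Amazon Kinesis.

```python
import json
import queue
from typing import Iterable, Iterator, List


def generate_events() -> Iterator[str]:
    """Data generation: a hypothetical sensor emitting JSON messages."""
    readings = [21.5, 22.0, 85.0, 21.8]
    for seq, temp in enumerate(readings):
        yield json.dumps({"sensor_id": "s1", "seq": seq, "temp_c": temp})


def ingest(source: Iterable[str], buffer: queue.Queue) -> None:
    """Data ingestion: collect raw messages into a buffer (stand-in for a broker)."""
    for message in source:
        buffer.put(message)


def process(buffer: queue.Queue, threshold_c: float = 50.0) -> Iterator[dict]:
    """Data processing: parse each message and flag readings above the threshold."""
    while not buffer.empty():
        event = json.loads(buffer.get())
        event["overheated"] = event["temp_c"] > threshold_c
        yield event


def deliver(events: Iterable[dict]) -> List[str]:
    """Data delivery: turn flagged events into alert strings for a dashboard."""
    return [
        f"ALERT sensor={e['sensor_id']} seq={e['seq']} temp={e['temp_c']}"
        for e in events
        if e["overheated"]
    ]


buffer: queue.Queue = queue.Queue()
ingest(generate_events(), buffer)
alerts = deliver(process(buffer))
print(alerts)  # prints ['ALERT sensor=s1 seq=2 temp=85.0']
```

In a production pipeline each stage would typically run as a separate, continuously running service rather than as sequential function calls, but the hand-off between stages follows the same shape.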

Streaming Data vs. Streaming Process vs. Real-time Analytics

The terms streaming data, streaming process, and real-time analytics are closely related and easy to confuse.

Although all three describe parts of a real-time system, developers need to understand the differences between them to design real-time workflows well. The list below summarizes these differences.

Streaming Data

Streaming data refers to the constant flow of data generated from diverse sources, such as IoT devices, user interactions, financial transactions, or social media feeds.

The data is often unstructured or semi-structured and arrives continuously rather than in fixed batches. This raw data requires further processing before it can deliver actionable insights.

Streaming Process

The streaming process is the engine that consists of methods and technologies for collecting, processing, and analyzing streaming data in real-time. It transforms and enhances the data to help users quickly detect patterns, anomalies, and trends.

The streaming process also detects and fixes issues such as outliers, missing values, and inconsistent formats. Additional pipelines can perform more complex operations, such as aggregation and segmentation, to keep the data consistent and interpretable.
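As a rough illustration of the cleaning step described above, the sketch below drops records with missing values, rejects outliers, and normalizes inconsistent formats. The record fields (`sensor_id`, `value`, `ts`) and the plausible range of -40 to 125 are assumptions chosen for the example, not part of any particular platform.

```python
from datetime import datetime, timezone
from typing import Optional


def clean_record(raw: dict, low: float = -40.0, high: float = 125.0) -> Optional[dict]:
    """Return a normalized record, or None if the record cannot be repaired."""
    value = raw.get("value")
    if value is None:
        return None  # missing value
    try:
        value = float(value)  # inconsistent format: accept "21.5" as well as 21.5
    except (TypeError, ValueError):
        return None  # not a number at all
    if not (low <= value <= high):
        return None  # outlier outside the assumed plausible range
    ts = raw.get("ts")
    if isinstance(ts, (int, float)):
        # Normalize epoch seconds to an ISO 8601 string in UTC.
        ts = datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()
    return {"sensor_id": str(raw.get("sensor_id", "unknown")), "value": value, "ts": ts}


raw_stream = [
    {"sensor_id": "s1", "value": "21.5", "ts": 0},
    {"sensor_id": "s1", "value": None, "ts": 1},
    {"sensor_id": "s2", "value": 900, "ts": 2},
    {"sensor_id": "s2", "value": "n/a", "ts": 3},
    {"sensor_id": "s3", "value": 19, "ts": "1970-01-01T00:00:04+00:00"},
]
cleaned = [r for r in map(clean_record, raw_stream) if r is not None]
print(len(cleaned))  # prints 2
```

Whether a bad record should be dropped, repaired, or routed to a separate queue for inspection is a design choice; dropping is used here only to keep the sketch short.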

Real-time Analytics

Real-time analytics uses processed data from the streaming pipeline to generate immediate insights. This step applies mathematical and statistical techniques to compute pre-defined metrics for assessing particular situations.

Modern methods use machine learning and artificial intelligence (AI) algorithms to provide instant predictions and recommendations. For example, a stock trading application can analyze market trends in real time and give the user personalized investment strategies to maximize profits.
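Before any machine learning is involved, a pre-defined metric is often just an aggregate over a time window. The sketch below computes an average price per fixed (tumbling) window as events arrive. It assumes events are `(timestamp_seconds, value)` pairs that arrive in timestamp order; the function name and the 60-second window are illustrative choices.

```python
from typing import Iterable, Iterator, Tuple


def tumbling_averages(
    events: Iterable[Tuple[float, float]], window_seconds: int = 60
) -> Iterator[Tuple[int, float]]:
    """Yield (window_start, average) each time a window closes.

    Assumes events arrive in timestamp order.
    """
    current_start = None
    total = 0.0
    count = 0
    for ts, value in events:
        start = int(ts // window_seconds) * window_seconds
        if current_start is not None and start != current_start:
            # An event from a later window closes the current one.
            yield current_start, total / count
            total, count = 0.0, 0
        current_start = start
        total += value
        count += 1
    if count:
        # Flush the final, still-open window when the stream ends.
        yield current_start, total / count


prices = [(0, 100.0), (30, 102.0), (61, 104.0), (119, 106.0), (120, 110.0)]
print(list(tumbling_averages(prices)))
# prints [(0, 101.0), (60, 105.0), (120, 110.0)]
```

Because the function is a generator, each result becomes available as soon as its window closes instead of after the whole stream has been read.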

Benefits and Challenges of Real-time Data Streams

As the current business environment becomes more dynamic, companies must invest in real-time data technologies to quickly address changing customer demands. However, effective implementation of real-time data streaming is challenging.

The list below mentions a few benefits and challenges of real-time data streams to help you understand their value and ways to overcome common issues associated with such systems.

Benefits

Instant Insights: One of the most significant advantages of real-time data streaming is the ability to generate instant insights. Businesses can respond quickly to changes in customer behavior, market trends, or system health.

Improved Customer Experience: AI and ML algorithms can analyze real-time customer data from social media, mobile, and web applications. The analysis can generate personalized recommendations to improve customer experience. For example, a real-time data stream for an e-commerce platform can analyze a customer’s clickstream and recommend related products to help them quickly find relevant items.

Proactive Maintenance: Businesses can streamline upgrades and maintenance procedures by monitoring real-time performance metrics. For instance, a manufacturer can develop a system that collects and processes real-time data on equipment health. The system can generate instant alerts once it detects an anomaly and allow the relevant teams to predict and prevent failures before they occur.

Competitive Agility: Analyzing extensive real-time customer data allows a company to quickly adjust its products and services to ensure a high retention rate. The method makes operational procedures more agile to address changing needs and tastes. For example, customers may report issues with an application’s user interface, and a streaming process can analyze the data instantly to alert technical teams to fix the problem in time.

Challenges

Data Overload: Collecting raw data from diverse sources in real time can quickly overwhelm a system, causing significant downtime and performance degradation. Distributing the workload through techniques such as data sharding and server replication can help increase scalability and prevent costly application failures. Cloud services like Amazon Kinesis with auto-scaling features can also help adjust resource allocation to changing demands.

Integration: Installing and maintaining real-time data streaming infrastructure requires expertise in multiple tools, platforms, and languages, increasing complexity. Companies can use managed streaming services or develop a modular architecture that is easier to maintain and upgrade.

Data Ordering: Real-time data streams consisting of unstructured data, such as user conversations or event logs, require the system to know the sequence of data packets to derive meaning. For example, log files that record user interactions must be ordered so that backend teams can reconstruct the user journey. Companies should timestamp events automatically at the data source and synchronize clocks across sources to keep event order consistent.

Data Integrity: Maintaining data integrity when ingesting information from disparate sources is difficult. Data from one source may be inconsistent with data from another, causing the system to deliver illogical insights. Developers can build pipelines with comprehensive validation rules and flags that help eliminate such inconsistencies.
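To make the data ordering challenge above more concrete, here is a minimal sketch of a reorder buffer. It assumes every event already carries a source timestamp and that events arrive at most `max_delay` seconds late; both the `reorder` function and the `max_delay` parameter are hypothetical names for this example. Events that arrive later than the allowed delay would still be emitted, but out of order.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

Event = Tuple[float, str]  # (event timestamp in seconds, payload)


def reorder(events: Iterable[Event], max_delay: float = 5.0) -> Iterator[Event]:
    """Yield events in timestamp order, tolerating arrivals up to max_delay late."""
    heap: List[Event] = []
    watermark = float("-inf")
    for event in events:
        heapq.heappush(heap, event)
        # Anything older than (latest timestamp seen - max_delay) is safe to emit.
        watermark = max(watermark, event[0] - max_delay)
        while heap and heap[0][0] <= watermark:
            yield heapq.heappop(heap)
    # The stream has ended, so flush whatever is still buffered.
    while heap:
        yield heapq.heappop(heap)


arrivals = [
    (1.0, "login"),
    (4.0, "add_to_cart"),
    (2.0, "search"),      # arrived late
    (9.0, "checkout"),
    (7.0, "view_cart"),   # arrived late
]
ordered = [payload for _, payload in reorder(arrivals)]
print(ordered)
# prints ['login', 'search', 'add_to_cart', 'view_cart', 'checkout']
```

A larger `max_delay` tolerates later arrivals at the cost of holding events longer before they are released, so the value is a trade-off between correctness and latency.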

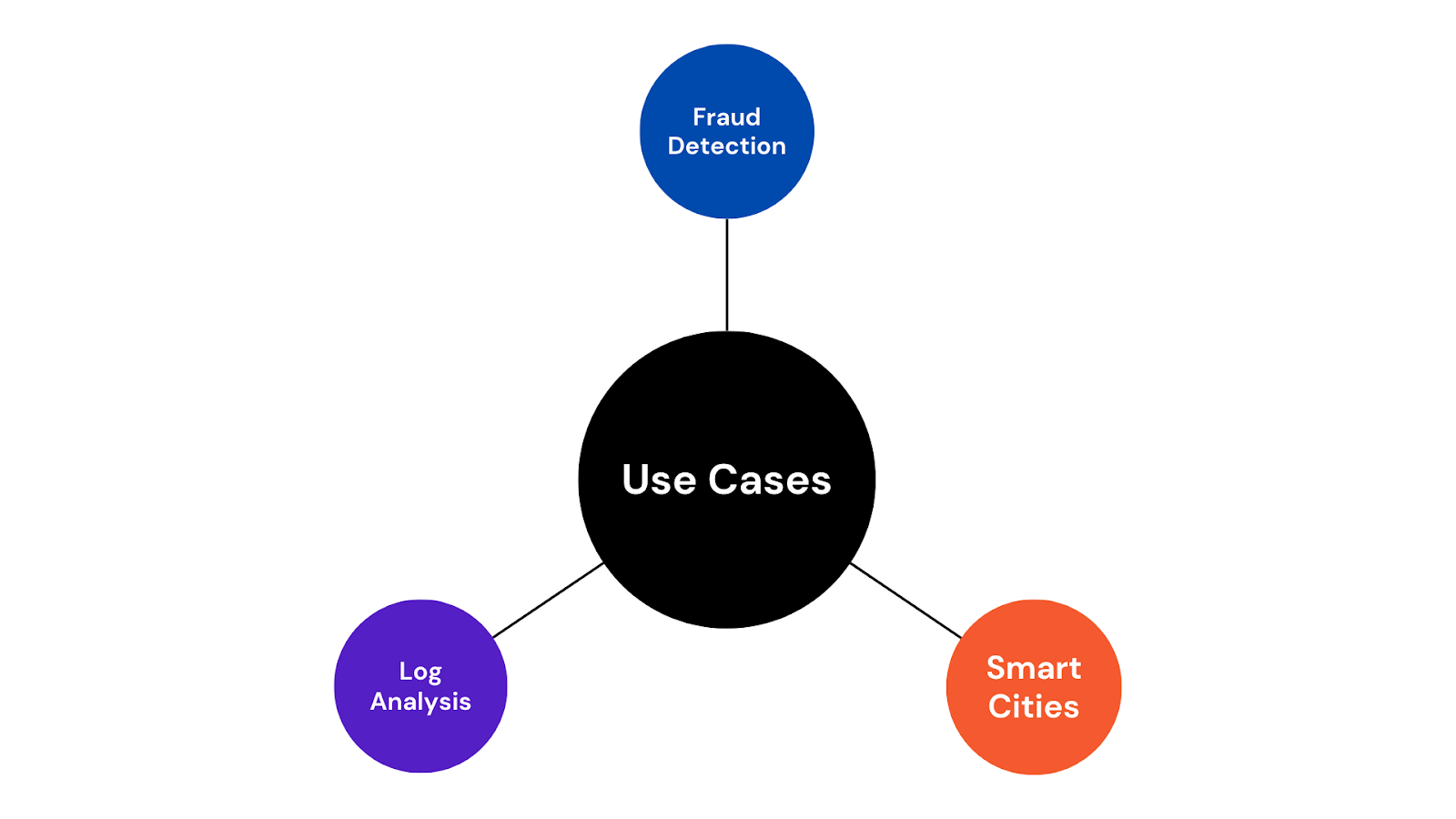

Use Cases of Real-time Data Streams

Real-time data streams are entering multiple domains to solve real-world problems, with businesses increasingly relying on data to drive decision-making. The list below highlights a few widespread use cases where real-time data streaming delivers significant value.

Figure: Real-time Data Streams Use Cases

Fraud Detection: Companies can feed real-time streams of financial transactions into AI algorithms to detect anomalies and inconsistencies quickly. The algorithms can correlate incoming data from different sources and compare it to established industry standards. When the analysis reveals a fraudulent pattern, the system can alert the relevant teams and block the transaction before it completes to prevent losses.

Log Analysis: IT professionals often analyze extensive log files to debug errors or system failures. Reading the files manually is tedious and may not reveal any particular insight. However, real-time data streaming can enhance the process by collecting log data and performing analysis to identify issues instantly. For instance, developers can integrate the streaming solution with a large language model (LLM) that can read and understand textual data in log files.

Smart Cities: Edge devices such as sensors and cameras are popular tools for building IoT systems for smart cities. To improve city management, the devices collect real-time data streams on multiple indicators, such as traffic flows, air quality, and temperature. Analyzing these streams helps local governments identify where to intervene to improve quality of life.
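As a rough illustration of the fraud detection use case above, the sketch below flags a card that makes too many transactions within a short time span. A simple hand-written velocity rule stands in for the AI algorithms mentioned in the text; the `VelocityRule` class, the limit of three transactions, and the 60-second window are all assumptions made for the example.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag a card when it exceeds max_txns transactions within window_seconds."""

    def __init__(self, max_txns: int = 3, window_seconds: float = 60.0) -> None:
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # card_id -> recent timestamps

    def is_suspicious(self, card_id: str, ts: float) -> bool:
        recent = self._recent[card_id]
        # Drop timestamps that have fallen out of the sliding window.
        while recent and ts - recent[0] > self.window_seconds:
            recent.popleft()
        recent.append(ts)
        return len(recent) > self.max_txns


rule = VelocityRule()
transactions = [
    ("card-A", 0), ("card-A", 10), ("card-B", 12),
    ("card-A", 20), ("card-A", 25), ("card-A", 200),
]
flags = [rule.is_suspicious(card, ts) for card, ts in transactions]
print(flags)  # prints [False, False, False, False, True, False]
```

Each transaction is evaluated as it arrives, which is what allows a real system to block a suspicious payment before it completes rather than discovering it in a later batch report.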

FAQs of Real-time Data Streams

- What is real-time data streaming?

Real-time data streaming processes a continuous flow of data from multiple sources as it is generated, giving users insights in real time.

- What are the primary use cases for real-time data streaming?

Some main real-time data streaming applications are fraud detection, log analysis, IoT sensor networks, and e-commerce personalization.

- What are the challenges of implementing real-time data streams?

Some key challenges of real-time data streams include handling large volumes of data, maintaining data integrity, and ensuring consistent data ordering.

- Can real-time streaming handle unstructured data?

Yes, real-time streaming systems can handle unstructured data such as social media feeds, sensor data, and logs, often using frameworks that support flexible schema formats.

- What is the main benefit of real-time analytics?

Real-time analytics lets businesses make decisions and act immediately, so they can address changing demands proactively.

Related Resources

Real-time data streams often contain unstructured data in multiple formats. Ingesting, processing, and analyzing such datasets requires specialized tools to generate insights.

Vector databases are popular frameworks for storing extensive unstructured datasets as embeddings. The resources below will help you understand how vector databases work and how you can use them to implement real-time data streaming.
