Text as Data, From Anywhere to Anywhere

Introduction

In March 2024, AJ Steers spoke at the SF Unstructured Data Meetup about using Airbyte and PyAirbyte to treat text as data, from anywhere to anywhere. In practice, this means integrating both structured and unstructured data from many sources and moving it across different platforms.

Link to the YouTube replay of AJ Steers's talk: Watch on YouTube.

Who is AJ Steers and Why Should You Care About Unified Data Integration?

AJ Steers is an experienced architect, data engineer, software developer, and data ops expert. He has designed end-to-end solutions at Amazon and created a vision for quantified self-data models. At the time of the talk, he was a staff software engineer at Airbyte.

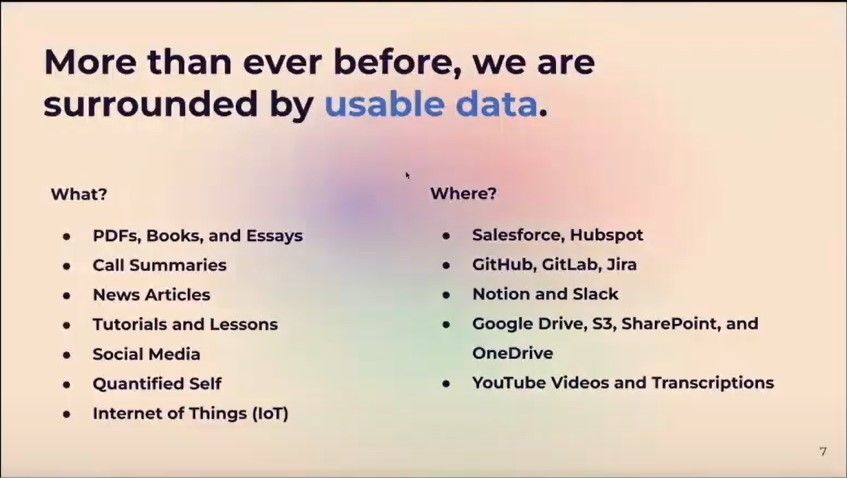

Unified data integration merges diverse data types and sources into a single, cohesive system for efficient analysis and processing. This capability is crucial for harnessing the full potential of your data, because it ensures seamless access and use across platforms and applications. As AJ puts it, “More than ever before, we are surrounded by usable data,” and the challenge now is opportunity cost: how much time does it take to get data we think is valuable out of its source?

With that, let's dive into AJ's talk on “Text as Data, From Anywhere to Anywhere” using Airbyte.

Airbyte’s Expansion of Supported Sources and Destinations

Until recently, Airbyte’s focus has been on reliability, flexible deployment options, and a robust library of connectors for traditional tabular data. But what about unstructured data? AJ walked through what the Airbyte team had been working on over the previous six months.

In the six months leading up to March 2024, Airbyte expanded its capabilities to cover unstructured data sources in addition to traditional tabular ones. This reflects the growing importance and prevalence of unstructured data in today’s data ecosystem. On the destination side, Airbyte has gone beyond SQL-type and file-type destinations to include vector databases such as Milvus. As a result, the same data can feed a much wider range of applications.
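To make the idea of a vector database destination more concrete, here is a toy, in-memory sketch of what such a destination does with text. It turns each piece of text into a vector, stores it, and returns the most similar entries for a query. The sketch makes two assumptions:

- A bag-of-words term count stands in for a real embedding model.
- `ToyVectorStore` is a hypothetical class. It is not the Milvus API and not how Airbyte's vector destinations are implemented.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database destination."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def insert(self, text: str) -> None:
        # Store each text alongside its vector, as a vector destination would.
        self._rows.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        # Rank stored texts by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


store = ToyVectorStore()
store.insert("Airbyte syncs data from GitHub issues")
store.insert("Milvus stores vectors for similarity search")
print(store.search("vector similarity"))
```

A real pipeline would replace `embed` with an embedding model and the Python list with a vector database such as Milvus. The store-then-search flow stays the same.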

Airbyte supports more than 350 connectors, so we cannot list them all here; AJ's slide showed a sample connector for each source and destination category. So far, we have been talking about unstructured data and how Airbyte is expanding to support it. But what are real-world examples of this data?

Think of this blog post you are reading: is it arranged in tabular form? It isn't. It's a prime example of unstructured data, composed of text that doesn't fit neatly into rows and columns. Unstructured data is information that doesn’t reside in a traditional row-column database, such as text, images, videos, and social media posts. AJ's slides included several examples of this kind of data.

With this kind of data to consume, different communities expect different things from Airbyte:

- GenAI developers: Want streamlined integrations with other ecosystem tools and paradigms.

- Data scientists: Want libraries that can run in a Jupyter notebook, decoupled from a central data warehouse.

- Data engineers: Want a pipelines-as-code approach, the ability to run CI test pipelines, and friction-free development.

- Developers: Want to build and iterate locally, then deploy later.

In short, we all want great libraries, good interoperability, and more control, but the right tool depends on what you want. Some of us want something we can code and control, while others prefer a less technical experience through a user interface. Traditionally, these preferences have led down completely different paths. With PyAirbyte, Airbyte is trying to remove that barrier for anyone who can write and run Python code, or who can simply copy and paste a few lines of it. Let's look at what PyAirbyte is and what its advantages are.

Introducing PyAirbyte

PyAirbyte is a Python library that brings Airbyte's connectors into Python. It lets us run Airbyte source connectors directly from Python code, without having to set up and operate an Airbyte server. Think of it as bringing the power of Airbyte to every Python developer.

Advantages of PyAirbyte

- Run anywhere: We can run PyAirbyte in a Jupyter notebook, decoupled from any data warehouse. This means we can use it in many environments, not just those tied to a specific data warehouse, giving us the flexibility to work in the environment that suits our needs best.

- Reduce time to value: With PyAirbyte, we can build and iterate on data pipelines locally before deploying them. This allows us to test and refine our pipelines to ensure they're working as expected, which helps reduce the time it takes to get valuable insights from the data.

- Fast prototyping: PyAirbyte doesn't require a user interface or a sign-up, so we can start defining our data pipelines in code right away. This speeds up the prototyping process.

- Flexible: We can use PyAirbyte with the tools and frameworks in our ecosystem. This means we can integrate it with the other tools we're already using, making it easier to incorporate into our existing workflows and processes.

Now, let’s dive into how you can get started with Airbyte and PyAirbyte in just a few steps.

UI and Code Demos

AJ walked through a demo showing how to use the hosted version of Airbyte (a no-code approach) and PyAirbyte (a minimal-code approach) to connect our data sources to our data destinations.

UI Approach Demo

For those who would rather click buttons than type code, the UI approach is a dream come true; it’s like the ‘easy mode’ in your favorite video game. We will keep this brief, because an earlier article already covers in detail how to use Airbyte’s hosted version to connect a data source and destination. That article goes beyond the integration itself and shows how to build a real-world app on top of the connection.

There are four main steps involved in using the no-code approach to connect a data source with a data destination.

Sign up or log in to your Airbyte account. New sign-ups get a 14-day trial.

Configure the data source.

Configure the data destination.

Create a connection between the source and destination.

When the connection is established, you are ready to use your data. If you are a code geek like me and prefer the code approach, PyAirbyte has you covered.

Code Approach Demo

Fetching Data Using PyAirbyte in Three Steps

The process of fetching data using PyAirbyte is quite simple.

Step 1:

Create a source using the get_source() function.

```python
import airbyte as ab

# Check for supported sources
print(ab.get_available_connectors())

# Create the data source we want
source = ab.get_source("source-github")
```

To browse all the connectors Airbyte supports, see the supported connectors page. But what if there is no connector for your source? Then you need to build your own custom connector. Don’t worry, it's not as hard as it sounds. Airbyte offers a no-code Connector Builder, and according to Airbyte you can build a connector with it in less than 10 minutes.
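Whether you use the Connector Builder or write code, a source connector ultimately emits the data it reads as JSON messages. The sketch below is a rough illustration only, not Connector Builder output. It emits one line per record, shaped like the Airbyte protocol's `RECORD` message: `type`, plus a `record` containing `stream`, `data`, and `emitted_at` in epoch milliseconds. The `read_records` function and the sample rows are hypothetical.

```python
import json
import time
from typing import Iterator


def read_records(stream: str, rows: list[dict]) -> Iterator[str]:
    """Hypothetical toy source: yield one RECORD-style JSON message per row."""
    emitted_at = int(time.time() * 1000)  # epoch milliseconds
    for row in rows:
        message = {
            "type": "RECORD",
            "record": {"stream": stream, "data": row, "emitted_at": emitted_at},
        }
        # Connectors write one JSON message per line to stdout.
        yield json.dumps(message)


for line in read_records("branches", [{"name": "main"}, {"name": "dev"}]):
    print(line)
```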

Step 2:

Configure our source with set_config().

```python
source.set_config(
    {
        # Replace with your desired repository
        "repositories": ["FINCH285/engineering-education"],
        "credentials": {
            "personal_access_token": ab.get_secret(
                "GITHUB_PERSONAL_ACCESS_TOKEN"
            )
        }
    }
)

# Validate the config and credentials
source.check()
```

The config tells the connector which repositories to read from and supplies the credentials it needs to authenticate with GitHub. `source.check()` then verifies that the config and credentials work.
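Using `ab.get_secret()` keeps the access token out of the source code. The sketch below shows the basic idea using plain environment variables. It is not PyAirbyte's own implementation, which may consult other secret stores as well. The helper names `get_secret_from_env` and `github_config` are hypothetical.

```python
import os


def get_secret_from_env(name: str) -> str:
    """Hypothetical helper: read a secret from an environment variable."""
    value = os.environ.get(name)
    if not value:
        raise KeyError(f"Secret {name!r} is not set; export it before running.")
    return value


def github_config(
    repository: str, token_var: str = "GITHUB_PERSONAL_ACCESS_TOKEN"
) -> dict:
    """Build a config dict shaped like the source-github example above."""
    return {
        "repositories": [repository],
        "credentials": {"personal_access_token": get_secret_from_env(token_var)},
    }
```

Usage: export `GITHUB_PERSONAL_ACCESS_TOKEN` in your shell, then call `github_config("FINCH285/engineering-education")`. The token never appears in the code or in version control.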

Step 3:

Read the data using the read() function.

```python
# See what streams are available from the source
print(source.get_available_streams())

# Pull data from the streams we choose
read_result = source.read(
    streams=["branches", "collaborators"]
)

# Use the interop options to convert a stream into other formats
branches_dataframe = read_result["branches"].to_pandas()
branches_sql_table = read_result["branches"].to_sql_table()
branches_llm_docs = read_result["branches"].to_documents()
```

The interop options let us convert the fetched data into whichever data structure best fits our use case: a pandas DataFrame, a SQL table, or LLM documents. This completes the three-step process. However, you don't have to run the three steps separately; PyAirbyte also supports a one-step process that achieves the same result.
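To make the idea of interop concrete, here is a dependency-free sketch that applies two of those conversions to the same records: a column-oriented view (similar in spirit to a DataFrame) and an in-memory SQL table. It uses only the standard library. The sample records and helper names are hypothetical, and this is not how PyAirbyte implements `to_pandas()` or `to_sql_table()`.

```python
import sqlite3

# Hypothetical sample records, shaped like rows from a "branches" stream.
records = [
    {"name": "main", "protected": True},
    {"name": "dev", "protected": False},
]


def to_columns(rows: list[dict]) -> dict[str, list]:
    """Column-oriented view of the rows (assumes a non-empty list)."""
    return {key: [row[key] for row in rows] for key in rows[0]}


def to_sql_table(rows: list[dict], table: str) -> sqlite3.Connection:
    """Load rows into an in-memory SQLite table so they can be queried with SQL."""
    conn = sqlite3.connect(":memory:")
    cols = list(rows[0])
    # Table and column names are trusted, hard-coded identifiers here.
    conn.execute(f"CREATE TABLE {table} ({', '.join(cols)})")
    placeholders = ", ".join("?" for _ in cols)
    conn.executemany(
        f"INSERT INTO {table} VALUES ({placeholders})",
        [tuple(row[c] for c in cols) for row in rows],
    )
    return conn


print(to_columns(records))
conn = to_sql_table(records, "branches")
print(conn.execute("SELECT name FROM branches WHERE protected = 1").fetchall())
```

The same records can serve an analyst working with columns and an application querying SQL, without re-reading the source.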

Fetching Data Using PyAirbyte in a Single Step

This is the speedrun version: it combines all three steps into a single step.

```python
import airbyte as ab

# Create, configure, and read the source in a single expression
read_result = ab.get_source(
    "source-github",
    config={
        # Replace with your desired repository
        "repositories": ["FINCH285/engineering-education"],
        "credentials": {
            "personal_access_token": ab.get_secret(
                "GITHUB_PERSONAL_ACCESS_TOKEN"
            ),
        },
    },
    streams=["branches", "collaborators"],
).read()
```

The code does all three operations in a single step: it creates the source, configures it, and reads the data. You can then apply the same interop conversions to the result as we did earlier. If you want to generate LLM documents from tabular data, the next section shows you how.

Generating LLM Documents From Tabular Data

If you want your data to be compatible with LangChain, you need to convert it into LLM documents. You can then use these documents for natural language processing tasks such as question answering, summarization, or text generation.

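```python
from itertools import islice

import rich
from rich.markdown import Markdown

# Turn GitHub issues into documents: the issue title becomes the
# document title and the issue body becomes the document content
dataset = source.get_documents(
    "issues",
    title_property="title",
    content_properties=["body"],
)

# Grab a few documents (skipping the first 2)
documents = list(islice(dataset, 2, 5))

# Print each document to the console as Markdown
for document in documents:
    rich.print(Markdown(document.content))
```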

The code fetches the GitHub issues and converts each one into a document. The issue title becomes the document title, and the issue body becomes the document content. It then uses rich to print three of the documents to the console in Markdown format.
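Conceptually, `title_property` and `content_properties` decide which fields of each record become the document's heading and body. The standard-library sketch below illustrates that mapping. `Doc` and `record_to_doc` are hypothetical, and keeping the remaining fields as metadata is a design choice made for this sketch only. PyAirbyte's actual rendering, for example how it formats titles or metadata, may differ.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    """Minimal stand-in for an LLM document: text content plus metadata."""
    content: str
    metadata: dict


def record_to_doc(record: dict, title_property: str, content_properties: list[str]) -> Doc:
    """Hypothetical helper: render one record as a Markdown-style document."""
    title = record.get(title_property, "")
    # Join the chosen content fields, skipping empty ones.
    body = "\n\n".join(str(record[p]) for p in content_properties if record.get(p))
    # Everything not used for the title or body is kept as metadata.
    metadata = {
        k: v for k, v in record.items()
        if k != title_property and k not in content_properties
    }
    return Doc(content=f"# {title}\n\n{body}", metadata=metadata)


issue = {"number": 42, "title": "Fix login bug", "body": "Steps to reproduce..."}
doc = record_to_doc(issue, "title", ["body"])
print(doc.content)
print(doc.metadata)
```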

With two choices available, let's see which approach best suits you.

Choosing Between Airbyte and PyAirbyte

If you want a zero-code path to vector stores, go for the hosted version. If you want full control with minimal code, go for PyAirbyte. AJ asserts that, in theory, PyAirbyte can run anywhere Python runs with a virtual environment. The table below summarizes the trade-offs to help you decide which approach suits you.

| Criteria | Airbyte (UI/Hosted) | PyAirbyte |
|---|---|---|
| Approach | No-code | Code (Python) |
| Setup | Simple sign-up process | No sign-up; install the Python library |
| User Interface | Provides a user-friendly interface | No user interface; code-based |
| Data Integration | Suitable for traditional and modern data integration tasks (unstructured data, vector database destinations, etc.) | Suitable for traditional and unstructured data sources; vector stores are not yet supported as destinations (documents can be passed to LangChain instead) |
| Flexibility | Limited to the options provided in the UI | Highly flexible; integrates with other Python tools and frameworks |
| Environment | Hosted environment | Any Python environment (e.g., Jupyter Notebook, local machine) |
| Deployment | Deploys data pipelines to Airbyte's hosted environment | Code-based; can be integrated with CI/CD pipelines |
| Prototyping | Suitable for non-technical users and quick prototyping | Ideal for technical users and fast prototyping |
| Learning Curve | Lower learning curve | Requires basic Python coding skills |

After the comparison, you can continue to choose your own adventure.

PyAirbyte’s Upcoming Features

At the time of the talk, PyAirbyte was still in beta. It did not yet support destinations, so it could not write to vector stores directly, but we can pass its documents along to LangChain instead. AJ shared what to expect from the PyAirbyte team this year:

Q2 2024 Plans:

- Improve support for Python 3.9 and 3.11

- Add Windows support

- Improve API for configuring sources

- Enable interoperability with Airbyte Cloud, OSS, and Enterprise versions

H2 2024 Plans:

- Add support for Reverse ETL

- Add support for Vector Store destinations

- Add support for Publish-type destinations

If you want to offer feedback and report bugs, consider opening an issue on PyAirbyte’s GitHub page.

Conclusion

Whether you prefer a no-code or minimal-code approach, Airbyte and PyAirbyte offer robust solutions for integrating both structured and unstructured data. AJ Steers painted a clear picture of these tools' potential to revolutionize data workflows. For more information, check out the resources below and start your journey toward leveraging text as data, from anywhere to anywhere.

Further Resources

https://zilliz.com/blog/use-milvus-and-airbyte-for-similarity-search-on-all-your-data

https://zilliz.com/blog/zilliz-introduces-upsert-kafka-connector-and-airbyte-integration

https://github.com/airbytehq/quickstarts/tree/main/pyairbyte_notebooks

Link to the YouTube replay of AJ Steers's talk: Watch the talk on YouTube.
