Simplifying Legal Research with RAG, Milvus, and Ollama

In this blog post, we’ll see how to apply RAG to legal data.

Legal research can be time-consuming. You usually need to review a large number of documents to find the answers you need. Retrieval-Augmented Generation (RAG) can help streamline your research process.

What is RAG?

Retrieval Augmented Generation (RAG) is a technique that enhances LLMs by integrating additional data sources. A typical RAG application involves:

Indexing - a pipeline for ingesting data from a source and indexing it, which usually consists of loading, splitting, embedding, and storing the data in Milvus.

Retrieval and generation - At runtime, RAG processes the user's query, fetches relevant data from the index stored in Milvus, and the LLM generates a response based on this enriched context.
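To make the two stages concrete, here is a minimal in-memory sketch that runs without Milvus, Voyage AI, or an LLM. It assumes a toy bag-of-words "embedding" (the hypothetical `toy_embed` helper, far weaker than a real model such as voyage-law-2) and ranks passages by inner product, the metric the Milvus collection in this tutorial also uses. The example passages are adapted from the judgment's cover page.

```python
import math
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for an embedding model: an L2-normalized bag of words."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def inner_product(a: dict[str, float], b: dict[str, float]) -> float:
    # Only words present in both vectors contribute to the dot product.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


# Indexing: embed every passage once and keep its text next to the vector.
passages = [
    "Michael Tennet KC and Edward Sawyer for the Appellant",
    "Hearing dates: 25, 26 and 27 June 2024",
    "The judgment was handed down remotely by circulation to the parties",
]
index = [(toy_embed(p), p) for p in passages]


def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Retrieval: embed the query and return the k passages with the highest inner product."""
    q = toy_embed(query)
    scored = [(text, inner_product(q, vec)) for vec, text in index]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Generation would pass the retrieved text to an LLM as context.
print(retrieve("who was counsel for the appellant", k=1))
```

In the real pipeline, Milvus replaces the `index` list and the sorting step, and a learned embedding model replaces `toy_embed`.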

In this hands-on guide, we’ll set up a RAG system using Ollama, focusing on legal data and using Milvus as our vector database.

The tools:

Ollama: Runs LLMs locally on your laptop, so you don't need a hosted model API.

Milvus: The vector database we use to store and retrieve your data efficiently.

Llama 3: The latest generation (at the time of writing) of Meta’s Llama family of large language models.

Voyage AI: Provides embedding models specialized for specific domains. We’re using their legal embedding model, optimized for legal and long-context retrieval.

Preparation

Dependencies and Data

First, install the necessary dependencies:

```
! pip install --upgrade pymilvus ollama tqdm pypdf voyageai openai wget python-dotenv
```

Import API Keys

If you have your API key set in a .env file, you can load it using dotenv to avoid leaking the key when you push your notebook.

```python
import os
from dotenv import load_dotenv

load_dotenv()

VOYAGE_API_KEY = os.getenv('VOYAGE_API_KEY')
```

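If you don’t have a .env file, skip the dotenv import and export VOYAGE_API_KEY as an environment variable instead; voyageai.Client() reads it from the environment by default.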
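If you want the same notebook to work with or without a .env file (and with or without python-dotenv installed), a small helper can try dotenv and then fail with a clear message if the key is still missing. This is a sketch; `load_api_key` is a hypothetical helper, not part of python-dotenv or the Voyage AI SDK.

```python
import os


def load_api_key(name: str = "VOYAGE_API_KEY") -> str:
    """Return an API key from the environment, loading a .env file first if python-dotenv is installed."""
    try:
        from dotenv import load_dotenv  # optional dependency

        load_dotenv()  # by default, does not override variables that are already set
    except ImportError:
        pass  # no python-dotenv: rely on variables exported in the shell
    key = os.getenv(name)
    if not key:
        raise RuntimeError(f"{name} is not set; add it to .env or export it in your shell")
    return key
```

You could then create the client explicitly with `voyageai.Client(api_key=load_api_key())`.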

Prepare the data

For this tutorial, we’ll use data from the Royal Courts of Justice in London. Download it using wget and save it locally:

```
! wget https://www.judiciary.uk/wp-content/uploads/2024/07/Final-Judgment-CA-2023-001978-BBC-v-BBC-Pension-Trust-another.pdf
```

Next, read the PDF file and extract its content for use in our RAG application:

```python
from pypdf import PdfReader

reader = PdfReader("Final-Judgment-CA-2023-001978-BBC-v-BBC-Pension-Trust-another.pdf")

pages = [page.extract_text() for page in reader.pages]

print(pages[0])
```

This should give you the following output:

```
Neutral Citation Number [2024] EWCA Civ 767
Case No: CA-2023 -001978
IN THE COURT OF APPEAL ( CIVIL DIVISION)
ON APPEAL FROM THE HIGH COURT OF JUSTICE
BUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES
BUSINESS LIST : PENSIONS (ChD)
The Hon Mr Justice Adam Johnson
[2023] EWHC 1965 (Ch)
Royal Courts of Justice
Strand, London, WC2A 2LL
Date: 09/07 /2024
Before :
LORD JUSTICE LEWISON
LADY JUSTICE FALK
and
SIR CHRISTOPHER FLOYD
```
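This tutorial embeds each page as a single unit, which keeps things simple. If your documents have long pages, you might split the text into overlapping chunks before embedding (the "splitting" step of indexing). A minimal character-based sketch; the `chunk_text` helper and its default sizes are illustrative assumptions, not tuned values.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into chunks of at most chunk_size characters; consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


# Example: split every page, remembering which page each chunk came from.
# chunks = [(page_no, c) for page_no, page in enumerate(pages) for c in chunk_text(page)]
```

Keeping the page number alongside each chunk lets you store it as an extra field and cite the page in answers.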

Embed your Documents

We’ll use voyage-law-2, an embedding model specialized for the legal domain.

First, define a function to generate text embeddings using the Voyage AI API:

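```python
import voyageai

voyage_client = voyageai.Client()  # reads VOYAGE_API_KEY from the environment

def embed_text(text: str) -> list[float]:
    """Embed a single string with the legal-domain voyage-law-2 model."""
    return voyage_client.embed([text], model="voyage-law-2").embeddings[0]
```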
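`embed_text` sends one request per string, so embedding every page one by one means one API call per page. Because `voyage_client.embed` takes a list of texts, you could embed pages in batches instead. The sketch keeps the embedding call injectable so it runs without an API key; `batched`, `embed_in_batches`, and the batch size of 8 are hypothetical choices, so check Voyage AI's documentation for the real per-request limits.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def embed_in_batches(
    texts: Sequence[str],
    embed_batch: Callable[[list[str]], list[list[float]]],
    batch_size: int = 8,
) -> list[list[float]]:
    """Embed texts with one call per batch instead of one call per text, preserving order."""
    vectors: list[list[float]] = []
    for batch in batched(texts, batch_size):
        batch_vectors = embed_batch(list(batch))
        if len(batch_vectors) != len(batch):
            raise ValueError("embedding call returned the wrong number of vectors")
        vectors.extend(batch_vectors)
    return vectors


# Hypothetical wiring to the Voyage client defined above:
# page_vectors = embed_in_batches(
#     pages, lambda batch: voyage_client.embed(batch, model="voyage-law-2").embeddings
# )
```

Voyage's `embed` method also accepts an `input_type` argument ("query" or "document") that this tutorial leaves unset; see the Voyage AI documentation before relying on it.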

Generate a test embedding and print its dimension and first few elements.

```python
result = voyage_client.embed(["hello world"], model="voyage-law-2")

embedding_dim = len(result.embeddings[0])

print(embedding_dim)
print(result.embeddings[0][:10])
```

```
1024
[0.000756315013859421, -0.02162403240799904, 0.0052010356448590755, -0.02917512319982052, -0.00796651840209961, -0.03238343447446823, 0.0660339742898941, 0.03845587745308876, -0.01913367211818695, 0.05562642216682434]
```

Load data into Milvus

Create the collection in Milvus

```python
from pymilvus import MilvusClient

milvus_client = MilvusClient(uri="./milvus_legal.db")

collection_name = "my_rag_collection"
```

Setting the URI to a local file, e.g., ./milvus_legal.db, is convenient because Milvus Lite then stores all data in that file automatically. For large-scale data, you can set up a more performant Milvus server on Docker or Kubernetes and use the server address as the URI instead, e.g., http://localhost:19530.

Drop the collection if it exists already, then create a new one:

```python
if milvus_client.has_collection(collection_name):
    milvus_client.drop_collection(collection_name)

milvus_client.create_collection(
    collection_name=collection_name,
    dimension=embedding_dim,
    metric_type="IP",  # Inner product distance
    consistency_level="Strong",  # Strong consistency level
)
```

If we don't specify any field information, Milvus automatically creates a default id field as the primary key and a vector field to store the vector data. A reserved JSON field stores any fields not defined in the schema, together with their values.
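The collection ranks results by inner product (`IP`). For vectors of unit length, inner product and cosine similarity are identical, so `IP` then behaves exactly like cosine similarity. Voyage AI states that its embeddings are normalized to length 1; the sketch below lets you check that on vectors you get back. The helper names (`dot`, `cosine`, `is_unit_norm`, `normalize`) are illustrative, not part of pymilvus or voyageai.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def cosine(a: list[float], b: list[float]) -> float:
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def is_unit_norm(v: list[float], tol: float = 1e-3) -> bool:
    """True if the vector's L2 norm is 1 within `tol`."""
    return abs(math.sqrt(dot(v, v)) - 1.0) <= tol


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


a = normalize([3.0, 4.0])  # roughly [0.6, 0.8]
b = normalize([1.0, 0.0])  # [1.0, 0.0]
print(dot(a, b), cosine(a, b))  # both approximately 0.6, because a and b are unit vectors
```

For example, `is_unit_norm(result.embeddings[0])` on the test embedding from earlier should return `True` if the normalization holds.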

Insert Data

Iterate through the pages of our document, create the embeddings, and then insert the data into Milvus.

We also store a text field, which is not defined in the collection schema. Milvus automatically adds it to the reserved JSON dynamic field, and at a high level you can treat it like a normal field (for example, by requesting it in output_fields when searching).

```python
from tqdm import tqdm

data = []

for i, page in enumerate(tqdm(pages, desc="Creating embeddings")):
    data.append({"id": i, "vector": embed_text(page), "text": page})

milvus_client.insert(collection_name=collection_name, data=data)
```

```
Creating embeddings: 100%|███████████████████████████████████████████████████████████████████████████████████████| 20/20 [00:08<00:00, 2.45it/s]
{'insert_count': 20,
 'ids': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19],
 'cost': 0}
```
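Each row passed to `insert` is a plain dict: `id` and `vector` fill the auto-created schema fields, and extra keys such as `text` go into the dynamic field. Since Milvus rejects vectors whose dimension differs from the collection's, checking this up front gives a clearer error. A minimal sketch; `build_rows` is a hypothetical helper that takes any embedding function, so it can run without an API key.

```python
from typing import Callable, Sequence


def build_rows(
    texts: Sequence[str],
    embed: Callable[[str], list[float]],
    dim: int,
    start_id: int = 0,
) -> list[dict]:
    """Build Milvus insert rows: id and vector for the schema, text for the dynamic field."""
    rows = []
    for offset, text in enumerate(texts):
        vector = embed(text)
        if len(vector) != dim:
            raise ValueError(f"row {start_id + offset}: expected dim {dim}, got {len(vector)}")
        rows.append({"id": start_id + offset, "vector": vector, "text": text})
    return rows


# Usage with the objects defined in this tutorial:
# data = build_rows(pages, embed_text, embedding_dim)
# milvus_client.insert(collection_name=collection_name, data=data)
```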

Build the basic RAG system

Next, let’s define a query about the content of the court hearing:

```python
question = "who are the lawyers?"
```

We’ll use Milvus to search our indexed data. Later, we’ll integrate this with an LLM.

```python
search_res = milvus_client.search(
    collection_name=collection_name,
    data=[embed_text(question)],  # Use the `embed_text` function to convert the question to an embedding vector
    limit=3,  # Return top 3 results
    search_params={"metric_type": "IP", "params": {}},  # Inner product distance
    output_fields=["text"],  # Return the text field
)
```

Print the retrieved lines with their distances:

```python
import json

retrieved_lines_with_distances = [
    (res["entity"]["text"], res["distance"]) for res in search_res[0]
]

print(json.dumps(retrieved_lines_with_distances, indent=4))
```

The text stored with each embedding is quite long, but here is an example of one entry Milvus returns, the cover page together with its inner-product score:

```
[
    " \n \nNeutral Citation Number [2024] EWCA Civ 767 \n \nCase No: CA-2023 -001978 \nIN THE COURT OF APPEAL ( CIVIL DIVISION) \nON APPEAL FROM THE HIGH COURT OF JUSTICE \nBUSINESS AND PROPERTY COURTS OF ENGLAND AND WALES \nBUSINESS LIST : PENSIONS (ChD) \nThe Hon Mr Justice Adam Johnson \n[2023] EWHC 1965 (Ch) \nRoyal Courts of Justice \nStrand, London, WC2A 2LL \n \nDate: 09/07 /2024 \nBefore : \n \nLORD JUSTICE LEWISON \nLADY JUSTICE FALK \nand \nSIR CHRISTOPHER FLOYD \n \n- - - - - - - - - - - - - - - - - - - - - \n \nBetween : \n \n BRITISH BROADCASTING CORPORATION Appellant \n - and - \n (1) BBC P ENSION TRUST LIMITED \n(2) CHRISTINA BURNS \nRespondent s \n \n- - - - - - - - - - - - - - - - - - - - - \n \n Michael Tennet KC and Edward Sawyer (instructed by Linklaters LLP ) \nfor the Appellant \n Brian Green KC and Joseph Steadman (instructed by Slaughter and May Solicitors ) \nfor the First Respondent \nAndrew Spink KC and Saul Margo (instructed by Stephenson Harwood LLP ) \nfor the Second Respondent \n \nHearing dates: 25, 26 & 27/06/2024 \n- - - - - - - - - - - - - - - - - - - - - \n \nApproved Judgment \n \nThis judgment was handed down remotely at 11.00am on 09/07/2024 by circulation to the \nparties or their representatives by e -mail and by release to the Nat ional Archives. \n \n............................. \n",
    0.1101425364613533
],
```
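`milvus_client.search` returns one list of hits per query vector, and each hit is dict-like with `id`, `distance`, and the requested `entity` fields. With `IP`, a larger distance means a closer match, and Milvus already returns hits in ranked order; re-sorting mainly matters if you merge results from several searches. The sketch works on plain dicts shaped like that result, so it runs without Milvus; `top_passages` and the `min_score` cutoff are hypothetical additions, and any threshold would need tuning on your own data.

```python
from typing import Optional


def top_passages(hits: list[dict], min_score: Optional[float] = None) -> list[tuple[str, float]]:
    """Return (text, score) pairs sorted by descending inner product, optionally dropping weak matches."""
    pairs = [(hit["entity"]["text"], hit["distance"]) for hit in hits]
    if min_score is not None:
        pairs = [pair for pair in pairs if pair[1] >= min_score]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Simulated hits for one query, shaped like search_res[0] (values are made up)
fake_hits = [
    {"id": 7, "distance": 0.05, "entity": {"text": "a later page ..."}},
    {"id": 0, "distance": 0.11, "entity": {"text": "the cover page ..."}},
]
print(top_passages(fake_hits))                 # [('the cover page ...', 0.11), ('a later page ...', 0.05)]
print(top_passages(fake_hits, min_score=0.1))  # [('the cover page ...', 0.11)]
```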

Use an LLM to get a RAG Response

Combine the retrieved documents into a context and define system and user prompts for the language model:

```python
context = "\n".join(
    [line_with_distance[0] for line_with_distance in retrieved_lines_with_distances]
)

SYSTEM_PROMPT = """
Human: You are an AI assistant. You are able to find answers to the questions from the contextual passage snippets provided.
"""

USER_PROMPT = f"""
Use the following pieces of information enclosed in <context> tags to provide an answer to the question enclosed in <question> tags.
<context>
{context}
</context>
<question>
{question}
</question>
"""
```
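If you plan to ask several questions, it can help to wrap prompt construction in functions. This sketch rebuilds a tag-based template like the one used here in reusable form and assembles the chat messages in the OpenAI format; `build_user_prompt` and `build_messages` are hypothetical helpers, and the exact template wording is up to you.

```python
def build_user_prompt(passages: list[str], question: str) -> str:
    """Fill a tag-based RAG template with retrieved passages and the user's question."""
    context = "\n".join(passages)  # separate passages with real newlines
    return (
        "Use the following pieces of information enclosed in <context> tags to provide "
        "an answer to the question enclosed in <question> tags.\n"
        f"<context>\n{context}\n</context>\n"
        f"<question>\n{question}\n</question>\n"
    )


def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Chat messages in the OpenAI format accepted by Ollama's OpenAI-compatible endpoint."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


prompt = build_user_prompt(["first passage", "second passage"], "who are the lawyers?")
messages = build_messages("You are an AI assistant.", prompt)
print(prompt)
```

With the OpenAI client used in this tutorial, you would pass `messages=build_messages(SYSTEM_PROMPT, build_user_prompt(passages, question))` to `client.chat.completions.create`.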

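Ollama provides an OpenAI-compatible API, so we can use the OpenAI Python SDK to call it. Before running the next cell, make sure the Ollama server is running (it listens on http://localhost:11434 by default) and that you have pulled the model, for example with ollama pull llama3: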

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:11434/v1",
    api_key="ollama",  # required, but unused
)

response = client.chat.completions.create(
    model="llama3",
    messages=[
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_PROMPT},
    ],
)

print(response.choices[0].message.content)
```

According to the text, the lawyers involved in this case are:

* Andrew Spink KC (instructed by Stephenson Harwood LLP) for the First Respondent

* Saul Margo (also instructed by Stephenson Harwood LLP) for the First Respondent

* Mr. Tennet (representing the Second Respondent)

* Mr. Spink KC (also representing the Second Respondent)

* Arden LJ (mentioned as having given a previous judgment in Stena Line)

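Note that "KC" stands for King's Counsel, which is a title of distinction conferred upon certain senior barristers in England and Wales.

Not every detail in this answer is right. The cover page retrieved earlier lists Michael Tennet KC and Edward Sawyer (instructed by Linklaters LLP) for the Appellant, Brian Green KC and Joseph Steadman (instructed by Slaughter and May Solicitors) for the First Respondent, and Andrew Spink KC and Saul Margo (instructed by Stephenson Harwood LLP) for the Second Respondent. The model assigns several of these lawyers to the wrong party, leaves some counsel out, and includes Arden LJ, a judge whose earlier judgment is cited rather than a lawyer in this case. RAG grounds the model in your documents, but its answers still need to be checked against the retrieved sources.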
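Because the cover page follows a regular pattern ("... (instructed by ...) for the ..."), a few lines of Python can extract counsel directly from the retrieved text and give you something to compare the model's answer against. This is a hypothetical, regex-based spot check tuned to the layout of this judgment's cover page; other judgments may be formatted differently.

```python
import re

# Hypothetical pattern tuned to cover-page lines such as
# "Michael Tennet KC and Edward Sawyer (instructed by Linklaters LLP ) \nfor the Appellant"
COUNSEL_RE = re.compile(
    r"^\s*([^\n()]+?)\s*\(instructed by\s*([^)]+?)\s*\)\s*for the\s+([A-Za-z ]+?)\s*$",
    re.MULTILINE,
)


def extract_counsel(cover_page: str) -> dict[str, list[str]]:
    """Map each party (e.g. "Appellant") to the counsel named for it on the cover page."""
    counsel: dict[str, list[str]] = {}
    for names, _firm, party in COUNSEL_RE.findall(cover_page):
        counsel[party.strip()] = [name.strip() for name in names.split(" and ")]
    return counsel


# Excerpt of the cover-page text retrieved by Milvus in this tutorial
cover_excerpt = (
    " Michael Tennet KC and Edward Sawyer (instructed by Linklaters LLP ) \n"
    "for the Appellant \n"
    " Brian Green KC and Joseph Steadman (instructed by Slaughter and May Solicitors ) \n"
    "for the First Respondent \n"
    "Andrew Spink KC and Saul Margo (instructed by Stephenson Harwood LLP ) \n"
    "for the Second Respondent \n"
)
for party, names in extract_counsel(cover_excerpt).items():
    print(f"{party}: {', '.join(names)}")
```

On the full search results you could call `extract_counsel(retrieved_lines_with_distances[0][0])`, assuming the cover page is the top hit.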

Conclusion

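Setting up RAG with Milvus and Ollama for legal data can make legal research faster: instead of reading every page, you ask a question, Milvus retrieves the most relevant passages, and a locally running LLM answers from them.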

Feel free to check out Milvus and the code on GitHub, and share your experiences with the community by joining our Discord.


Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Milvus 2.6.x Now Generally Available on Zilliz Cloud, Making Vector Search Faster, Smarter, and More Cost-Efficient for Production AI

Milvus 2.6.x is now GA on Zilliz Cloud, delivering faster vector search, smarter hybrid queries, and lower costs for production RAG and AI applications.

How to Build RAG with Milvus, QwQ-32B and Ollama

Hands-on tutorial on how to create a streamlined, powerful RAG pipeline that balances efficiency, accuracy, and scalability using the QwQ-32B model and Milvus.

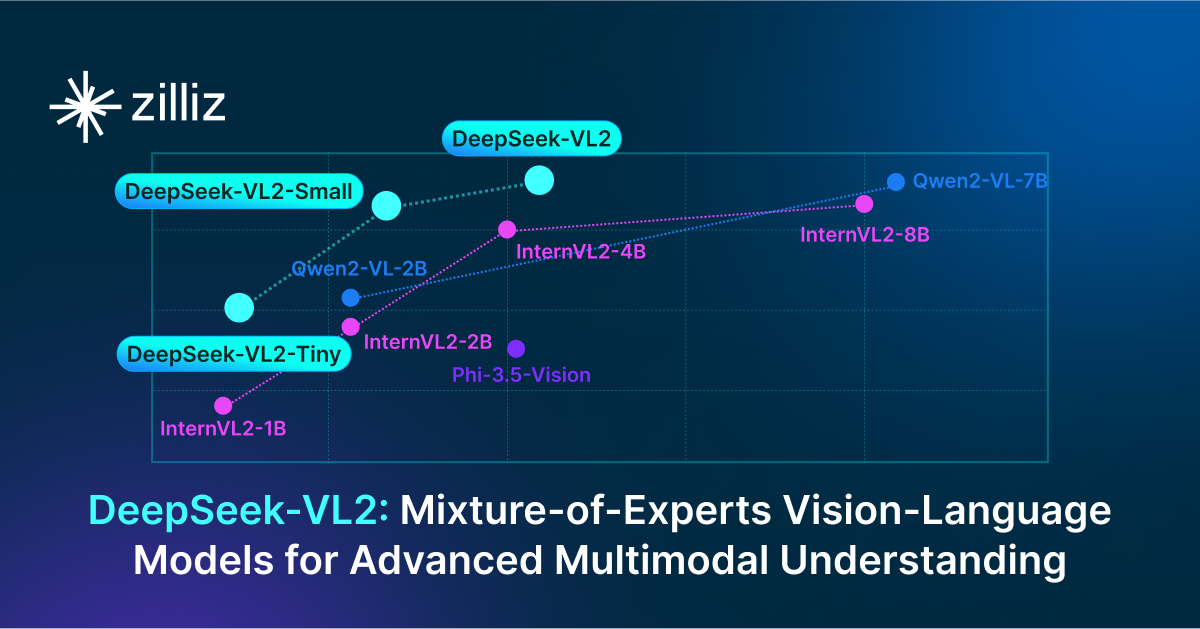

DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding

Explore DeepSeek-VL2, the open-source MoE vision-language model. Discover its architecture, efficient training pipeline, and top-tier performance.