How Testcontainers Streamlines the Development of AI-Powered Applications

In today’s fast-paced and increasingly competitive business landscape, organizations must find ways to complete their digital transformation projects faster. Minimize the delays in deploying new applications or features, and you can reap their productivity and efficiency benefits sooner, gaining an edge over your competitors. Prolonging the development of new software or the release of updates, however, can erode your market share as other companies in your industry innovate faster.

Containers have emerged as the go-to technology for companies looking to streamline their application development practices, allowing developers to build applications that are more secure, robust, and scalable in less time than ever before.

In this article, inspired by a talk by Oleg Šelajev at a recent Unstructured Data Meetup hosted by Zilliz, we explore the concept of containerization and one of its essential tools, Docker, and how they reduce the complexity of the application development process. We’ll then turn our attention to Testcontainers, an open-source framework that considerably expands the capabilities of Docker, enabling developers to streamline the development of AI-powered applications.

What Are Containers?

Containers are standardized packages of application code that contain all of the components required to run in any environment, including binaries, dependencies, libraries, and configuration files. Traditionally, applications were monolithic, with all of their components in a single codebase. Today, developers often divide software into components according to their function or service, each with its own codebase and dependencies. This approach is known as microservices architecture, and containers are a natural way to package and run each service.

Benefits of containers include:

Increased Business Agility: dividing applications into services enables developers to work on different parts of a project concurrently. Similarly, containers’ modularity allows for updates and new features without modifying the entire application.

Enhanced Scalability: because an application is packaged within a container, you need only deploy an additional container to create another instance. In modular applications, meanwhile, teams can simply spin up new containers for whichever service is nearing capacity.

Increased Efficiency: because containers are highly portable and platform-independent, developers don’t have to devote time to setting up and maintaining different environments.

Lower Costs: relieving developers of the responsibility of managing servers and environments frees them to focus on what matters most, actually developing applications! This reduces the man-hours required to complete projects, lowering their cost.

Use cases for containers include:

Developing Cloud-Native Applications: containerized architectures fully harness the capabilities of the cloud, including enhanced scalability, greater data access, and stronger cybersecurity.

Modernizing Applications: containers can be used to refactor legacy applications to increase their compatibility with a cloud environment, making them more performant, resource-efficient, and easier to update.

Prototyping: because they’re platform-independent, containers remove the need to set up different environments for development, testing, staging, and so on, making it easier for developers to experiment with new features.

Internet of Things (IoT) Devices: being lightweight and resource-efficient, containers provide an ideal software deployment mechanism for constrained IoT hardware.

DevOps: containers are used to create consistent, isolated environments, ensuring an application runs the same regardless of where it's deployed. This results in faster deployments and better collaboration between development and operations teams.

What Is Docker?

Docker is a platform that allows developers to create, deploy, configure, and manage containers. Docker is so synonymous with containers, in fact, that they’re frequently referred to as “Docker containers.” In practice, when people mention Docker, they’re often referring to the Docker Engine: the runtime on which containers are built and run. The engine sits beneath the containers, virtualizing the host device’s operating system (OS) so that containers share its kernel, and allocating them resources such as CPU and memory.

This is in notable contrast to virtual machines (VMs), a similar solution in which each VM requires its own guest OS and dedicated computational resources. In addition to making containers environment-agnostic, sharing the host OS makes them less resource-intensive, faster to start up and shut down, and more portable than VMs.

Widely-Used Docker Tools

As powerful as Docker is, its popularity is due in large part to its rich ecosystem of associated tools and libraries. Here are some of the most notable ones.

Docker Enterprise: a version of Docker designed for critical deployments, offering advanced security, management, and orchestration features for certified, production-grade releases.

Docker Swarm: a clustering and orchestration tool for Docker that lets users manage multiple Docker nodes as a single virtual system, allowing for simpler scaling and load balancing.

Docker Compose: a tool for streamlining the development and management of complex, multi-container Docker applications.

Kubernetes: An open-source container orchestration platform that automates the deployment, scaling, and management of large containerized applications across server clusters.

You can learn more about the Docker ecosystem in this article: https://middleware.io/blog/understanding-the-docker-ecosystem/

Testcontainers: Simplified Dependency Management

Testcontainers is an open-source framework that provides lightweight, throwaway instances of databases, browsers, message brokers, and other pre-configured dependencies that can run in a Docker container. By giving developers a library of programmatic APIs for managing these containers, Testcontainers abstracts away the complicated details of each dependency, making it easier to work with. This reduces the operational cost of projects while encouraging experimentation and streamlining development.

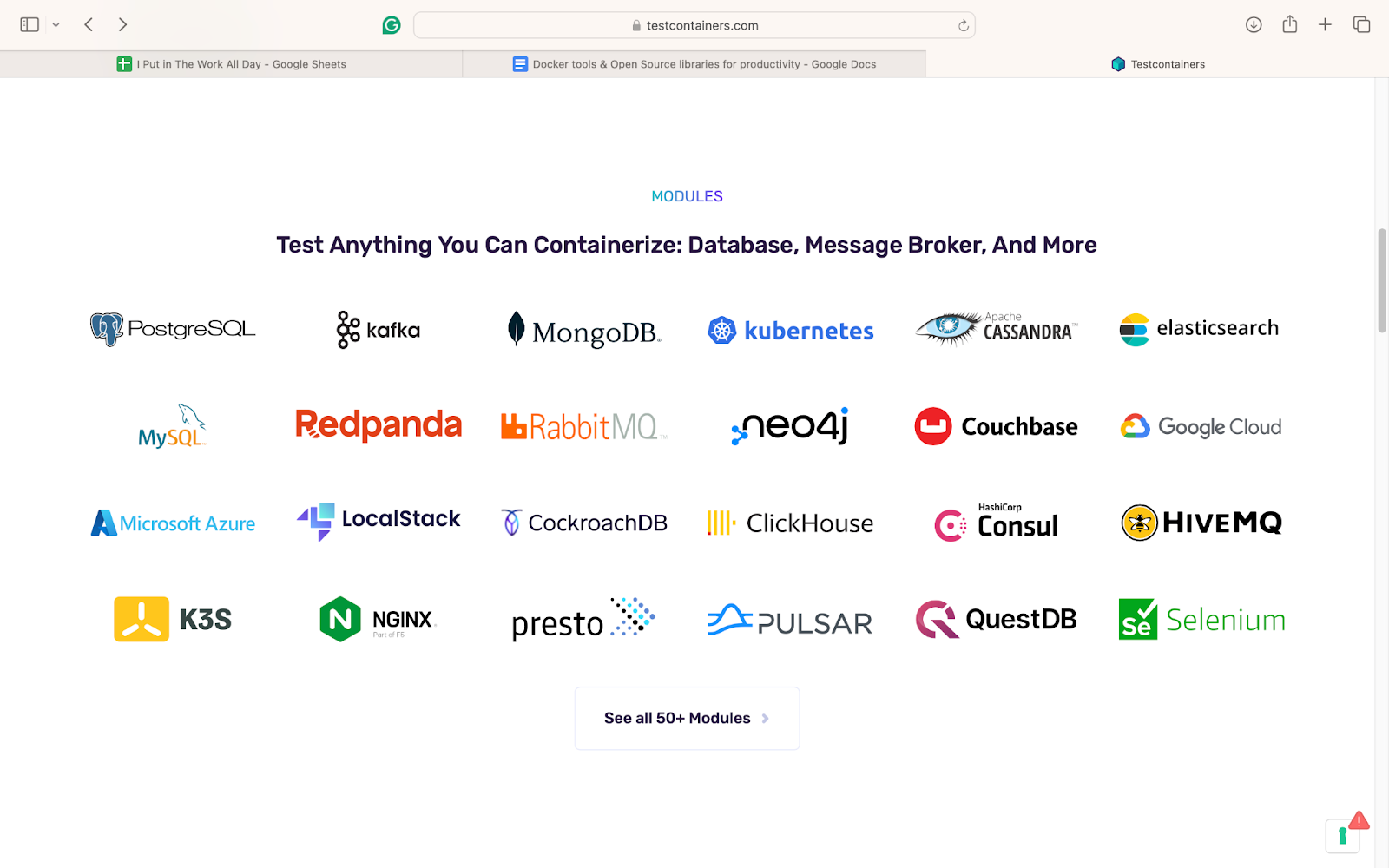

A selection of Testcontainers’s 50+ modular integrations


As its name suggests, Testcontainers offers the most significant productivity boosts in application testing, including data access layer, integration, and acceptance tests.

The benefits of conducting application testing with Testcontainers include:

Isolation and Consistency: containerizing components ensures that each test runs in a fresh, known state. For example, testing against containerized databases eliminates the inconsistencies that arise from differing local database setups, while containerized browsers make UI testing more reliable by removing the variations caused by local browser setups.

Environment Parity: developers can carry out end-to-end testing in an environment that closely resembles production, reducing discrepancies between environments and increasing the accuracy of test results.

Resource Management: because test containers are short-lived, created for a test and removed afterward, resources are used more efficiently, and tests can be executed and torn down quickly.

Scalability and Reliability: because each test provisions its own dependencies, test suites can be scaled (for example, run in parallel) without depending on shared, manually maintained test environments.
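The isolation and teardown benefits above follow from a simple lifecycle: every test gets a fresh dependency, uses it, and disposes of it even when the test fails. Testcontainers’ container classes implement AutoCloseable, so in Java this is typically expressed with try-with-resources. The stdlib-only sketch below illustrates that pattern with a hypothetical in-memory FakeDatabase standing in for a real container; it does not use or reproduce the Testcontainers API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for a containerized dependency.
// It is NOT a Testcontainers class; it only models the start/use/close lifecycle.
final class FakeDatabase implements AutoCloseable {
    static int liveInstances = 0; // instances started but not yet closed

    private final List<String> rows = new ArrayList<>();
    private boolean running = false;

    void start() {
        running = true;
        liveInstances++;
    }

    void insert(String row) {
        if (!running) {
            throw new IllegalStateException("database not started");
        }
        rows.add(row);
    }

    int count() {
        return rows.size();
    }

    @Override
    public void close() {
        if (running) {
            running = false;
            liveInstances--;
        }
        rows.clear(); // discard all state on teardown
    }
}

final class LifecycleDemo {
    // Each call plays the role of one test: a fresh instance, used, then torn down.
    static int runTest(int rowsToInsert) {
        try (FakeDatabase db = new FakeDatabase()) {
            db.start();
            for (int i = 0; i < rowsToInsert; i++) {
                db.insert("row-" + i);
            }
            return db.count(); // evaluated before close() runs
        }
    }

    // A failing test still triggers close(), so nothing is left running.
    static void runFailingTest() {
        try (FakeDatabase db = new FakeDatabase()) {
            db.start();
            throw new IllegalStateException("simulated test failure");
        }
    }

    public static void main(String[] args) {
        System.out.println(runTest(3)); // 3
        System.out.println(runTest(1)); // 1 (no rows leaked from the previous test)
        try {
            runFailingTest();
        } catch (IllegalStateException e) {
            System.out.println(FakeDatabase.liveInstances); // 0
        }
    }
}
```

With real Testcontainers classes, the same try-with-resources shape stops and removes the container when the block exits.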

Aside from making application testing easier and more effective, Testcontainers can help you develop environment-agnostic AI applications. Let’s now turn our attention to a few of the other key ways that Testcontainers increases productivity.

Running a Milvus Instance

For a first example of how to use Testcontainers to increase productivity, let’s create a Milvus instance, as shown in the code sample below. (All code samples in this article are written in Java, but Testcontainers is also available for Python, Node.js, Go, Rust, and several other languages.)

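import io.milvus.client.MilvusServiceClient;
import io.milvus.param.ConnectParam;
import org.testcontainers.milvus.MilvusContainer;

public class MilvusExample {

    public static void main(String[] args) {
        // Start a throwaway Milvus container pinned to a specific image version;
        // try-with-resources stops and removes it when the block exits
        try (MilvusContainer milvus = new MilvusContainer("milvusdb/milvus:v2.3.9")) {
            milvus.start();

            // Connect the Milvus Java SDK client to the container's mapped endpoint
            MilvusServiceClient milvusClient = new MilvusServiceClient(
                ConnectParam.newBuilder().withUri(milvus.getEndpoint()).build());

            // ... create collections, insert vectors, and run searches here ...

            milvusClient.close();
        }
    }
}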

Instead of having to install and configure a separate Milvus instance and integrate it with your application, Testcontainers provides an easy-to-use abstraction that starts the instance from within the code.

First, we create a new instance of a Milvus container, specifying the version by passing the appropriate container image (here, milvusdb/milvus:v2.3.9) as a parameter. As well as streamlining application development, this makes it easier to update applications going forward, as you only have to specify the updated image. From there, we start the container using the .start() method and pass its endpoint (milvus.getEndpoint()) to a new MilvusServiceClient, which lets you interact with the service running inside the container.

In addition to creating a Milvus instance in just a few lines of code, this approach ensures the component never falls out of sync with the application’s requirements, because the application itself starts it.
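Pinning the dependency to a tagged image such as milvusdb/milvus:v2.3.9 is what turns an upgrade into a one-line change. To make the idea concrete, the stdlib-only sketch below splits an image reference into a repository and a tag and swaps the tag. ImageRef is a hypothetical, simplified record (Testcontainers’ own DockerImageName class does the real parsing and validation), it ignores digests such as @sha256:..., and v2.4.0 is only an illustrative tag.

```java
// Hypothetical, simplified image-reference parser (not Testcontainers' DockerImageName).
// Handles "repository[:tag]", including registries with ports such as
// "localhost:5000/team/app"; digests ("@sha256:...") are out of scope.
record ImageRef(String repository, String tag) {

    static ImageRef parse(String reference) {
        int lastSlash = reference.lastIndexOf('/');
        int lastColon = reference.lastIndexOf(':');
        // A colon separates the tag only if it appears after the last slash;
        // otherwise it belongs to a registry host:port prefix.
        if (lastColon > lastSlash) {
            return new ImageRef(reference.substring(0, lastColon), reference.substring(lastColon + 1));
        }
        return new ImageRef(reference, "latest"); // Docker's default tag
    }

    // Upgrading the dependency means changing only the tag.
    ImageRef withTag(String newTag) {
        return new ImageRef(repository, newTag);
    }

    @Override
    public String toString() {
        return repository + ":" + tag;
    }

    public static void main(String[] args) {
        ImageRef milvus = ImageRef.parse("milvusdb/milvus:v2.3.9");
        System.out.println(milvus.repository());                     // milvusdb/milvus
        System.out.println(milvus.tag());                            // v2.3.9
        System.out.println(milvus.withTag("v2.4.0"));                // milvusdb/milvus:v2.4.0
        System.out.println(ImageRef.parse("localhost:5000/team/app")); // localhost:5000/team/app:latest
    }
}
```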

Combining Ollama and HuggingFace for LLM Integration

A second way that Testcontainers enhances productivity is by streamlining the integration of AI components, such as LLMs, into applications. This lets you make LLM calls from within the code to add LLM functionality to applications and, just as importantly, makes AI components a normal part of the DevOps process.

In the code snippet below, we create an OllamaHuggingFaceContainer class, which extends the existing OllamaContainer class from Testcontainers to launch an Ollama container that serves a deep learning model downloaded from HuggingFace.

Ollama is a platform that allows you to serve models for inference locally on your device, while HuggingFace is an indispensable hub for downloading open-source AI models. By combining the two resources, you gain access to the many models supported by Ollama, as well as any model in GGUF (GPT-Generated Unified Format), of which there are some 23,000 on HuggingFace.
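Because Ollama can load GGUF files directly, a quick sanity check before handing a downloaded file to it is to look at the file’s header. A GGUF file begins with the four ASCII bytes GGUF, followed by the format version as a little-endian 32-bit integer. The stdlib-only sketch below reads just those two fields; GgufHeader is a hypothetical helper, not part of Ollama, HuggingFace, or Testcontainers, and it does not parse the rest of the header.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.OptionalInt;

// Hypothetical helper (not part of Ollama, HuggingFace, or Testcontainers):
// inspects only the first 8 bytes of a GGUF file.
final class GgufHeader {
    private static final byte[] MAGIC = "GGUF".getBytes(StandardCharsets.US_ASCII);

    // Returns the format version if the bytes start with the GGUF magic,
    // otherwise an empty OptionalInt.
    static OptionalInt version(byte[] header) {
        if (header.length < 8 || !Arrays.equals(Arrays.copyOf(header, 4), MAGIC)) {
            return OptionalInt.empty();
        }
        // The version is a little-endian 32-bit integer stored right after the magic.
        int version = ByteBuffer.wrap(header, 4, 4).order(ByteOrder.LITTLE_ENDIAN).getInt();
        return OptionalInt.of(version);
    }

    // Convenience overload that reads the header from a file on disk.
    static OptionalInt version(Path file) throws IOException {
        try (InputStream in = Files.newInputStream(file)) {
            return version(in.readNBytes(8));
        }
    }

    public static void main(String[] args) {
        byte[] sample = {'G', 'G', 'U', 'F', 3, 0, 0, 0}; // magic + version 3
        System.out.println(version(sample));               // OptionalInt[3]
        System.out.println(version(new byte[] {1, 2, 3})); // OptionalInt.empty
    }
}
```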

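package org.testcontainers.huggingface;

// Some of these imports are used by the methods omitted from this excerpt
import com.github.dockerjava.api.command.InspectContainerResponse;
import org.testcontainers.containers.ContainerLaunchException;
import org.testcontainers.images.builder.ImageFromDockerfile;
import org.testcontainers.ollama.OllamaContainer;
import org.testcontainers.utility.DockerImageName;

import java.io.IOException;

public class OllamaHuggingFaceContainer extends OllamaContainer {

    // Which HuggingFace repository and model file to serve
    // (the HuggingFaceModel type is defined elsewhere and not shown here)
    private final HuggingFaceModel huggingFaceModel;

    public OllamaHuggingFaceContainer(HuggingFaceModel model) {
        // Base the container on a pinned Ollama image
        super(DockerImageName.parse("ollama/ollama:0.1.44"));
        this.huggingFaceModel = model;
    }

    // ... remaining methods (downloading the model from HuggingFace and
    // serving it with Ollama) are omitted from this excerpt ...
}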
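The excerpt above refers to a HuggingFaceModel type and omits the setup logic. One plausible way to express that setup, offered as a stdlib-only sketch rather than the talk’s actual code, is a small record that produces the commands to run inside the Ollama container: huggingface-cli download (the huggingface_hub command-line tool) fetches a single GGUF file, and ollama create registers it from a Modelfile. The record, its ollamaName naming rule, and the assumption that huggingface-cli has been installed in the container are all hypothetical; in a real container subclass, each command could be passed to Testcontainers’ execInContainer method.

```java
import java.util.List;

// Hypothetical value type, NOT the class used in the talk: which HuggingFace
// repository and GGUF file to serve, and the Modelfile used to register it.
record HuggingFaceModel(String repository, String model, String modelfileContent) {

    // Name to register the model under in Ollama: the file name without ".gguf"
    // (an arbitrary naming choice for this sketch).
    String ollamaName() {
        return model.endsWith(".gguf")
            ? model.substring(0, model.length() - ".gguf".length())
            : model;
    }

    // Commands to run inside the Ollama container, in order. Each inner list is one
    // command, e.g. to pass as varargs to GenericContainer#execInContainer.
    List<List<String>> setupCommands() {
        return List.of(
            // 1. Download a single file from the repository (assumes the
            //    huggingface_hub CLI has been installed in the container)
            List.of("huggingface-cli", "download", repository, model, "--local-dir", "."),
            // 2. Write the Modelfile; the content is passed as positional argument $1,
            //    so its quotes need no shell escaping
            List.of("sh", "-c", "printf '%s' \"$1\" > Modelfile", "sh", modelfileContent),
            // 3. Register the downloaded weights with Ollama under ollamaName()
            List.of("ollama", "create", ollamaName(), "-f", "Modelfile"));
    }

    public static void main(String[] args) {
        HuggingFaceModel m = new HuggingFaceModel(
            "xtuner/llava-phi-3-mini-gguf",
            "llava-phi-3-mini-int4.gguf",
            "FROM ./llava-phi-3-mini-int4.gguf\n");
        for (List<String> command : m.setupCommands()) {
            System.out.println(String.join(" ", command));
        }
    }
}
```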

Automated Deployment of AI Models

Because Testcontainers lets the application start AI models itself, it also offers a more convenient, automated way of distributing those models. While open-source models are easy to obtain from HuggingFace, they are stored as collections of files and directories in Git repositories. Not only is this daunting for newcomers, but it is also far from the most convenient way for seasoned developers to work with models.

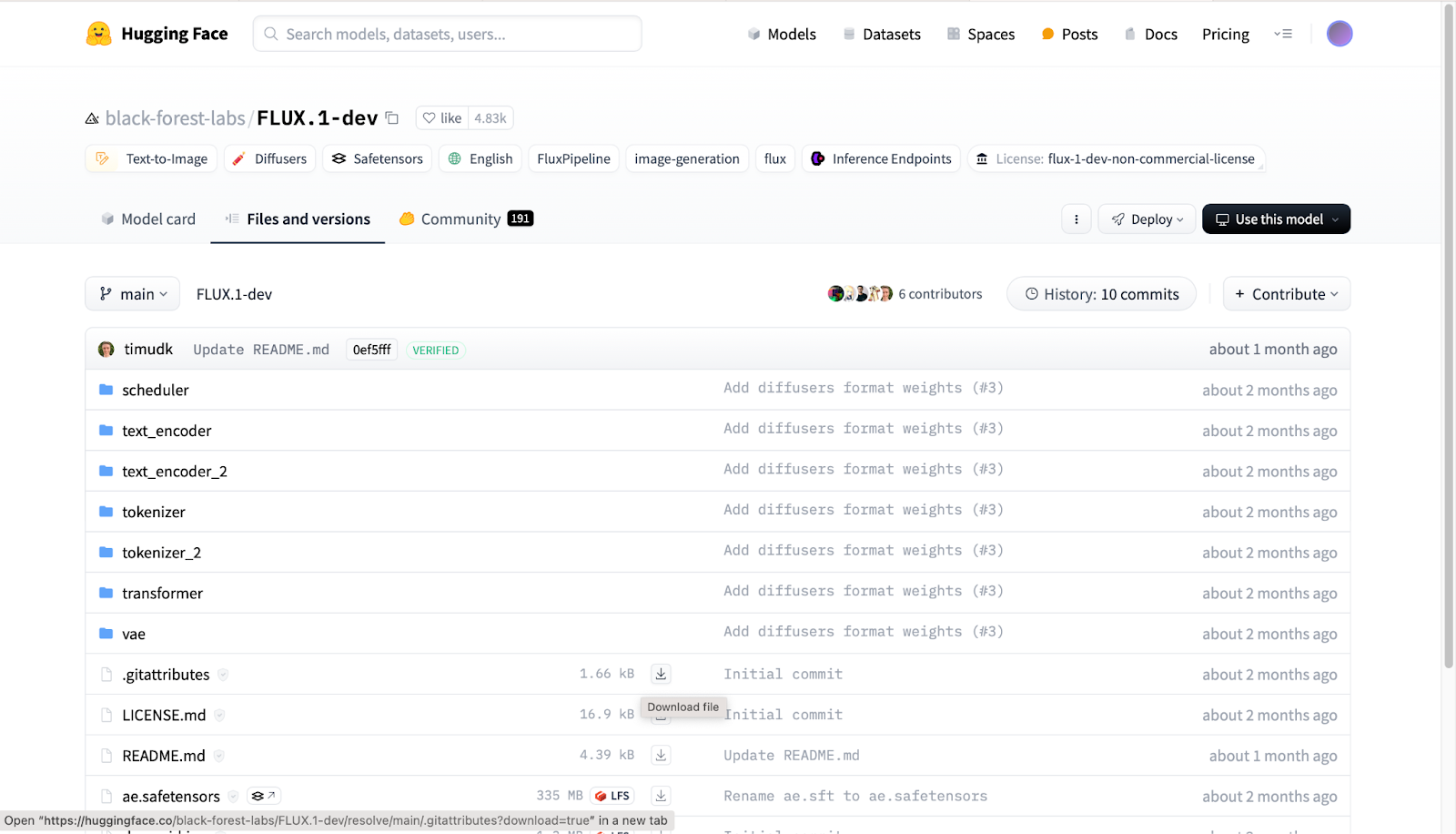

A typical HuggingFace repository

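Because model repositories are ordinary Git repositories, each file can also be fetched directly over HTTPS, which is what makes scripted downloads possible. HuggingFace serves files at https://huggingface.co/<repository>/resolve/<revision>/<file>, where the revision is a branch such as main, a tag, or a commit hash. The sketch below only builds that URL with the standard library; HfUrls is a hypothetical helper, revisions containing slashes are not handled, and the download itself (which involves following redirects) is left out.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: builds the HTTPS URL of one file in a HuggingFace model repository.
final class HfUrls {

    static URI resolve(String repository, String revision, String file) {
        // Model repositories are addressed as "owner/name"
        String[] parts = repository.split("/", -1);
        if (parts.length != 2 || parts[0].isEmpty() || parts[1].isEmpty()) {
            throw new IllegalArgumentException("expected owner/name, got: " + repository);
        }
        try {
            // The multi-argument URI constructor percent-encodes characters that are
            // illegal in a path (such as spaces) while keeping "/" separators intact.
            return new URI("https", "huggingface.co",
                "/" + repository + "/resolve/" + revision + "/" + file, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(resolve("xtuner/llava-phi-3-mini-gguf", "main", "llava-phi-3-mini-int4.gguf"));
        // https://huggingface.co/xtuner/llava-phi-3-mini-gguf/resolve/main/llava-phi-3-mini-int4.gguf
    }
}
```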

Instead of configuring and deploying a model manually, you can automate the process with a script. This, again, reduces the administrative overhead for developers while adding another level of abstraction over the model itself. The code below (a snippet from a longer test) shows how a model can be specified and configured from within the code: repository names the HuggingFace repository to download from, model and visionAdapter name the exact GGUF files to use, and the modelfile variable defines the Ollama Modelfile, including a prompt template and stop parameters. The test first tries to start a container from a locally built image; if that image does not exist yet, starting fails with a ContainerFetchException, so the test builds the image with a createImage helper (not shown) and starts the container again.

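import org.junit.jupiter.api.Test;
import org.testcontainers.containers.ContainerFetchException;
import org.testcontainers.ollama.OllamaContainer;
import org.testcontainers.utility.DockerImageName;

import java.io.IOException;

public class HuggingFaceVisionModelTest {

    @Test
    public void visionModelWithHuggingFace() throws IOException {
        // Docker image names must be lowercase
        String imageName = "vision-model-from-huggingface";
        String repository = "xtuner/llava-phi-3-mini-gguf";
        String model = "llava-phi-3-mini-int4.gguf";
        String visionAdapter = "llava-phi-3-mini-mmproj-f16.gguf";

        // Ollama Modelfile: model weights, vision projector, Phi-3 prompt template,
        // and generation parameters. The inner triple quotes are escaped (\""")
        // so they do not terminate the Java text block.
        String modelfile = """
            FROM ./llava-phi-3-mini-int4.gguf
            FROM ./llava-phi-3-mini-mmproj-f16.gguf
            TEMPLATE \"""{{ if .System }}<|system|>
            {{ .System }}<|end|>
            {{ end }}{{ if .Prompt }}<|user|>
            {{ .Prompt }}<|end|>
            {{ end }}<|assistant|>
            {{ .Response }}<|end|>
            \"""
            PARAMETER stop "<|user|>"
            PARAMETER stop "<|assistant|>"
            PARAMETER stop "<|system|>"
            PARAMETER stop "<|end|>"
            PARAMETER stop "<|endoftext|>"
            PARAMETER num_keep 4
            PARAMETER num_ctx 4096
            """;

        // withReuse(true) only takes effect when testcontainers.reuse.enable=true
        // is set in ~/.testcontainers.properties
        try (
            OllamaContainer ollama = new OllamaContainer(
                DockerImageName.parse(imageName).asCompatibleSubstituteFor("ollama/ollama:0.1.44")
            ).withReuse(true)
        ) {
            try {
                // Start from the locally built image if it already exists
                ollama.start();
            } catch (ContainerFetchException ex) {
                // Image not found: build it (createImage is a helper from the full
                // test, not shown here), then start the container again
                createImage(imageName, repository, model, modelfile, visionAdapter);
                ollama.start();
            }

            // ... send prompts to the model at ollama.getEndpoint() ...
        }
    }
}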
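The nested try/catch in the test implements a start-or-build fallback: the expensive image build happens only when the image is missing, and later runs start straight from the locally cached image. The stdlib-only sketch below isolates that control flow. ImageStore and ImageNotFoundException are hypothetical stand-ins for the local Docker image cache and Testcontainers’ ContainerFetchException; no Docker or Testcontainers API is involved.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.Consumer;

// Hypothetical stand-in for Testcontainers' ContainerFetchException.
final class ImageNotFoundException extends RuntimeException {
    ImageNotFoundException(String image) {
        super("image not found: " + image);
    }
}

// Hypothetical stand-in for the local Docker image cache.
final class ImageStore {
    private final Set<String> images = new HashSet<>();
    int builds = 0; // how many times an image had to be built

    void build(String image) {
        builds++;
        images.add(image);
    }

    // "Starting" fails if the image has never been built.
    String start(String image) {
        if (!images.contains(image)) {
            throw new ImageNotFoundException(image);
        }
        return "running:" + image;
    }
}

final class StartOrBuild {
    // Mirrors the test: try to start; if the image is missing, build it and retry once.
    static String startOrBuild(ImageStore store, String image, Consumer<String> builder) {
        try {
            return store.start(image);
        } catch (ImageNotFoundException e) {
            builder.accept(image);
            return store.start(image);
        }
    }

    public static void main(String[] args) {
        ImageStore store = new ImageStore();
        String image = "example-model-image";
        System.out.println(startOrBuild(store, image, store::build)); // running:example-model-image
        System.out.println(startOrBuild(store, image, store::build)); // running:example-model-image
        System.out.println(store.builds); // 1 (the second run reused the cached image)
    }
}
```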

As alluded to earlier, this approach works for any model supported by Ollama, as well as the vast number of models available in GGUF format. Deploying a model this way also makes it easier to upgrade it in future iterations of your application, as you won’t have to change the environment, only the references to the model in the code.
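Since the model is described entirely in code, swapping it can come down to changing a few strings. One way to make that explicit, sketched below as a hypothetical helper rather than anything from the talk or from Ollama’s own tooling, is to generate the Modelfile from its parts (FROM files, an optional TEMPLATE, and PARAMETER lines) instead of hard-coding the whole text block.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical builder for Ollama Modelfile text (not part of Ollama or Testcontainers).
final class ModelfileBuilder {
    private final List<String> fromFiles = new ArrayList<>();
    private final List<Map.Entry<String, String>> parameters = new ArrayList<>();
    private String template; // optional prompt template

    // Each FROM line points at a local GGUF file (weights or, e.g., a vision projector).
    ModelfileBuilder from(String file) {
        fromFiles.add(file);
        return this;
    }

    ModelfileBuilder template(String template) {
        this.template = template;
        return this;
    }

    // PARAMETER lines may repeat (several "stop" values), so they are kept in order.
    ModelfileBuilder parameter(String name, String value) {
        parameters.add(Map.entry(name, value));
        return this;
    }

    // Convenience for stop tokens, which are written as quoted strings.
    ModelfileBuilder stop(String token) {
        return parameter("stop", "\"" + token + "\"");
    }

    String build() {
        StringBuilder sb = new StringBuilder();
        for (String file : fromFiles) {
            sb.append("FROM ./").append(file).append('\n');
        }
        if (template != null) {
            // Triple quotes allow a multi-line template.
            sb.append("TEMPLATE \"\"\"").append(template).append("\"\"\"\n");
        }
        for (Map.Entry<String, String> p : parameters) {
            sb.append("PARAMETER ").append(p.getKey()).append(' ').append(p.getValue()).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Upgrading the model means changing only these file names.
        String modelfile = new ModelfileBuilder()
            .from("llava-phi-3-mini-int4.gguf")
            .from("llava-phi-3-mini-mmproj-f16.gguf")
            .stop("<|end|>")
            .parameter("num_ctx", "4096")
            .build();
        System.out.print(modelfile);
    }
}
```

Replacing the two file names in main is all it takes to point the generated Modelfile at a different GGUF model.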

Conclusion

In summary:

Containers are standardized packages of application code that contain all of the required components, including binaries, dependencies, libraries, and configuration files, to run in any environment.

Benefits of containers include:

Increased business agility

Enhanced scalability

Increased efficiency

Lower costs

Use cases for containers include:

Developing cloud-native applications

Modernizing applications

Prototyping

IoT devices

DevOps

Docker is a platform that allows developers to create, deploy, configure, and manage containers.

Some of the most widely-used Docker tools include:

Docker Enterprise

Docker Swarm

Docker Compose

Kubernetes

Testcontainers

Testcontainers is an open-source framework that provides modular instances of pre-configured dependencies that can run in a container, such as databases, browsers, and message brokers.

Testcontainers streamlines application testing by providing:

Isolation and consistency

Environment parity

Resource management

Scalability and reliability

Other productivity and efficiency boosts presented by Testcontainers include:

The abstraction of dependencies, making them easier to work with, without having to install additional software or make changes to the application’s environment(s).

The simple invocation of AI components, making them a normal part of DevOps processes.

The abstraction and automated deployment of LLMs.

Further Reading

We encourage you to check out the resources below to learn more about containers, Docker, DevOps, and their role in creating the next generation of AI-driven solutions.
