Harnessing Function Calling to Build Smarter LLM Applications

Large language models (LLMs) are no longer limited to generating text; they now handle more complex, context-driven tasks. A key advancement in this area is function calling, which enables LLMs to interact with external tools, databases, and APIs to perform dynamic operations. This allows them to go beyond text generation and work with real-world data and services.

At a recent Berlin Unstructured Data Meetup hosted by Zilliz, Nikolai Danylchyk, a customer engineer at Google, discussed how Gemini, a modern LLM, uses function calling to extend its capabilities. This blog will recap his insights and demonstrate how to leverage this powerful feature to build advanced LLM applications. If you want to learn more details, we recommend you watch the replay of Nikolai’s talk on YouTube.

Understanding Advanced Function Calling in LLMs

LLMs have evolved significantly, but their true potential shines when interacting with external systems through function calls. This capability allows LLMs to go beyond the limitations of their static training data by querying live databases, executing commands, or performing real-time calculations. By integrating function calling, LLMs can seamlessly connect with other systems to deliver more accurate, current, and dynamic responses. Let’s break down how function calling works using Gemini as an example.

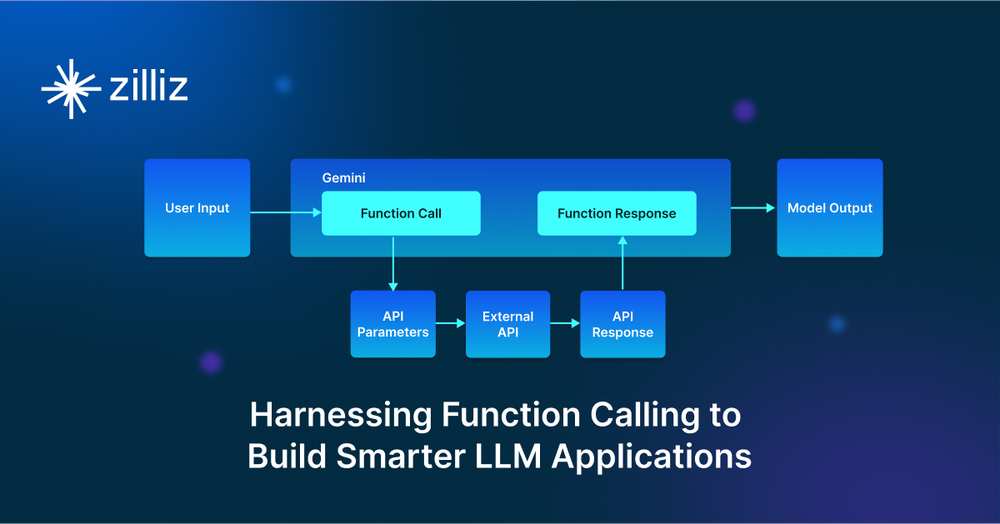

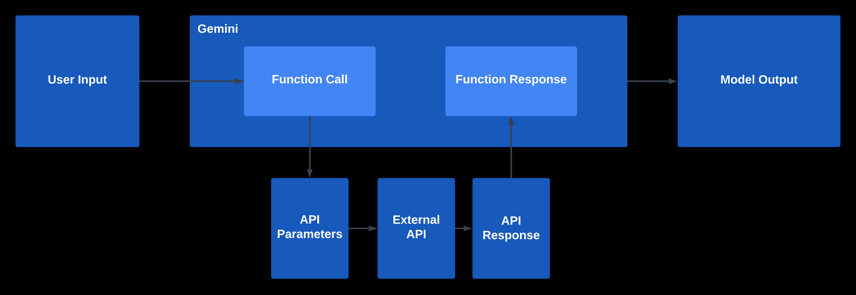

Figure 1: Steps of function calling and components used at runtime.

The process starts when a user submits a request to the Gemini API. At this point, the developer has already defined one or more function declarations within a tool, informing Gemini of the available functions and the parameters they accept. Once the user input is received, the Gemini API analyzes the content and prompt and returns a "Function Call" response. This response contains structured data: the name of the function to call and the parameters to pass to it.

The next step occurs outside the Gemini system: the application uses the function name and parameters from Gemini’s response to make an API request to an external service. This is where the developer steps in, using tools like the requests library in Python to call a REST API or any other client library suited to their needs.

After the external system returns its response, the application sends that data back to Gemini. The model then uses this new information to generate a final response for the user. If additional data is required, Gemini can issue another function call, continuing the cycle as needed.
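The cycle described above can be sketched without any network access. This is a minimal illustration, not the Gemini SDK: `fake_model`, `ModelTurn`, `get_weather`, and `run_conversation` are hypothetical names, and the stand-in model simply requests one function call and then answers from the result.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelTurn:
    """One model reply: either a function-call request or final text."""
    function_name: Optional[str] = None
    function_args: dict = field(default_factory=dict)
    text: Optional[str] = None


def fake_model(history: list) -> ModelTurn:
    """Hypothetical stand-in for the model API (no network access).

    It asks for a function call until a function result is in the history,
    then writes a final answer from that result.
    """
    for entry in reversed(history):
        if entry["role"] == "function":
            data = entry["content"]
            return ModelTurn(
                text=f"It is {data['temperature_c']} degrees Celsius in {data['city']}."
            )
    return ModelTurn(function_name="get_weather", function_args={"city": "Berlin"})


def get_weather(city: str) -> dict:
    """Hypothetical external service; a real app would call a REST API here."""
    return {"city": city, "temperature_c": 18}


# The application, not the model, owns the mapping from names to real code.
FUNCTIONS = {"get_weather": get_weather}


def run_conversation(user_prompt: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        turn = fake_model(history)
        if turn.function_name is None:
            return turn.text  # final answer for the user
        # Execute the requested function outside the model ...
        result = FUNCTIONS[turn.function_name](**turn.function_args)
        # ... and send the result back so the model can continue.
        history.append(
            {"role": "function", "name": turn.function_name, "content": result}
        )
    raise RuntimeError("model did not produce a final answer")


answer = run_conversation("What's the weather in Berlin?")
print(answer)
```

The `max_steps` cap is a design choice for the sketch: since the model may request several calls in a row, a bound keeps a misbehaving loop from running forever.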

To understand the significance of this advanced capability, consider a traditional LLM without the function-calling feature. Its responses are generated based on the data it was trained on, which may be outdated or limited in scope. However, with function calls, an LLM can issue commands to pull in real-time information from a database, update a record in a customer relationship management (CRM) system, or even trigger actions such as booking a ticket or placing an order. This approach expands the model’s usability from merely generating responses to performing tasks.

In technical terms, a function call in an LLM involves a structured interaction between the model and an external API or service. The model identifies a scenario where a function call is required and formulates the function name and parameters for the call; the application then executes it and returns the result to the model.

For example, if a user asks, “What’s the weather in Berlin?”, the function call would trigger a weather API, retrieving real-time data to generate the response. In this case, the LLM acts as an intelligent intermediary between the user and the external system, orchestrating a more accurate and useful interaction.

Now that we've explored the basic concept of function calling, let's look at a practical implementation using Gemini to interact with a real-world API—retrieving currency exchange rates.

Example: Using Function Calling for Currency Exchange Rate Retrieval

Let’s see how we can utilize a currency exchange rate API through Gemini function calling:

Installing the Necessary Libraries and Setting Project Variables

Let’s begin by upgrading and installing the google-cloud-aiplatform library, which is needed to interact with Google Cloud's Vertex AI platform. Then, provide your project ID and location as variables.

    !pip3 install --upgrade --user --quiet google-cloud-aiplatform

    PROJECT_ID = "[your-project-id]"  # @param {type:"string"}
    LOCATION = "us-central1"  # @param {type:"string"}

These are placeholders for identifying which project and region in Google Cloud you're working with.

Importing Necessary Modules and Defining the Generative Model

Then, import the required modules to define and work with a generative model from Vertex AI. The requests library will be used later to fetch data from an external API.

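    import requests
    import vertexai
    from vertexai.generative_models import (
        Content,
        FunctionDeclaration,
        GenerativeModel,
        Part,
        Tool,
    )

    # Point the SDK at the project and region defined earlier.
    vertexai.init(project=PROJECT_ID, location=LOCATION)

    model = GenerativeModel("gemini-1.5-pro-001")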

The last line initializes a generative model, in this case, gemini-1.5-pro-001. You will later use the model to generate responses based on the input prompt.

Defining a Function for Currency Exchange Rates and Creating a Tool

Next, create a function that describes the parameters needed to obtain an exchange rate between two currencies and wrap it into a tool.

    get_exchange_rate_func = FunctionDeclaration(
        name="get_exchange_rate",
        description="Get the exchange rate for currencies between countries",
        parameters={
            "type": "object",
            "properties": {
                "currency_date": {
                    "type": "string",
                    "description": "A date that must always be in YYYY-MM-DD format or the value 'latest' if a time period is not specified"
                },
                "currency_from": {
                    "type": "string",
                    "description": "The currency to convert from in ISO 4217 format"
                },
                "currency_to": {
                    "type": "string",
                    "description": "The currency to convert to in ISO 4217 format"
                }
            },
            "required": [
                "currency_from",
                "currency_date",
            ]
        },
    )

    exchange_rate_tool = Tool(
        function_declarations=[get_exchange_rate_func],
    )

The parameters include currency_date (in YYYY-MM-DD format or the keyword latest), currency_from (the ISO 4217 code of the currency to convert from), and currency_to (the ISO 4217 code of the currency to convert to). The required section specifies that currency_from and currency_date are mandatory. We then define a tool named exchange_rate_tool, which wraps the get_exchange_rate function declaration. We will later pass this tool to the model to handle exchange rate retrieval.
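Because the model fills in these parameters itself, an application may want to check them before calling anything external. The following is a minimal sketch of such a check. `validate_args` and the two regular expressions are assumptions made for illustration and are not part of the Vertex AI SDK; the schema dictionary copies the types and `required` list from the declaration above.

```python
import re

# Copy of the declaration's parameter schema (descriptions omitted).
EXCHANGE_RATE_PARAMETERS = {
    "type": "object",
    "properties": {
        "currency_date": {"type": "string"},
        "currency_from": {"type": "string"},
        "currency_to": {"type": "string"},
    },
    "required": ["currency_from", "currency_date"],
}

DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")  # YYYY-MM-DD
CURRENCY_PATTERN = re.compile(r"[A-Z]{3}")  # ISO 4217 alphabetic code


def validate_args(args: dict, schema: dict = EXCHANGE_RATE_PARAMETERS) -> list:
    """Return a list of problems; an empty list means the args look usable."""
    problems = []
    for name in schema["required"]:
        if name not in args:
            problems.append(f"missing required parameter: {name}")
    for name in args:
        if name not in schema["properties"]:
            problems.append(f"unexpected parameter: {name}")
    date = args.get("currency_date")
    if date is not None and date != "latest" and not DATE_PATTERN.fullmatch(date):
        problems.append(f"currency_date is not YYYY-MM-DD or 'latest': {date}")
    for name in ("currency_from", "currency_to"):
        code = args.get(name)
        if code is not None and not CURRENCY_PATTERN.fullmatch(code):
            problems.append(f"{name} is not a three-letter code: {code}")
    return problems


good = validate_args(
    {"currency_date": "latest", "currency_from": "AUD", "currency_to": "SEK"}
)
bad = validate_args({"currency_from": "aud"})
print(good)
print(bad)
```

Returning a list of problems rather than raising on the first one makes it easy to send all of them back to the model in a single follow-up turn.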

Generating a Response with the Model and Extracting Parameters from the Response

Let’s now invoke a response by passing a prompt to the model. We expect the model to output a response containing a function call with parameters.

    prompt = """What is the exchange rate from Australian dollars to Swedish krona?
    How much is 500 Australian dollars worth in Swedish krona?"""

    response = model.generate_content(
        prompt,
        tools=[exchange_rate_tool],
    )
    response.candidates[0].content

    # Copy the function-call arguments, dropping the 9-character "currency_" prefix.
    params = {}
    for key, value in response.candidates[0].content.parts[0].function_call.args.items():
        params[key[9:]] = value
    params

The above code defines a prompt asking for the exchange rate between Australian dollars and Swedish krona. The model is then called using generate_content, passing the prompt along with the exchange_rate_tool. Because a tool is attached, the model can answer with a structured function call instead of plain text.

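The response is then parsed. The params dictionary is populated by iterating through the keys and values of function_call.args and stripping off the first 9 characters of each key, which is the "currency_" prefix. As a result, currency_date, currency_from, and currency_to become date, from, and to, the names used by the request in the next step.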
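Slicing a fixed number of characters works only while every key starts with the same prefix. As a small sketch of a more defensive variant, the hypothetical helper `strip_prefix` below removes the prefix only where it is present. The sample arguments are written by hand to mirror what the model is expected to return.

```python
PREFIX = "currency_"  # 9 characters, matching the key[9:] slice


def strip_prefix(args: dict, prefix: str = PREFIX) -> dict:
    """Drop `prefix` from keys that start with it; leave other keys untouched."""
    return {
        (key[len(prefix):] if key.startswith(prefix) else key): value
        for key, value in args.items()
    }


# Hand-written stand-in for response.candidates[0].content.parts[0].function_call.args
sample_args = {"currency_date": "latest", "currency_from": "AUD", "currency_to": "SEK"}
stripped = strip_prefix(sample_args)
print(stripped)  # {'date': 'latest', 'from': 'AUD', 'to': 'SEK'}
```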

Fetching Exchange Rates from an API and Giving a Response to the User

Let’s now query the Frankfurter API (a free foreign exchange rates API) using the parameters extracted from the previous response and generate a response to the user.

    import requests

    # The date ("latest" or YYYY-MM-DD) selects the endpoint; params is sent as the query string.
    url = f"https://api.frankfurter.app/{params['date']}"
    api_response = requests.get(url, params=params)
    api_response.text

    response = model.generate_content(
        [
            # The original question, plus instructions for the final answer.
            Content(role="user", parts=[
                Part.from_text(prompt + """ Give your answer in steps with lots of detail
                    and context, including the exchange rate and date."""),
            ]),
            # The function call the model asked for.
            Content(role="function", parts=[
                Part.from_dict({
                    "function_call": {
                        "name": "get_exchange_rate",
                    }
                })
            ]),
            # The raw API result, returned to the model as the function response.
            Content(role="function", parts=[
                Part.from_function_response(
                    name="get_exchange_rate",
                    response={
                        "content": api_response.text,
                    }
                )
            ]),
        ],
        tools=[exchange_rate_tool],
    )
    response.candidates[0].content.parts[0].text

In the above code, once we get a response from the exchange rate API, we call the generate_content method again, this time passing multiple contents: one from the user (requesting detailed steps and context), a function call to get_exchange_rate, and the response from the Frankfurter API. This combination prompts the model to give a more thorough, step-by-step response. We finally print the text part of the model’s generated content. This is the response we give back to the user.

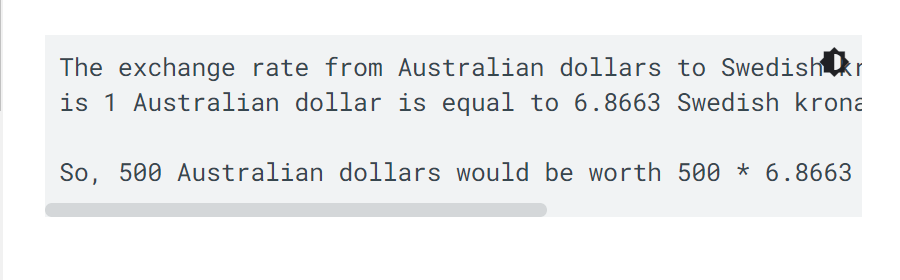

Here is a sample response:

Figure 2: Output of a program showing the exchange rate from Australian dollars to Swedish Krona

The response shows that the function call worked and gave us the current exchange rate as listed by the Frankfurter API.
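Since the model performs the 500 AUD conversion in natural language, it can be worth recomputing the figure directly from the API payload. The sketch below assumes the Frankfurter response is JSON with a `rates` object keyed by currency code. The sample payload, including its rate and date, is made up for illustration, and `convert` is a hypothetical helper.

```python
import json

# Illustrative payload only: the rate and date below are invented.
sample_api_response_text = json.dumps(
    {"amount": 1.0, "base": "AUD", "date": "2024-01-02", "rates": {"SEK": 7.0}}
)


def convert(amount: float, api_response_text: str, target: str) -> float:
    """Multiply `amount` by the rate for `target` found in the API payload."""
    payload = json.loads(api_response_text)
    rate = payload["rates"][target]
    return round(amount * rate, 2)


converted = convert(500, sample_api_response_text, "SEK")
print(converted)  # 3500.0
```

In the walkthrough above, `api_response.text` would take the place of `sample_api_response_text`.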

So far, we have covered function calling, an advanced feature of Gemini and many other LLMs. While function calling alone can handle tasks like currency rate retrieval, its true potential is realized when combined with other powerful techniques, such as Retrieval-Augmented Generation (RAG).

Combining Function Calling With Retrieval-Augmented Generation (RAG) for Enhanced Interactivity

Retrieval-Augmented Generation (RAG) has become one of the most significant trends in natural language processing (NLP). RAG systems combine the generative capabilities of LLMs with the efficiency of retrieval systems powered by vector databases such as Milvus and Zilliz Cloud (the managed Milvus). Specifically, in a RAG system, the vector database retrieves contextual information for the LLM, and the LLM then generates a more accurate response based on it. Function calling enhances this process by enabling more dynamic interaction between the LLM and external databases or systems: the LLM can not only retrieve relevant data but also process and act on it in real time.

For example, in a customer support application using both RAG and function calling, the LLM offers a richer, more personalized interaction. When a user asks, “Where is my recent order?”, the system first employs RAG to retrieve general information about order processing from the Milvus vector database, providing context such as “orders typically take 3-5 business days for delivery.” Simultaneously, through function calling, it accesses real-time data by querying the customer's account. The final response becomes, “Your order #12345 was shipped on September 10th and is expected to arrive on September 15th.”
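The customer support flow can be sketched with toy stand-ins for both halves. Nothing here uses the Milvus or Gemini APIs: the in-memory `KNOWLEDGE_BASE`, its hand-made 3-dimensional embeddings, and the functions `retrieve`, `get_order_status`, and `build_model_input` are all assumptions made for illustration.

```python
import math

# Toy knowledge base of (text, embedding) pairs. A real system would use an
# embedding model and a vector database such as Milvus.
KNOWLEDGE_BASE = [
    ("Orders typically take 3-5 business days for delivery.", [0.9, 0.1, 0.0]),
    ("Returns are accepted within 30 days of purchase.", [0.1, 0.9, 0.0]),
    ("Gift cards never expire.", [0.0, 0.1, 0.9]),
]


def cosine_similarity(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def retrieve(query_embedding: list, top_k: int = 1) -> list:
    """RAG half: return the texts whose embeddings are closest to the query."""
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda item: cosine_similarity(query_embedding, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


def get_order_status(customer_id: str) -> dict:
    """Function-calling half: hypothetical lookup of live account data."""
    orders = {
        "c-001": {
            "order_id": "12345",
            "shipped": "September 10th",
            "expected": "September 15th",
        }
    }
    return orders[customer_id]


def build_model_input(question: str, query_embedding: list, customer_id: str) -> dict:
    """Bundle general context and live data for the final generation step."""
    return {
        "question": question,
        "retrieved_context": retrieve(query_embedding),
        "function_response": get_order_status(customer_id),
    }


model_input = build_model_input("Where is my recent order?", [1.0, 0.0, 0.0], "c-001")
print(model_input)
```

The retrieved text supplies the general policy, while the function response supplies the customer-specific facts; the model would combine both when writing its final answer.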

This integration of RAG with function calling creates a dynamic system that not only generates relevant and informative responses but also actively engages with live data and services, adding an interactive dimension. This combination makes LLM-powered intelligent agents capable of complex, real-world interactions.

- Refer to this tutorial for a step-by-step guide on how to leverage LLM function calling capabilities to enhance RAG systems.

Use Cases of Combining RAG and Function Calling

Let’s take a look at several of these real-world interactions.

Healthcare: Medical Record Retrieval and Appointment Scheduling

In healthcare, RAG combined with function calling enables LLMs to provide highly personalized, data-driven support for both patients and healthcare providers. A doctor could ask the system for a patient's historical data, while the system retrieves relevant medical records using RAG from a vector database like Milvus. At the same time, through function calling, the LLM can interact with external hospital systems to schedule follow-up appointments or retrieve real-time diagnostic data.

Example: A physician asks, “What is the latest test result for patient X, and can you book a follow-up appointment next week?” The system first queries Milvus to retrieve related documents from the patient's history, providing insights into trends and conditions. Next, it uses function calling to access the hospital's scheduling API to book an appointment for the patient.

Finance: Personalized Investment Insights and Real-Time Transactions

In the financial sector, RAG is employed to retrieve relevant market information and historical financial data stored in vector databases like Milvus. By coupling this with function calling, an LLM can also perform real-time actions such as buying or selling stocks, transferring funds, or generating custom portfolio recommendations based on live data.

Example: A user asks, “How has my portfolio performed in the last six months, and can you buy $1000 worth of Apple stock?” The system first uses RAG to retrieve the user’s portfolio history from Milvus, providing a summary of past performance. Then, using function calling, it interacts with a trading API to execute the stock purchase and confirm the transaction to the user.

E-Commerce: Product Recommendations and Real-Time Order Tracking

In e-commerce, RAG can help retrieve product information, reviews, or customer history from Milvus to personalize a shopping experience. When combined with function calling, the system can interact with live inventory systems to provide stock availability, track orders in real time, or even process payments.

Example: A customer asks, “Can you recommend a laptop similar to my last purchase, and tell me when my current order will arrive?” The LLM retrieves information on the customer’s previous purchases from Milvus and recommends a similar product. Function calling is then used to query the order-tracking API to give real-time updates on the delivery status of the current order.

Travel: Itinerary Suggestions and Booking Management

In travel and hospitality, RAG can fetch details about destinations, hotels, and travel itineraries stored in a vector database like Milvus. Paired with function calling, the system can manage bookings, suggest personalized itineraries, or offer real-time updates on flights and reservations.

Example: A traveler asks, “Can you suggest a 5-day itinerary in Italy, and reschedule my hotel booking for an earlier date?” The system first retrieves itinerary suggestions from Milvus based on the traveler’s preferences and past trips. Then, it uses function calling to interact with the hotel’s booking system to modify the reservation and confirm the change with the user.

As we see how function calling enhances systems like RAG, it’s important to recognize the practical challenges and benefits when deploying such technologies in real-world applications.

Challenges and Benefits of Function Calling in Real-World Use Cases

While the potential of function calling is immense, it comes with its own set of challenges. One of the primary concerns is ensuring the security and privacy of the interactions. When an LLM issues function calls, it may require access to sensitive data, such as user accounts or financial information. Ensuring that these transactions are secure and comply with data privacy regulations is crucial.

Additionally, the complexity of managing multiple function calls in a single workflow can lead to latency issues. For instance, if an LLM is issuing several function calls to different systems, the response time may increase, potentially affecting user experience. This is particularly important in real-time applications where speed is critical.
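One common way to limit this latency, when the requested calls do not depend on each other, is to run them concurrently. The sketch below uses Python's standard `concurrent.futures`. The two handlers and their simulated delays are hypothetical, and `run_calls_concurrently` is an illustrative helper rather than part of any SDK.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def check_inventory(sku: str) -> dict:
    time.sleep(0.05)  # simulated network latency
    return {"sku": sku, "in_stock": True}


def track_order(order_id: str) -> dict:
    time.sleep(0.05)  # simulated network latency
    return {"order_id": order_id, "status": "shipped"}


HANDLERS = {"check_inventory": check_inventory, "track_order": track_order}


def run_calls_concurrently(calls: list) -> list:
    """Run independent (function_name, kwargs) calls in threads.

    Results come back in the same order as `calls`.
    """
    with ThreadPoolExecutor(max_workers=len(calls) or 1) as pool:
        futures = [pool.submit(HANDLERS[name], **kwargs) for name, kwargs in calls]
        return [future.result() for future in futures]


results = run_calls_concurrently(
    [
        ("check_inventory", {"sku": "laptop-15"}),
        ("track_order", {"order_id": "12345"}),
    ]
)
print(results)
```

Calls that need the output of an earlier call still have to run in sequence, so this only helps with the independent part of a workflow.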

On the positive side, function calling offers several benefits, including enhanced task automation, more accurate real-time responses, and improved user interaction. In industries such as healthcare, finance, and customer service, function calling can streamline operations by automating routine tasks. For example, in healthcare, an LLM could pull patient records, schedule appointments, and send reminders via function calls, freeing up time for medical professionals to focus on more complex cases.

Beyond the practical benefits and challenges, there are ethical and technical considerations that must not be overlooked, particularly around data security and system reliability.

Ethical and Technical Considerations

From a technical standpoint, we as developers need to carefully manage how function calls are implemented to avoid potential pitfalls. One common issue is over-reliance on external systems. If an LLM is constantly issuing function calls to third-party services, the system's reliability becomes dependent on the uptime and performance of those services. Proper error handling and fallback mechanisms must be in place to ensure that the system continues to function smoothly, even when external services are unavailable.
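As a minimal sketch of such error handling, the helper below retries a failing call with exponential backoff and returns a fallback value when the service stays down. `ServiceUnavailable`, `call_with_fallback`, and `make_flaky` are hypothetical names, and the retry counts and delays are arbitrary choices for the example.

```python
import time


class ServiceUnavailable(Exception):
    """Raised by a (hypothetical) client when the external service is down."""


def call_with_fallback(func, *, retries: int = 3, base_delay: float = 0.01, fallback=None) -> dict:
    """Try `func` up to `retries` times, then report failure with a fallback value."""
    last_error = None
    for attempt in range(retries):
        try:
            return {"ok": True, "data": func()}
        except ServiceUnavailable as error:
            last_error = error
            if attempt < retries - 1:
                time.sleep(base_delay * (2 ** attempt))  # exponential backoff
    return {"ok": False, "data": fallback, "error": str(last_error)}


def make_flaky(failures: int):
    """Build a fake service that fails `failures` times before succeeding."""
    state = {"remaining": failures}

    def service() -> dict:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ServiceUnavailable("temporary outage")
        return {"rate": 7.0}

    return service


recovered = call_with_fallback(make_flaky(2))
degraded = call_with_fallback(make_flaky(5), fallback={"rate": None})
print(recovered)
print(degraded)
```

Passing the `ok` flag back to the model in the function response lets it tell the user that live data was unavailable instead of presenting a fallback as fact.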

Ethically, function calling raises concerns about transparency and user consent. If an LLM is making decisions or taking actions on behalf of a user, it’s essential that the user is fully aware of what the system is doing. For instance, in financial services, if an LLM is executing trades or transferring funds, users should be informed about the actions being taken and should have the ability to intervene if necessary. Clear documentation and user consent mechanisms are critical to maintaining trust in such systems.
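One way to give users that ability to intervene is a confirmation gate in front of any function with side effects. The sketch below is an assumption about how an application might structure this. The function names, the `SIDE_EFFECT_FUNCTIONS` set, and the `confirm` callback are hypothetical.

```python
# Functions that change something in the outside world need explicit consent.
SIDE_EFFECT_FUNCTIONS = {"transfer_funds"}


def transfer_funds(amount: float, to_account: str) -> dict:
    """Hypothetical action with side effects."""
    return {"status": "completed", "amount": amount, "to_account": to_account}


def get_balance(account: str) -> dict:
    """Hypothetical read-only lookup."""
    return {"account": account, "balance": 2500.0}


HANDLERS = {"transfer_funds": transfer_funds, "get_balance": get_balance}


def execute_with_consent(name: str, args: dict, confirm) -> dict:
    """Run a model-requested function, asking the user first when it has side effects.

    `confirm` is a callable (name, args) -> bool supplied by the application,
    for example a dialog in the user interface.
    """
    if name in SIDE_EFFECT_FUNCTIONS and not confirm(name, args):
        return {"status": "cancelled", "reason": "user declined"}
    return HANDLERS[name](**args)


declined = execute_with_consent(
    "transfer_funds", {"amount": 100.0, "to_account": "DE00"}, lambda n, a: False
)
approved = execute_with_consent(
    "transfer_funds", {"amount": 100.0, "to_account": "DE00"}, lambda n, a: True
)
read_only = execute_with_consent("get_balance", {"account": "main"}, lambda n, a: False)
print(declined, approved, read_only)
```

Read-only lookups skip the prompt, so the gate adds friction only where an action cannot easily be undone.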

Another ethical concern revolves around data usage. When function calls access personal or sensitive data, stringent measures must be in place to protect user privacy. Developers need to ensure that data is handled responsibly and that function calls are compliant with privacy regulations such as GDPR or HIPAA.
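One small, concrete measure is to redact sensitive fields from a function response before it is sent back to the model. The sketch below shows the idea with a hypothetical `redact` helper and an illustrative key list. It is not sufficient for regulatory compliance on its own, and the field names are assumptions.

```python
# Illustrative list; a real application would derive this from its data policy.
SENSITIVE_KEYS = {"ssn", "date_of_birth", "card_number"}


def redact(value, sensitive_keys=SENSITIVE_KEYS):
    """Return a copy of `value` with sensitive dictionary fields masked.

    Walks nested dictionaries and lists; other values are returned unchanged.
    """
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if key in sensitive_keys else redact(item, sensitive_keys)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item, sensitive_keys) for item in value]
    return value


record = {
    "patient": {"name": "Patient X", "ssn": "000-00-0000"},
    "results": [{"test": "blood panel", "date_of_birth": "1980-01-01"}],
}
safe_record = redact(record)
print(safe_record)
```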

Conclusion

Nikolai did a fantastic job of shedding light on how function calling enhances large language models, enabling them to perform real-world tasks by interacting with external systems and data. Whether it’s retrieving currency exchange rates or powering more complex interactions through Retrieval-Augmented Generation (RAG), function calling expands the horizons of what LLMs can achieve.

Further Reading

- Understanding Advanced Function Calling in LLMs

- Example: Using Function Calling for Currency Exchange Rate Retrieval

- Combining Function Calling With Retrieval-Augmented Generation (RAG) for Enhanced Interactivity

- Use Cases of Combining RAG and Function Calling

- Challenges and Benefits of Function Calling in Real-World Use Cases

- Ethical and Technical Considerations

- Conclusion

- Further Reading

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

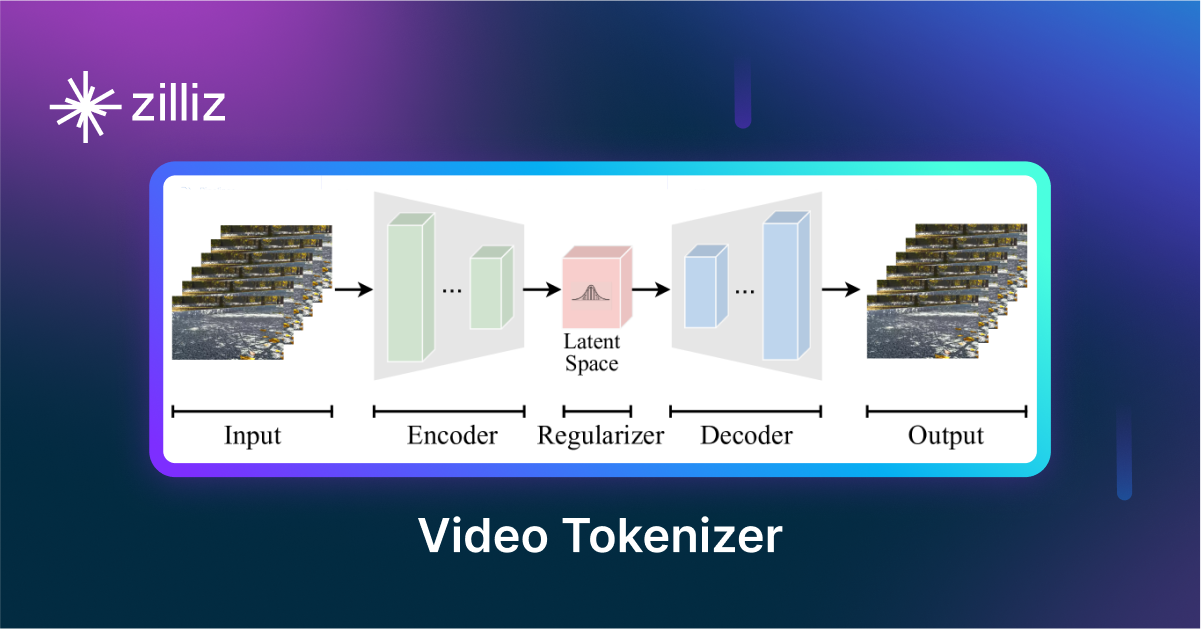

VidTok: Rethinking Video Processing with Compact Tokenization

VidTok tokenizes videos to reduce redundancy while preserving spatial and temporal details for efficient processing.

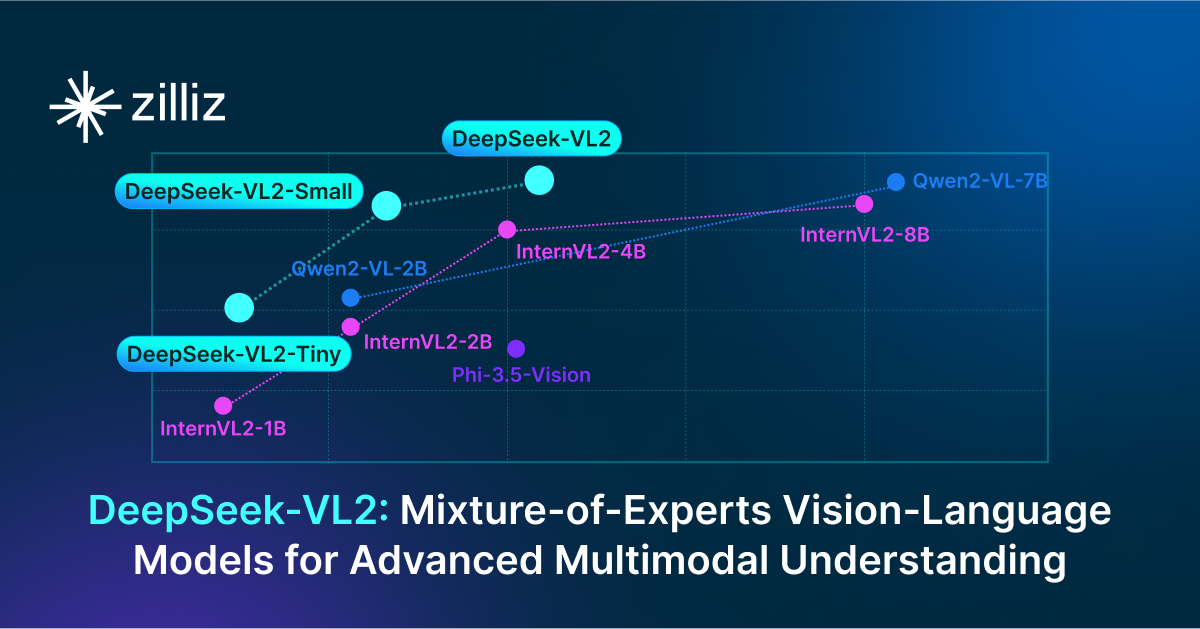

DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding

Explore DeepSeek-VL2, the open-source MoE vision-language model. Discover its architecture, efficient training pipeline, and top-tier performance.

How AI and Vector Databases Are Transforming the Consumer and Retail Sector

AI and vector databases are transforming retail, enhancing personalization, search, customer service, and operations. Discover how Zilliz Cloud helps drive growth and innovation.