Elevating User Experience with Image-based Fashion Recommendations

Fashion retail is a rapidly evolving industry with constantly changing consumer preferences and shopping behaviors. To stay competitive, brands must offer personalized shopping experiences catering to individual tastes.

In a recent talk, Joan Kusuma shared her approach to enhancing the fashion retail experience using image-based recommendations. Drawing on her background in fashion retail and AI, Joan demonstrated how convolutional neural networks (CNNs) and visual embeddings can be used to create a personalized outfit recommendation system.

This article explores the concepts and architecture behind her system, highlighting how AI can transform the fashion industry. We'll begin by explaining visual embeddings, which underpin everything that follows. Next, we'll detail how Joan generated image embeddings and stored them in a vector database like Milvus. Finally, we'll outline the step-by-step process of how the model generates recommendations.

Understanding Visual Embeddings and Their Role in a Recommender

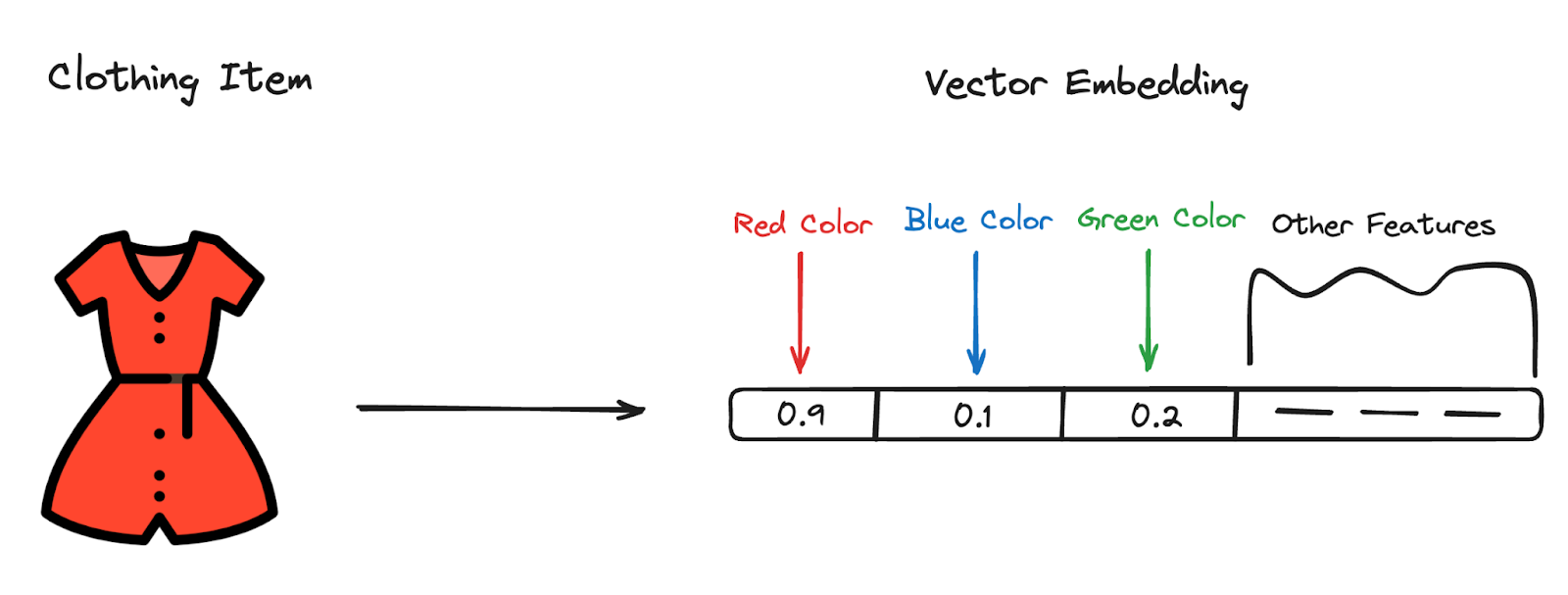

Visual embeddings are a fundamental concept in image-based recommendation systems. These embeddings are vector (numerical) representations of images that capture the essential features and characteristics of the items depicted.

By converting images into embeddings, AI models can analyze and compare their similarity efficiently. In the illustrated example, the encoder assigns different values to various clothing item features, such as the three color channels (red, green, blue) and various shape characteristics, depending on the specific attributes of the clothing.
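To make "comparing similarity" concrete, here is a minimal sketch using cosine similarity, one common way to compare embedding vectors. The four-dimensional vectors and item names are made up for illustration; real embeddings have many more dimensions, and the talk summary doesn't state which similarity metric Joan used.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical embeddings, invented for this example.
red_dress = np.array([0.9, 0.1, 0.1, 0.8])
crimson_dress = np.array([0.8, 0.2, 0.1, 0.7])
blue_jeans = np.array([0.1, 0.2, 0.9, 0.1])

print(cosine_similarity(red_dress, crimson_dress))  # close to 1: similar items
print(cosine_similarity(red_dress, blue_jeans))     # much lower: dissimilar items
```

Items whose embeddings point in nearly the same direction score close to 1, which is what lets a recommender treat "nearby vectors" as "visually similar clothes".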

In her presentation, Joan used a PyTorch autoencoder model to generate visual embeddings for clothing items. Additionally, she chose YOLOv5 (You Only Look Once), a convolutional neural network, for real-time object detection. YOLOv5 processes an entire image in a single pass, which lets it identify and classify objects quickly. Once generated, the embeddings are stored in a vector database like Milvus and used for similarity retrieval.
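As a rough illustration of how an autoencoder yields embeddings, here is a minimal PyTorch sketch. The class name `ConvAutoencoder`, the layer sizes, the 64x64 input resolution, and the 64-dimensional embedding are all assumptions made for this example; the talk summary doesn't describe Joan's actual architecture.

```python
import torch
from torch import nn

class ConvAutoencoder(nn.Module):
    """Toy convolutional autoencoder: the encoder output serves as the embedding."""

    def __init__(self, embedding_dim: int = 64):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),   # 64x64 -> 32x32
            nn.ReLU(),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),  # 32x32 -> 16x16
            nn.ReLU(),
            nn.Flatten(),
            nn.Linear(32 * 16 * 16, embedding_dim),
        )
        self.decoder = nn.Sequential(
            nn.Linear(embedding_dim, 32 * 16 * 16),
            nn.ReLU(),
            nn.Unflatten(1, (32, 16, 16)),
            nn.ConvTranspose2d(32, 16, kernel_size=3, stride=2,
                               padding=1, output_padding=1),        # 16x16 -> 32x32
            nn.ReLU(),
            nn.ConvTranspose2d(16, 3, kernel_size=3, stride=2,
                               padding=1, output_padding=1),        # 32x32 -> 64x64
            nn.Sigmoid(),
        )

    def forward(self, x):
        embedding = self.encoder(x)
        return self.decoder(embedding), embedding

model = ConvAutoencoder()
images = torch.rand(8, 3, 64, 64)  # stand-in batch of RGB crops with values in [0, 1]
reconstruction, embeddings = model(images)

# Training would minimize this reconstruction error; the embeddings are a by-product.
loss = nn.functional.mse_loss(reconstruction, images)
print(embeddings.shape, loss.item())
```

The design idea is that the network must squeeze each image through the narrow embedding layer and still reconstruct it, so the embedding ends up encoding the features that matter most for appearance.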

How Recommenders Work with Embeddings

To understand how visual embeddings are utilized in recommender systems, let’s break down the process into several key steps:

Image Preprocessing: The first step involves preprocessing the images to ensure consistency in size, resolution, and format. This preprocessing step is essential for maintaining the accuracy of the embeddings.

Image Embedding (Feature Extraction): Using the PyTorch autoencoder model, the system extracts features from the preprocessed images. These features are then converted into embeddings and stored in a vector database.

Vector Search with Vector Databases: Vector databases are a crucial part of the system. They store the embeddings of all clothing items, allowing for quick and efficient retrieval of similar items. Joan experimented with FAISS, an open-source vector search library developed by Facebook (now Meta).

Indexing: Constructing an index of the embeddings involves organizing the vectors to allow quick and efficient similarity searches. This index helps navigate the high-dimensional space to find items visually similar to a given query image. Joan's system searches the index using Approximate Nearest Neighbor (ANN) search.
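The preprocessing step in the list above can be sketched as follows. This is a minimal NumPy-only version, assuming a center crop, nearest-neighbor resizing, and a 64x64 target size; the function name `preprocess` and these choices are illustrative, not the pipeline from the talk.

```python
import numpy as np

def preprocess(image: np.ndarray, size: int = 64) -> np.ndarray:
    """Center-crop to a square, resize by nearest-neighbor sampling, scale to [0, 1].

    `image` is an (H, W, 3) uint8 array; the result is a (3, size, size) float32 array.
    """
    h, w, _ = image.shape
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    square = image[top:top + side, left:left + side]

    # Nearest-neighbor resize: pick `size` evenly spaced rows and columns.
    idx = np.arange(size) * side // size
    resized = square[idx][:, idx]

    scaled = resized.astype(np.float32) / 255.0
    return np.transpose(scaled, (2, 0, 1))  # channels-first layout, as PyTorch expects

# Random pixels stand in for a 640x480 product photo.
photo = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
tensor_ready = preprocess(photo)
print(tensor_ready.shape, tensor_ready.dtype)
```

Whatever the exact choices, the point is that every image reaches the encoder with the same shape and value range, so differences between embeddings reflect the clothing rather than the photo format.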

Image Recommendation Model In Action

Below is an illustration of the architecture of the visual search and recommender system Joan presented.

The system comprises several key steps. Let's cover them one by one to understand the full picture.

Step 1: User Inputs a Clothing Image

The process begins when a user uploads or selects an image of a clothing item they are interested in. This image serves as the query for the recommendation system, initiating the search for visually similar items.

Step 2: Image Embedding (Feature Extraction)

The uploaded image is converted into a visual embedding, a numerical representation of its essential features. This embedding process ensures that we can compare the query image with the pre-stored embeddings in the vector database, making it easy to search for similar items.

Step 3: Similarity Search

The generated embedding of the query image is used to perform a similarity search within the vector database. Joan employs an Approximate Nearest Neighbor (ANN) search algorithm to efficiently find the embeddings in the database that are closest to the query embedding. This algorithm quickly identifies the most similar images without exhaustive searching.

Step 4: Recommendation Engine

The recommendation engine is the final component of the system. It filters and ranks the search results based on additional criteria such as user preferences, seasonal trends, and inventory availability. This step ensures that the recommendations are not only visually similar to the query image but also relevant and personalized, providing a tailored shopping experience for the user.
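A filter-and-rerank step of this kind might look like the sketch below. The `Candidate` fields, the fixed preference boost, and the scoring rule are hypothetical choices for illustration; the talk summary doesn't specify how the engine weighs these criteria.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    item_id: str
    similarity: float  # score from the vector search; higher means more similar
    in_stock: bool
    category: str

def rerank(candidates, preferred_categories, boost=0.1, top_n=3):
    """Drop out-of-stock items, then sort by similarity plus a preference boost."""
    available = [c for c in candidates if c.in_stock]

    def score(c):
        return c.similarity + (boost if c.category in preferred_categories else 0.0)

    return sorted(available, key=score, reverse=True)[:top_n]

# Hypothetical search results for one query image.
results = [
    Candidate("dress-01", 0.95, False, "dresses"),
    Candidate("dress-02", 0.90, True, "dresses"),
    Candidate("skirt-07", 0.85, True, "skirts"),
    Candidate("top-11", 0.80, True, "tops"),
]
ranked = rerank(results, preferred_categories={"skirts"})
print([c.item_id for c in ranked])  # ['skirt-07', 'dress-02', 'top-11']
```

Here the most similar item is dropped because it is out of stock, and the skirt overtakes the remaining dress because it matches the user's preferred category.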

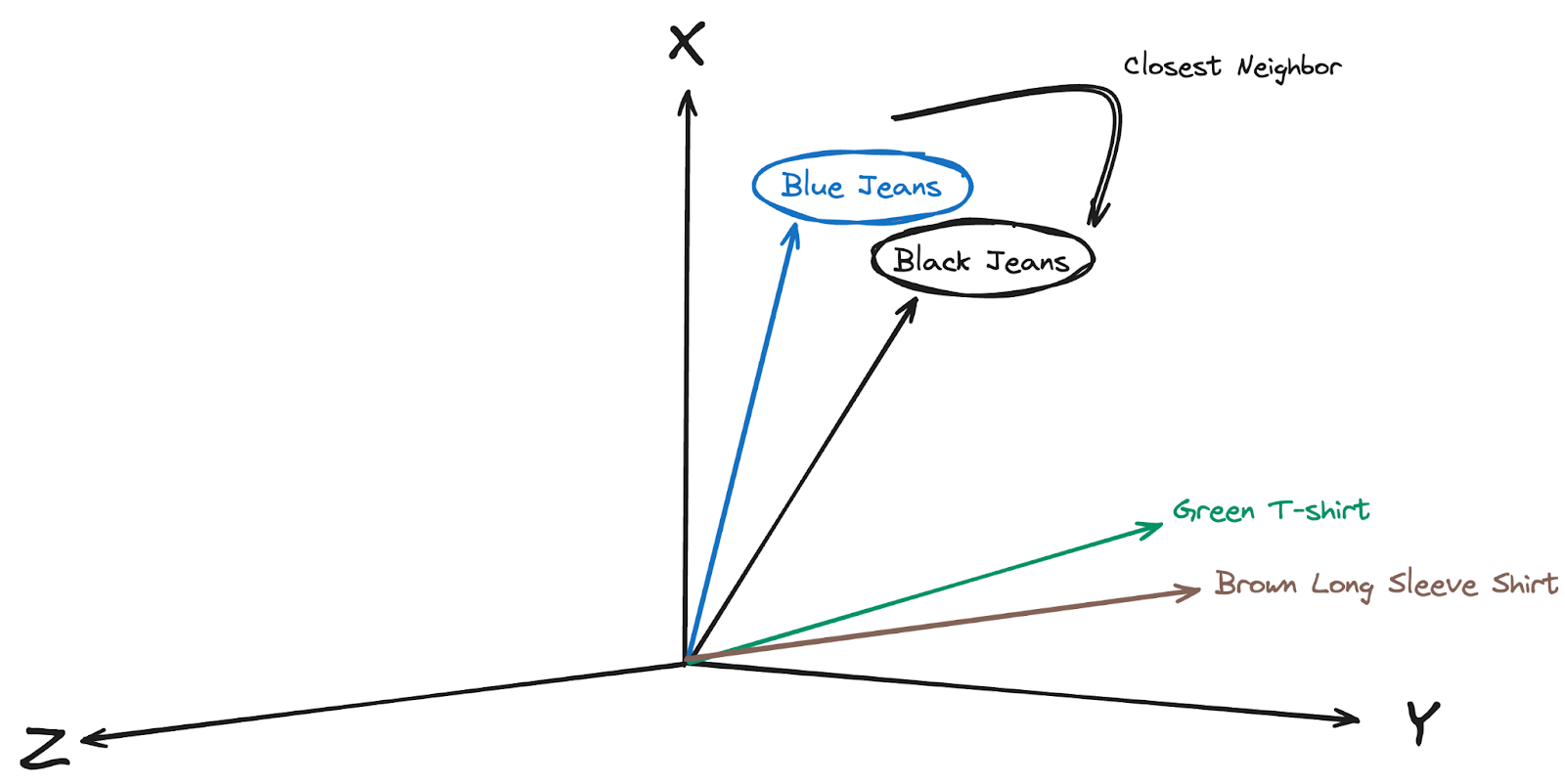

Approximate Nearest Neighbor Search

A key aspect of Joan's recommendation system is using an approximate nearest neighbor (ANN) search within the vector database to return relevant results quickly and accurately. ANN search algorithms find points in a high-dimensional space that are closest to a given query point.

Unlike exact nearest neighbor searches, which can be computationally expensive, ANN searches balance speed and accuracy, making them ideal for large-scale datasets.
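For reference, an exact nearest neighbor search can be sketched in a few lines of NumPy. The catalog here is random data standing in for real embeddings, and `exact_top_k` is a name chosen for this example. The cost is the point: every query computes a distance to every stored vector, which is what ANN methods try to avoid.

```python
import numpy as np

def exact_top_k(query: np.ndarray, vectors: np.ndarray, k: int = 5):
    """Return (indices, distances) of the k stored vectors closest to `query` (L2)."""
    distances = np.linalg.norm(vectors - query, axis=1)  # one distance per stored vector
    nearest = np.argsort(distances)[:k]
    return nearest, distances[nearest]

rng = np.random.default_rng(42)
catalog = rng.normal(size=(10_000, 64)).astype(np.float32)  # stand-in embeddings

# A query that is a slightly perturbed copy of item 123.
query = catalog[123] + 0.01 * rng.normal(size=64).astype(np.float32)

ids, dists = exact_top_k(query, catalog, k=3)
print(ids, dists)
```

The work grows linearly with the catalog size, so a brute-force scan that is fine for thousands of items becomes a bottleneck at millions.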

In the context of image-based fashion recommendations, ANN search is used to identify clothing items with embeddings similar to the query image's embedding.

This process involves several steps:

Index Construction: The first step is constructing an index of the embeddings stored in the vector database. This index allows the system to efficiently navigate the high-dimensional space and locate similar items.

Query Processing: When a user uploads an image, the system generates its embedding and uses the ANN search algorithm to find embeddings in the index closest to the query embedding.

Result Ranking: The search results are then ranked based on similarity scores. Additional filtering and ranking criteria, such as user preferences and inventory availability, can be applied to further refine the recommendations.
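The three steps above can be sketched with a toy inverted-file (IVF) index, one common family of ANN indexes that libraries such as FAISS and Milvus offer. This is an illustrative NumPy implementation, not the index used in the talk (the summary doesn't name one); `TinyIVFIndex`, `n_cells`, and `n_probe` are names chosen for this example.

```python
import numpy as np

class TinyIVFIndex:
    """Toy inverted-file index: cluster the vectors, then search only nearby clusters."""

    def __init__(self, vectors: np.ndarray, n_cells: int = 16, n_iters: int = 10, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.vectors = vectors
        # Index construction: a few rounds of k-means to place the cell centroids.
        self.centroids = vectors[rng.choice(len(vectors), n_cells, replace=False)].copy()
        for _ in range(n_iters):
            assignment = self._nearest_cell(vectors)
            for cell in range(n_cells):
                members = vectors[assignment == cell]
                if len(members):
                    self.centroids[cell] = members.mean(axis=0)
        assignment = self._nearest_cell(vectors)
        self.cells = [np.flatnonzero(assignment == cell) for cell in range(n_cells)]

    def _nearest_cell(self, points: np.ndarray) -> np.ndarray:
        dists = np.linalg.norm(points[:, None, :] - self.centroids[None, :, :], axis=2)
        return dists.argmin(axis=1)

    def search(self, query: np.ndarray, k: int = 5, n_probe: int = 4) -> np.ndarray:
        # Query processing: visit only the n_probe cells whose centroids are closest.
        cell_order = np.argsort(np.linalg.norm(self.centroids - query, axis=1))[:n_probe]
        candidates = np.concatenate([self.cells[c] for c in cell_order])
        # Result ranking: exact distances, but only over the candidate subset.
        dists = np.linalg.norm(self.vectors[candidates] - query, axis=1)
        return candidates[np.argsort(dists)[:k]]

rng = np.random.default_rng(1)
catalog = rng.normal(size=(2000, 32))  # stand-in embeddings
index = TinyIVFIndex(catalog)

query = catalog[7] + 0.001 * rng.normal(size=32)  # near-duplicate of item 7
print(index.search(query, k=3))
```

With `n_probe=4` of 16 cells, each query scans roughly a quarter of the catalog. Raising `n_probe` improves recall at the cost of speed, which is the speed/accuracy trade-off that makes the search approximate.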

Web Demo App

Joan developed a fully functional web demo app to showcase her final product and its image-based recommendation capability. The app allows users to upload images of clothing items and receive personalized recommendations in real time.

The app features an intuitive interface with just two steps, making it easy for users to upload images and view recommendations. It processes each upload in real time, so recommendations appear as soon as the image is submitted.

Step 1: Upload Clothing Image

Step 2: Model Returns Similarly Styled Clothing Items

Conclusion

Joan Kusuma's work demonstrates the potential of AI in transforming fashion retail. She developed a recommender that delivers personalized outfit suggestions using visual embeddings and vector databases. By combining a PyTorch autoencoder for feature extraction with YOLOv5 for real-time object detection, and by relying on approximate nearest neighbor search in a vector database, her system returns relevant recommendations quickly.

Joan's web demo app showcases these capabilities and provides a user-friendly platform for real-time, tailored fashion advice. This approach enhances the customer shopping experience and shows how AI-driven personalization can support growth for fashion retailers.
