Streamlining Data Processing with Zilliz Cloud Pipelines: A Deep Dive into Document Chunking

Document chunking is a core step in transforming unstructured data into a searchable vector collection, and this article examines how Zilliz Cloud Pipelines handle it. Zilliz Cloud offers three pipeline types (ingestion, search, and deletion), each serving a different purpose in the data processing workflow.

Zilliz Cloud Pipelines simplify document chunking and make the resulting chunks searchable. The platform supports semantic search over text documents and provides a critical building block for Retrieval-Augmented Generation (RAG) applications.

Zilliz Cloud Pipelines include functions such as SEARCH_DOC_CHUNK, which converts the query text into a vector embedding and then retrieves the top-K relevant document chunks, making it easier to find related information based on the query’s meaning.

Zilliz Cloud Pipelines: Transforming Data Processing

The engineers at Zilliz designed Zilliz Cloud Pipelines to transform unstructured data from various sources into a searchable vector collection for busy Gen AI developers. A pipeline takes unstructured data, splits it, converts it to embeddings, indexes it, and stores it in Zilliz Cloud with the designated metadata. The architecture relies on the technological components and capabilities described in the following sections.

Technological Foundation

Milvus Vector Database

Milvus is a vector database designed to handle high-dimensional vector data efficiently. Zilliz Cloud is a managed cloud service built on Milvus that lets developers focus on their core applications rather than on managing database operations.

Connectors

Connectors are a built-in tool for linking various data sources to a pipeline. They enable real-time data ingestion and indexing so that the freshest content is available to search queries. Connectors scale with fluctuating traffic loads without requiring DevOps work, and they automatically sync document additions and deletions to the collection. Detailed logging provides observability into the dataflow, making it possible to detect anomalies as they arise.

Document Splitters

Splitters are commonly used in document processing and text analysis tasks to divide a document or text into smaller chunks or segments based on specified characters or patterns. These chunks can then be processed or analyzed separately. For example, in Gen AI applications, splitters may be used to split a long text into individual sentences or paragraphs before converting them into vector embeddings. These embeddings are then used to find semantically similar chunks.
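To make the idea concrete, here is a minimal sketch of a separator-based splitter. It is not the Zilliz implementation: the function names are hypothetical, and a whitespace word count stands in for a real tokenizer.

```python
def count_tokens(text: str) -> int:
    # Assumption: whitespace-delimited words approximate tokens.
    return len(text.split())


def split_text(text: str, separators: list[str], max_tokens: int) -> list[str]:
    """Split on the first separator, recursing with finer separators for oversized parts."""
    text = text.strip()
    if not text:
        return []
    # Small enough, or no separators left to try (the chunk may then exceed the limit).
    if count_tokens(text) <= max_tokens or not separators:
        return [text]
    first, rest = separators[0], separators[1:]
    pieces = []
    for part in text.split(first):
        pieces.extend(split_text(part, rest, max_tokens))
    # Greedily merge neighbouring pieces while the merged chunk stays within the limit.
    # Merged pieces are joined with a space, so the original separator is not preserved.
    chunks = []
    for piece in pieces:
        if chunks and count_tokens(chunks[-1]) + count_tokens(piece) <= max_tokens:
            chunks[-1] = chunks[-1] + " " + piece
        else:
            chunks.append(piece)
    return chunks


doc = "Alpha beta gamma.\n\nDelta epsilon zeta eta theta.\n\nIota."
chunks = split_text(doc, ["\n\n", "\n", " "], max_tokens=4)
```

Ordering the separators from coarse (paragraph breaks) to fine (spaces) keeps paragraphs intact where possible and only falls back to word-level splitting for paragraphs that exceed the limit.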

Machine Learning Models

Machine learning models are used to create vector embeddings. These embeddings capture semantic relationships and contextual information, enabling algorithms to understand and process language more effectively. Open source, commercial, and private machine learning models can be installed on-prem or in your cloud environment, or accessed via an API, to generate vector embeddings.

Rerankers

In information retrieval systems, a reranker refines the initial search results to improve their relevance to the query. Unlike just vector approximate nearest neighbor (ANN) search for retrieval, adding a reranker can boost search quality by better assessing the semantic relationship between documents and the query. For retrieval-augmented generation (RAG) applications, rerankers can enhance the accuracy of generated answers by providing a smaller but higher-quality set of context documents. However, rerankers are computationally expensive, leading to increased costs and longer query latency times than ANN retrieval alone. The decision to integrate a reranker should balance the need for improved relevance against performance and cost constraints.
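The second-stage idea can be sketched in a few lines. This is only an illustration under stated assumptions: `toy_relevance` is a hypothetical word-overlap scorer standing in for a real reranker model, and the candidate list is made-up data representing what a first-stage ANN search might return.

```python
def toy_relevance(query: str, chunk: str) -> float:
    """Jaccard word overlap; a hypothetical stand-in for a real reranker model."""
    q_words = set(query.lower().split())
    c_words = set(chunk.lower().split())
    union = q_words | c_words
    return len(q_words & c_words) / len(union) if union else 0.0


def rerank(query: str, candidates: list[str], top_n: int) -> list[str]:
    """Re-score first-stage candidates against the query and keep the best top_n."""
    ranked = sorted(candidates, key=lambda chunk: toy_relevance(query, chunk), reverse=True)
    return ranked[:top_n]


# Candidates as they might come back from a first-stage ANN search (made-up data).
candidates = [
    "milvus stores vectors",
    "how to reset a password",
    "password reset guide",
]
best = rerank("reset password", candidates, top_n=2)
```

The reranker scores every query and candidate pair individually, which is why its cost grows with the number of candidates passed in from the first stage.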

Capabilities

Ingestion Pipeline Creation

Users can create pipelines either through the Zilliz Cloud console or via the more customizable RESTful APIs, depending on whether they prioritize simplicity or need advanced customization. When creating a pipeline, users can choose how to split their documents and then pick one of six embedding models: zilliz/bge-base-en-v1.5, voyageai/voyage-2, voyageai/voyage-code-2, openai/text-embedding-3-small, and openai/text-embedding-3-large for English, or zilliz/bge-base-zh-v1.5 for Chinese (the only option for that language). They can also save any pertinent metadata, which will be useful during retrieval.

Data source connection

Connectors, as integral tools in Zilliz Cloud, are designed to simplify the process of linking diverse data sources with a vector database. With the intuitive instructions provided in the user interface (UI), users can effortlessly integrate various data sources into their pipelines. This not only enhances the versatility and expansiveness of their data processing capabilities but also underscores the user-friendly nature of Zilliz Cloud.

For instance, a connector serves as a mechanism for ingesting data into Zilliz Cloud from a range of sources, including Object Storage, Kafka (soon to be available), and more. Taking the object storage connector as an example, it enables users to monitor a directory within an object storage bucket and synchronize files such as PDFs and HTML to Zilliz Cloud Pipelines. Subsequently, these files can be converted into vector representations and stored in the vector database for search. With dedicated ingestion and deletion pipelines, the files and their corresponding vector representations within Zilliz Cloud remain synchronized. The vector database collection automatically reflects any addition or removal of files in the object storage, ensuring data consistency and accuracy.

Running pipelines

Once pipelines are created, they can be executed to process unstructured data, facilitating various functionalities within the Zilliz Cloud ecosystem. For instance, upon creating an Ingestion pipeline, users can initiate its execution to transform unstructured data into searchable vector embeddings. These embeddings can then be stored in a Zilliz Cloud vector database, enhancing the accessibility and efficiency of data retrieval.

Similarly, upon creating a Search pipeline, users can run it, enabling the system to conduct a semantic search. This functionality allows users to explore and retrieve relevant information from the vector database, leveraging the power of semantic analysis to refine search results.

Additionally, upon creating a deletion pipeline, users can run it to remove all chunks associated with a specified document from a collection within the vector database. This keeps the database clean by removing unnecessary or outdated data chunks.

Estimate Pipeline cost

Pipeline cost is measured in tokens. Just as Large Language Models (LLMs) use tokens as their basic building blocks, pipelines process documents and execute search queries by parsing and embedding text as a sequence of tokens. The Estimate Pipeline Usage tool counts the tokens in a file or text string, so users can gauge the expected resource usage and cost of running a pipeline and make informed decisions about resource allocation and budgeting.
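As a rough illustration of token-based estimation, the sketch below counts words and punctuation marks as tokens. This is a crude heuristic, not the tokenizer that Zilliz Cloud or any embedding model uses, and the price argument is a hypothetical input that you would take from the current pricing page.

```python
import re


def estimate_tokens(text: str) -> int:
    # Assumption: each word and each standalone punctuation mark counts as one token.
    return len(re.findall(r"\w+|[^\w\s]", text))


def estimate_cost(tokens: int, price_per_million_tokens: float) -> float:
    # The price is supplied by the caller; no real pricing is assumed here.
    return tokens / 1_000_000 * price_per_million_tokens


sample_tokens = estimate_tokens("Hello, world!")
```

Real tokenizers often split words into sub-word pieces, so actual counts are typically higher than a word-level estimate like this one.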

Reranking search results

The initial retrieval with Approximate Nearest Neighbor (ANN) vector search alone is very efficient and often yields satisfactory results. In many situations, the secondary stage of reranking may be deemed optional. For applications with high-quality standards, employing a reranker can enhance accuracy, albeit with increased computational demands and longer search times. Typically, integrating a reranker model can add 100ms to a few seconds of latency to the search query, depending on factors like topK selection and reranker model size. When the initial retrieval yields incorrect or irrelevant documents, using a reranker effectively filters them out, thus improving the quality of the final generated response.

If a reranker is deemed necessary for your use case, you can choose it from the UI.

Advantages

Flexible Pipeline Creation: Users can create pipelines through the user-friendly Zilliz Cloud console or the more customizable RESTful APIs. This dual approach allows users to tailor the pipeline creation experience to their specific needs and preferences, whether they prioritize simplicity or require advanced customization options.

Seamless Data Source Integration: Connectors in Zilliz Cloud simplify linking diverse data sources with a vector database. With intuitive instructions in the user interface (UI), users can effortlessly integrate various data sources into their pipelines, enhancing the versatility and expansiveness of their data processing capabilities.

Cost Estimation and Optimization: Zilliz Cloud provides an Estimate Pipeline Usage tool that helps users gauge the anticipated resource utilization and associated pipeline costs. Users can make informed decisions regarding resource allocation and budgeting by counting tokens within a file or text string, ensuring optimal efficiency and cost-effectiveness in their pipeline operations.

Zilliz Cloud pipelines

Ingestion Pipelines

Ingestion pipelines process unstructured data, such as text passages and documents, into searchable vector embeddings. They contain a function, INDEX_DOC, which splits the input text document into chunks and generates a vector embedding for each chunk. The input field (doc_url) is mapped to four output fields (doc_name, chunk_id, chunk_text, and embedding), which become the scalar and vector fields of the automatically generated collection.
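The field mapping can be pictured with a small sketch. Everything here is illustrative: `toy_embed` is a hypothetical stand-in for an embedding model, chunking is a plain fixed-size word split, and deriving doc_name from the last URL segment is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ChunkRow:
    # The four output fields named in the text.
    doc_name: str
    chunk_id: int
    chunk_text: str
    embedding: list[float]


def toy_embed(text: str, dim: int = 4) -> list[float]:
    # Hypothetical stand-in for an embedding model: sums character codes into buckets.
    vec = [0.0] * dim
    for i, ch in enumerate(text):
        vec[i % dim] += ord(ch)
    return vec


def index_doc(doc_url: str, text: str, chunk_size: int) -> list[ChunkRow]:
    """Map one input document to one row per chunk."""
    doc_name = doc_url.rsplit("/", 1)[-1]  # assumption: doc_name is the file name
    words = text.split()
    rows = []
    for chunk_id, start in enumerate(range(0, len(words), chunk_size)):
        chunk_text = " ".join(words[start:start + chunk_size])
        rows.append(ChunkRow(doc_name, chunk_id, chunk_text, toy_embed(chunk_text)))
    return rows


rows = index_doc("https://example.com/docs/guide.txt", "one two three four five", chunk_size=2)
```

Each row carries both the scalar fields used for filtering and display and the vector field used for similarity search.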

Search Pipelines

Search pipelines are straightforward. They enable semantic search by converting a query string into a vector embedding and retrieving the top-K vectors along with their metadata and corresponding text. This is done by a function named SEARCH_DOC_CHUNK.
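Conceptually, the search step looks like the sketch below. It is a toy under stated assumptions: `embed` is a hypothetical bag-of-words embedding over a tiny fixed vocabulary, and the search scans every row exhaustively, whereas a vector database would use an approximate nearest neighbor index.

```python
import math

# Hypothetical vocabulary for the toy embedding.
VOCAB = ["chunk", "vector", "search", "delete", "price"]


def embed(text: str) -> list[float]:
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_chunks(query_text: str, rows: list[dict], top_k: int) -> list[dict]:
    """Embed the query, then return the top_k rows by cosine similarity."""
    query_vec = embed(query_text)
    ranked = sorted(rows, key=lambda row: cosine(query_vec, row["embedding"]), reverse=True)
    return ranked[:top_k]


texts = ["vector search finds similar items", "delete a document chunk", "price list for plans"]
rows = [{"chunk_id": i, "chunk_text": t, "embedding": embed(t)} for i, t in enumerate(texts)]
hits = search_chunks("semantic vector search", rows, top_k=1)
```

The query must be embedded with the same model as the stored chunks; otherwise the similarity scores are not comparable.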

Deletion Pipelines

Deletion pipelines remove all chunks of a specified document from a collection, which makes it easy to purge all data belonging to that document. A function named PURGE_DOC_INDEX deletes all document chunks that have a specified doc_name.
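In effect, the deletion step is a filter on doc_name. The following sketch shows that behavior on an in-memory list of rows; the function name and row shape are hypothetical and only mirror the fields described earlier.

```python
def purge_doc(rows: list[dict], doc_name: str) -> tuple[list[dict], int]:
    """Drop every chunk row whose doc_name matches; return the kept rows and the delete count."""
    kept = [row for row in rows if row["doc_name"] != doc_name]
    return kept, len(rows) - len(kept)


rows = [
    {"doc_name": "guide.txt", "chunk_id": 0},
    {"doc_name": "guide.txt", "chunk_id": 1},
    {"doc_name": "faq.txt", "chunk_id": 0},
]
remaining, deleted = purge_doc(rows, "guide.txt")
```

Because every chunk stores its source doc_name, one document name is enough to remove all of its chunks without tracking individual chunk IDs.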

The Mechanics of Document Chunking

The goal of chunking is, as its name suggests, to break down information into multiple smaller pieces to store it more efficiently and meaningfully. This allows the retrieval to capture pieces of information that are more related to the question and the generation to be more precise but less costly, as only a part of a document will be included in the LLM prompt instead of the whole document. Finally, document chunking makes searchability efficient because information is retrieved based on semantic understanding rather than exact matches.

With Zilliz Cloud Pipelines, you can split documents by sentences, paragraphs, lines, or a list of customized strings. In addition, you can define the chunk size. By default, each chunk contains at most 500 tokens. The following table lists the supported models and their corresponding chunk size ranges.

| Model | Chunk Size Range |
|---|---|
| zilliz/bge-base-en-v1.5 | 20-500 tokens |
| zilliz/bge-base-zh-v1.5 | 20-500 tokens |
| voyageai/voyage-2 | 20-3,000 tokens |
| voyageai/voyage-code-2 | 20-12,000 tokens |
| voyageai/voyage-large-2 | 20-12,000 tokens |
| openai/text-embedding-3-small | 250-8,191 tokens |
| openai/text-embedding-3-large | 250-8,191 tokens |
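A client-side check against these ranges can catch an invalid configuration before a pipeline is created. The sketch below copies the ranges from the table; the function name is hypothetical and the check is not part of any Zilliz SDK.

```python
# Chunk size ranges in tokens, copied from the table above.
CHUNK_SIZE_RANGES = {
    "zilliz/bge-base-en-v1.5": (20, 500),
    "zilliz/bge-base-zh-v1.5": (20, 500),
    "voyageai/voyage-2": (20, 3000),
    "voyageai/voyage-code-2": (20, 12000),
    "voyageai/voyage-large-2": (20, 12000),
    "openai/text-embedding-3-small": (250, 8191),
    "openai/text-embedding-3-large": (250, 8191),
}


def validate_chunk_size(model: str, chunk_size: int) -> int:
    """Return chunk_size if it is allowed for the model, otherwise raise an error."""
    if model not in CHUNK_SIZE_RANGES:
        raise KeyError(f"unknown model: {model}")
    low, high = CHUNK_SIZE_RANGES[model]
    if not low <= chunk_size <= high:
        raise ValueError(f"{model} expects a chunk size between {low} and {high}, got {chunk_size}")
    return chunk_size
```

For example, `validate_chunk_size("openai/text-embedding-3-small", 100)` raises a ValueError because the lower bound for that model is 250 tokens.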

Practical Applications and Benefits of Document Chunking

Document chunking has a wide range of practical applications across domains. Breaking text data into manageable pieces offers a more streamlined approach to handling large volumes of unstructured data. The following are some real-world applications of document chunking.

Real-World Applications

Content Management

In content management, the document chunking process makes it easier to index and retrieve large documents by breaking them into small pieces. This approach makes searching efficient and allows users to find the desired information quickly.

Research

Document chunking comes in handy in the research domain for indexing academic papers, reports, and research articles. Researchers can then search for the specific segments that interest them.

Legal

Document chunking helps in the legal domain as well. It makes it easier to search for specific clauses, contracts, terms, and court decisions, which improves the accuracy and efficiency of legal research.

Medical

Medical professionals can also use document chunking to process and index patients’ medical records, clinical guidelines, and research papers. This helps them access critical medical information without delay.

Benefits

Chunking offers three key benefits in the context of Retrieval Augmented Generation (RAG) models:

- Enhanced Precision: By breaking down text into smaller, more manageable chunks, chunking enables the retrieval process to capture information specifically relevant to the query. This enhances the precision of search results and ensures that retrieved information aligns closely with the user's requirements.

- Reduced Computational Costs: Chunking minimizes computational costs by including only relevant document portions in the language model prompt. By avoiding unnecessary information, chunking helps optimize processing overhead, resulting in more efficient text retrieval and generation processes.

- Improved Coherence and Relevance: Chunking strategies such as Document Specific Chunking respect the original organization of the document, creating chunks aligned with logical sections such as paragraphs or subsections. This approach maintains text coherence and relevance, particularly for structured documents, leading to more meaningful and contextually appropriate search results.

Implementing Document Chunking with Zilliz Cloud Pipelines: A Step-by-Step Guide

Implementing document chunking with Zilliz Cloud Pipelines involves several steps. This guide covers setup, configuration, best practices, and optimization strategies. The key steps are signing up for Zilliz Cloud, creating pipelines, indexing documents, and customizing the chunking strategy.

Setup and Configuration

Sign Up or Log In

The first step is to sign up for or log in to a Zilliz Cloud account. This gives you access to the Zilliz Cloud Pipelines service.

Create Pipelines

Follow the instructions given by Zilliz to create the ingestion, search, and deletion pipelines. Make sure to note down the search pipeline ID and API key for later operations.

Web UI Method

- Navigate to Your Project
  - Go to your project in the Zilliz Cloud web UI.
  - In the navigation panel, select the “Pipelines” option.
  - Navigate to the “Overview” tab and then select “Pipelines”.
  - Click on “+Pipeline” to create a new pipeline.

- Choose Pipeline Type
  - Select the “+Pipeline” button for the pipeline type you want to create.
  - Make sure to create the ingestion pipeline before the search and deletion pipelines.

- Configure the Ingestion Pipeline
  - Select the cluster where the new collection will be created.
  - Enter a name for the new collection.
  - Provide a pipeline name that follows the format restrictions.
  - Optionally, add a description of the pipeline.

- Add Functions to the Pipeline
  - Use the INDEX_DOC function to divide the document into smaller chunks, vectorize each chunk, and save the vector embeddings.
  - Use the PRESERVE function to add scalar fields to the collection during data ingestion.

- Customize the Chunking Strategy
  - Customize the splitter to determine how the document is divided into chunks. Use characters like “.”, “\n”, or any other customized strings.

- Save Your Pipeline Configuration
  - After adding and configuring the functions, save your pipeline.
  - Click on “Create Ingestion Pipeline”.

RESTful API Method

Create an ingestion pipeline via the API using the following curl command.

    curl --request POST \
      --header "Content-Type: application/json" \
      --header "Authorization: Bearer ${YOUR_API_KEY}" \
      --url "https://controller.api.{cloud-region}.zillizcloud.com/v1/pipelines" \
      -d '{
        "projectId": "proj-xxxx",
        "name": "my_doc_ingestion_pipeline",
        "description": "Pipeline description",
        "type": "INGESTION",
        "functions": [
          {
            "name": "index_my_doc",
            "action": "INDEX_DOC",
            "inputField": "doc_url",
            "language": "ENGLISH",
            "chunkSize": 500,
            "embedding": "zilliz/bge-base-en-v1.5",
            "splitBy": ["\n\n", "\n", " "]
          },
          {
            "name": "keep_doc_info",
            "action": "PRESERVE",
            "inputField": "publish_year",
            "outputField": "publish_year",
            "fieldType": "Int16"
          }
        ],
        "clusterId": "${CLUSTER_ID}",
        "newCollectionName": "my_new_collection"
      }'
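The same request can be sketched in Python using only the standard library. The payload mirrors the curl command above field for field; the function name and the example argument values are hypothetical, and the sketch only builds the request object without sending it.

```python
import json
import urllib.request


def build_create_ingestion_request(api_key: str, cloud_region: str,
                                   project_id: str, cluster_id: str) -> urllib.request.Request:
    """Build (but do not send) the pipeline-creation request shown in the curl command."""
    payload = {
        "projectId": project_id,
        "name": "my_doc_ingestion_pipeline",
        "description": "Pipeline description",
        "type": "INGESTION",
        "functions": [
            {
                "name": "index_my_doc",
                "action": "INDEX_DOC",
                "inputField": "doc_url",
                "language": "ENGLISH",
                "chunkSize": 500,
                "embedding": "zilliz/bge-base-en-v1.5",
                "splitBy": ["\n\n", "\n", " "],
            },
            {
                "name": "keep_doc_info",
                "action": "PRESERVE",
                "inputField": "publish_year",
                "outputField": "publish_year",
                "fieldType": "Int16",
            },
        ],
        "clusterId": cluster_id,
        "newCollectionName": "my_new_collection",
    }
    return urllib.request.Request(
        url=f"https://controller.api.{cloud_region}.zillizcloud.com/v1/pipelines",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


# Placeholder values for illustration only.
req = build_create_ingestion_request("YOUR_API_KEY", "your-cloud-region", "proj-xxxx", "YOUR_CLUSTER_ID")
```

To actually send it, pass the object to `urllib.request.urlopen(req)` and parse the JSON response; the response format is not covered here.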

Managing Pipelines

- View and Manage Pipelines
  - Click on “Pipelines” to see the available pipelines along with their details and token usage.
  - Use a GET request via the API to list pipelines or view the details of a pipeline.

- Run Pipelines
  - Run the ingestion pipeline to process data and store it in vector format.
  - Run the search pipeline to perform semantic searches.
  - Use the deletion pipeline to remove unwanted document chunks from the collection.

- Estimate Pipeline Usage
  - View the token usage of your pipelines in the Zilliz Cloud web UI.

- Drop Pipeline
  - Drop a pipeline that is no longer in use via the API (DELETE request) or the web UI.

Index Documents

In the pipeline playground, enter the URL of the document in the doc_url field and click “RUN” to ingest it. This step converts the document into a searchable vector collection.

Customize Chunking Strategy

With Zilliz Cloud Pipelines, you can customize the chunking strategy. You can define the chunk size and configure the splitter that divides the document into chunks. You can also specify custom separators for sentences, lines, and paragraphs.

Choose a Model

Choose a model for generating vector embeddings based on the desired chunk size and the overall size of your document. Zilliz Cloud Pipelines support several models, each with its own chunk size range.

Best Practices

- Experiment with different chunk sizes to find the optimal balance between the retrieval quality and efficiency.

- Use the splitter feature to divide the documents into small chunks based on specific criteria such as paragraphs, custom separators, or sentences.

- Monitor your pipeline usage regularly and be aware of Zilliz Cloud Limits.

Troubleshooting Tips and Optimization Strategies

- Your document should be compatible with the INDEX_DOC function.

- For markdown or HTML files, the INDEX_DOC function first divides the document by headers and then splits larger sections further based on the specified chunk size. Adjust the chunk size accordingly.

- If the chosen model is not giving the best results, then you can also experiment with other models.
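The header-first behavior described for markdown files can be approximated as follows. This is a hypothetical illustration, not the INDEX_DOC implementation: it splits before each markdown header line and then cuts oversized sections into fixed-size word windows, with words standing in for tokens.

```python
import re


def split_markdown(text: str, chunk_size: int) -> list[str]:
    """Split before each header line, then cut long sections into chunk_size-word pieces."""
    # Zero-width split at the start of any line that begins with 1-6 '#' and a space.
    sections = re.split(r"(?m)^(?=#{1,6} )", text)
    chunks = []
    for section in sections:
        words = section.split()  # assumption: words approximate tokens
        for start in range(0, len(words), chunk_size):
            chunks.append(" ".join(words[start:start + chunk_size]))
    return chunks


md = "# Title\nintro text\n## Section\none two three four five"
md_chunks = split_markdown(md, chunk_size=4)
```

With a small chunk size, a long section is cut mid-thought (here the second section becomes two chunks), which is why the chunk size should be chosen with the typical section length in mind.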

Conclusion

Zilliz Cloud Pipelines turn unstructured data into a searchable vector collection, simplifying both data processing and semantic search. The pipelines described here work with text documents; additional pipelines for searching images, video frames, and multi-modal documents will be added in the future.

To sum up, Zilliz Cloud Pipelines streamline the document chunking process and improve searchability. With pipelines for ingestion, search, and deletion, the service is a valuable tool for developers and data engineers who work with unstructured data. Its emphasis on efficiency, scalability, and ease of use makes it well suited to exploration and integration into diverse data workflows.

If you want to try Zilliz Cloud Pipelines for yourself, try out this bootcamp titled Build RAG using Zilliz Cloud Pipelines.

- Zilliz Cloud Pipelines: Transforming Data Processing

- Technological Foundation

- Capabilities

- Advantages

- Zilliz Cloud pipelines

- The Mechanics of Document Chunking

- Practical Applications and Benefits of Document Chunking

- Implementing Document Chunking with Zilliz Cloud Pipelines: A Step-by-Step Guide

- Best Practices

- Troubleshooting Tips and Optimization Strategies

- Conclusion

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Zilliz Cloud Audit Logs Goes GA: Security, Compliance, and Transparency at Scale

Zilliz Cloud Audit Logs are GA—delivering security, compliance, and transparency at scale with real-time visibility and enterprise-ready audit trails.

Zilliz Named "Highest Performer" and "Easiest to Use" in G2's Summer 2025 Grid® Report for Vector Databases

This dual recognition shows that Zilliz solved a challenge that has long defined the database industry—delivering enterprise-grade performance without the complexity typically associated with it.

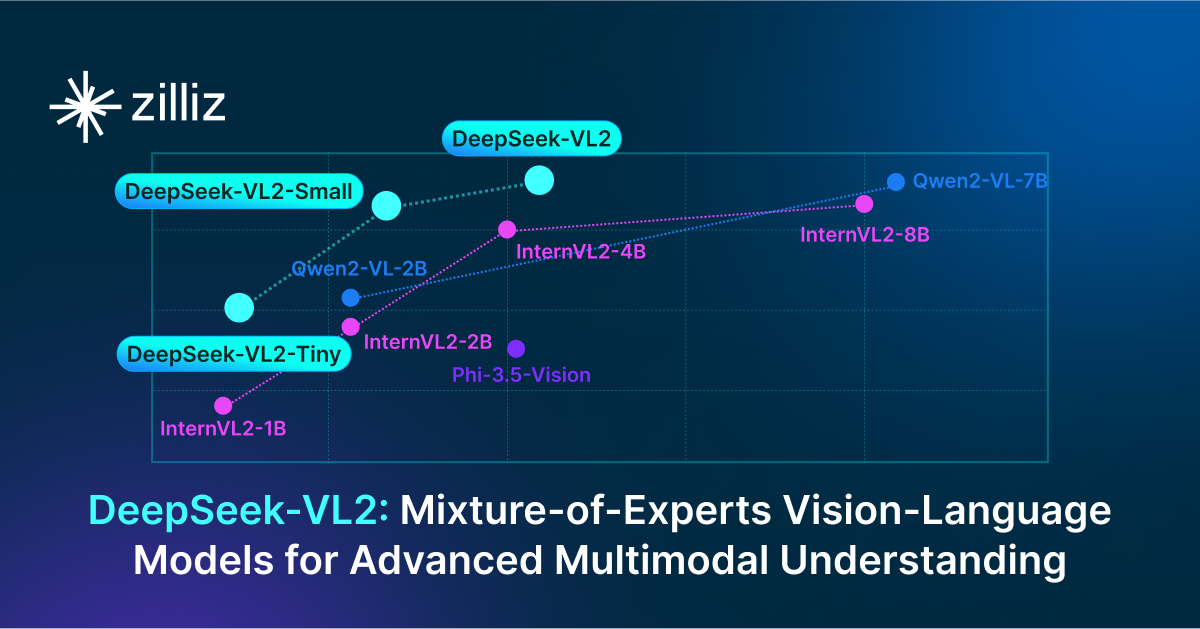

DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding

Explore DeepSeek-VL2, the open-source MoE vision-language model. Discover its architecture, efficient training pipeline, and top-tier performance.