Milvus Performance Evaluation 2023

At Zilliz, developers often ask us: "How does Milvus compare to previous versions for embedding workloads?" There are two main reasons for this. Firstly, developers starting a new project are eager to use the latest software versions to access the latest features but may be hesitant due to concerns about performance degradation. Secondly, current users may also have performance concerns and will only upgrade if the new capabilities are appealing.

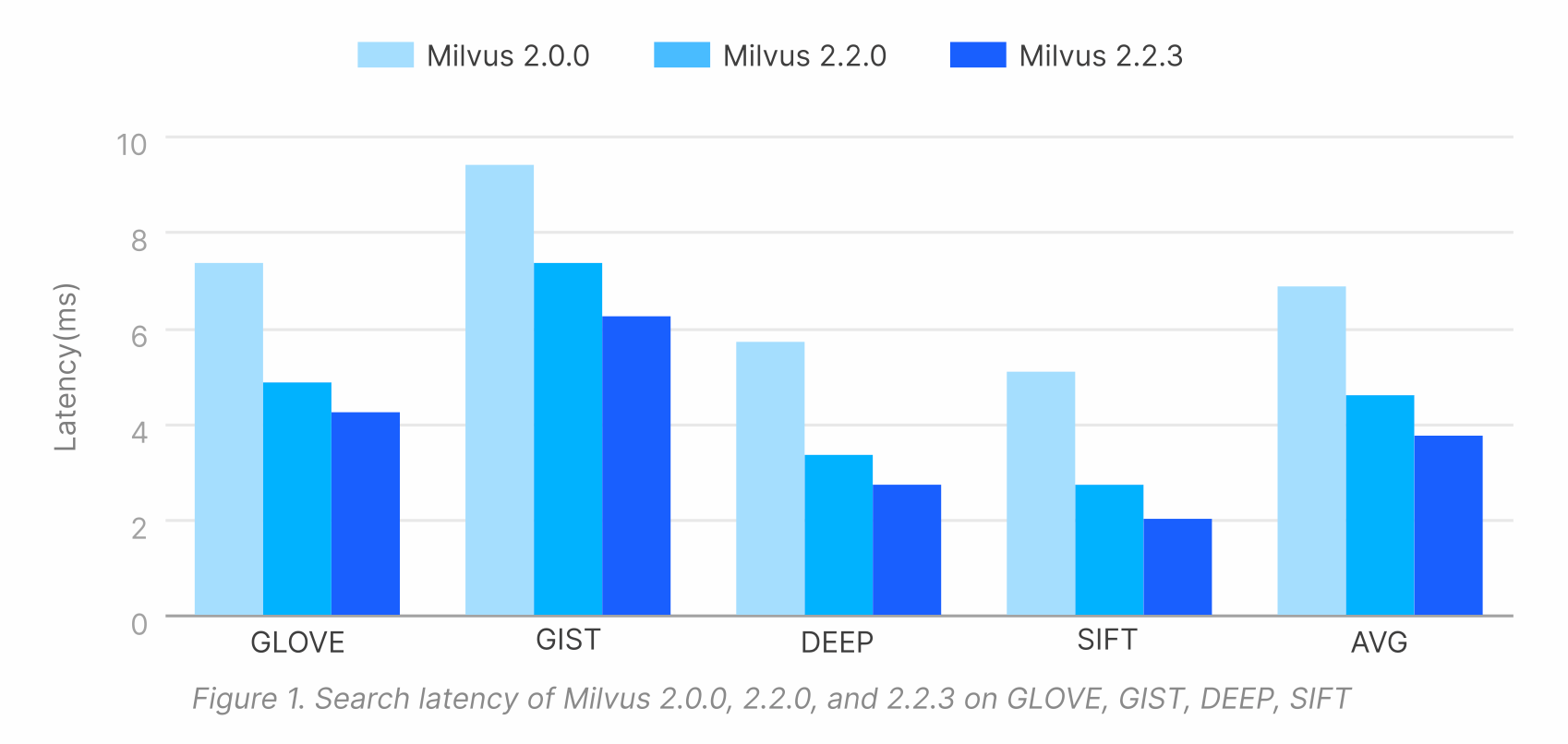

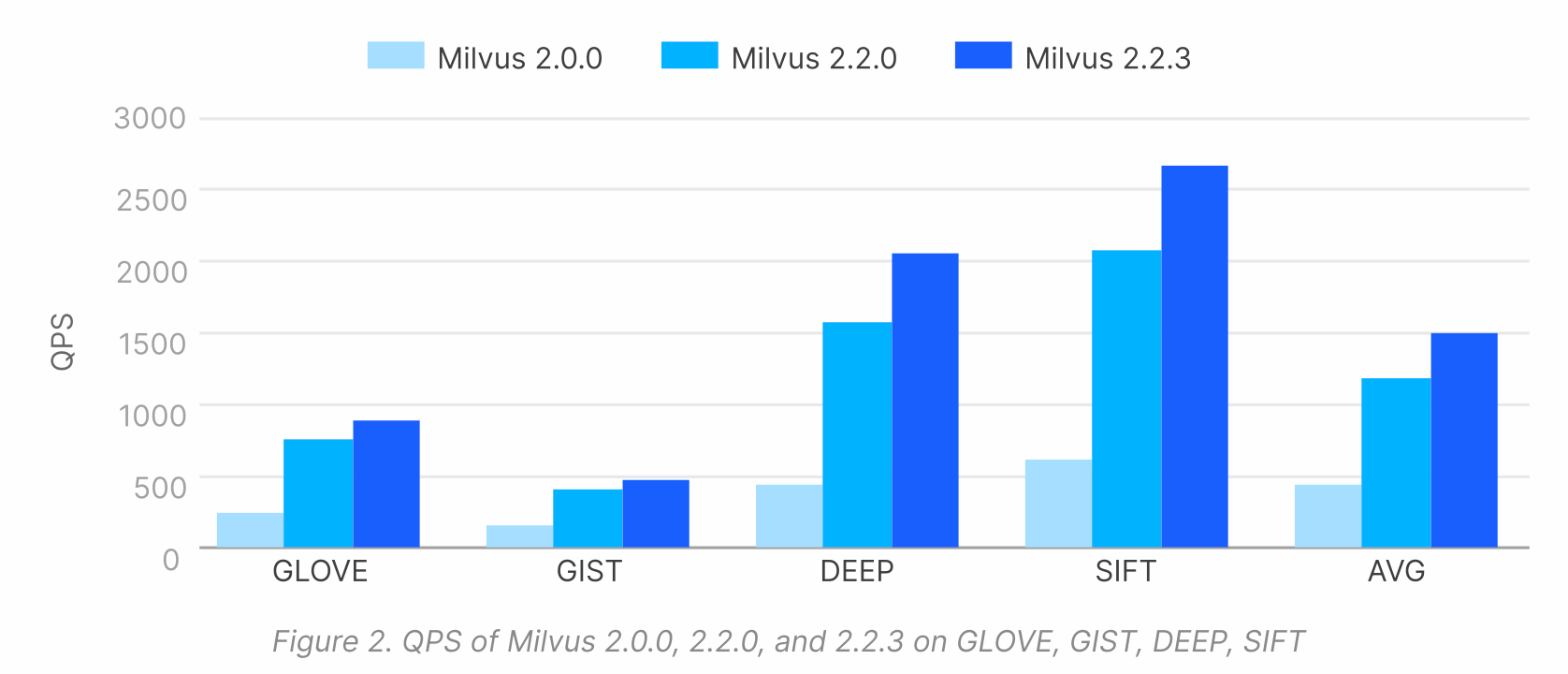

To alleviate these concerns, we would like to share the latest benchmarks comparing Milvus v2.2.3 with Milvus v2.2.0 and Milvus v2.0.0. The benchmarks measure search latency and throughput across four well-known datasets (DEEP, GIST, GloVe, and SIFT). They showed that Milvus v2.2.3 outperforms v2.2.0 and v2.0.0, significantly improving search and indexing speeds. Specifically, Milvus 2.2.3 achieved a 2.5x reduction in search latency compared to the original Milvus 2.0.0 release. In addition, our testing in an identical environment showed a 4.5x increase in QPS with Milvus 2.2.3 compared to 2.0.0.

We felt that this data would prove valuable to engineers evaluating the suitability of the latest version of Milvus for their similarity search use cases. Download the "Milvus Performance Evaluation 2023" technical paper to read the methodology and evaluation results.

Our overriding goal was to create a consistent, up-to-date comparison that reflects the latest Milvus developments. We will periodically re-run these and other vector database benchmarks and update our detailed technical paper with our findings. All of the code for these benchmarks is available on GitHub. Feel free to open issues or pull requests on that repository if you have any questions, comments, or suggestions.

Versions tested

- Milvus v2.2.3 | release date: February 10, 2023

- Milvus v2.2.0 | release date: November 18, 2022

- Milvus v2.0.0 | release date: January 25, 2022

To build a representative benchmark suite, we identified the characteristics most commonly evaluated when working with embeddings. We looked at performance across:

- Query latency – measured in milliseconds

- QPS – queries per second

- Latency vs. Throughput

- Scalability at billion scale

- Scalability with multiple replicas
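The first two metrics above can be illustrated with a small timing harness. This is a minimal sketch, not the harness used for the published benchmarks: `measure_search` and its `search_fn` argument are hypothetical names, the queries run serially from a single client, and the p99 value uses a simple nearest-rank approximation. A real benchmark would normally drive the server with many concurrent clients, so serial QPS here is only a lower bound.

```python
import math
import statistics
import time
from typing import Callable, Dict, Sequence


def measure_search(search_fn: Callable[[object], object],
                   queries: Sequence[object]) -> Dict[str, float]:
    """Run each query once, serially, and report latency (ms) and QPS.

    `search_fn` is a hypothetical callable that wraps whatever client
    call performs one search; it is an assumption of this sketch.
    """
    if not queries:
        raise ValueError("queries must not be empty")

    latencies_ms = []
    start = time.perf_counter()
    for query in queries:
        t0 = time.perf_counter()
        search_fn(query)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed_s = time.perf_counter() - start

    # Nearest-rank percentile: the smallest value that covers 99% of samples.
    latencies_ms.sort()
    p99_index = math.ceil(0.99 * len(latencies_ms)) - 1

    return {
        "mean_ms": statistics.fmean(latencies_ms),
        "p99_ms": latencies_ms[p99_index],
        "qps": len(queries) / elapsed_s,
    }
```

To compare two versions, the same query set would be passed to `measure_search` once per deployment, with identical hardware, index type, and search parameters, so that only the Milvus version differs between runs.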

About the datasets

Six datasets were used for the benchmarks:

- Four (4) datasets from ann-benchmarks were used to evaluate the different Milvus versions' search performance.

- Two (2) billion-scale datasets (BIGANN and LAION's LAION-5B) were used to evaluate the scalability of Milvus 2.2.3.

Results

ANN Search Latency

With Milvus 2.2.3, we achieved a 2.5x reduction in search latency compared to the original Milvus 2.0.0 release.

Figure: Search latency of Milvus 2.0.0, 2.2.0, and 2.2.3

ANN Search Throughput

We saw a 4.5x increase in QPS with Milvus 2.2.3 compared to 2.0.0.

Figure: QPS of Milvus 2.0.0, 2.2.0, and 2.2.3

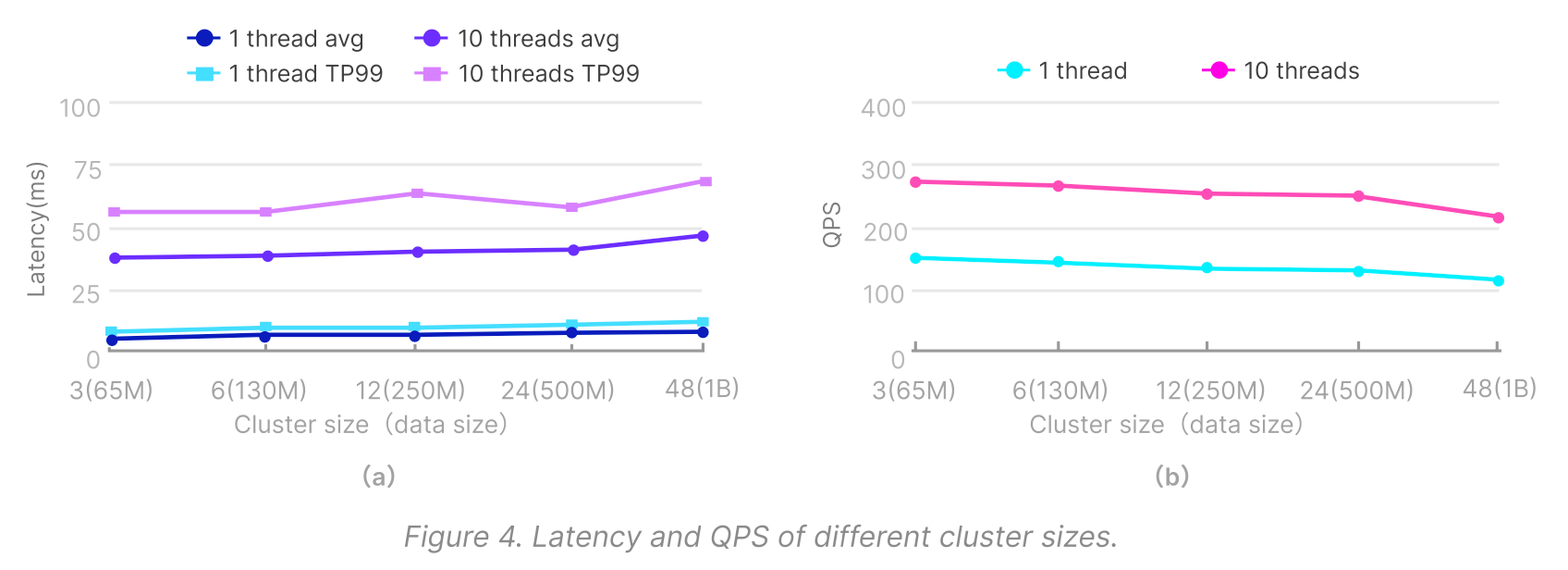

Billion Scale

A scaled-out Milvus 2.2.3 cluster showed little performance degradation in both search latency and QPS.

Figure: Latency and QPS of different cluster sizes

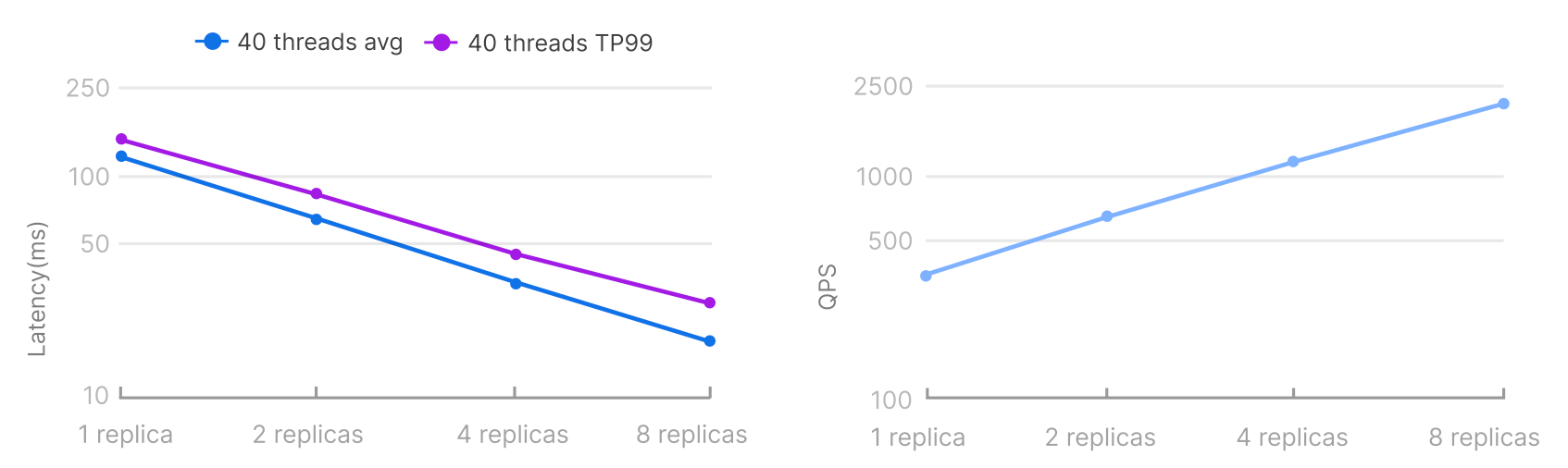

Multi-replica (Linear scalability)

Milvus 2.2.3 showed linear scalability when using multiple replicas.

Figure: Latency and QPS as the replica number doubles
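One way to check a linear-scalability claim on your own measurements is to compare the observed QPS gain with the ideal gain for each replica count. The sketch below assumes you have already measured QPS at several replica counts; `scaling_efficiency` is a hypothetical helper, and the numbers in the usage note are made up for illustration, not taken from the technical paper.

```python
from typing import Dict


def scaling_efficiency(qps_by_replicas: Dict[int, float]) -> Dict[int, float]:
    """Return observed speedup divided by ideal speedup per replica count.

    A value of 1.0 means perfectly linear scaling relative to the
    smallest replica count in the input; lower values mean that adding
    replicas yields less than a proportional QPS gain.
    """
    if not qps_by_replicas:
        raise ValueError("qps_by_replicas must not be empty")

    base_replicas = min(qps_by_replicas)
    base_qps = qps_by_replicas[base_replicas]
    if base_qps <= 0:
        raise ValueError("baseline QPS must be positive")

    return {
        replicas: (qps / base_qps) / (replicas / base_replicas)
        for replicas, qps in sorted(qps_by_replicas.items())
    }
```

For example, with illustrative inputs `{1: 100.0, 2: 200.0, 4: 380.0}`, the helper reports an efficiency of 1.0 at two replicas and roughly 0.95 at four, meaning the four-replica run reached about 95% of the ideal 4x throughput.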

Summary

We highly encourage developers to run these benchmarks and independently verify the results in the environment and on the datasets of their choice.

What's next?

- Download the detailed technical paper: "Milvus Performance Evaluation 2023."

- Sign up and get started with Zilliz Cloud.

- Join us on the Slack Community!
