Chat with Towards Data Science Using LlamaIndex

In this second post of the four-part Chat Towards Data Science blog series, we show why LlamaIndex is the leading open source data retrieval framework.

This article is the second post of the four-part Chat Towards Data Science blog series. See the first part for how to build a chatbot with Zilliz Cloud.

LlamaIndex is the leading open source data retrieval framework, and you’re about to see why. Chatbots with access to your internal knowledge store are a popular use case for many businesses. The more documents you have, the harder it becomes to manage. When it comes to making these chatbots, you have three major concerns: how to chunk the data, what metadata to store, and how to route your queries.

The Chat TDS project so far

This tutorial builds on our last tutorial on how to build a chatbot for Towards Data Science. In the last post, we made the simplest possible retrieval augmented generation chatbot using just Milvus/Zilliz. I put it up on Zilliz Cloud, the fully managed Milvus, so we could have access to the same vector database across multiple projects. You can use the free tier of Zilliz Cloud for this project, or use your own instance of Milvus, which you can spin up in the notebook using Milvus Lite.

Last time we examined our splits and saw that we had many small chunks of text. When we tried simple retrieval for the question “what is a large language model?”, the chunks we got back were semantically similar, but didn’t answer the question. In this project, we see how we can use the same vector database as the backend, but use a different retrieval process to get much better results. In this case, we use LlamaIndex to orchestrate that effective retrieval.

Why use LlamaIndex for a chatbot?

LlamaIndex is a framework for working with your data on top of a large language model. One of the major abstractions that LlamaIndex offers is the “index” abstraction. An index is a model of how the data is distributed. On top of that, LlamaIndex also offers the ability to turn these indexes into query engines. The query engine leverages a large language model and an embedding model to orchestrate effective queries and retrieve relevant results.

LlamaIndex and Milvus for Chat Towards Data Science

So, LlamaIndex helps us with orchestrating our data retrieval, how does Milvus help? Milvus is what we use as our backend for persistent vector storage with LlamaIndex. Using a Milvus or Zilliz instance allows us to port our vectors from one framework to another. In this case, we’re moving from a Python native, no orchestration app to a retrieval application powered by LlamaIndex.

Setting up your notebook to use Zilliz and LlamaIndex

As we covered earlier, for this set of projects (Chat with Towards Data Science), we use Zilliz for easier portability. Connecting to Zilliz Cloud and connecting to Milvus is almost the exact same. For an example of how to connect to Milvus and use Milvus as your local vector store, see this example on comparing vector embeddings.

For this notebook, we need to install three libraries, which we can do through pip install llama-index python-dotenv openai. I like to use python-dotenv for environment variable management.

After we get our imports, we need to load our .env file with load_dotenv(). The three environment variables we need for this project are our OpenAI API key, the URI for our Zilliz Cloud cluster, and the token for our Zilliz Cloud cluster.

! pip install llama-index python-dotenv openai

import os

from dotenv import load_dotenv

import openai

load_dotenv()

openai.api_key = os.getenv("OPENAI_API_KEY")

zilliz_uri = os.getenv("ZILLIZ_URI")

zilliz_token = os.getenv("ZILLIZ_TOKEN")

Bring your own existing collection to LlamaIndex

For this project, we are bringing an existing collection to LlamaIndex. This poses a unique challenge. LlamaIndex has its own internal structure for creating and accessing a vector database collection. However, this time, we aren’t using LlamaIndex to build our collection directly.

The primary differences between the native LlamaIndex vector store interface and bringing your own models are the embeddings and the way the metadata is accessed. I actually wrote a LlamaIndex contribution to enable this project!

LlamaIndex uses OpenAI embeddings by default, but we used a HuggingFace model to generate our embeddings. This means we have to pass the right embedding model. In addition, we use a different field to store our text. We use “paragraph” while LlamaIndex uses “_node_content” by default.

We need four imports from LlamaIndex for this section. First, we need MilvusVectorStore to use Milvus with LlamaIndex. We also need the VectorStoreIndex module to use Milvus as a vector store index, and the service context module to pass the services we want to use around. The last import we need here is the HuggingFaceEmbedding module so we can use an open source embedding model from Hugging Face.

Let’s start by getting our embedding model. All we have to do is declare a HuggingFaceEmbedding object and pass in our model name. In this case, the MiniLM L12 model. Next, we create a service context object so we can pass this embedding model around.

from llama_index.vector_stores import MilvusVectorStore

from llama_index import VectorStoreIndex, ServiceContext

from llama_index.embeddings import HuggingFaceEmbedding

embed_model = HuggingFaceEmbedding(model_name="sentence-transformers/all-MiniLM-L12-v2")

service_context = ServiceContext.from_defaults(embed_model=embed_model)

We also need to connect to our Milvus vector store. For this project, we pass five parameters: the URI for our collection, the token to access our collection, the collection name we used (“Llamalection” by default), the similarity metric we used, and the key that corresponds to which metadata field stores the text.

vdb = MilvusVectorStore(

uri = zilliz_uri,

token = zilliz_token,

collection_name = "tds_articles",

similarity_metric = "L2",

text_key="paragraph"

)

Querying your existing Milvus collection using LlamaIndex

Now that we’ve connected our existing Milvus collection and pulled the model we need, let’s check out our query. First, we create a storage context object to pass our Milvus vector database around. Then, we turn our Milvus collection into a vector store index. This is also where we pass our embedding model using the service context object we made above.

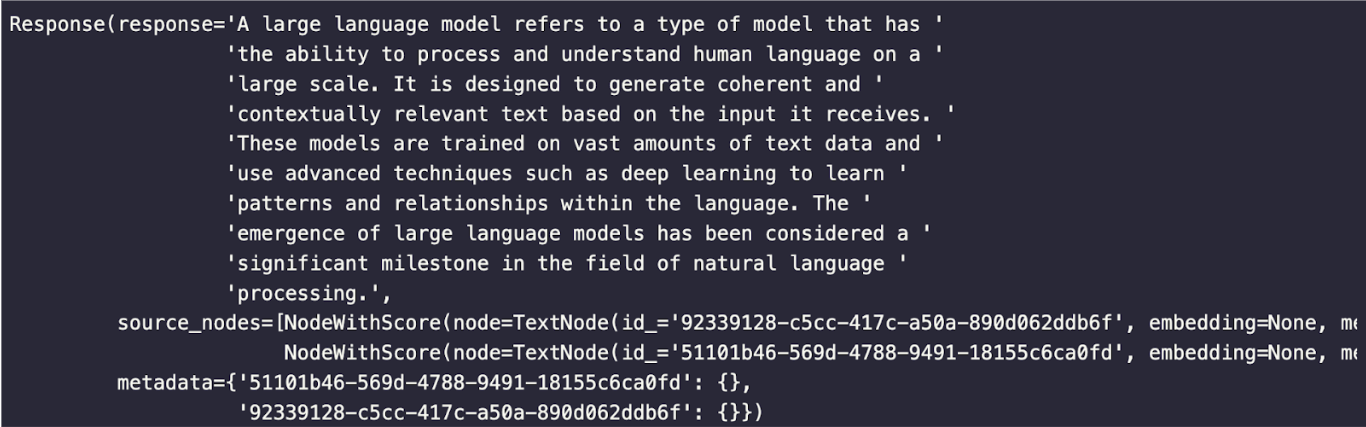

With a vector store index object initialized, we simply call the as_query_engine() function to turn it into a query engine. For this example, we compare the difference between a straightforward semantic search and the usage of a LlamaIndex query engine using by using the same question as before - “What is a large language model?”

vector_index = VectorStoreIndex.from_vector_store(vector_store=vdb, service_context=service_context)

query_engine = vector_index.as_query_engine()

response = query_engine.query("What is a large language model?")

To make the output look nice, I imported pprint and used it to print the response.

from pprint import pprint

pprint(response)

This is the response that we get using LlamaIndex to do our retrieval. This is a much better result than simple semantic search!

llamaindex-response.png

llamaindex-response.png

Summary of Chat Towards Data Science with LlamaIndex

In this version of our chat Towards Data Science project, we used LlamaIndex along with our existing Milvus collection to improve upon our first version. The first version used simple semantic similarity through vector search to find answers. Those results were not very good. Using LlamaIndex to implement a query engine gives us much better results.

The biggest challenge for this project was bringing our own existing Milvus collection. Our existing collection did not use the default values for the embedding vector dimension nor for the metadata field used to store text. These two differences can be handled through passing a specific embedding model through a service context and defining the correct text field when creating your Milvus Vector Store object.

Once we created our vector store object, we turned it into an index with the Hugging Face embeddings we used. Then, we turned that index into a query engine. The query engine leverages an LLM to make sense of the question as well as collect the responses and construct a better response.

To read the entire Chat Towards Data Science series again, here are the links:

- The Chat TDS project so far

- Why use LlamaIndex for a chatbot?

- LlamaIndex and Milvus for Chat Towards Data Science

- Summary of Chat Towards Data Science with LlamaIndex

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for FreeKeep Reading

Getting Started with LlamaIndex

Learn about LlamaIndex, a flexible data framework connecting private, customized data sources to your large language models (LLMs).

Persistent Vector Storage for LlamaIndex

Discover the benefits of Persistent Vector Storage and optimize your data storage strategy.

Query Multiple Documents Using LlamaIndex, LangChain, and Milvus

Unlock the power of LLM: Combine and query multiple documents with LlamaIndex, LangChain, and Milvus Vector Database