LlamaIndex

LlamaIndex Integration | Build Retrieval-Augmented Generation applications with Zilliz Cloud and Milvus Vector Database


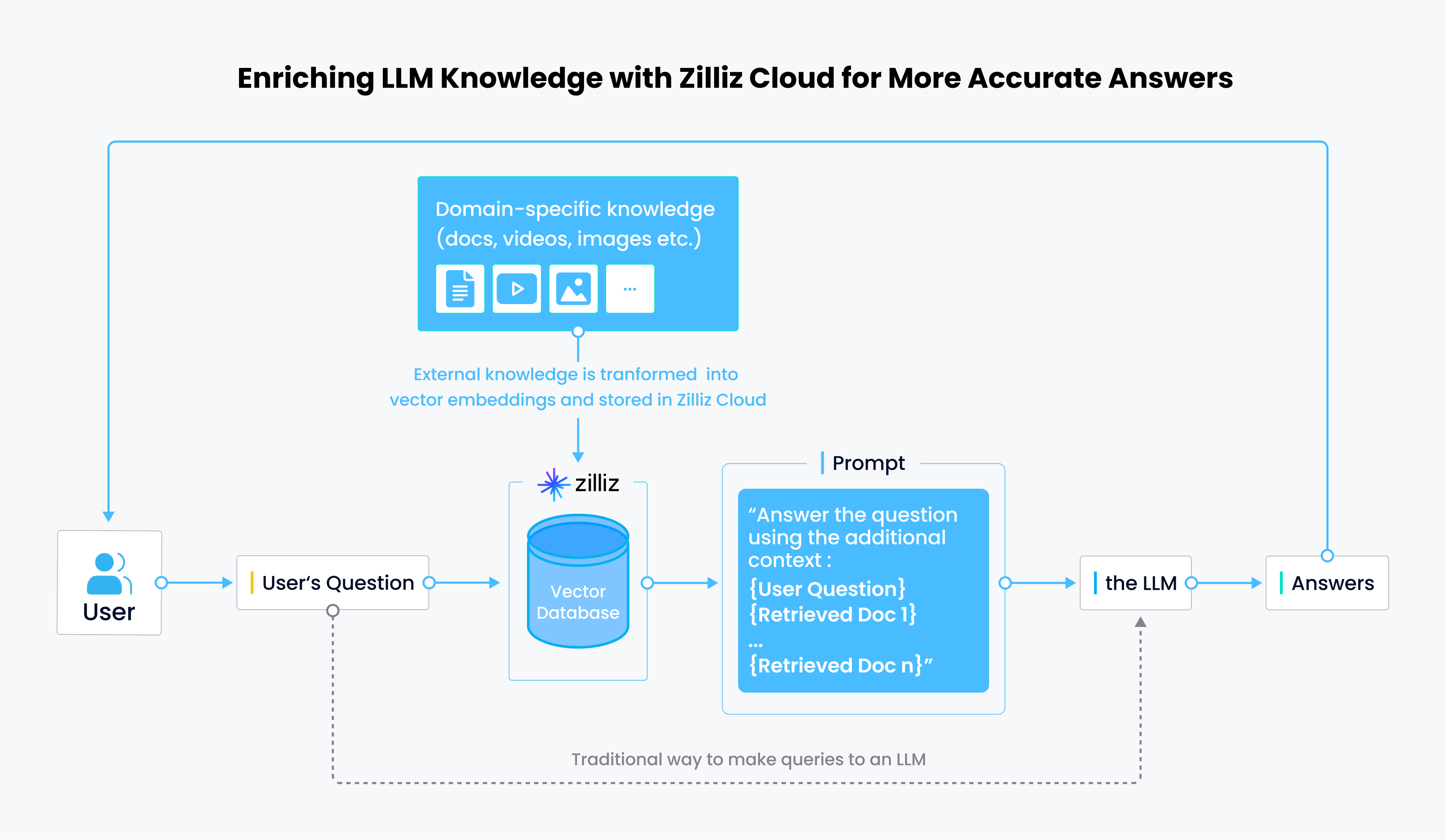

LlamaIndex (formerly GPT Index) is a data framework for large language model (LLM) applications that facilitates the ingestion, structuring, and access of private or domain-specific data. At their core, LLMs act as a bridge between human language and inferred data, whether structured or unstructured. While widely available LLMs come pre-trained on extensive public datasets, they often lack private or domain-specific data, which can lead to hallucinations or incorrect answers.

LlamaIndex integrates with vector databases in two ways:

- Internal Index Usage: LlamaIndex can function as an index using a vector store. Similar to traditional indices, this LlamaIndex-based index can store documents and effectively respond to queries.

- External Data Integration: LlamaIndex can retrieve data from vector stores, operating like a conventional data connector. Once retrieved, this data can be loaded into LlamaIndex's data structures for further processing and use. This pattern is often referred to as Retrieval-Augmented Generation (RAG).
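The two roles above (storing documents in a vector index, then retrieving them to augment a prompt) can be sketched in a few lines. This is a toy illustration only, not the LlamaIndex or Zilliz Cloud API: `ToyVectorStore`, `embed`, `cosine`, and `build_prompt` are hypothetical names, the "embedding" is a simple bag-of-words count rather than a real embedding model, and the sample documents are made up. Requires Python 3.9 or later.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._rows: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        # Index usage: store the document alongside its vector.
        self._rows.append((embed(text), text))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        # Rank stored documents by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:top_k]]


def build_prompt(question: str, store: ToyVectorStore, top_k: int = 1) -> str:
    """Retrieve context and prepend it to the question (the augmentation step)."""
    context = "\n".join(store.search(question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
store.add("The refund window for hardware orders is 30 days.")
store.add("Support is available on weekdays from 9am to 5pm.")

prompt = build_prompt("What is the refund window?", store)
print(prompt)
```

In a real application the store would be backed by a vector database, the embedding would come from a model, and the assembled prompt would be sent to an LLM to generate the answer.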

How the LlamaIndex Integration with Zilliz Cloud Works

Learn More on How To Use LlamaIndex

- Tutorial | Getting Started with LlamaIndex

- Docs | Documentation QA using Zilliz Cloud and LlamaIndex

- Video Shorts with Yujian Tang | Persistent Vector Storage with LlamaIndex

- Video with Jerry Liu, CEO LlamaIndex | Boost your LLM with Private Data using LlamaIndex

- Building a Chatbot with Zilliz Cloud, LlamaIndex and LangChain Part I

- Building LLM Apps with 100x Faster Responses and Drastic Cost Reduction Using GPTCache