Building LLM Augmented Apps with Zilliz Cloud

Over the last few months, the releases of ChatGPT (built on GPT-3.5) in November 2022 and GPT-4 in March 2023 have sparked a seismic shift in how users interact with data and applications. The transformative power of ChatGPT lies not merely in its ability to generate text but in its astounding comprehension of, and adaptability to, context. It provides a more natural and intuitive communication interface, forging a closer connection between humans and machines and turning arduous tasks into effortless dialogue. This burgeoning realm of Large Language Models (LLMs) has changed our expectations of software applications, pushing developers toward a future where users converse with applications rather than merely operate them.

Current challenges of LLMs in applications

Despite their potential, LLMs like ChatGPT bring several challenges that developers must tackle when building them into applications.

First, LLMs are primarily trained on publicly available data, so they often lack the nuance and context of domain-specific, proprietary, or private information. Without access to such data, the model struggles to provide responses tailored to specialized industries or applications.

The second challenge is known as hallucination: the tendency of LLMs to generate responses from incomplete or inaccurate information. Because LLMs base their answers solely on the data they were trained on, they can produce misleading, incorrect, or completely fabricated responses when that data lacks the information they need.

Furthermore, LLMs struggle to stay up to date. Training data quickly becomes outdated, and because retraining these models is expensive (on the order of $1.4 million for a model like GPT-3), their knowledge is not refreshed regularly. As a result, they can give outdated information in their responses.

From a performance standpoint, using LLMs in applications can be costly and slow. They use token-based pricing: LLMs like ChatGPT bill for every token in both the prompt and the response. Costs add up quickly, especially with large volumes of queries or repetitive questions. Performance can also degrade during peak usage hours, leading to frustrating delays. In addition, LLMs have a hard cap on the number of tokens per request (prompt and response combined), which restricts the complexity and length of interactions. For instance, GPT-3.5 Turbo has a limit of 4,096 tokens, while GPT-4 has a limit of 8,192 tokens.
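To make the token arithmetic concrete, here is a minimal sketch that checks whether a request fits a model's context window and estimates its cost. The 4,096 and 8,192 limits are the ones cited above; the per-1,000-token prices are hypothetical placeholders, not published rates. In a real application the token counts would come from a tokenizer; here they are passed in directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    context_limit: int               # max tokens per request (prompt + response)
    prompt_price_per_1k: float       # HYPOTHETICAL USD price per 1,000 prompt tokens
    completion_price_per_1k: float   # HYPOTHETICAL USD price per 1,000 response tokens


# Context limits from the text; prices are illustrative placeholders only.
GPT35 = ModelSpec("gpt-3.5-turbo", 4096, 0.0015, 0.002)
GPT4 = ModelSpec("gpt-4", 8192, 0.03, 0.06)


def fits_context(model: ModelSpec, prompt_tokens: int, max_completion_tokens: int) -> bool:
    """True if the prompt plus the reserved response budget fits in the window."""
    return prompt_tokens + max_completion_tokens <= model.context_limit


def estimate_cost(model: ModelSpec, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimated USD cost of one request: both prompt and response tokens are billed."""
    return (prompt_tokens / 1000) * model.prompt_price_per_1k + (
        completion_tokens / 1000
    ) * model.completion_price_per_1k


# A 3,500-token prompt with a 1,000-token answer budget overflows a 4,096-token window...
print(fits_context(GPT35, 3500, 1000))  # False
# ...but fits in an 8,192-token window.
print(fits_context(GPT4, 3500, 1000))  # True
# Repetitive traffic multiplies even small per-request costs.
print(round(estimate_cost(GPT35, 1000, 500) * 10_000, 2))
```

Reserving a response budget up front matters because the limit is shared: a long prompt leaves fewer tokens for the answer.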

Lastly, the pre-training data used by LLMs is effectively immutable. Any incorrect or outdated information included during training persists, because there is currently no straightforward way to modify, correct, or remove such data after training.

As we venture further into the world of LLM-augmented applications, addressing these challenges is crucial. This is where solutions like Zilliz Cloud and GPTCache come into play, as we explore in the following sections.

Augmenting LLM apps with Zilliz Cloud and GPTCache

Harnessing the full potential of LLMs like ChatGPT in applications means overcoming the critical challenges discussed above: accuracy, timeliness of information, cost-efficiency, and performance. Solutions such as Zilliz Cloud and GPTCache provide robust answers to these issues.

Enhancing accuracy and timeliness

Zilliz Cloud brings transformative solutions that substantially improve the accuracy of responses generated by LLMs and keep their knowledge base up-to-date.

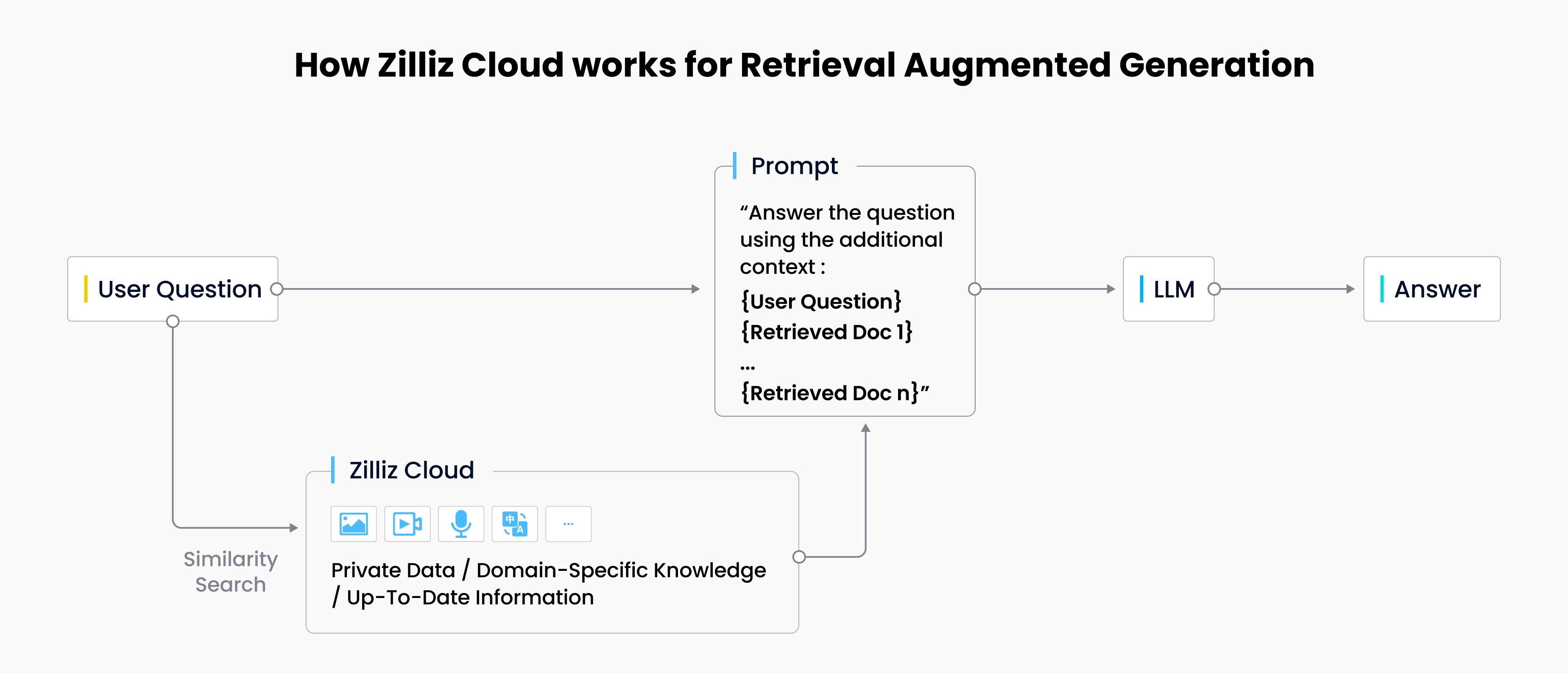

By allowing developers and enterprises to securely store domain-specific, up-to-date, and confidential private data outside the LLM, Zilliz Cloud helps keep responses accurate and relevant. When a user poses a question, the LLM application uses an embedding model to convert the question into a vector. Zilliz Cloud then performs a similarity search and returns the top-k results most relevant to the query. These results, together with the original question, form a comprehensive context from which the LLM generates a more precise response. This approach mitigates hallucination, where LLMs produce incorrect or fabricated information because their data is insufficient or out of date.
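The retrieval flow described above can be sketched in plain Python. This is a minimal, self-contained illustration, not Zilliz Cloud's API: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and a brute-force cosine-similarity scan over an in-memory list stands in for the indexed similarity search that Zilliz Cloud would perform at scale. The prompt wording in `build_prompt` is likewise an assumption.

```python
import math
import re
from collections import Counter

# Common words ignored by the toy embedding.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "for", "on", "what", "how", "to", "in"}


def embed(text: str) -> Counter:
    """HYPOTHETICAL stand-in for an embedding model: a sparse bag-of-words vector."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Brute-force similarity search; a vector database does this with an index."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Combine the retrieved passages and the original question into one prompt."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


docs = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The office is closed on public holidays.",
    "Premium support is available 24/7 for enterprise customers.",
]
question = "What is the refund policy for returns?"
prompt = build_prompt(question, top_k(question, docs, k=1))
print(prompt)  # this prompt, not the bare question, is what gets sent to the LLM
```

Only the few most relevant passages are placed in the prompt, which keeps the request within the model's token limit while grounding the answer in private data.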

How Zilliz Cloud works for Retrieval Augmented Generation


Moreover, the integration of Zilliz Cloud allows for regular updates to the knowledge base, ensuring that the LLMs can always access the most recent and accurate information. This feature addresses the problem of LLMs being trained on outdated data, providing users with answers that reflect the latest insights.
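Because the knowledge lives in the vector database rather than in the model's weights, keeping it current is an ordinary data operation. The sketch below uses a hypothetical in-memory `KnowledgeBase` class to illustrate the idea: upserting a record by primary key replaces the stale version, and deleting removes it, with no retraining involved. Milvus and Zilliz Cloud support comparable insert, upsert, and delete operations through their SDKs; this class does not reproduce that API, and its keyword lookup is a simplification of vector search.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    """HYPOTHETICAL in-memory store keyed by primary key (not a Zilliz Cloud client)."""

    records: dict[str, str] = field(default_factory=dict)

    def upsert(self, doc_id: str, text: str) -> None:
        # Insert a new record, or overwrite the existing record with the same key.
        self.records[doc_id] = text

    def delete(self, doc_id: str) -> None:
        # Remove outdated or incorrect information; missing keys are ignored.
        self.records.pop(doc_id, None)

    def lookup(self, keyword: str) -> list[str]:
        # Naive keyword match standing in for vector similarity search.
        return [t for t in self.records.values() if keyword.lower() in t.lower()]


kb = KnowledgeBase()
kb.upsert("pricing", "The Pro plan costs $20 per month.")
kb.upsert("pricing", "The Pro plan costs $25 per month.")  # price changed: overwrite
kb.upsert("legacy", "The Basic plan is available.")
kb.delete("legacy")  # plan discontinued: remove it
print(kb.lookup("plan"))  # ['The Pro plan costs $25 per month.']
```

The next query after an update already sees the corrected data, which is exactly what a model's frozen training data cannot offer.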

Maximizing cost-efficiency and performance

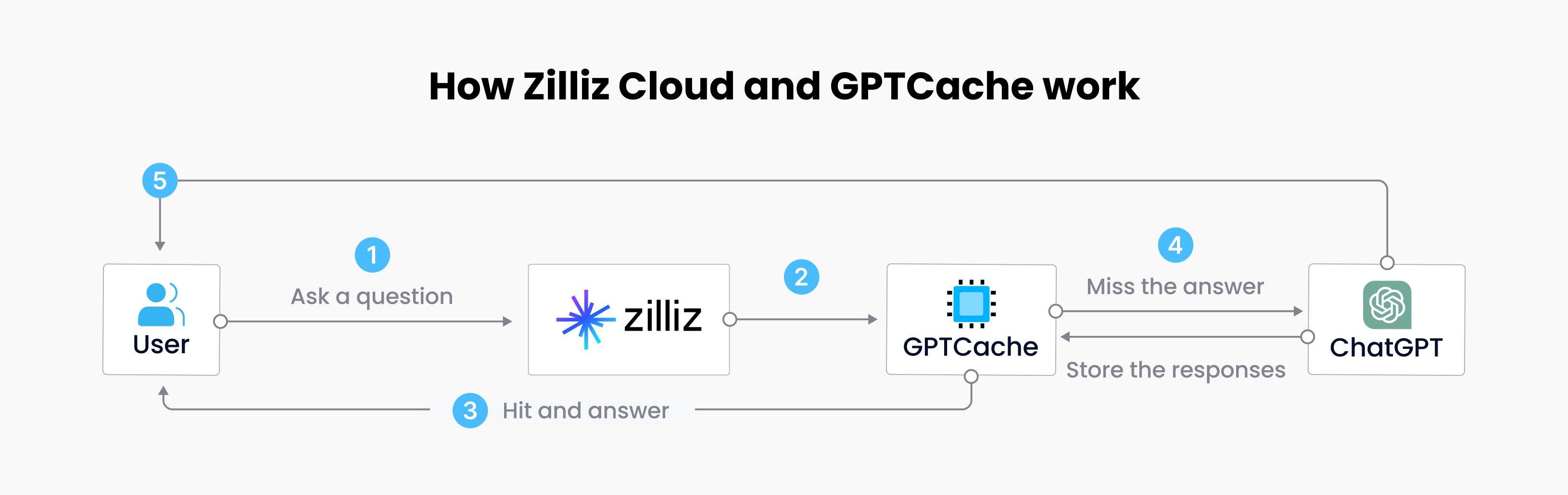

Combining Zilliz Cloud with GPTCache creates an ecosystem that reduces operational costs and enhances the performance of LLM apps.

GPTCache is an open-source semantic cache that stores LLM responses. This is particularly beneficial for repetitive or similar queries, because it avoids unnecessary LLM invocations that increase costs and slow responses, especially during peak usage. When a user poses a question in this ecosystem, GPTCache is checked first for a semantically similar question that has already been answered. If a cached answer is found, it is returned to the user immediately. If not, the query is sent to the LLM, and the LLM's response is stored in GPTCache for future use, optimizing resource utilization.
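The cache-first flow can be sketched as follows. This is a simplified, hypothetical illustration of the semantic-caching idea, not GPTCache's actual API: `SemanticCache` and `call_llm` are made-up names, `call_llm` is a placeholder for a real model request, bag-of-words cosine similarity stands in for embedding similarity, and the 0.7 threshold is an arbitrary assumption. GPTCache itself offers pluggable embedding models, vector stores, and similarity evaluators.

```python
import math
import re
from collections import Counter
from typing import Callable


def bow(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """HYPOTHETICAL semantic cache: reuses an answer when a similar question was seen."""

    def __init__(self, llm: Callable[[str], str], threshold: float = 0.7):
        self.llm = llm
        self.threshold = threshold  # ASSUMED minimum similarity for a cache hit
        self.entries: list[tuple[Counter, str]] = []
        self.llm_calls = 0

    def ask(self, question: str) -> str:
        q = bow(question)
        # 1. Find the most similar previously answered question, if any.
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]  # cache hit: no LLM call, no token cost
        # 2. Cache miss: call the LLM and store its answer for next time.
        self.llm_calls += 1
        answer = self.llm(question)
        self.entries.append((q, answer))
        return answer


def call_llm(question: str) -> str:
    # HYPOTHETICAL placeholder for a real (paid, slower) LLM request.
    return f"LLM answer to: {question}"


cache = SemanticCache(call_llm)
cache.ask("How do I install Milvus with Docker?")
cache.ask("how do i install milvus with docker")  # near-duplicate: served from cache
cache.ask("What is a vector index?")  # unrelated: new LLM call
print(cache.llm_calls)  # 2
```

The threshold is the key design choice: set it too low and users receive answers to different questions; set it too high and the cache rarely hits.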

How Zilliz Cloud and GPTCache work


By working in tandem, Zilliz Cloud and GPTCache present a powerful solution for overcoming the challenges of implementing LLMs in applications. This synergy creates an environment where AI applications can generate more accurate, timely, cost-efficient, and performant responses, significantly improving user experience and interaction.

Get started with the CVP stack to build your LLM apps

Having seen how Zilliz Cloud and GPTCache enhance LLM applications, the next step is to put these tools to work and start building your own applications. An exciting development in AI technology, the CVP stack (ChatGPT/LLMs + a vector database + prompt-as-code), provides a robust framework for doing so.

For those new to this space, OSS Chat is an excellent showcase of the CVP stack. OSS Chat is an AI chatbot that uses grounded information from sources such as GitHub to answer questions about popular open-source software. Under the hood, OSS Chat is built with Akcio, an open-source CVP stack for Retrieval Augmented Generation (RAG). The retrieval logic, expressed in Akcio, transforms GitHub repositories and documentation pages into embedding vectors and stores the vector data in Zilliz Cloud. When a user asks about an open-source project, Zilliz Cloud conducts a similarity search to find the top-k most relevant results. These results and the user’s original question are then composed into a comprehensive prompt from which the LLM returns a high-quality answer.
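Before documentation pages can be turned into embedding vectors, they are usually split into passages small enough to embed and to fit in a prompt. The sketch below shows one common approach, fixed-size word windows with overlap. It is an illustrative assumption, not Akcio's documented chunking strategy, and the `chunk_size` and `overlap` defaults are arbitrary.

```python
def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words
    with the previous window so that sentences cut at a boundary keep some context."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


# Each chunk would then be embedded and stored in the vector database,
# typically alongside metadata such as its source URL.
doc = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_text(doc, chunk_size=5, overlap=2):
    print(chunk)
```

Smaller chunks make retrieval more precise but give the LLM less surrounding context per result; the overlap softens that trade-off.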

To provide a more interactive learning experience, we invite you to join a webinar with OriginTrail and us on September 7. This session will delve deeper into the process of building LLM apps, allowing you to engage directly with experienced developers and experts in the field of LLM app development. The innovative combination of Zilliz Cloud, GPTCache, and LLMs opens up possibilities in the AI application development landscape. There's no better time to explore these groundbreaking technologies and embark on your journey.

Getting started with Zilliz Cloud

- Start for free with the new Starter Plan! It's a great way to get started: no installation hassles and no credit card required!

- Or start your 30-day free trial of the Standard plan with $100 worth of credits upon registration and the opportunity to earn up to $200 worth of credits in total.

- Dive deeper into the Zilliz Cloud documentation.

- Check out the guide on migrating from Milvus to Zilliz Cloud.
