WhyHow

Build more controlled retrieval workflows within your RAG pipeline with WhyHow and Milvus or Zilliz Cloud

What is WhyHow?

WhyHow is a platform that gives developers the building blocks to organize, contextualize, and reliably retrieve unstructured data for complex Retrieval Augmented Generation (RAG). The Rule-based Retrieval Package is a Python package developed by WhyHow that helps developers build more accurate retrieval workflows within RAG by adding advanced filtering capabilities. It integrates with OpenAI for embeddings and text generation, and with Milvus and Zilliz Cloud (fully managed Milvus) for efficient vector storage and similarity search.

Why Integrate WhyHow and Milvus/Zilliz?

Retrieval Augmented Generation (RAG) enhances large language models (LLMs) by retrieving relevant context from an external data source and supplying it alongside the user's query, so the model can produce more accurate answers. However, a simple RAG pipeline does not always retrieve the correct data chunks. Common causes include the black-box nature of retrieval and LLM response generation, poorly phrased user queries that return suboptimal results from a vector database, and the need to include data that is contextually relevant but semantically dissimilar to the query.

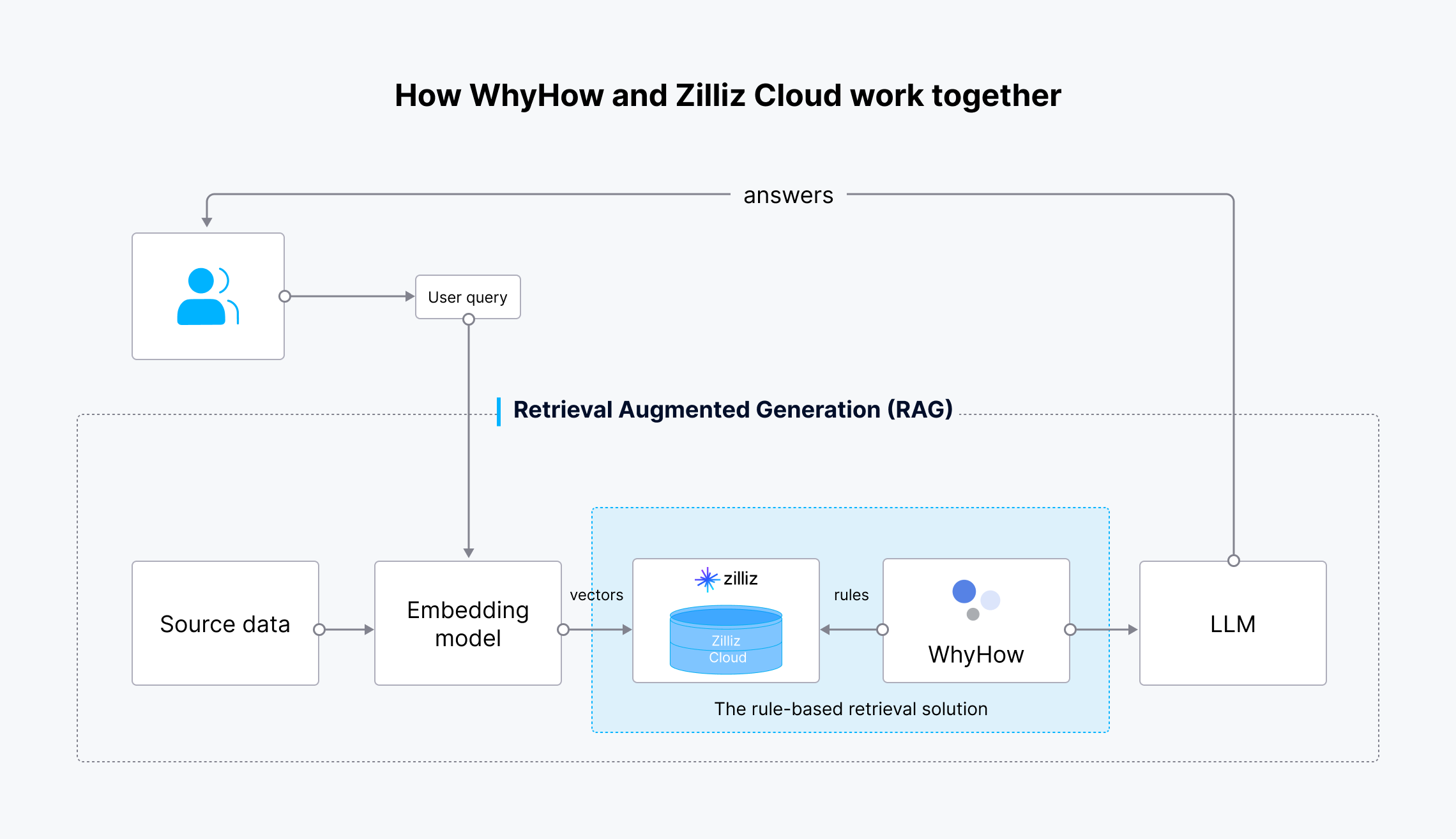

To overcome these challenges, you need greater control over which raw data chunks are retrieved. Integrating WhyHow with Milvus/Zilliz lets you build a rule-based retrieval solution: you define specific rules, map them to the relevant data chunks, and apply them before the similarity search runs. These rules narrow each query to a targeted set of chunks, which increases the chance of retrieving the data needed for an accurate response. Further prompt and query tuning can then improve output quality over time.

How the WhyHow and Milvus/Zilliz Integration Works

The rule-based retrieval solution built with WhyHow and Milvus/Zilliz performs the following tasks:

Vector Store Creation: This integration creates a Milvus collection to store the chunk embeddings.

Splitting, Chunking, and Embedding: When you upload your documents, the integration automatically splits them into chunks and creates embeddings before ingesting them into Milvus or Zilliz Cloud. The package currently supports LangChain's PyPDFLoader and RecursiveCharacterTextSplitter for PDF processing, metadata extraction, and chunking, and OpenAI's text-embedding-3-small model for embeddings.
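To illustrate what splitting into overlapping chunks means, here is a deliberately simplified, dependency-free sketch. It cuts fixed-size character windows, whereas RecursiveCharacterTextSplitter first tries larger separators (paragraphs, lines, words) before falling back to single characters. `chunk_size` and `chunk_overlap` mirror that splitter's parameter names; the `split_text` and `chunk_pages` helpers and the record layout are hypothetical.

```python
def split_text(text: str, chunk_size: int = 20, chunk_overlap: int = 5) -> list[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


def chunk_pages(pages: list[str], source: str, chunk_size: int = 20, chunk_overlap: int = 5) -> list[dict]:
    # One record per chunk; the file name and 0-based page index are kept as
    # metadata so that rules can filter on them later.
    records = []
    for page_number, page_text in enumerate(pages):
        for piece in split_text(page_text, chunk_size, chunk_overlap):
            records.append({"text": piece, "source": source, "page": page_number})
    return records


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # → ['abcd', 'defg', 'ghij']
```

The overlap repeats a few characters at each boundary so that a sentence cut in half still appears intact in at least one chunk more often.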

Data Insertion: The integration uploads the embeddings and their metadata to Milvus or Zilliz Cloud.
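The data-insertion step pairs each chunk with its embedding and metadata. The sketch below assumes chunk records shaped like `{"text": ..., "source": ..., "page": ...}` and uses a hypothetical hashed bag-of-words `toy_embed` function purely so the example runs offline; the real integration uses OpenAI's text-embedding-3-small, whose vectors have 1536 dimensions by default. The field names `id`, `vector`, `text`, `filename`, and `page` are assumptions about the collection schema.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 8) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero for empty text
    return [v / norm for v in vec]


def to_rows(records: list[dict], embed=toy_embed) -> list[dict]:
    # One dict per entity: primary key, vector, and the scalar metadata fields
    # that rule-based filters will later reference.
    return [
        {
            "id": i,
            "vector": embed(r["text"]),
            "text": r["text"],
            "filename": r["source"],
            "page": r["page"],
        }
        for i, r in enumerate(records)
    ]


rows = to_rows([{"text": "Employees accrue leave monthly.", "source": "handbook_2024.pdf", "page": 4}])
```

A list of dicts like this is the row format accepted by `MilvusClient.insert(collection_name=..., data=rows)` in pymilvus, provided the collection's schema (or its dynamic fields) covers these names.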

Auto-filtering: Using user-defined rules, the integration automatically builds a metadata filter to narrow the query against the vector store.

The workflow of this integration is as follows:

[Figure: How WhyHow and Zilliz Cloud work together]

- The source data is transformed into vector embeddings using OpenAI’s embedding model.

- The vector embeddings are ingested into Milvus or Zilliz Cloud for storage and retrieval.

- The user query is also transformed into vector embeddings and sent to Milvus or Zilliz Cloud to search for the most relevant results.

- WhyHow applies the user-defined rules as metadata filters on the vector search, so only chunks that match the rules are considered.

- The retrieved results and the original user query are sent to the LLM.

- The LLM generates a more accurate response grounded in the retrieved chunks and returns it to the user.
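The last two steps, sending the retrieved chunks and the original query to the LLM, can be sketched as assembling a chat message list in the role/content format of OpenAI's Chat Completions API. The prompt wording, the chunk dictionary layout, and the `build_messages` helper are hypothetical; the package's actual prompt is not shown in this document.

```python
def build_messages(query: str, chunks: list[dict]) -> list[dict]:
    # Number each retrieved chunk and tag it with its file and page so the
    # model (and the reader) can trace an answer back to its source.
    context = "\n\n".join(
        f"[{i}] ({c['filename']}, page {c['page']}) {c['text']}"
        for i, c in enumerate(chunks, start=1)
    )
    system = (
        "Answer using only the numbered context below. "
        "If the context does not contain the answer, say so.\n\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": query},
    ]


retrieved = [
    {"text": "Employees accrue 20 days of leave per year.", "filename": "handbook_2024.pdf", "page": 4},
]
messages = build_messages("How many vacation days do employees get?", retrieved)
# `messages` could then be sent with the OpenAI client, for example
# client.chat.completions.create(model=..., messages=messages)
```

Keeping the retrieved context in the system message and the user's question in its own message leaves the original query unchanged, which matches the workflow step of sending both to the LLM.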

How to Use WhyHow and Milvus/Zilliz Cloud