Gaussian Processes: A Comprehensive Guide to Probabilistic Modeling

Gaussian Processes: A Comprehensive Guide to Probabilistic Modeling

Machine learning models traditionally produce point predictions, representing the most probable outcome based on the input data. Real-life situations do not follow this simple pattern. Predicting future outcomes in financial sectors, healthcare, and robotics requires understanding prediction results and their accompanying uncertainty levels.

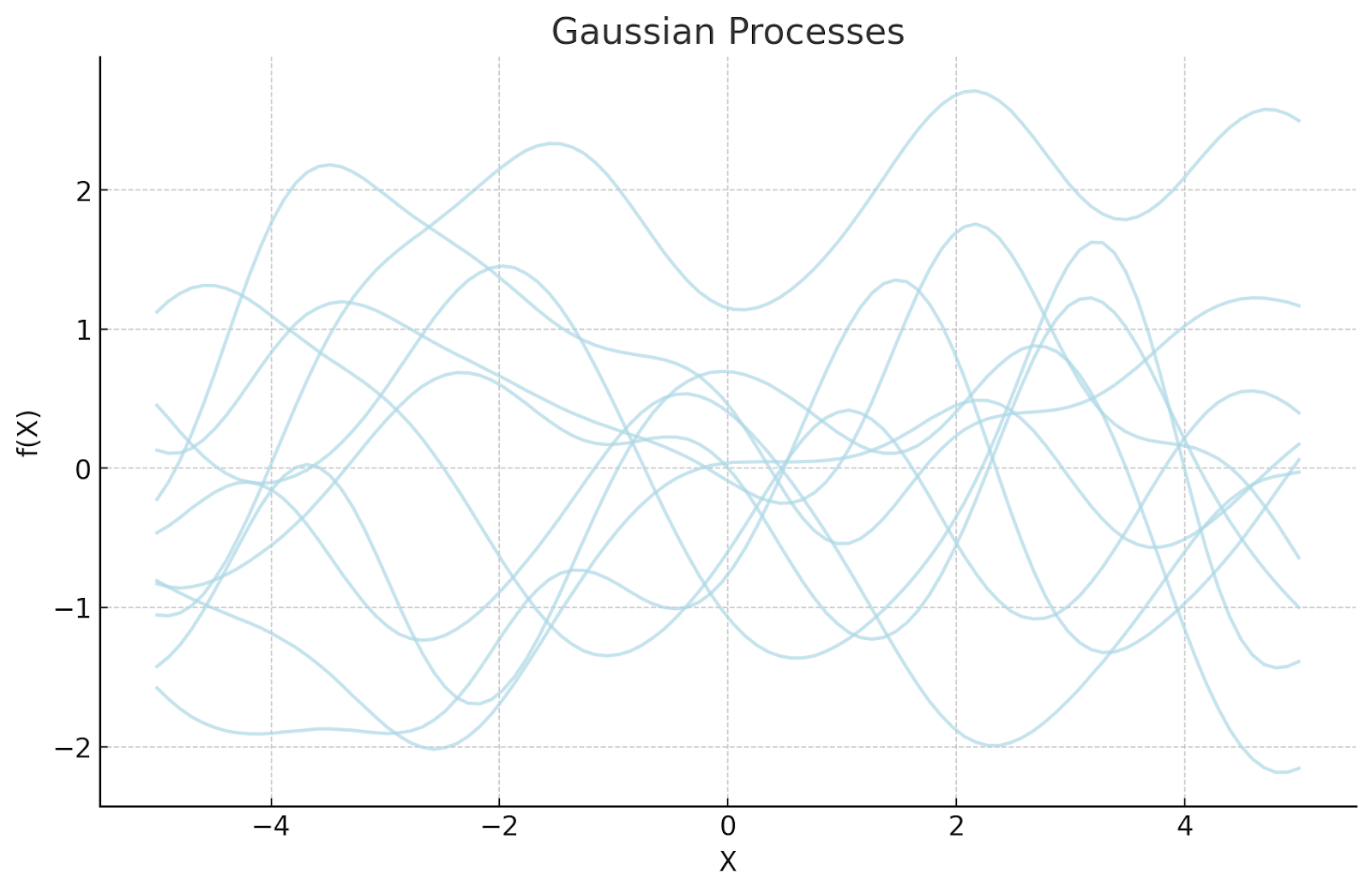

Figure 1 - Gaussian Processes Illustration

Figure 1 - Gaussian Processes Illustration

Figure 1: Gaussian Processes Illustration

Gaussian Processes (GPs) serve as a solution to these challenges. GPs provide probabilistic predictions that deliver an estimated value and a confidence measurement, representing the prediction's uncertainty level. GPs are valuable for probabilistic modeling, offering robust quantitative uncertainty assessment.

Gaussian Processes (GPs) differ from many machine learning models by defining a distribution over functions instead of relying on fixed parameters. This allows them to adapt flexibly to data and provide explicit uncertainty quantification in predictions.

One of their biggest strengths is working well with small datasets while avoiding overfitting. They also adapt dynamically by incorporating new information, making them ideal for situations where data is limited or constantly changing.

This guide explains Gaussian processes by presenting their fundamental concepts, operational mechanisms, and practical applications. We will also see the tools you can use to implement these processes.

What Is the Gaussian Process?

The Gaussian process is a flexible, non-parametric machine learning model that infers continuous functions. It models data relationships by defining a distribution over functions rather than relying on fixed parameters. The Gaussian process differs from parametric functions because it adjusts its behavior according to observed data.

GPs are particularly useful in probabilistic modeling because they provide both predictions and uncertainty estimates. This is possible through Bayesian inference, which helps GPs refine their predictions as new data becomes available.

GPs maintain flexibility through their adaptable structure, which enables them to handle complex data structures without predefined mathematical patterns. They are valuable in regression models, optimization problems, and forecasting scenarios that need uncertainty estimation.

Approximation methods enable the practical utilization of these models despite their computational complexity. The data-learning capability of GPs makes them valuable for many contemporary machine-learning applications, particularly those requiring uncertainty quantification.

How It Works

Now that we have established the fundamental concepts of Gaussian processes, let’s discuss how they model data, define relationships, and make confident predictions.

Multivariate Normal Distribution

GPs rely on the multivariate normal distribution as their fundamental building block, combined with covariance functions (kernels) to model relationships and capture uncertainty in data. The distribution expands the fundamental Gaussian distribution to analyze multiple variables through a single probabilistic framework. GPs use this capability to construct complex data relationships while preserving predictive consistency.

The multivariate normal distribution effectively models dependencies between variables, which is its main operational advantage. The covariance matrix functions as the central component that establishes the degree of influence between two variables as they change.

The principle allows GPs to define distributions representing all possible functions suitable for observed data. The training points lead to a GP creating a probabilistic model that includes observed data and unknown points. The known values in the data allow the model to update its prediction for new points while maintaining a probabilistic and continuous interpolation.

Kernels (Covariance Functions)

The Gaussian Process defines data point relationships through kernels, which are also known as covariance functions. The kernel controls information transmission between points, determining functional output patterns. The choice of kernel determines the pattern types that the model detects, including periodic patterns alongside smooth and abrupt changes. Popular kernel functions include:

Squared exponential kernel: It creates smooth, continuous patterns, making it suitable for most regression applications. The model predicts that points nearer to one another demonstrate higher levels of correlation.

Matérn kernel: The kernel enables users to specify the level of function smoothness, thus making it applicable to data sets featuring irregular patterns and abrupt changes.

Periodic kernel: This recognizes repetitive data patterns and seasonal effects, which makes it suitable for forecasting time series data and detecting cyclical patterns.

Linear kernel: It is an effective model for detecting linear relationships, which helps discover linear dependencies in data.

GPs achieve better accuracy and interpretability when users select appropriate kernels for different datasets.

Non-Parametric Models

Gaussian processes function as non-parametric methods because they avoid making assumptions about fixed equation descriptions for data. The model draws patterns from observed points without imposing any fixed equation.

GPs maintain flexibility because they can handle complex, evolving functions through new data inputs. GPs expand their complexity through data collection because they do not use fixed mathematical structures like parametric models. Such applications benefit enormously from using GPs because of their ability to adapt to unknown or changing functions.

Joint and Conditional Probability

The predictive process of GPs depends on using joint and conditional probability distributions. A GP creates a joint Gaussian distribution structure for observed data points. Each new point leads the model to condition its predictions based on previously observed data.

The estimation process becomes possible through Bayesian inference because new data helps improve function predictions without losing previously acquired knowledge. The model produces both predictive values and uncertainty measures that become confidence intervals. This feature makes estimates trustworthy for essential applications, including robotics, finance, and healthcare.

Hyperparameters and Their Influence

The GP model operates under hyperparameters' control, which defines kernel actions and model adaptability. Key hyperparameters include:

Length scale: The length scale parameter controls the speed at which correlations decrease, determining the smoothness of the resulting functions. The model length scale controls the speed of change and the detection of detailed patterns but also affects the establishment of broader data trends.

Variance: The variance parameter directly controls how much the function values spread across the domain, which affects the uncertainty predictions. A higher variance increases the model's capability to detect significant function value changes, but lower variance produces more risk-averse predictions.

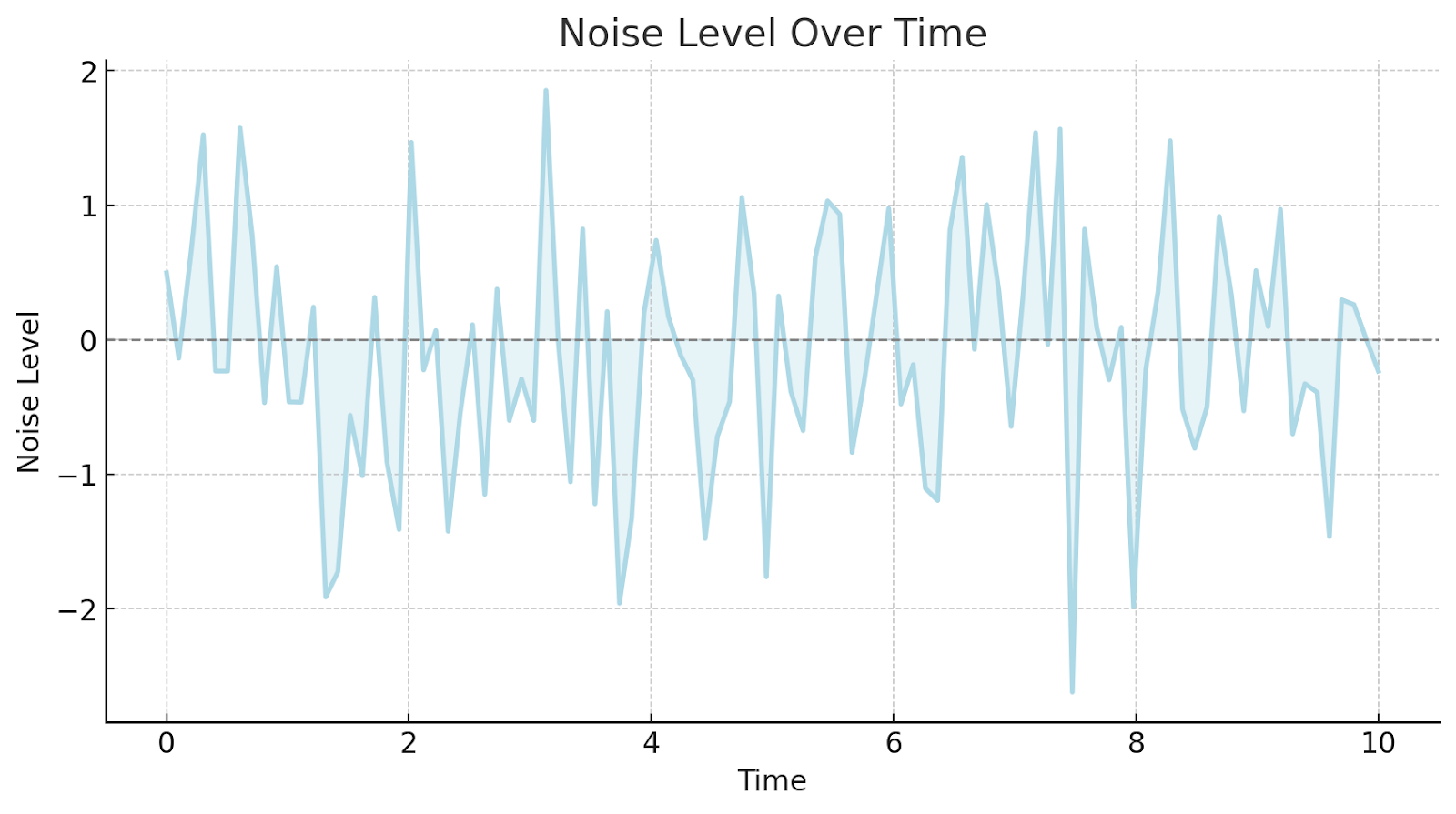

Noise level: The noise level parameter in Gaussian processes distinguishes actual data signals from random noise by accounting for data variability. It regulates measurement uncertainty to prevent the overfitting of noisy observations but allows reliable measurements to pass through.

Figure 2 - Noise Level over Time

Figure 2 - Noise Level over Time

Figure 2: Noise Level over Time

Accurate predictions require adjusting these hyperparameters. Optimization techniques, such as maximum likelihood estimation and Bayesian optimization, discover optimal parameter values for specific datasets.

Connections to Other Models

Gaussian processes operate independently but share key principles with multiple machine-learning models. The relationships between GPs and other methods help explain their strengths and suitable applications.

Relevance Vector Machines (RVMs)

GPs demonstrate parallel functionality with Relevance Vector Machines (RVMs) because they both employ probabilistic prediction models. RVMs operate with a limited basis function set, which results in better computational performance. GPs provide continuous function distributions that generate more detailed uncertainty predictions than other models.

RVMs' Bayesian inference depends on data sparsity assumptions, but GPs model uncertainty through kernel functions without these constraints. GPs are better for situations requiring precise confidence interval calculations and adaptable function estimation capabilities.

Kalman Filtering

The probabilistic modeling capabilities of Gaussian Processes match those of Kalman Filters through their shared ability to handle uncertainty. Kalman Filters excel in linear dynamic systems through recursive estimation techniques, which enables them to function effectively in real-time tracking and control systems.

GPs deliver a generalized modeling system that handles diverse data structures through nonlinear functions. Markovian state dependencies form the basis of Kalman Filters, but GPs establish their relationships through covariance structures, which support flexible and smooth function approximations.

Comparison with Other Machine Learning Models

GPs present distinctive benefits but require comparison with standard machine learning models to determine appropriate applications and limitations.

| Aspect | Gaussian Processes (GPs) | Neural Networks (NNs) | Support Vector Machines (SVMs) |

| Model Type | Non-parametric, probabilistic | Parametric, deep learning-based | Parametric, margin-based |

| Uncertainty Quantification | Provides confidence intervals | Limited, except for Bayesian NNs | Requires additional methods |

| Scalability | O(N³) complexity, less suited for large datasets | Scales well with large datasets | Efficient for smaller datasets |

| Flexibility | Kernel choice determines adaptability | Can model highly complex functions | Kernel-dependent flexibility |

| Interpretability | Moderate; kernels provide insights | Low; often considered a "black box" | Moderate; decision boundary explicit |

| Training Data Requirements | Performs well with small datasets | Requires large datasets | Effective with medium-sized datasets |

| Applications | Regression, forecasting, Bayesian optimization | Image, speech recognition, NLP | Classification, bioinformatics |

Benefits and Challenges

GPs are machine learning approaches that deliver substantial benefits and technical constraints. Understanding both advantages and limitations helps determine proper usage scenarios for GPs.

Benefits

Probabilistic framework: GPs define function distributions for predictive results and confidence estimates. These models excel in diagnostic systems and risk assessments needing precise uncertainty calculations.

Non-parametric nature: GPs' model structure remains independent of any predetermined function shape. This demonstrates dynamic pattern adaptation capabilities because they adjust to complex data structures.

Incorporation of prior knowledge: The mean and covariance functions enable GPs to incorporate domain-specific knowledge into their modeling process. The addition of historical data or expert insights improves model accuracy through GPs.

Versatility across domains: GPs effectively serve geostatistics, time-series forecasting, and Bayesian optimization, proving useful for adaptable function modeling.

Closed-form inference: Gaussian Processes deliver exact posterior solutions for Gaussian noise regression, enabling efficient inference without lengthy numerical approximations.

Challenges

Computational scalability: GPs need O(N³) (cubic time complexity in the number of data points, N) operations to function, which results in high computational costs for large datasets. Approximation methods known as sparse GPs deliver better efficiency yet introduce new limitations to the model.

Kernel selection sensitivity: The selection of kernel function remains a critical factor in determining how accurately GPs model data. Using an inappropriate kernel selection results in generalization problems requiring thorough tuning and validation steps.

Limited extrapolation capability: Generalization beyond known areas remains challenging for GPs, who perform better with interpolation than extrapolation. The model relies on observed data, leading to unreliable predictions outside these areas.

Hyperparameter optimization: Finding proper hyperparameters, including length scale and variance, is difficult. Bayesian optimization is an automated system that enhances the efficiency of parameter adjustments.

Implementation complexity: Implementing GPs requires advanced math, such as Bayesian inference and covariance function analysis. Successful implementation and tuning require a complete understanding of these concepts.

Use Cases

GPs are widely used in various real-world applications due to their flexibility and ability to quantify uncertainty. Some of the key use cases include:

Time-series forecasting: GPs excel at forecasting future data points while producing precise uncertainty measurements. Financial markets, climate modeling, and demand forecasting use GPs as their standard tools because they deliver accurate predictions with confidence intervals.

Spatial data analysis: GPs are robust spatial data analysis tools. They extract spatial relationships from environmental monitoring data, land-use information, and meteorological observations. Geostatistics applications mainly use these models for kriging operations.

Hyperparameter optimization: GPs are vital in Bayesian optimization, optimizing machine learning parameters, deep learning structures, and experimental designs involving costly function evaluations.

Anomaly detection: GPs excel at detecting anomalies, which proves essential for detecting fraud and maintaining predictive equipment systems and medical diagnostics.

Reinforcement learning: GPs support decision-making systems through reinforcement learning, especially when uncertainty modeling remains essential in robotics, autonomous systems, and gameplay.

Tools and Libraries

Specialized tools are necessary for efficient GP implementation because they simplify model training, inference, and optimization tasks. Different libraries offer comprehensive frameworks enabling practitioners to use GPs for practical applications. Some of the tools include:

GPy: A user-friendly library for performing Gaussian Process modeling. It provides a simple interface for kernel definition, model fitting, and prediction tasks.

GPflow: A large-scale Gaussian Process library built on TensorFlow. It supports modern optimization approaches, including variational inference, making it ideal for scalable applications.

Scikit-learn: It offers straightforward GP regression and classification implementation, enabling novices and practitioners to work with it.

GPyTorch: A Gaussian Process library built on top of PyTorch enables scalable inference and supports deep kernel learning integration.

Stan: A probabilistic programming language that implements GP modeling through Bayesian inference applications.

Emukit: A toolkit for Bayesian optimization and probabilistic modeling tools that help implement GPs for decision-making needs.

FAQs

What are Gaussian Processes used for?

GPs are used for regression, classification, and Bayesian optimization, providing probabilistic predictions with uncertainty estimations. They are used in ML, geo-statics, and time-series forecasting.

How do Gaussian Processes handle uncertainty?

GPs manage uncertainty by defining probability distributions over all functions that match observed data points. This enables predictions with calculated means and quantified confidence intervals.

What is a kernel in the context of Gaussian Processes?

GPs use kernels as covariance functions to identify data point similarities by defining process covariance structures. The chosen kernel influences the model's smoothness.

Can Gaussian Processes be used for large datasets?

Traditional GPs face computational challenges with large datasets due to their cubic time complexity, but scalability has improved with sparse approximations like sparse GPs.

How do Gaussian Processes compare to neural networks?

GPs deliver predictions that include precise uncertainty measurements. Neural networks provide deterministic results but need extensive datasets to match performance outcomes.

Related Resources

- What Is the Gaussian Process?

- How It Works

- Comparison with Other Machine Learning Models

- Benefits and Challenges

- Use Cases

- Tools and Libraries

- FAQs

- Related Resources

Content

Start Free, Scale Easily

Try the fully-managed vector database built for your GenAI applications.

Try Zilliz Cloud for Free