Zilliz Cloud Pipelines February Release - 3rd Party Embedding Models and Usability Improvements!

We're thrilled to unveil a significant update to Zilliz Cloud Pipelines, packed with exciting features to empower your RAG applications with flexible and high-quality retrieval and zero DevOps overhead.

Our February release focused on two key areas: embedding models and ease of use.

New Embedding Models from OpenAI and Voyage AI

With the addition of third-party embedding models from OpenAI and Voyage AI, Zilliz Cloud Pipelines now offers a choice of six embedding models (five English models and one Chinese model). Whether you prioritize storage cost, retrieval quality, or latency, there is a suitable choice for you:

- Balancing quality and overhead: openai/text-embedding-3-small is a highly efficient model that delivers competitive quality while producing lower-dimension vectors. It has the smallest inference and storage overhead.
- Looking for the best performance for a specific task: voyageai/voyage-code-2 has outstanding retrieval quality on code-intensive text, including source code and technical documentation.
- Sensitive to network latency in ingestion and query serving: Choose zilliz/bge-base-en-v1.5, an excellent general-purpose model co-located with the vector database in the same network environment, achieving the best end-to-end ingestion and query latency.

| Embedding Model | Description |
|---|---|
| zilliz/bge-base-en-v1.5 | Released by BAAI, this state-of-the-art open-source model is hosted on Zilliz Cloud and co-located with vector databases, providing good quality and the best network latency. This is the default embedding model when language is ENGLISH. |
| voyageai/voyage-2 | Hosted by Voyage AI. This general-purpose model excels at retrieving technical documentation containing descriptive text and code. Its lighter version, voyage-lite-02-instruct, ranks at the top of the MTEB leaderboard. This model is only available when language is ENGLISH. |
| voyageai/voyage-code-2 | Hosted by Voyage AI. This model is optimized for programming code, providing outstanding quality for retrieving code blocks. This model is only available when language is ENGLISH. |
| openai/text-embedding-3-small | Hosted by OpenAI. This highly efficient embedding model outperforms its predecessor, text-embedding-ada-002, and balances inference cost and quality. This model is only available when language is ENGLISH. |
| openai/text-embedding-3-large | Hosted by OpenAI. This is OpenAI's best-performing model. Compared to text-embedding-ada-002, the MTEB score has increased from 61.0% to 64.6%. This model is only available when language is ENGLISH. |
| zilliz/bge-base-zh-v1.5 | Released by BAAI, this state-of-the-art open-source model is hosted on Zilliz Cloud and co-located with vector databases, providing good quality and the best network latency. This is the default embedding model when language is CHINESE. |
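To make the selection guidance above concrete, here is a minimal Python sketch that encodes it as a lookup. The model names, language defaults, and English-only restriction come from the table; the helper `pick_embedding_model` and the priority labels (`"overhead"`, `"code"`, `"latency"`) are hypothetical names made up for this illustration and are not part of the Zilliz Cloud Pipelines API.

```python
# Facts below are taken from the model table in this post.
# The helper and the priority labels are hypothetical, for illustration only.

# Default embedding model for each supported language.
DEFAULT_MODEL = {
    "ENGLISH": "zilliz/bge-base-en-v1.5",
    "CHINESE": "zilliz/bge-base-zh-v1.5",
}

# Suggested English model for each priority described in the post.
MODEL_BY_PRIORITY = {
    "overhead": "openai/text-embedding-3-small",  # smallest inference and storage overhead
    "code": "voyageai/voyage-code-2",             # code-intensive text
    "latency": "zilliz/bge-base-en-v1.5",         # co-located with the vector database
}


def pick_embedding_model(language="ENGLISH", priority=None):
    """Return an embedding model name for the given language and priority."""
    language = language.upper()
    if language not in DEFAULT_MODEL:
        raise ValueError(f"unsupported language: {language}")
    # Only one Chinese model is listed, so the priority cannot change the result.
    if language == "CHINESE" or priority is None:
        return DEFAULT_MODEL[language]
    if priority not in MODEL_BY_PRIORITY:
        raise ValueError(f"unknown priority: {priority}")
    return MODEL_BY_PRIORITY[priority]


if __name__ == "__main__":
    print(pick_embedding_model("ENGLISH", "code"))
    print(pick_embedding_model("CHINESE"))
```

The returned string would then be supplied as the embedding model wherever the pipeline is configured; how that value is passed is not covered in this post.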

Enhanced Usability for a Better Onboarding Experience

As a one-stop solution for retrieval, we aim to offer the best developer experience in Zilliz Cloud Pipelines in both the development and onboarding phases. This release adds the new "Run Pipeline" page and a local file upload feature. We also added support for all Dedicated vector database clusters in the GCP us-west-2 region, so the pipelines feature is no longer restricted to the Serverless free plan.

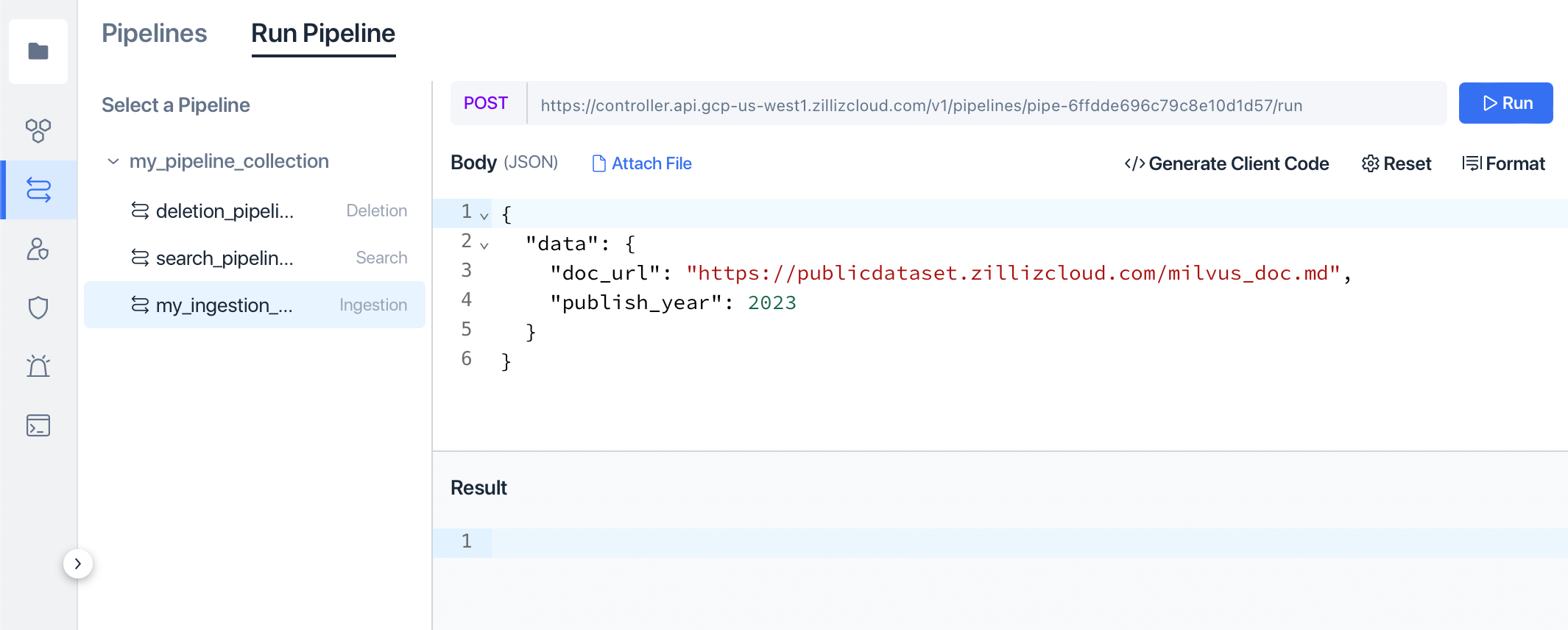

New UX for Running Pipeline

We understand the urge to give a newly created pipeline a spin with just a few clicks. Testing pipelines is now easier with the newly designed "Run Pipeline" page. All your pipelines are organized by collection in the left panel. Select a pipeline there and give it a trial run to ingest a document or make a search query. The pipeline type is indicated in the UI so you can quickly distinguish between them.

Local File Upload

Though ingesting documents from object stores is convenient and secure in production, many developers prefer uploading local files during development. In this release, we support local file uploads in addition to S3 and GCS. You can directly ingest a local file by clicking the "Attach File" button in the "Run Pipeline" UI.

Support Dedicated Vector Database Clusters

Since the launch of Zilliz Cloud Pipelines, we have received user feedback to extend the service to more types of vector database clusters. In this release, we are excited to remove the restriction. Zilliz Cloud Pipelines can now work with any kind of vector database cluster in the GCP us-west-2 region, including Serverless and Dedicated. We will expand the service to more clouds and regions in the upcoming releases, including AWS, Azure, and Alicloud.

With dedicated cluster support, you can enjoy enhanced performance and reliability of vector search while benefiting from the convenient one-stop retrieval service — Zilliz Cloud Pipelines.

Get Started with Zilliz Cloud Pipelines Today

That wraps it up for our February release of Zilliz Cloud Pipelines: more embedding models to choose from, a better user experience, local file upload, and dedicated cluster support.

Ready to experience the power of Zilliz Cloud Pipelines for yourself? Sign up for free today and try a streamlined retrieval solution with quality, flexibility, and no DevOps overhead. You can also use Zilliz Cloud Pipelines with popular RAG frameworks like LlamaIndex. Join the many developers who use Zilliz Cloud Pipelines to free their innovation from infrastructure maintenance and DevOps.
